UniApply RAG: Using RAG for more precise and human-friendly document search

Table of contents

About the project

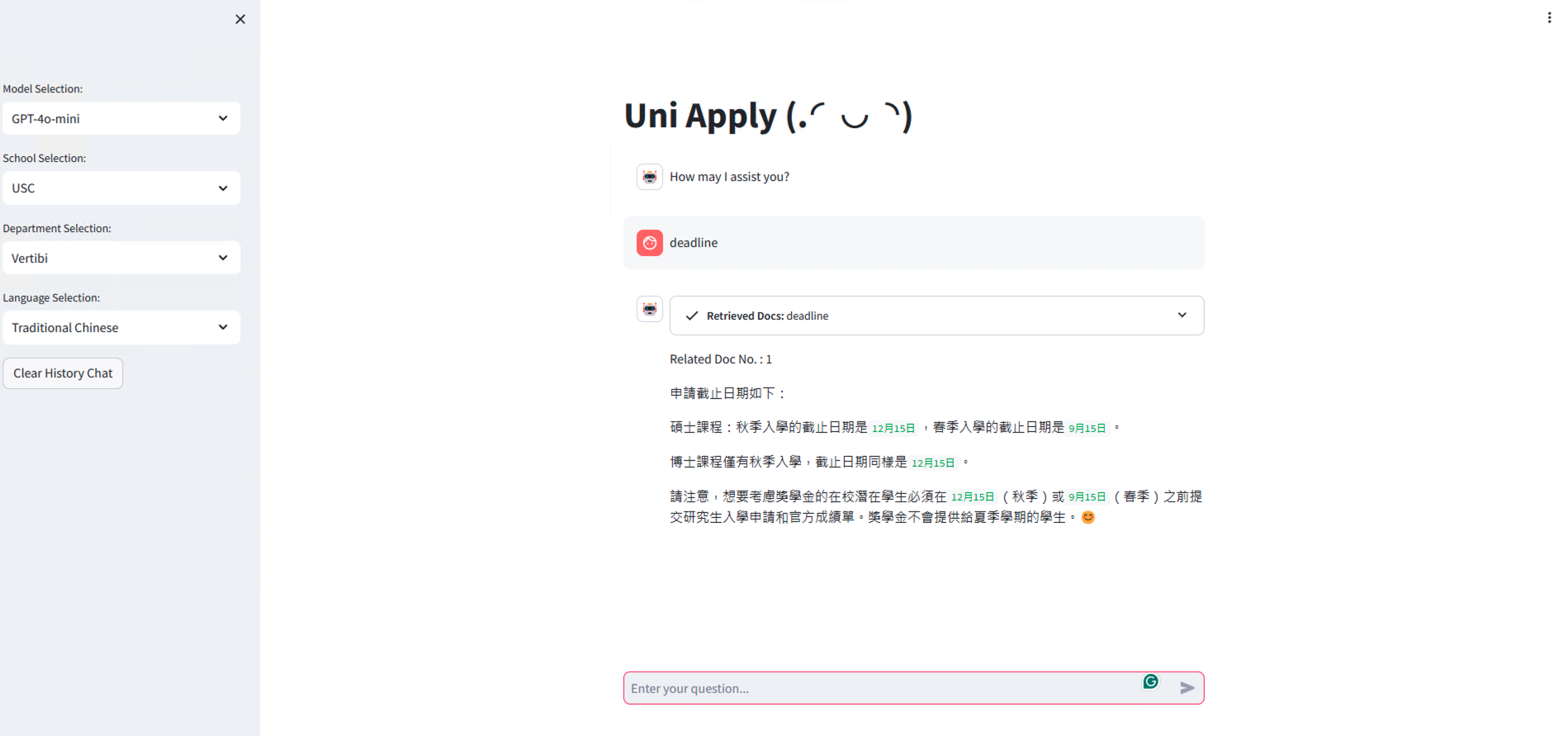

This is a university/college application FAQ chatbot built with RAG.

It combines a Retrieval-Augmented Generation (RAG) pipeline implemented with LangChain and a chatbot interface built with Streamlit, using application FAQs collected from universities around the world.

For retrieval, we prepare the data in advance instead of retrieving it in real time. Since most application information does not vary much throughout the year, we only need to update it yearly. Skipping real-time search therefore lowers both computation and development costs.

The vector database in this project is built with FAISS, a library for efficient similarity search and clustering of dense vectors. The embedding and generation models are provided by OpenAI; we use GPT-4o-mini and GPT-3.5 for comparison.
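To illustrate the "prepare in advance, search later" idea, here is a minimal sketch in plain Python. It is not the project's code: the bag-of-words `embed` function stands in for an OpenAI embedding model, the sorted list stands in for a FAISS index, and the three FAQ entries are made-up samples.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(documents):
    """Offline step: embed every FAQ entry once and keep the vectors."""
    return [(doc, embed(doc)) for doc in documents]

def retrieve(index, question, top_n=2):
    """Query step: return the top_n entries most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_n]]

# Hypothetical sample FAQ entries, for illustration only.
faq = [
    "The application deadline for fall admission is January 15.",
    "International applicants must submit English test scores.",
    "The application fee can be paid online by credit card.",
]
index = build_index(faq)  # built once, reused for every question
results = retrieve(index, "When is the deadline for fall admission?", top_n=1)
print(results)
```

Because the index is built once and reused, answering a question costs only one embedding of the question plus a similarity search; the documents are re-embedded only when the FAQ data is refreshed.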

The Implementation of RAG

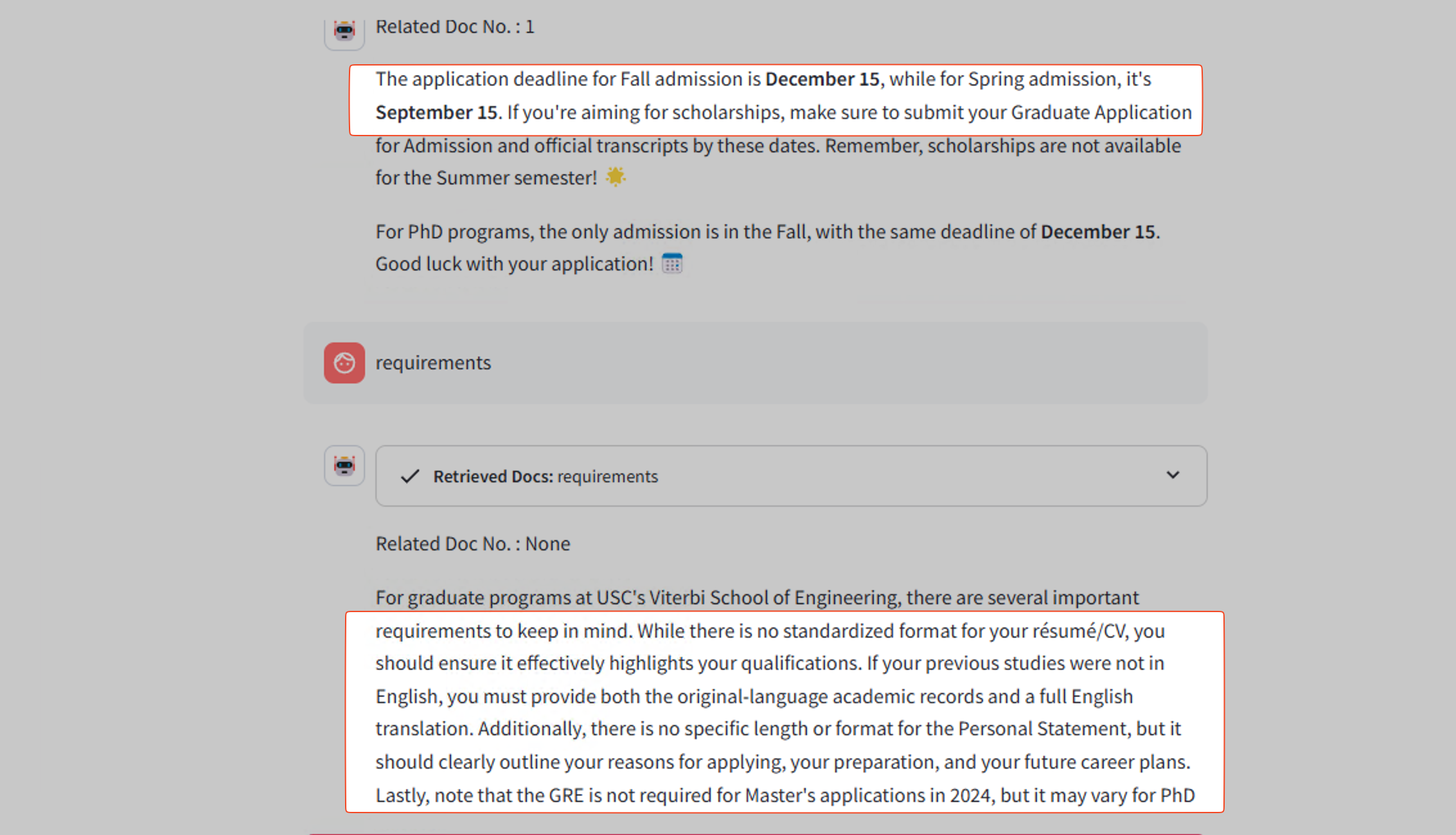

The results show that, with RAG, the model can provide accurate information along with a link for reference. This mechanism also lets users ask free-form questions instead of reading through the FAQ one question at a time, or using Ctrl-F and guessing which answer is correct. Non-native speakers and confused users can therefore find the right answer easily.
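One way an answer can carry a reference link is to pass each retrieved passage to the model together with its source URL. The sketch below shows this under stated assumptions: the prompt wording, the `build_prompt` helper, and the example URL are all hypothetical and not taken from the project.

```python
def build_prompt(question, passages):
    """Assemble a prompt from (text, source_url) pairs returned by a retriever."""
    context_lines = [
        f"[{i}] {text} (source: {url})"
        for i, (text, url) in enumerate(passages, start=1)
    ]
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "Cite the source link you relied on.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical retrieved passage and URL, for illustration only.
passages = [("Applications open on September 1.", "https://example.edu/faq#dates")]
prompt = build_prompt("When do applications open?", passages)
print(prompt)
```

The resulting string would be sent to the generation model; keeping the URL next to each passage gives the model something concrete to cite.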

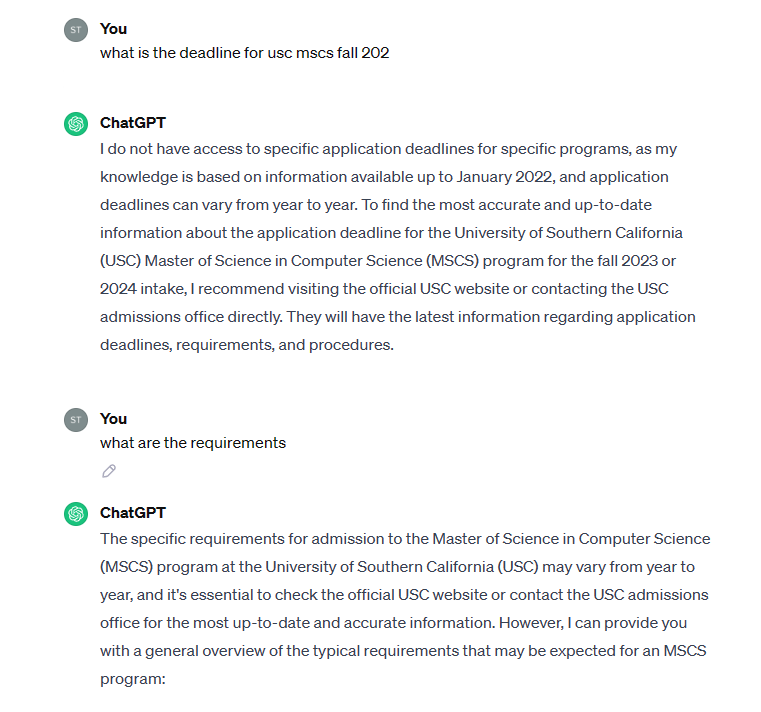

Comparison of GPT-3.5 and GPT-3.5 with RAG

Getting Started

To deploy the application, go to the deploy folder.

- Modify the `.env` file

  - OPENAI_API_KEY

  - HOST_PORT

  (Retrieval Related Settings)

  > [!NOTE]
  > You can change the settings below according to the documents and language you have prepared.

  - EMBEDDING_MODEL_NAME

  - RETRIEVER_RETURN_TOP_N

- Build image

bash components/Streamlit_ver/script/build-docker-image.sh

- Start the application

docker-compose up -d
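For reference, the `.env` settings listed above could be read on the application side roughly as follows. This is a sketch, not the project's actual loader: the `Settings` class, the `load_settings` helper, and every default value are assumptions (8501 is Streamlit's default port; the embedding model name is only a placeholder default).

```python
import os
from dataclasses import dataclass

@dataclass
class Settings:
    openai_api_key: str
    host_port: int
    embedding_model_name: str
    retriever_return_top_n: int

def load_settings(env=None):
    """Read the settings from a mapping (defaults to the process environment)."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "")
    if not api_key:
        raise ValueError("OPENAI_API_KEY is not set")
    return Settings(
        openai_api_key=api_key,
        # Defaults below are assumed values, not the project's.
        host_port=int(env.get("HOST_PORT", "8501")),
        embedding_model_name=env.get("EMBEDDING_MODEL_NAME", "text-embedding-3-small"),
        retriever_return_top_n=int(env.get("RETRIEVER_RETURN_TOP_N", "3")),
    )

# Example with a placeholder key; a real key must never be hard-coded.
example = load_settings({
    "OPENAI_API_KEY": "sk-placeholder",
    "HOST_PORT": "8080",
    "RETRIEVER_RETURN_TOP_N": "5",
})
print(example.host_port, example.retriever_return_top_n)
```

Failing fast on a missing `OPENAI_API_KEY` surfaces a misconfigured `.env` file at startup rather than on the first user question.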