Abstract

The Safety Jackets and Helmets Detection System is an AI-based solution for monitoring safety compliance in industrial and construction environments. Using the YOLOv11 object detection model, the system classifies individuals into four categories: wearing only a helmet, wearing only a safety jacket, wearing both, or wearing neither. The project focuses on careful annotation, training a custom YOLOv11 model on the labeled data, and evaluating real-world applicability. The resulting system performs detection and classification in real time, supporting the automation of workplace safety monitoring.

Introduction

Ensuring safety compliance is crucial in industrial, construction, and hazardous environments, where wearing helmets and safety jackets is mandatory. Manual monitoring of safety gear compliance is labor-intensive and prone to errors. To address this challenge, the Safety Jackets and Helmets Detection System leverages AI-based object detection to automate the process.

Requirements:

The following tools and technologies were used for the successful implementation of this project:

* Ubuntu OS: Chosen for its reliability in handling AI workflows.

* Docker: Containerizes the development environment for consistency.

* Kubeflow: A platform that supports the development and deployment of machine learning models.

* YOLOv11: A state-of-the-art object detection model used for real-time detection.

* Roboflow: Facilitates dataset annotation and pre-processing.

* PyTorch: The deep learning framework that YOLOv11 runs on.

* Python: The programming language used for scripting and training tasks.

* Ultralytics: A library for implementing YOLO models.

This project aims to improve safety monitoring by providing an automated system that detects, in real time, whether an individual is wearing the required protective equipment (helmet and safety jacket).

Methodology

The methodology for this project involves several crucial steps to ensure effective detection and classification. These steps are outlined below:

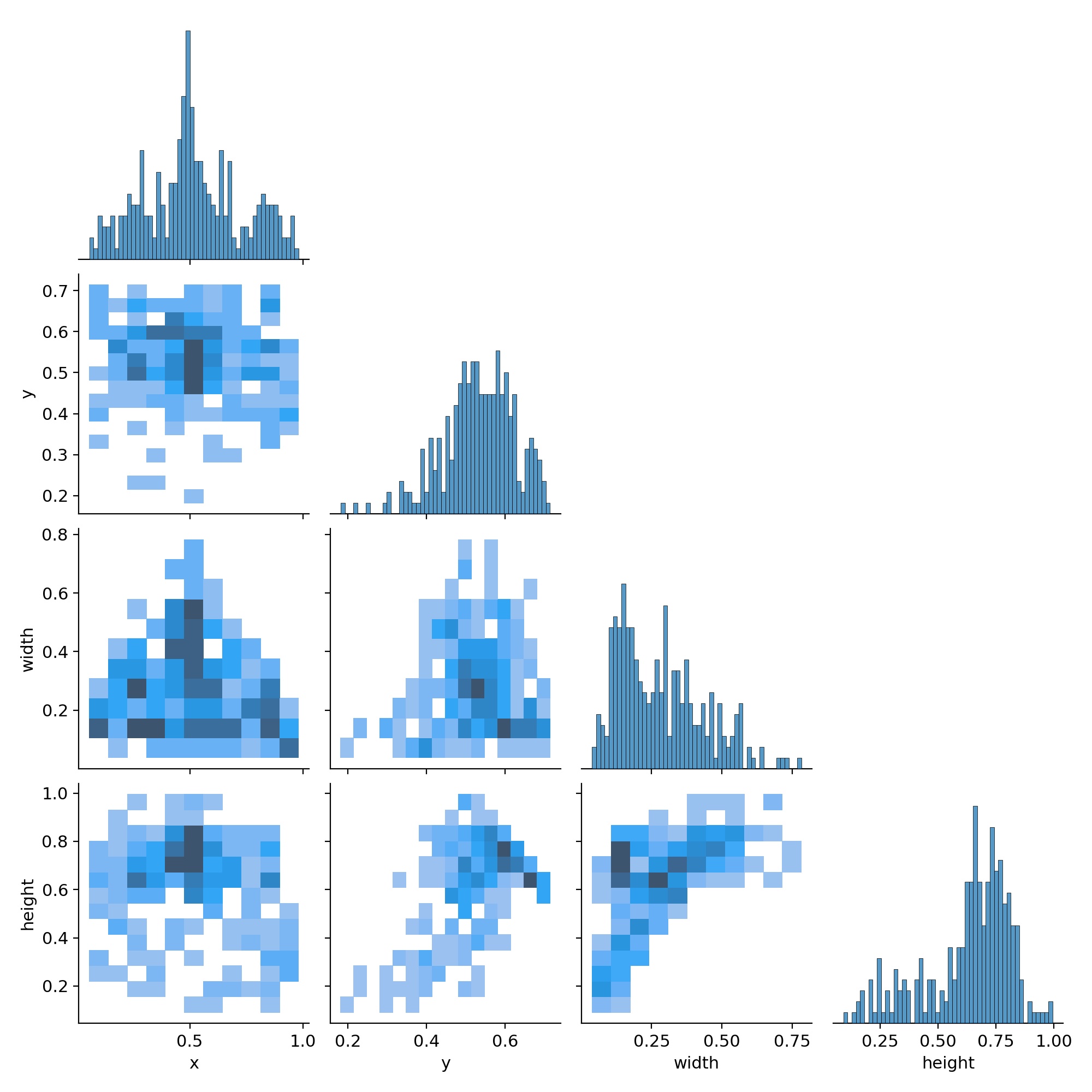

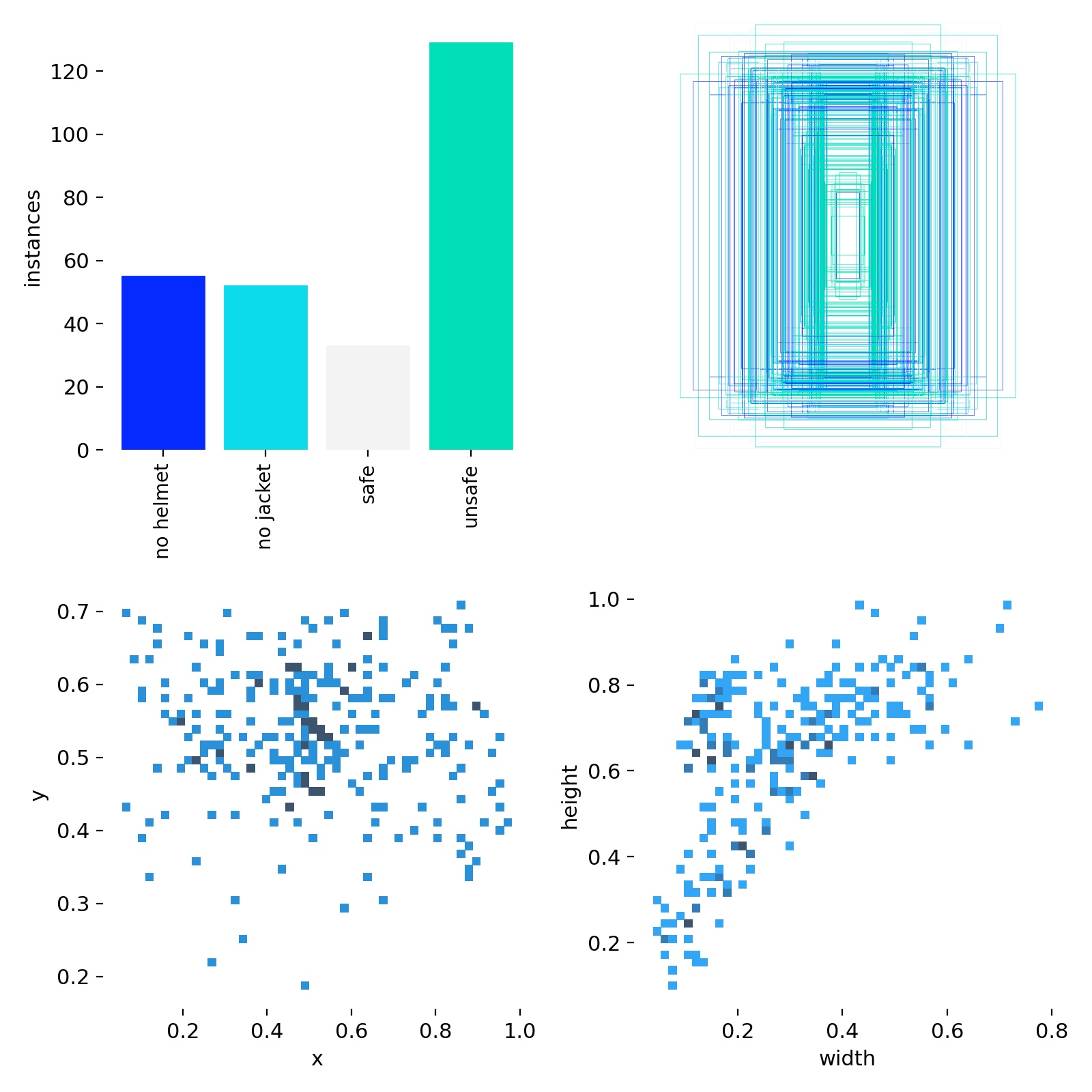

** Dataset Annotation: Annotation is a critical process for training an object detection model. To ensure high accuracy, each image or video frame is annotated using Roboflow. The categories for annotation are:

- Helmet Only: Individuals wearing only helmets.

- Jacket Only: Individuals wearing only safety jackets.

- Both Helmet and Jacket: Individuals wearing both safety items.

- No Helmet or Jacket: Individuals not wearing either safety item.

Annotating the dataset accurately is essential for training the YOLOv11 model to achieve optimal performance. The dataset is then exported in the YOLOv11-compatible format for training.
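
To make the export format concrete: a YOLO-format label file stores one object per line as a class index followed by the box center and size, all normalized by the image dimensions. The sketch below converts a pixel-space bounding box into such a line. The function name and the class names (and their order) are hypothetical; the real class mapping comes from the Roboflow export.

```python
# Hypothetical class order; the actual indices are defined by the Roboflow export.
CLASS_NAMES = [
    "helmet_only",
    "jacket_only",
    "helmet_and_jacket",
    "no_helmet_or_jacket",
]


def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # YOLO labels use the box center and size, normalized to the range [0, 1].
    cx = (x_min + x_max) / 2 / img_w
    cy = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A person wearing both items, annotated in a 640x640 image.
line = to_yolo_line(CLASS_NAMES.index("helmet_and_jacket"), (100, 50, 300, 450), 640, 640)
print(line)  # 2 0.312500 0.390625 0.312500 0.625000
```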

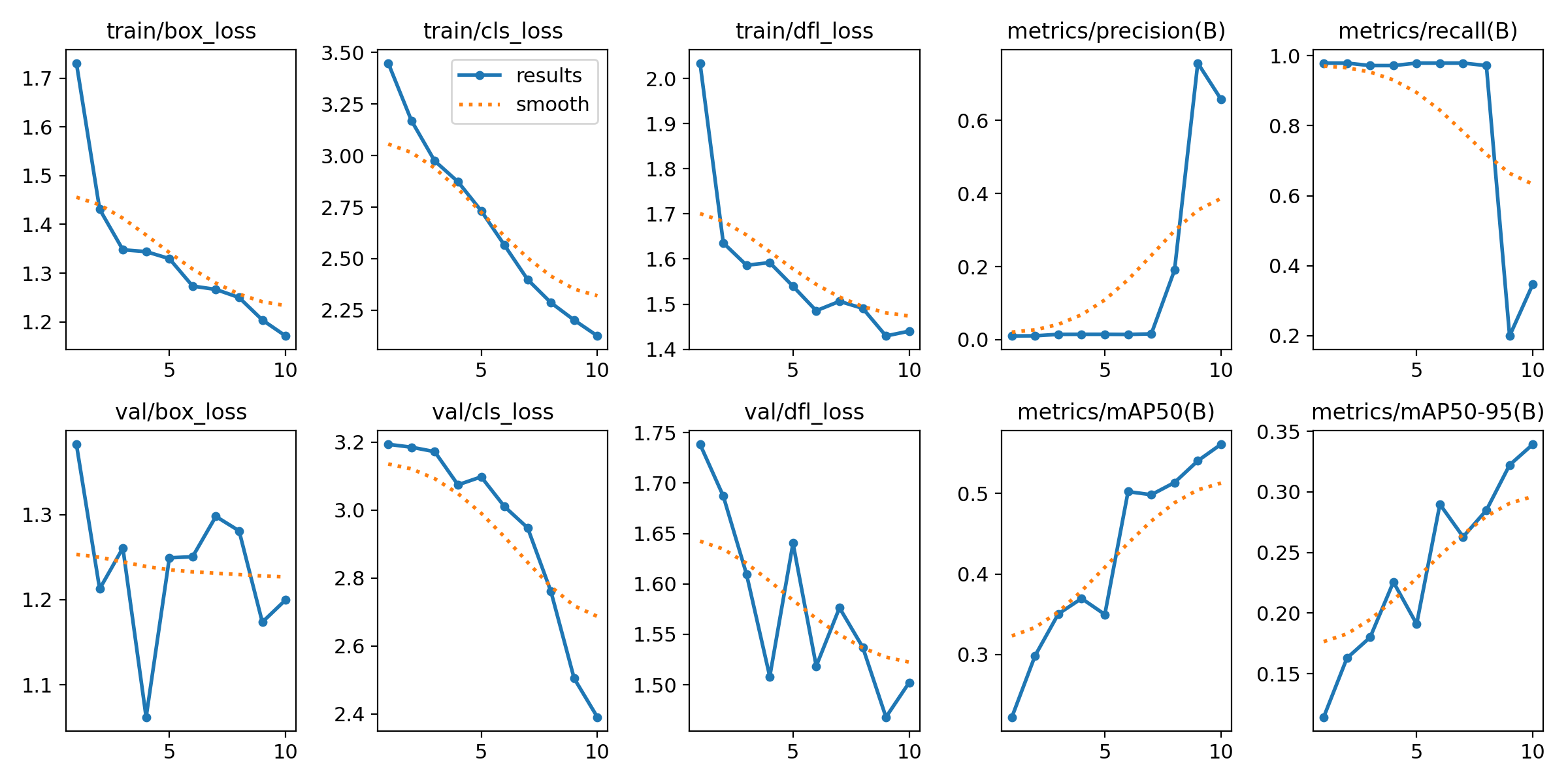

** Model Training: The YOLOv11 model is employed for object detection, leveraging its real-time detection capabilities. The training process is performed using PyTorch and Ultralytics, two powerful libraries for building and training deep learning models. The training involves using the labeled dataset to enable the model to distinguish between the four categories of safety gear compliance.
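
For readers who prefer a script over the command line, the same training run can be started from the Ultralytics Python API. This is a minimal sketch rather than the project's actual script: the function name is hypothetical, and the default values simply mirror the training command listed later in this write-up (`yolo11n.pt`, `custom_dataset/custom_data.yaml`, 10 epochs, 640-pixel images).

```python
def train_safety_gear_model(
    data_yaml="custom_dataset/custom_data.yaml",
    weights="yolo11n.pt",
    epochs=10,
    imgsz=640,
):
    """Fine-tune a pretrained YOLO11 checkpoint on the annotated dataset."""
    # Imported lazily so this module can be loaded without Ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # start from the pretrained nano checkpoint
    # Ultralytics writes checkpoints (including best.pt) under runs/detect/.
    return model.train(data=data_yaml, epochs=epochs, imgsz=imgsz)


# Usage (requires the ultralytics package and the exported dataset):
# train_safety_gear_model()
```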


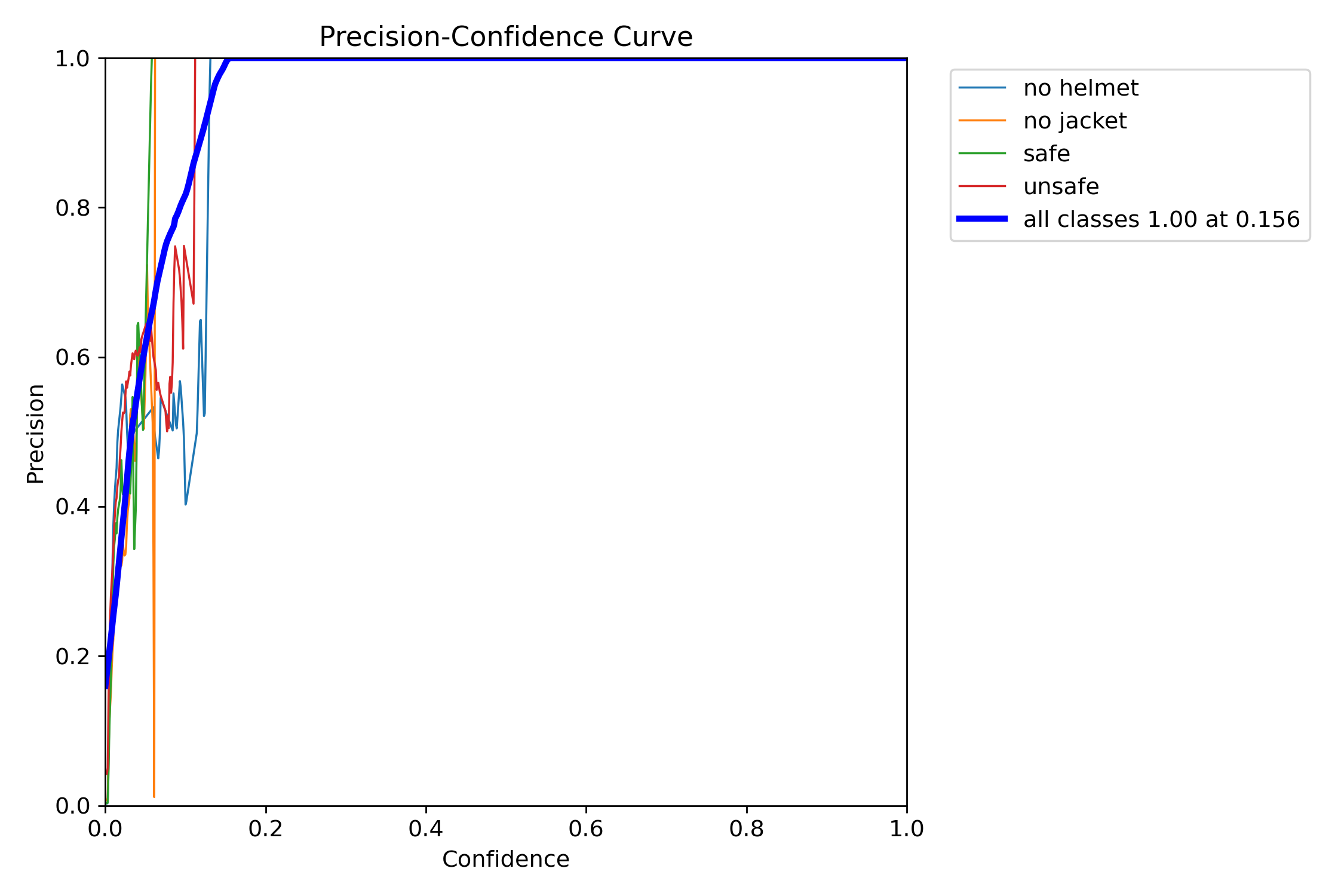

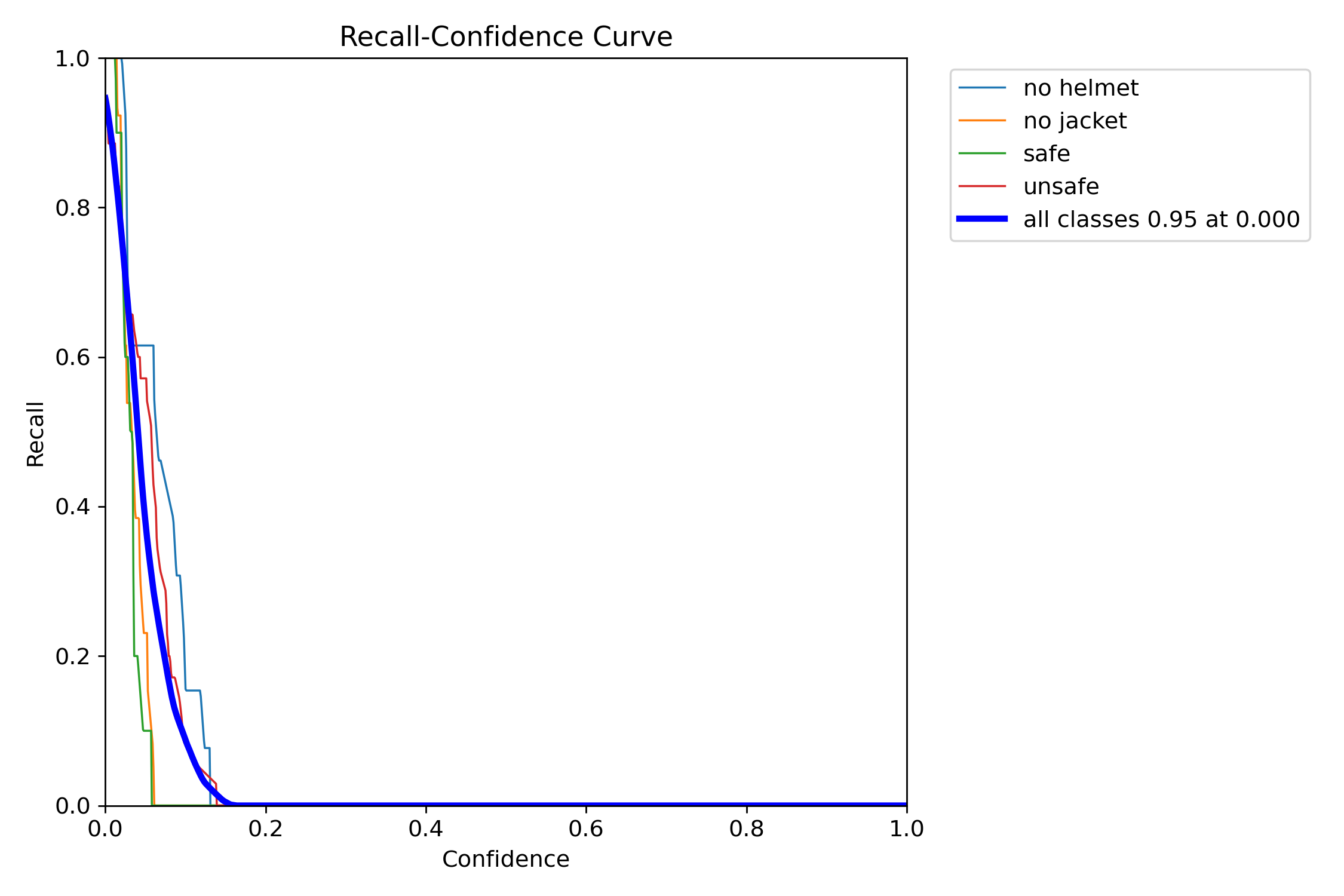

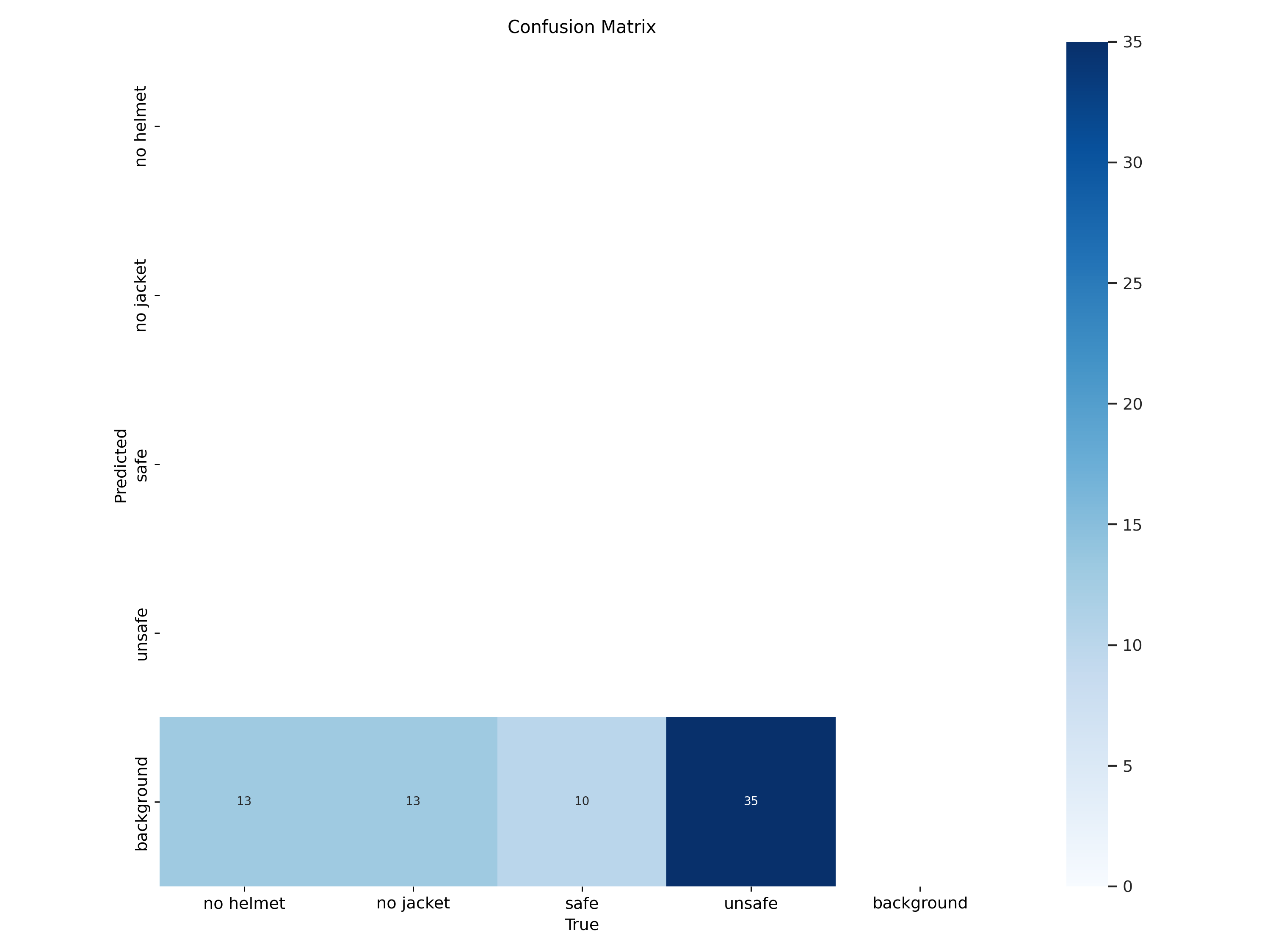

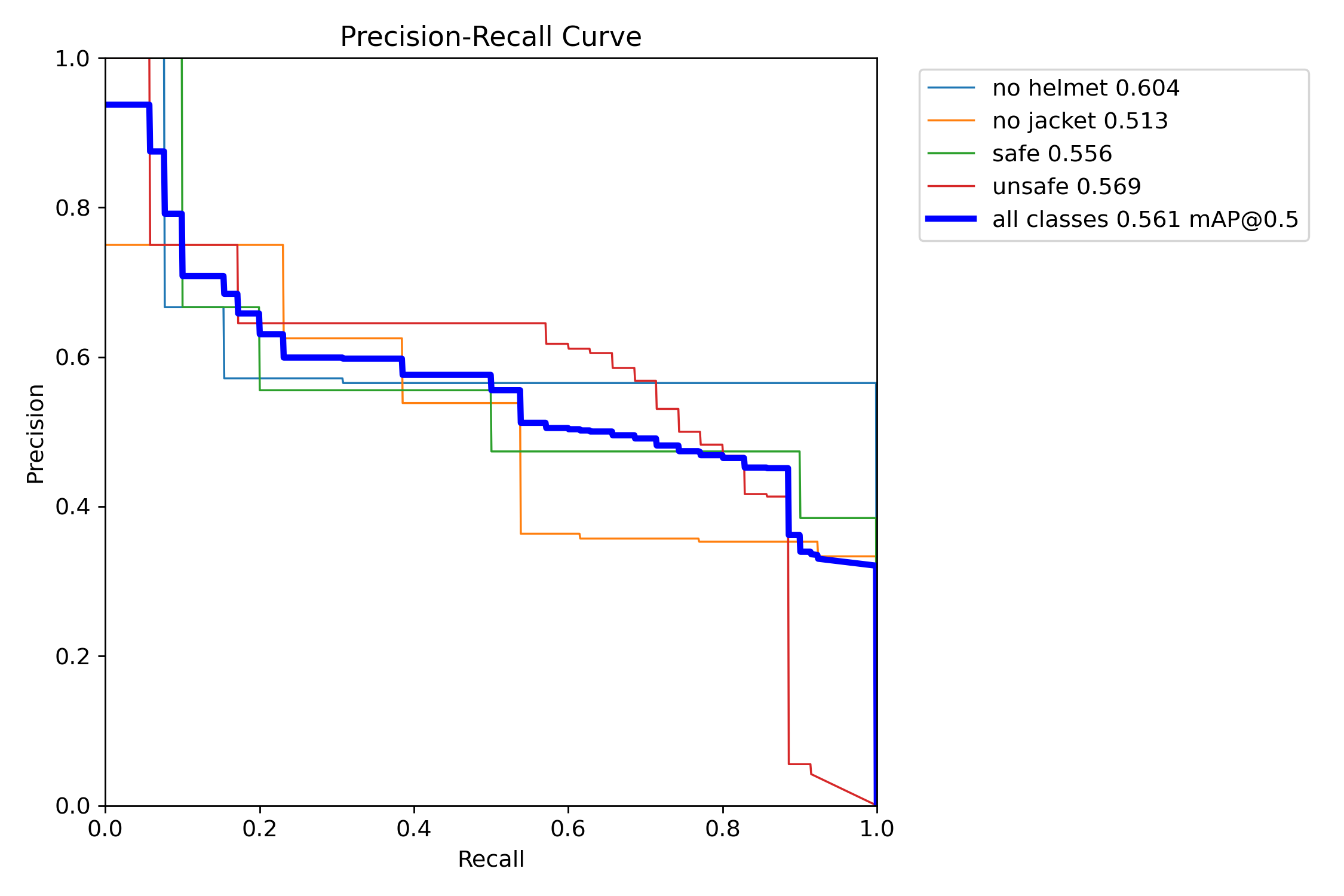

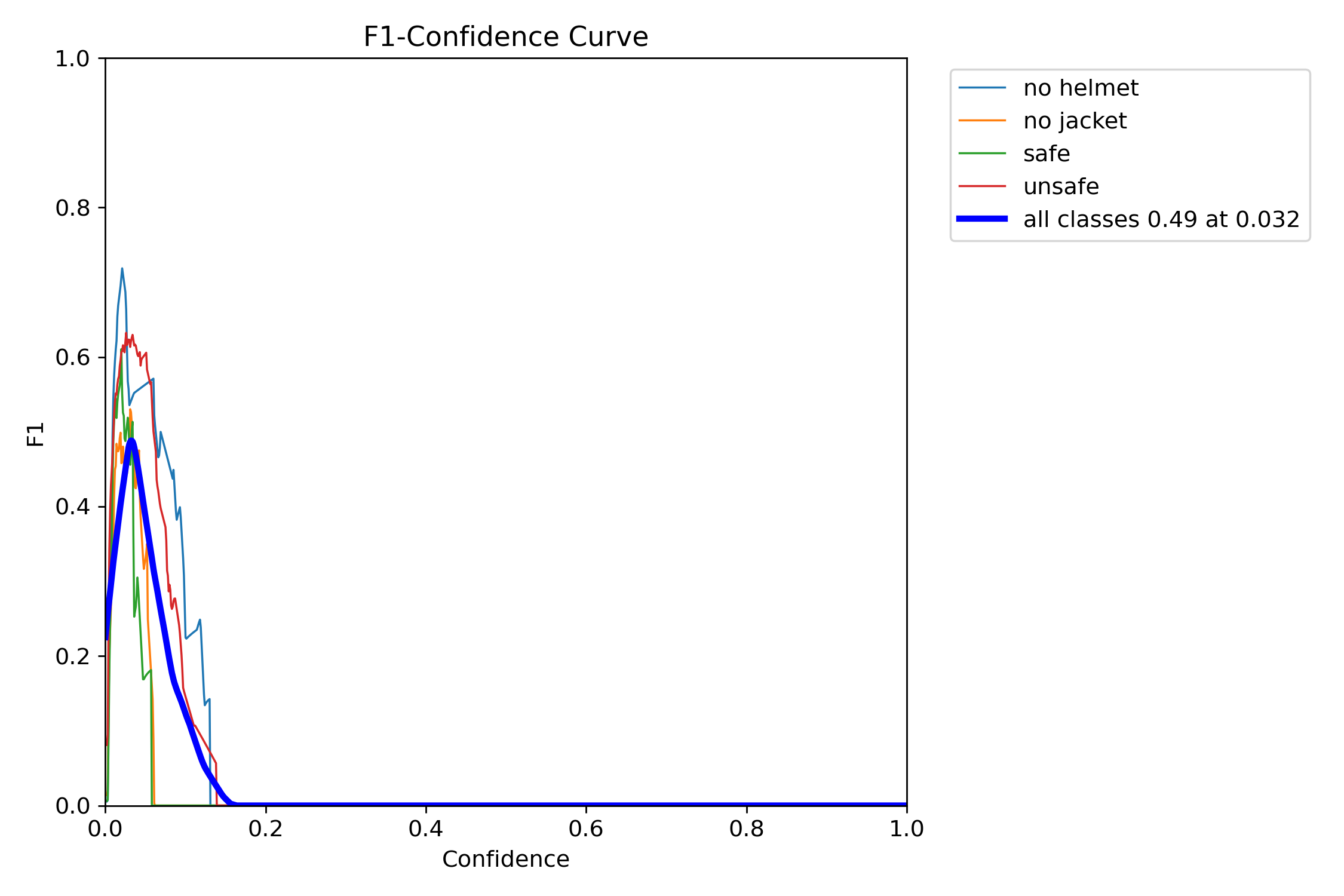

** Inference and Evaluation: Inference is run on test images and videos to check the trained model's detection capabilities. If inference fails because of a dependency problem, such as an incompatible NumPy version, the issue is resolved by installing compatible versions of the affected packages. The model is also run on a CPU-only device to assess its performance without a GPU.
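
A CPU-only inference pass might look like the following sketch, which uses the Ultralytics Python API with `device="cpu"` and yields the predicted class names frame by frame. The function name is hypothetical, the weights path assumes `best.pt` has been copied into the working directory, and the confidence threshold of 0.25 is an assumed value, not one reported for this project.

```python
def predict_on_cpu(source, weights="best.pt", conf=0.25):
    """Yield the list of predicted class names for each image or video frame."""
    # Imported lazily so this module can be loaded without Ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    # stream=True returns a generator, so long videos are not held in memory.
    for result in model.predict(source=source, device="cpu", conf=conf, stream=True):
        class_ids = result.boxes.cls.tolist()
        yield [result.names[int(class_id)] for class_id in class_ids]


# Usage (requires the ultralytics package, trained weights, and a test video):
# for frame_labels in predict_on_cpu("vid.mp4"):
#     print(frame_labels)
```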

** Kubeflow Platform for Model Deployment: Kubeflow is used to streamline the development and deployment process. The notebook server in Kubeflow is set up with sufficient resources (CPU, RAM, GPU) to train the YOLOv11 model efficiently.


After training the model, the results are evaluated by testing on video files and tracking the detection performance.

The final model is saved as a best.pt file, which is then used for inference on new, unseen data.

** Command Execution for Model Training and Evaluation: Key commands executed during the workflow:

- Dataset Preparation: Exporting annotated data from Roboflow:

bash:

mkdir custom_dataset

cd custom_dataset/

curl -L "<download_url>" > roboflow.zip

unzip roboflow.zip

rm roboflow.zip

cd ..

- Model Training:

yolo task=detect mode=train model=yolo11n.pt data="custom_dataset/custom_data.yaml" epochs=10 imgsz=640

- Inference:

yolo task=detect mode=predict model=best.pt source="vid.mp4"

Experiments

The system was tested under various conditions to evaluate its robustness and accuracy. Several datasets were used, including images and video frames captured from industrial environments. The experiments were conducted using different configurations, including varying the number of epochs, image sizes, and the type of hardware used for training (CPU vs. GPU).


** Testing the Model: The model was subjected to inference using both images and video files. For example, running:

yolo task=detect mode=predict model=yolo11n.pt source="testimg.jpg"

allowed the evaluation of the model's detection capability on single images, while testing with video files, like:

yolo task=detect mode=predict model=best.pt source="vid.mp4"

provided insight into how well the model handles real-time processing.

** Dataset Preparation: The annotated dataset was resized to 640x640 pixels to meet the input requirements of the YOLOv11 model. The dataset was then structured into training, validation, and testing sets, and the corresponding configuration file was updated.
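
The split and the configuration file can be sketched as follows. This is an illustration under stated assumptions, not the project's actual preparation code: the 70/20/10 split ratio, the folder names, the class names, and both function names are hypothetical. The YAML keys (`path`, `train`, `val`, `test`, `names`) follow the Ultralytics dataset configuration format.

```python
import random


def split_dataset(items, train_pct=70, val_pct=20, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    items = sorted(items)
    random.Random(seed).shuffle(items)
    # Integer arithmetic avoids floating-point rounding surprises.
    n_train = len(items) * train_pct // 100
    n_val = len(items) * val_pct // 100
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test


def build_data_yaml(root, class_names):
    """Return the text of an Ultralytics-style dataset configuration file."""
    lines = [
        f"path: {root}",
        "train: train/images",  # assumed folder layout
        "val: valid/images",
        "test: test/images",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


images = [f"img_{i:03d}.jpg" for i in range(10)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 7 2 1

# Hypothetical class names; the real ones come from the Roboflow export.
print(build_data_yaml("custom_dataset", ["helmet_only", "jacket_only",
                                         "helmet_and_jacket", "no_helmet_or_jacket"]))
```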

Results

After training the model for 10 epochs, the YOLOv11 model achieved strong detection performance on both images and video files. The evaluation results indicated that the model could accurately classify individuals into the four categories of safety gear compliance.

The best-performing checkpoint was saved as runs/detect/train/weights/best.pt and was used to perform inference on test data. The inference results, stored in the runs/detect/predict directory, were visually verified to ensure the model was functioning correctly.
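
Visual verification can be complemented with a simple tally of predicted classes. The sketch below assumes inference was run with the Ultralytics `save_txt=True` option, which writes one label file per image or frame into a `labels` subfolder of the prediction directory, with the class index as the first field of each line. The function name and class names are hypothetical, and this tally was not part of the original evaluation.

```python
from collections import Counter
from pathlib import Path


def tally_predictions(labels_dir, class_names):
    """Count predicted objects per class across all label files in a folder."""
    counts = Counter()
    for label_file in sorted(Path(labels_dir).glob("*.txt")):
        for line in label_file.read_text().splitlines():
            if not line.strip():
                continue  # skip blank lines
            class_id = int(line.split()[0])  # first field is the class index
            counts[class_names[class_id]] += 1
    return counts


# Usage (assumes a prior run with save_txt=True; class order is hypothetical):
# names = ["helmet_only", "jacket_only", "helmet_and_jacket", "no_helmet_or_jacket"]
# print(tally_predictions("runs/detect/predict/labels", names))
```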

Conclusion

The Safety Jackets and Helmets Detection System successfully demonstrated the capabilities of AI in automating safety compliance monitoring. By utilizing the YOLOv11 model, the system achieved high accuracy in detecting individuals wearing helmets, safety jackets, both, or neither. This system can be easily deployed in industrial and construction environments to monitor safety compliance in real-time.

Learnings:

This project provided deep insights into several key areas of machine learning and AI:

- A comprehensive understanding of the object detection pipeline and how it can be adapted to real-world use cases.

- Effective use of Kubeflow for managing AI workflows.

- The importance of precise dataset annotation and its impact on model performance.

- Hands-on experience with YOLOv11, including training, inference, and evaluation on custom datasets.

This system not only contributes to workplace safety by automating compliance monitoring but also demonstrates the potential of AI in practical, high-impact scenarios.

The complete codebase for the Safety Jackets and Helmets Detection System is available on GitHub. Visit the GitHub repository to explore the source code, annotated datasets, and implementation details.

Thank you for taking the time to go through my project! I am truly grateful for the opportunity to participate in the global Computer Vision Projects Expo 2024 and share my work with such an inspiring community.