Abstract

This project is implemented in Python, using OpenCV for video processing and TensorFlow for machine learning, to recognize traffic participants and traffic situations. It combines vehicle detection with YOLO and traffic condition classification with a CNN. The system predicts three classes of traffic conditions in real time: Heavy Traffic, Medium Traffic, and No Traffic.

Introduction

Traffic congestion and road safety are major concerns in modern urban environments, impacting not only commuter efficiency but also economic productivity and environmental sustainability. Addressing these challenges requires innovative solutions capable of real-time analysis and decision-making.

The human visual system is exceptionally adept at understanding complex visual scenes, identifying objects, and determining their spatial relationships. Mimicking this capability in machines is the cornerstone of computer vision, a field that has seen remarkable advancements with the advent of deep learning.

Object detection is a fundamental task in computer vision, involving three main goals:

- Classification: Identifying the type of object in an image.

- Localization: Determining the object's position.

- Detection: Combining classification and localization to identify all objects in an image.

In this project, we address these challenges by developing a real-time traffic situation recognition system that employs two state-of-the-art techniques:

- YOLO (You Only Look Once): A fast and accurate object detection algorithm that processes images in a single forward pass, making it ideal for real-time applications.

- Convolutional Neural Networks (CNN): A class of deep neural networks particularly effective for image recognition tasks, used here for traffic condition classification.

The applications of such a system are vast, ranging from autonomous vehicles and smart traffic lights to efficient traffic management systems and advanced surveillance technologies. By leveraging the power of computer vision, this project aims to:

- Enhance traffic flow management by accurately classifying conditions into three categories: No Traffic, Medium Traffic, and Heavy Traffic.

- Provide a scalable and adaptable solution for smart city infrastructure.

Challenges in Object Detection and Traffic Management

Implementing a robust traffic detection system involves addressing several challenges:

- Dynamic Environments: Varying lighting conditions, weather effects, and fluctuating traffic densities can impact detection accuracy.

- Real-Time Processing: Ensuring the system operates efficiently with minimal latency on standard hardware.

- Data Limitations: Collecting and annotating diverse datasets representative of real-world traffic conditions.

This project leverages advancements in deep learning, including pre-trained models like YOLO, combined with a custom-trained CNN, to overcome these challenges and deliver a reliable solution for real-time traffic management.

Methodology

This project is designed to detect and classify road traffic conditions using a combination of object detection and traffic condition classification techniques. The methodology can be broken down into the following key components:

Related Work

Various algorithms have been evaluated to determine the most effective solution for real-time traffic detection and classification:

- R-CNN (Region-Based Convolutional Neural Networks): Known for accuracy but computationally expensive, making it unsuitable for real-time applications.

- SSD (Single Shot MultiBox Detector): Faster than R-CNN but less precise.

- YOLO (You Only Look Once): Combines speed and accuracy, making it ideal for real-time systems.

After thorough analysis, YOLO was selected due to its ability to perform object detection in real time without compromising accuracy.

YOLO Framework

YOLOv3, a state-of-the-art object detection model, divides an image into a grid of cells. Each grid cell predicts:

- Bounding Boxes: The position and dimensions of detected objects.

- Class Probabilities: The likelihood of each object belonging to specific classes.

Using a single forward pass of the neural network, YOLOv3 produces all of its predictions at once, which is what makes it fast. Because each grid cell makes its own predictions, nearby or partially overlapping objects can still be detected as separate objects.
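To make the per-cell output concrete, the following minimal sketch converts one raw prediction row into a pixel-space bounding box and a class label. The row layout (normalized center, width, and height, followed by an objectness value and one score per class), the function name `decode_prediction`, and the frame size are assumptions made for illustration; they are not taken from the project's code.

```python
def decode_prediction(row, frame_width, frame_height):
    """Convert one raw prediction row into a pixel-space box and a class label.

    Assumed row layout (hypothetical, for illustration only):
    [center_x, center_y, width, height, objectness, score_0, score_1, ...]
    where the four box values are normalized to the range [0, 1].
    The objectness value at index 4 is not used in this sketch.
    """
    center_x, center_y, width, height = row[:4]
    class_scores = row[5:]

    # Pick the most likely class for this box.
    class_id = max(range(len(class_scores)), key=lambda i: class_scores[i])
    confidence = class_scores[class_id]

    # Scale to pixels and convert from center format to top-left format.
    box_w = round(width * frame_width)
    box_h = round(height * frame_height)
    left = round(center_x * frame_width - box_w / 2)
    top = round(center_y * frame_height - box_h / 2)
    return (left, top, box_w, box_h), class_id, confidence


# One made-up prediction with three classes on a 640x480 frame.
box, class_id, confidence = decode_prediction(
    [0.5, 0.5, 0.2, 0.4, 0.9, 0.1, 0.8, 0.1], frame_width=640, frame_height=480
)
print(box, class_id, confidence)
```

In practice, rows whose confidence falls below a chosen threshold would be discarded before any further processing.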

Key Features of YOLOv3:

- Regression and Frame Selection: Predicts the location of objects and adjusts bounding boxes.

- Non-Maximum Suppression: Ensures only the most relevant bounding boxes are retained.

- Multi-Scale Predictions: Improves detection for objects of varying sizes.
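Non-maximum suppression can be sketched in a few lines. The version below is a plain greedy variant written for illustration, not the implementation used in the project: boxes are assumed to be `(left, top, width, height)` tuples, and the threshold of 0.5 is an arbitrary example value.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (left, top, width, height)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box (made-up values).
boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (50, 50, 10, 10)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
print(kept)
```

Here the second box is suppressed because it overlaps the higher-scoring first box, while the third box is kept. Detectors commonly apply this step separately for each class.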

Convolutional Neural Network (CNN)

A CNN was designed and trained to classify traffic conditions into three categories:

- No Traffic

- Medium Traffic

- Heavy Traffic

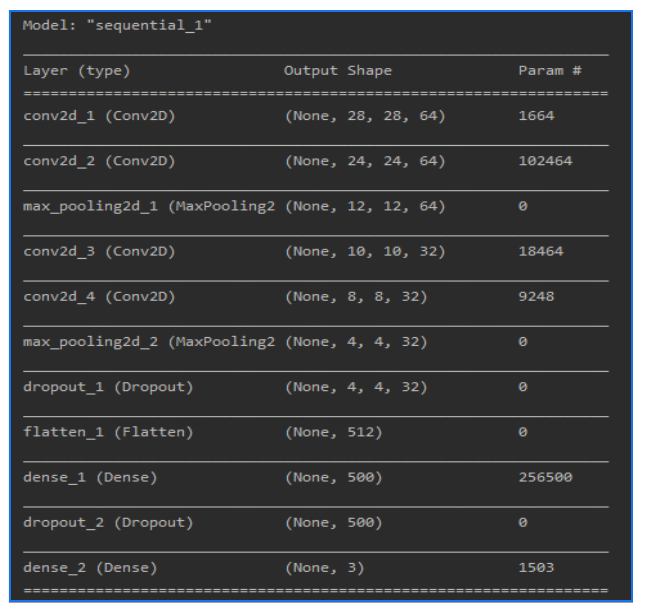

CNN Architecture

The CNN consists of the following layers:

- Convolutional Layers: Extract features from input images by applying convolution operations.

- Pooling Layers: Reduce spatial dimensions and make the extracted features less sensitive to small translations of the input.

- Fully Connected Layers: Map the extracted features to traffic condition labels.
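As a small illustration of how a pooling layer reduces spatial dimensions, the sketch below applies 2×2 max pooling with stride 2 to a toy feature map. The pool size, the function name `max_pool_2x2`, and the input values are assumptions for illustration; the text does not state which pooling configuration the project's CNN uses.

```python
def max_pool_2x2(feature_map):
    """Apply 2x2 max pooling with stride 2 to a 2D list with even height and width."""
    pooled = []
    for r in range(0, len(feature_map), 2):
        row = []
        for c in range(0, len(feature_map[0]), 2):
            window = (
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            row.append(max(window))  # keep the strongest response in each window
        pooled.append(row)
    return pooled


# A made-up 4x4 feature map is reduced to 2x2.
feature_map = [
    [1, 2, 5, 6],
    [3, 4, 7, 8],
    [9, 10, 13, 14],
    [11, 12, 15, 16],
]
pooled = max_pool_2x2(feature_map)
print(pooled)
```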

Data Augmentation

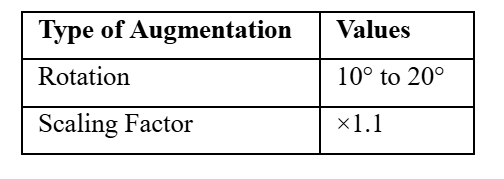

To prevent overfitting and improve model robustness, data augmentation techniques such as rotation (10°–20°) and scaling (×1.1) were applied to the dataset.

System Design

The proposed system is divided into three stages:

- System Initialization:

  - A GUI allows users to select either a real-time webcam feed or pre-recorded videos as input.

  - Frames are extracted from the video feed using OpenCV for processing.

- Traffic Participants Detection:

  - YOLOv3 processes each frame to detect and identify vehicles and other traffic participants.

- Traffic Condition Prediction:

  - Frames are passed to the CNN, which classifies the traffic situation into one of the three predefined categories.

  - Bounding boxes and traffic condition probabilities are displayed on the GUI.
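The three stages can be sketched as a simple per-frame loop. In this sketch, `run_pipeline`, `detect`, and `classify` are hypothetical names: the two callables stand in for the YOLOv3 detector and the CNN classifier, and the order of the class labels is assumed. In the real system the frames would come from OpenCV (for example through `cv2.VideoCapture`); here stub data is used so the example runs without a camera or trained models.

```python
def run_pipeline(frames, detect, classify):
    """Yield (detections, label, probabilities) for each frame.

    `detect` and `classify` are hypothetical callables standing in for the
    YOLOv3 detector and the CNN classifier.
    """
    labels = ["No Traffic", "Medium Traffic", "Heavy Traffic"]  # assumed order
    for frame in frames:
        detections = detect(frame)       # stage 2: traffic participants
        probabilities = classify(frame)  # stage 3: one probability per class
        best = max(range(len(labels)), key=lambda i: probabilities[i])
        yield detections, labels[best], probabilities


# Stub components: each "frame" is just a dict with a made-up car count.
def fake_detect(frame):
    return [("car", (10, 20, 50, 30))] * frame["cars"]


def fake_classify(frame):
    return [0.1, 0.2, 0.7] if frame["cars"] > 5 else [0.8, 0.15, 0.05]


results = list(run_pipeline([{"cars": 1}, {"cars": 8}], fake_detect, fake_classify))
for detections, label, probabilities in results:
    print(len(detections), label, probabilities)
```

Passing the detector and classifier in as callables keeps the loop independent of any particular model, so either component can be swapped without changing the pipeline.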

Experiments

To evaluate the proposed system, we conducted a series of experiments, including dataset preparation, training, testing, and performance evaluation. These steps demonstrate the robustness and accuracy of our real-time traffic classification model.

Dataset Preparation

The dataset used for this project was created from two primary sources:

- Custom-Collected Data: Videos of traffic from various streets in Frankfurt were captured and converted into frames. These frames were labeled and categorized into three classes: No Traffic, Medium Traffic, and Heavy Traffic.

- Online Resources: Additional traffic images were sourced online to increase dataset diversity and ensure better model generalization.

The final dataset was divided as follows:

- Training Set: 2,500 images for model learning.

- Testing Set: 500 images for performance evaluation.

- Validation Set: 20% of the remaining training set for fine-tuning the model.
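One possible reading of this split is that 20% of the 2,500 training images are held out for validation. The sketch below illustrates that reading only; the shuffling strategy, the seed, the function name `split_train_validation`, and the placeholder file names are all assumptions and are not taken from the project.

```python
import random


def split_train_validation(items, validation_fraction=0.2, seed=0):
    """Shuffle the items and hold out a fraction of them for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a reproducible split
    n_val = round(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Placeholder file names standing in for the 2,500 training images.
image_ids = [f"img_{i:04d}.jpg" for i in range(2500)]
train_ids, val_ids = split_train_validation(image_ids)
print(len(train_ids), len(val_ids))
```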

Figure: Dataset preparation, categorization, and distribution.

Figure: Sample images from the training set for each traffic condition class.

Data Augmentation

To address overfitting and improve the model’s ability to handle variations, data augmentation techniques were applied:

- Rotation: Images were rotated randomly between 10° and 20°.

- Scaling: Images were scaled by a factor of 1.1.

These transformations ensured that the model could generalize well to unseen data.
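The two transformations can be combined into a single affine matrix: a rotation about the image center multiplied by a scale factor. The sketch below samples an angle between 10° and 20° and builds such a matrix. The image size of 224×224, the function names, and the seed are assumptions for illustration. The matrix has the same form as the one documented for OpenCV's `cv2.getRotationMatrix2D`, so, converted to a floating-point NumPy array, it could be applied to an image with `cv2.warpAffine`.

```python
import math
import random


def random_augmentation_matrix(width, height, rng,
                               min_angle=10.0, max_angle=20.0, scale=1.1):
    """Return (angle, 2x3 affine matrix): rotation about the image center plus scaling."""
    angle = rng.uniform(min_angle, max_angle)  # degrees
    alpha = scale * math.cos(math.radians(angle))
    beta = scale * math.sin(math.radians(angle))
    cx, cy = width / 2, height / 2
    matrix = [
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ]
    return angle, matrix


def apply_affine(matrix, x, y):
    """Map a single point (x, y) through a 2x3 affine matrix."""
    (a, b, tx), (c, d, ty) = matrix
    return a * x + b * y + tx, c * x + d * y + ty


rng = random.Random(42)  # fixed seed so the example is reproducible
angle, matrix = random_augmentation_matrix(224, 224, rng)
center = apply_affine(matrix, 112, 112)  # the image center stays in place
print(angle, center)
```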

Training the CNN

The CNN was trained using the Keras library with a TensorFlow backend. Key training steps included:

- Initialization: The Sequential model was used to define the architecture, including convolutional, pooling, and fully connected layers.

- Optimization: The Adam optimizer was applied with a learning rate fine-tuned during experiments.

- Loss Function: Categorical cross-entropy was chosen to measure classification performance.
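For a one-hot label y and predicted class probabilities p, categorical cross-entropy is the negative sum of y_c · log(p_c) over the classes, which reduces to the negative log of the probability assigned to the true class. The following standalone sketch computes it by hand for two made-up predictions. Keras evaluates this loss internally during training; the function name and the probability values here are illustrative assumptions.

```python
import math


def categorical_cross_entropy(one_hot_label, predicted_probs, eps=1e-12):
    """Cross-entropy between a one-hot label and a predicted distribution."""
    # eps guards against log(0) when a probability is exactly zero.
    return -sum(
        t * math.log(max(p, eps))
        for t, p in zip(one_hot_label, predicted_probs)
    )


# True class is the second of three classes (made-up example).
label = [0, 1, 0]
confident = categorical_cross_entropy(label, [0.1, 0.7, 0.2])
wrong = categorical_cross_entropy(label, [0.7, 0.1, 0.2])
print(confident, wrong)
```

The loss is small when the true class receives a high probability and grows quickly as that probability drops, which is what drives the optimizer toward confident, correct predictions.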

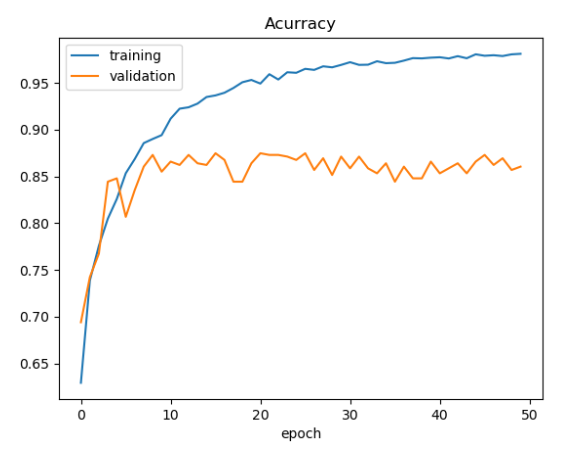

Results

The model achieved:

- Training Accuracy: Above 80%.

- Validation Accuracy: A stable performance indicating low overfitting.

The training and validation accuracy curves in the figure showed a consistent upward trend, indicating a stable learning process.

Figure: Accuracy trends during the training and validation phases.

Testing and Real-Time Evaluation

The trained model was tested on a laptop with the following specifications:

- Processor: Intel Core i3 (2.30 GHz).

- RAM: 8 GB.

- Camera: Built-in webcam for real-time detection.

The system was tested using image sequences from street views, and the results were evaluated based on detection and classification accuracy.
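Real-time behaviour on such hardware is usually expressed as frames processed per second. The text does not report a frame rate, so the sketch below only shows one way such a figure could be measured. `measure_fps` is a hypothetical helper, and the processing function and frames are stand-ins for the actual detection and classification step.

```python
import time


def measure_fps(process_frame, frames):
    """Return the average number of frames processed per second."""
    start = time.perf_counter()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


# Stand-in workload: summing a short list instead of running the models.
fps = measure_fps(lambda frame: sum(frame), [[1, 2, 3]] * 1000)
print(f"{fps:.1f} frames per second")
```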

Figure: Architecture of the CNN used in this project.

Experimental Results

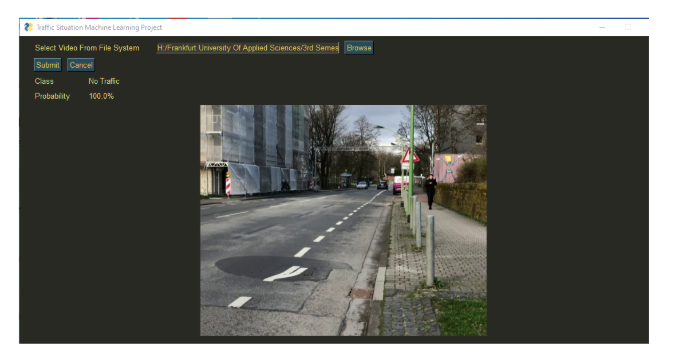

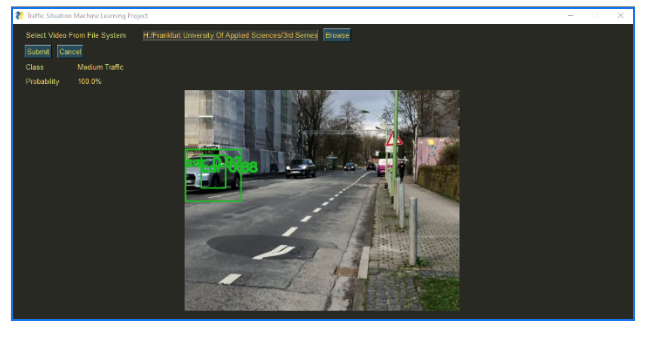

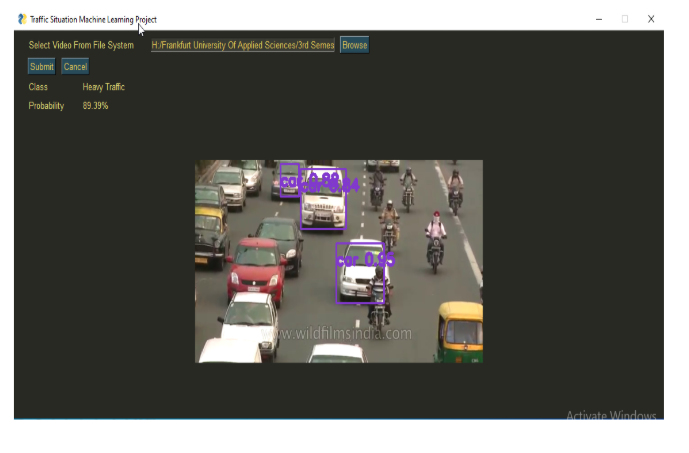

We tested the system on a laptop with an Intel Core i3 (2.30 GHz) CPU, 8 GB of RAM, and a webcam, using image sequences of street scenes. The system detected vehicles and classified traffic conditions successfully. The figures show some of the results.

A real-time example of detecting no traffic.

A real-time example of detecting medium traffic.

A real-time example of detecting heavy traffic.

- The system successfully detected vehicles and classified traffic conditions with high confidence scores.

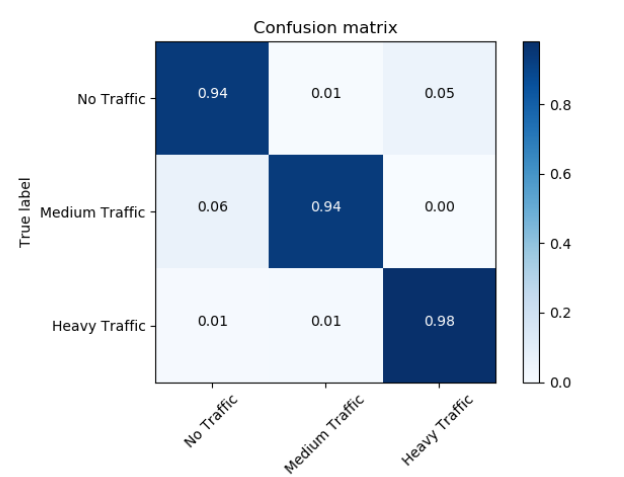

Confusion Matrix

A confusion matrix was generated to analyze the performance across the three classes.

Figure: Confusion matrix of classification performance across the three traffic classes.

The results showed:

- No Traffic: 94% accuracy.

- Medium Traffic: 94% accuracy.

- Heavy Traffic: 98% accuracy.

This demonstrates that the model performs reliably for all traffic scenarios.
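Per-class figures of this kind are typically read off the confusion matrix by dividing each diagonal entry by its row total. The sketch below shows that calculation on toy counts, which are not the project's results. It assumes that rows hold the true classes and columns the predicted classes, and that the per-class accuracy reported above is this row-normalized value.

```python
def per_class_accuracy(confusion):
    """Fraction of each true class that was predicted correctly.

    Rows are true classes, columns are predicted classes.
    """
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


class_names = ["No Traffic", "Medium Traffic", "Heavy Traffic"]

# Toy counts for illustration only, not the project's measurements.
toy_confusion = [
    [8, 2, 0],
    [1, 8, 1],
    [0, 0, 10],
]

accuracies = per_class_accuracy(toy_confusion)
correct = sum(toy_confusion[i][i] for i in range(len(toy_confusion)))
overall = correct / sum(sum(row) for row in toy_confusion)

for name, accuracy in zip(class_names, accuracies):
    print(f"{name}: {accuracy:.0%}")
print(f"Overall: {overall:.1%}")
```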

Insights and Observations

- The model performed well under varying lighting conditions and traffic densities, confirming its robustness.

- The use of data augmentation played a significant role in improving generalization, particularly for edge cases in the dataset.

Conclusion

In this work, we have successfully developed and implemented a real-time traffic situation recognition system by combining two powerful machine learning models: YOLOv3 for object detection and a custom Convolutional Neural Network (CNN) for traffic classification. The system was designed to address critical challenges in traffic management, including real-time operation, adaptability to dynamic environments, and robustness across varying traffic conditions.

The model achieved an average classification accuracy exceeding 80%, demonstrating its effectiveness in real-world scenarios. By leveraging a custom-built dataset enriched with diverse traffic conditions and augmented with transformations like rotation and scaling, we ensured the system’s ability to generalize well to unseen data. The system’s performance was validated using real-time video feeds and pre-recorded traffic sequences, confirming its practicality for real-time deployment.

Key Achievements

- Real-Time Performance: The system operates efficiently on standard hardware, processing traffic data in real time using webcam feeds or pre-recorded videos.

- Accurate Classification: The combination of YOLOv3 and CNN provided robust detection and classification across three traffic categories: No Traffic, Medium Traffic, and Heavy Traffic.

- Adaptability: The use of data augmentation and diverse datasets enhanced the system’s performance under varying conditions, including lighting changes and diverse traffic densities.

Practical Implications

This project highlights the potential of integrating machine learning and computer vision for traffic management. Its applications include:

- Smart Traffic Lights: Real-time detection can optimize traffic light timings based on current traffic conditions.

- Autonomous Vehicles: Enhanced object detection and traffic classification can improve vehicle navigation and safety.

- Urban Planning: Insights from traffic data can assist in designing more efficient road networks.

Future Work

While the system has demonstrated strong performance, there are several avenues for improvement and extension:

- Integration with IoT Devices: Expanding the system’s capabilities to incorporate data from multiple cameras or IoT sensors can provide a holistic view of traffic conditions.

- Enhanced Dataset: Including data from different geographical regions and weather conditions will further improve the model’s generalizability.

- Performance Optimization: Leveraging GPU-accelerated hardware or lightweight model architectures like YOLOv5 can significantly enhance processing speed and efficiency.

- Multimodal Analysis: Integrating additional features, such as road sign recognition or pedestrian detection, can expand the system’s applications.

Conclusion Statement

By combining advanced object detection with traffic condition classification, this project showcases the transformative potential of deep learning in real-time traffic management. It lays a foundation for more intelligent, data-driven traffic solutions, contributing to the vision of smarter and safer urban mobility.
