Revolutionizing Fashion E-Commerce with Visual Search and Real-Time Product Discovery

Picture this: you’re out on the street and spot a pair of shoes you absolutely love. But the store is closed, or maybe it’s out of your size. Frustrated, you wonder—what if you could snap a picture and instantly find thousands of similar options online? That moment of curiosity sparked the creation of this project.

Inspired by Alibaba’s visual search feature, which lets users take a photo to find similar products, I set out to bring this technology to the fashion world. My goal was simple: to make shopping as effortless and intuitive as taking a photo. By building a system that combines real-time image recognition with similarity search, I’ve created a tool that changes how people discover and shop for clothing online.

This project isn’t just about technology—it’s about enhancing the joy of discovery for shoppers and empowering retailers to connect with their customers like never before.

What I Built

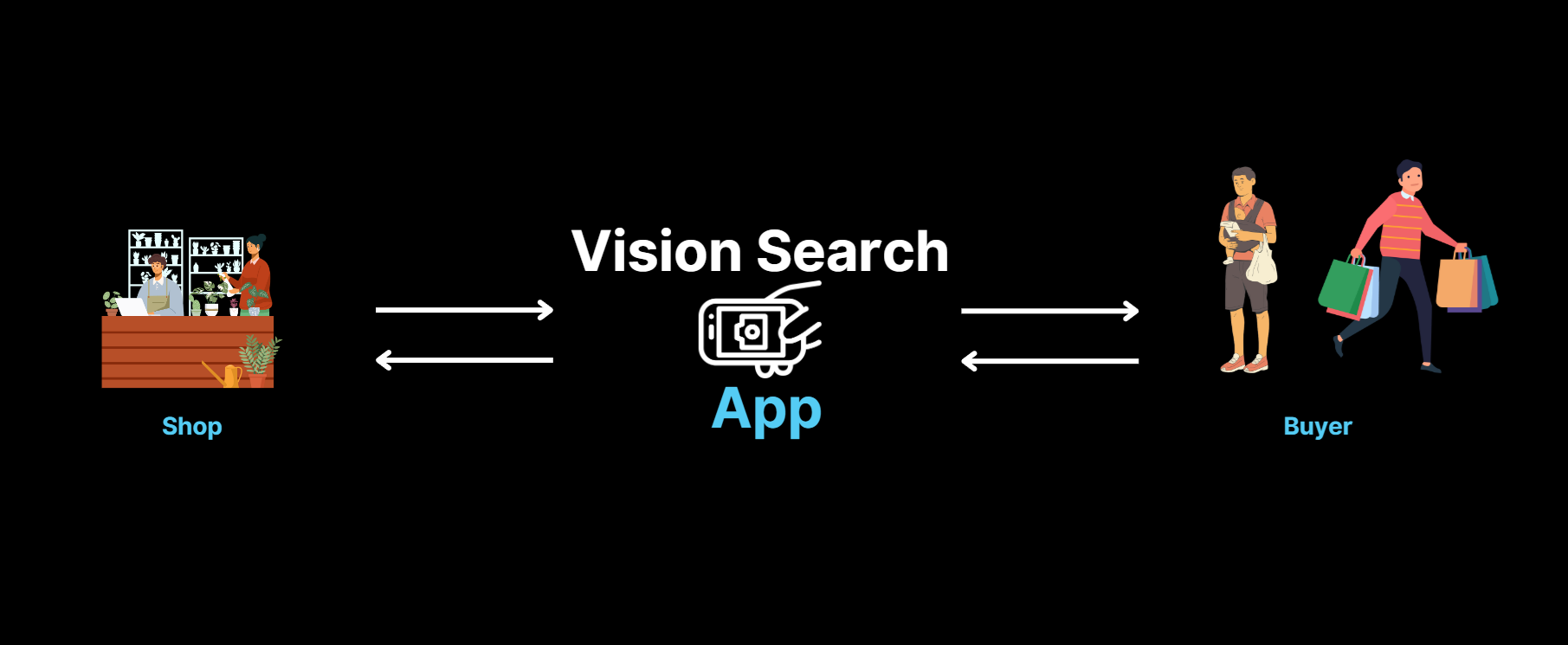

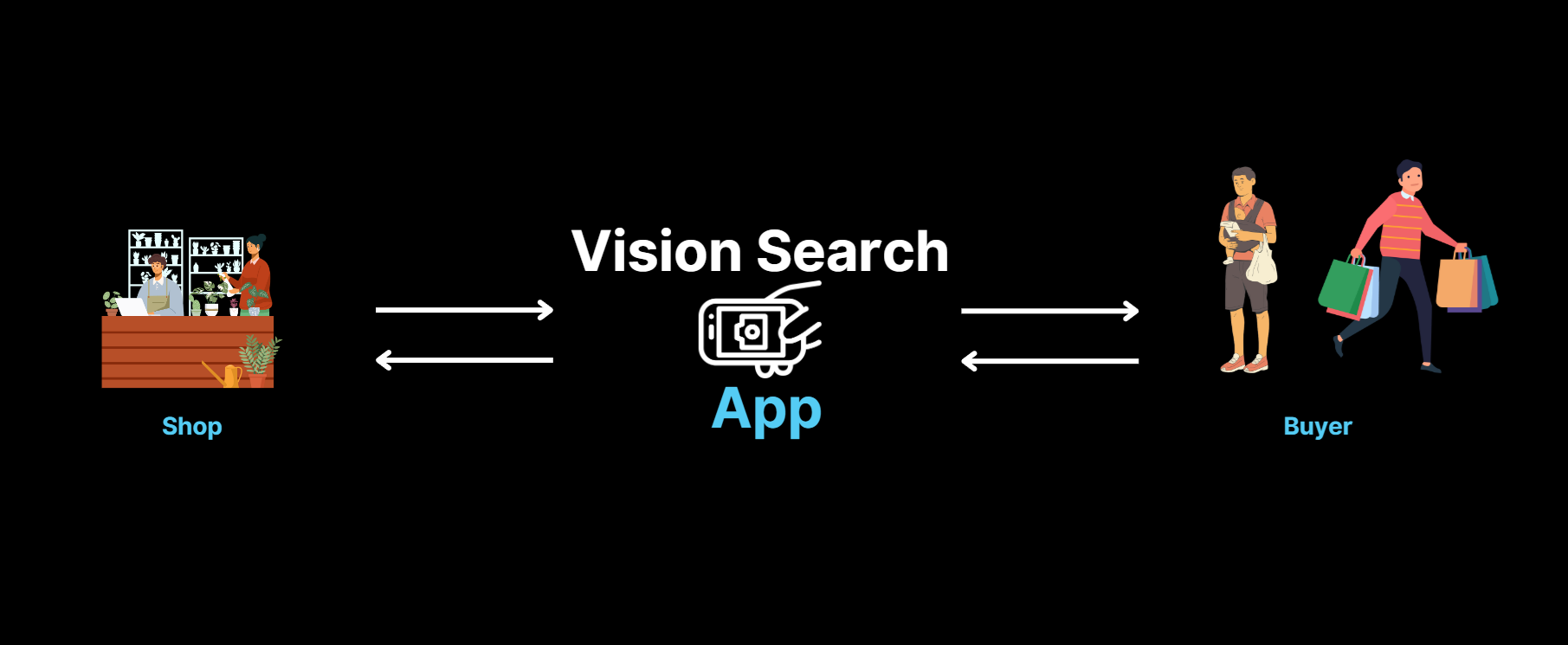

My system integrates advanced computer vision models and multi-modal techniques into two complementary interfaces:

- Mobile App: A real-time visual recognition system that uses the phone camera to detect items and find similar products instantly.

- Web Platform: A versatile platform that lets users run similarity-based searches from uploaded images or keyword inputs.

The project uses a dataset of 2,000+ high-quality fashion images, including shoes and clothing, to train and test the system. The models are built using OpenCLIP ViT-L/14 embeddings for robust image feature extraction, and ChromaDB is utilized for fast and accurate similarity searches.
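To make the similarity-search idea concrete, here is a minimal sketch in plain Python. It is not the project's code: the toy 3-dimensional vectors are hypothetical stand-ins for OpenCLIP ViT-L/14 embeddings (which have 768 dimensions), and the brute-force loop stands in for the index that ChromaDB maintains. The function names `cosine_similarity` and `top_k_similar` are illustrative assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_similar(query, catalog, k=2):
    """Return the ids of the k catalog items most similar to the query, best first."""
    scored = [(cosine_similarity(query, vec), item_id) for item_id, vec in catalog.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [item_id for _, item_id in scored[:k]]


# Toy "embeddings" (hypothetical values, for illustration only).
catalog = {
    "red_sneaker": [0.9, 0.1, 0.0],
    "white_sneaker": [0.8, 0.3, 0.1],
    "black_boot": [0.1, 0.9, 0.2],
    "denim_jacket": [0.0, 0.2, 0.9],
}

# Stands in for the embedding of a photographed shoe.
query = [0.85, 0.15, 0.05]

print(top_k_similar(query, catalog))  # ['red_sneaker', 'white_sneaker']
```

A vector database does the same ranking, but with an index structure so that it does not have to compare the query against every item in a large catalog.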

All code, including the model implementation and database integration, is documented in the project’s code repository. The following materials are attached for deeper technical insight:

- Dataset used

- Model used

- Video of the working system with an explanation (both mobile and web)

How It Works

Behind the scenes, advanced technology powers this seamless experience:

- Image Recognition: Using OpenCLIP ViT-L/14 embeddings, the system extracts details like shape, color, and texture from images.

- Similarity Search: A vector database (ChromaDB) matches these details to a catalog of thousands of items, delivering fast and accurate results.

- Real-Time Interaction: The mobile app processes live camera feeds, turning a moment of inspiration into instant discovery.

- Multi-Modal Search: Users can upload photos, take snapshots, or search with keywords to find the product they’re looking for, making the system adaptable and inclusive.

This combination of advanced tools and real-world usability creates a truly transformative shopping experience.
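The multi-modal step listed above works because a CLIP-style model places images and text in the same embedding space, so one index can serve both kinds of query. The sketch below shows only the dispatch logic, under stated assumptions: `SearchRequest`, `to_query_vector`, and the two `fake_embed_*` encoders are hypothetical names invented for illustration, and the fake encoders return dummy vectors rather than real embeddings.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Vector = List[float]


@dataclass
class SearchRequest:
    """A user query: exactly one of image_bytes or text should be set."""
    image_bytes: Optional[bytes] = None
    text: Optional[str] = None


def to_query_vector(
    request: SearchRequest,
    embed_image: Callable[[bytes], Vector],
    embed_text: Callable[[str], Vector],
) -> Vector:
    """Choose the encoder that matches the input type and return its embedding."""
    if (request.image_bytes is None) == (request.text is None):
        raise ValueError("provide exactly one of image_bytes or text")
    if request.image_bytes is not None:
        return embed_image(request.image_bytes)
    return embed_text(request.text)


# Dummy encoders so the sketch runs without a model (hypothetical stand-ins).
def fake_embed_image(data: bytes) -> Vector:
    return [float(len(data)), 0.0]


def fake_embed_text(text: str) -> Vector:
    return [0.0, float(len(text))]


photo_query = to_query_vector(SearchRequest(image_bytes=b"abc"), fake_embed_image, fake_embed_text)
keyword_query = to_query_vector(SearchRequest(text="boots"), fake_embed_image, fake_embed_text)
print(photo_query, keyword_query)  # [3.0, 0.0] [0.0, 5.0]
```

In a real deployment the two callables would presumably wrap the OpenCLIP model's `encode_image` and `encode_text` methods, and the resulting vector would be passed to a ChromaDB collection's `query` call. How the project wires these together is not shown in this write-up.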

Applications and Impact

- Enhancing Fashion E-Commerce:

  - Makes it effortless to find clothing, shoes, or accessories that match your style.

  - Reduces the frustration of endless browsing by providing instant matches based on photos or keywords.

  - Encourages exploration, helping shoppers discover new styles, colors, or brands they might not have considered.

- Real-World Use Cases:

  - Fashion Retail: Assists customers in finding similar products, whether it’s a shoe in a different color or a shirt in a comparable style.

  - Footwear: Identifies alternatives for running shoes, casual sneakers, or formal footwear.

  - Trend Watchers: Quickly compare similar items to stay ahead of fashion trends.

- Key Impacts:

  - Turns catalogs into discovery engines by offering instant recommendations based on visual and textual inputs.

  - Drives higher conversion rates in e-commerce through precise, relevant suggestions.

  - Demonstrates a scalable solution for cross-domain applications.

Why It Stands Out

This project doesn’t just replicate existing ideas—it redefines how people discover and shop for fashion:

- Real-Time Search: The mobile app offers instant results from live camera input, making it ideal for on-the-go users.

- Broad Fashion Focus: From shoes to outerwear and beyond, the system is optimized for a wide range of items.

- Multi-Modal Flexibility: Supports photos, camera scans, and text input, adapting to any user preference.

- Scalable and Efficient: Built using OpenCLIP and ChromaDB, the system is fast, reliable, and capable of handling large catalogs with ease.

Future Potential

The system could be extended in several directions:

- Augmented Reality Try-Ons: Imagine virtually trying on shoes or clothes before making a purchase.

- Expanded Catalog: Add accessories, activewear, or luxury items for a more comprehensive shopping experience.

- In-Store Integration: Enable kiosks or wearable tech to connect the online and offline shopping worlds seamlessly.

- Hyper-Personalization: Integrate user behavior analytics to provide tailored recommendations. For example, suggest products based on browsing history, favorite styles, or seasonal trends, turning visual search into a fully personalized shopping journey.

Conclusion

In summary, this project bridges the gap between inspiration and discovery, changing how people shop for fashion. By combining computer vision, real-time interaction, and multi-modal search, I’ve created a seamless and intuitive shopping experience. This solution not only simplifies the journey for shoppers but also gives retailers a powerful tool to connect with their audience. It’s more than a system; it’s a new way to discover, explore, and enjoy fashion.