Have you spent countless hours tailoring your resume for a specific job? It is one of the most tedious parts of job hunting. Even if you have the right skills for the job, a resume that does not catch the hiring manager's eye is worth little. Today a resume is read not only by a human but also by an applicant tracking system (ATS), which may fail to parse it properly and may even reject it if it does not find the right set of keywords. This calls for a system that generates resumes that are tailored to the job description, easily parsed by an ATS, and clean and professional in appearance with minimal language errors. ResuMate is designed to produce such resumes within minutes and make job hunting easier for people in technology.

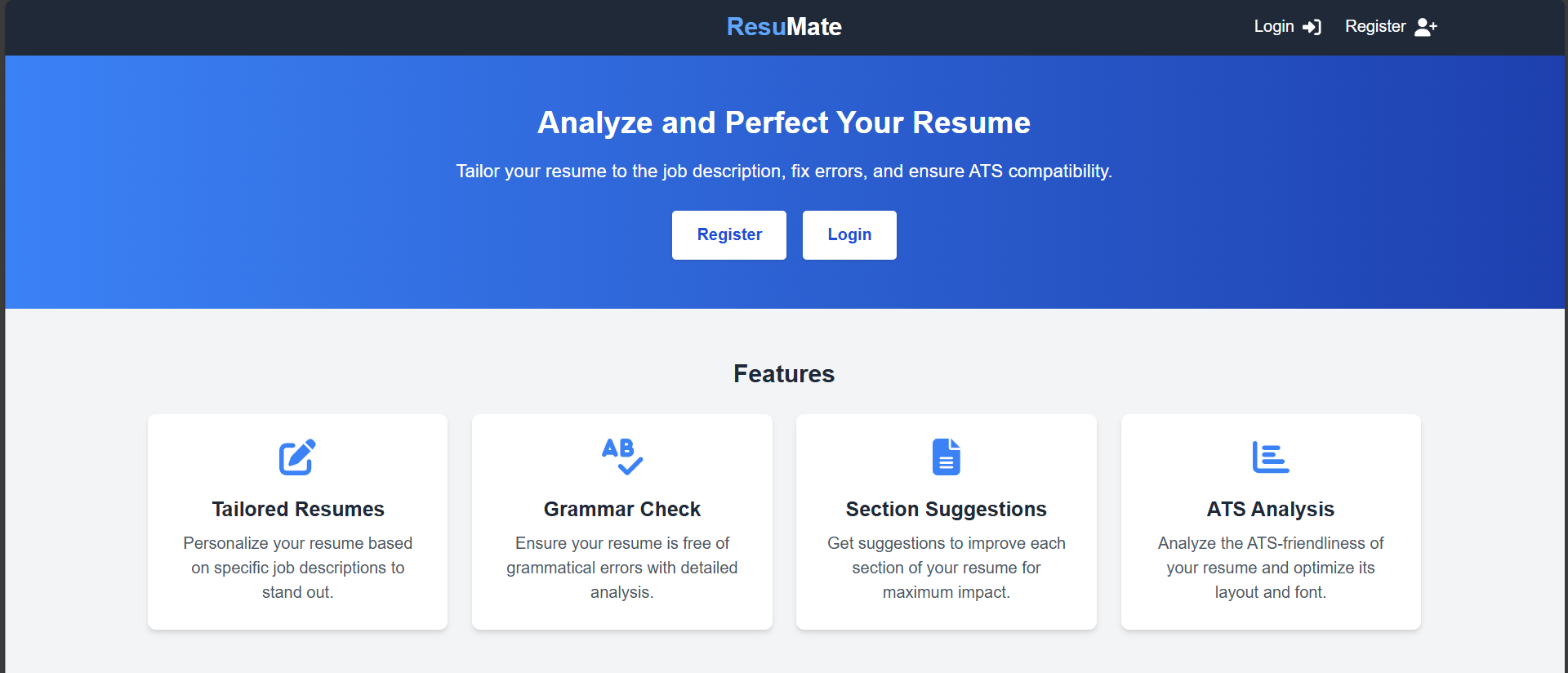

Most resumes are rejected by an automated applicant tracking system (ATS) well before they are seen by a hiring manager. These systems may not be able to parse resumes that have a complex layout or contain too many non-text elements. Also, HR staff deal with many resumes daily and give any single resume at most 30 seconds of attention. It is therefore essential to design a resume that not only looks professional but is also easily parsed by an ATS. ResuMate is an agentic workflow that uses LLMs to transform your existing resume into a professional-looking, minimalistic, and easily parseable PDF. The workflow can be accessed through the web app interface. It has the following salient features:

- Provides suggestions to improve your existing resume based on parseability, language usage, and the job description, along with actionable insights.

- Generates a clean, single-column, LaTeX-based PDF resume with all the suggestions incorporated.

This section explains the architecture of the application and workings of the agentic workflow.

The figure above shows the architecture of the agentic workflow. It was created keeping three things in mind:

The user uploads the resume in PDF format along with an optional job description. The resume is first converted into JPEG images (one image per page) using pdf2image, and the images are then encoded as base64 strings that can be sent in API calls to the Multimodal Large Language Model (MLLM). Here we use the Gemini Flash 2.0 model as the MLLM (see Experiments for why this model was selected), which offers fast inference times and low hallucination rates. The snippet below shows the PDF-to-image conversion:

```python
from pdf2image import convert_from_path

pdf_path = '/content/resume.pdf'

# Render each PDF page as a PIL image
images = convert_from_path(pdf_path)

# Save every page as a separate JPEG file
for i, image in enumerate(images):
    image.save(f'page_{i + 1}.jpg', 'JPEG')
```
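The base64 step is not shown in the conversion snippet, so here is a minimal sketch of how it might look. The function names `jpeg_bytes_to_data_url` and `build_image_message` are hypothetical, and the message layout assumes an OpenAI-style chat payload with `image_url` parts; the project's actual request format may differ.

```python
import base64


def jpeg_bytes_to_data_url(jpeg_bytes: bytes) -> str:
    """Encode raw JPEG bytes as a base64 data URL."""
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    return f"data:image/jpeg;base64,{encoded}"


def build_image_message(prompt: str, data_urls: list[str]) -> dict:
    """Assemble one user message with the prompt and one image part per page.

    Assumption: the API accepts OpenAI-style multimodal content parts.
    """
    content = [{"type": "text", "text": prompt}]
    for url in data_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}
```

In practice the JPEG bytes would come from the saved page images, for example by reading `page_1.jpg` in binary mode.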

We use the pdfminer.six library to extract information such as the most commonly used font and its size. This font information can be used to alert the user if an unprofessional-looking font or a small font size is being used. The PyPDF2 library is also used to extract text from the PDF, which acts as a fallback in case the MLLM fails to service the API call. Moreover, PyPDF2 extracts hyperlinks from the PDF, such as the GitHub account URL, LinkedIn page URL, email address, and other important links. The following snippet extracts this information using PyPDF2:

```python
from PyPDF2 import PdfReader


def extract_text_num_pages_and_links(pdf_stream):
    reader = PdfReader(pdf_stream)
    text = ""
    links = []
    num_pages = len(reader.pages)

    for page in reader.pages:
        # Extract text from the page (extract_text may return None)
        text += page.extract_text() or ""

        # Extract links from the page annotations, if any
        if '/Annots' in page:
            for annot in page['/Annots']:
                obj = annot.get_object()
                # Keep only link annotations that carry a URI action
                if obj.get('/Subtype') == '/Link':
                    if '/A' in obj and '/URI' in obj['/A']:
                        links.append(obj['/A']['/URI'])

    return {
        "resume_parsed_text": text,
        "resume_num_pages": num_pages,
        "resume_links": links,
    }
```
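The font check mentioned earlier is not shown in the source, so the following is only a sketch of one way to do it. It walks the pdfminer.six layout tree to collect each character's font name and size, then picks the most frequent pair. The helper names, the 10 pt threshold, and the list of accepted fonts are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical thresholds, not taken from the project.
MIN_FONT_SIZE = 10.0
COMMON_RESUME_FONTS = ("Arial", "Calibri", "Garamond", "Helvetica", "Times")


def iter_char_fonts(pdf_path):
    """Yield (font name, size) for each character found by pdfminer.six."""
    # Imported lazily so the helpers below work without pdfminer.six installed.
    from pdfminer.high_level import extract_pages
    from pdfminer.layout import LTChar, LTTextContainer, LTTextLine

    for page_layout in extract_pages(pdf_path):
        for element in page_layout:
            if not isinstance(element, LTTextContainer):
                continue
            for line in element:
                if not isinstance(line, LTTextLine):
                    continue
                for char in line:
                    if isinstance(char, LTChar):
                        yield char.fontname, char.size


def dominant_font(char_fonts):
    """Return the most frequent (font name, size) pair, or None if empty."""
    counts = Counter(
        # Embedded fonts often carry a subset prefix such as "ABCDEF+".
        (name.split("+")[-1], round(size, 1)) for name, size in char_fonts
    )
    return counts.most_common(1)[0][0] if counts else None


def font_warnings(font_name, font_size):
    """Return a list of human-readable warnings for the dominant font."""
    warnings = []
    if not any(font_name.startswith(known) for known in COMMON_RESUME_FONTS):
        warnings.append(f"Uncommon font for a resume: {font_name}")
    if font_size < MIN_FONT_SIZE:
        warnings.append(f"Font size {font_size} pt is below {MIN_FONT_SIZE} pt")
    return warnings
```

A typical call would be `dominant_font(iter_char_fonts("resume.pdf"))`, followed by `font_warnings(*result)` when the result is not `None`.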

Resumes can be highly unstructured documents, and their sections can differ completely from one candidate to another. After reviewing many tech resumes, we identified the sections that appear most commonly. The following prompt was used to segregate a resume into these sections:

````text
**Instruction:**
Extract the following sections from the resume **if present** and output them **strictly in Markdown format**. Each section should have its own subheading (`##`). **Do not modify any text** from the resume—preserve all original formatting and wording. **Output only the extracted content in Markdown format, without any additional text.**

----------

**Resume Extraction Format:**

```markdown
## Candidate Name
[Extracted Name]

## Location of Candidate
[Extracted Location]

## Experience/Work History
[Extracted Work History, including Freelance Experience]

## Education
[Extracted Education Details]

## Position of Responsibilities
[Extracted Responsibilities]

## Skills
[Extracted Skills]

## Profile Summary / Objective
[Extracted Profile Summary or Objective]

## Projects
[Extracted Projects]

## Publications
[Extracted Publications]

## Interests / Hobbies / Extracurricular Activities
[Extracted Interests, Hobbies, or Extracurricular Activities]

## Awards / Achievements
[Extracted Awards or Achievements]

## Certifications
[Extracted Certifications]

## Languages Known
[Extracted Languages]

## Relevant Coursework
[Extracted Relevant Coursework]
```

**Rules for Extraction:**
- If a section is missing in the resume, **do not generate it** in the output.
- **Do not add, modify, or infer** any information—strictly extract only what is present.
- Maintain the **original text, order, and formatting** from the resume.
- Ensure **only Markdown-formatted output** without any additional explanations or notes.
````

Having each section in Markdown format makes it easy to display on the frontend, so that users can edit their resumes before improving them.
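To hand individual sections to the frontend, the Markdown reply has to be split on its `##` subheadings. The sketch below shows one simple way to do that; the function name `split_markdown_sections` is hypothetical, and it assumes the model follows the extraction format exactly, with one level-2 heading per section.

```python
import re


def split_markdown_sections(markdown: str) -> dict[str, str]:
    """Map each level-2 heading to the text that follows it."""
    sections: dict[str, list[str]] = {}
    current = None
    for line in markdown.splitlines():
        # Match "## Title" but not "### Title" or deeper headings
        match = re.match(r"^##\s+(.*\S)\s*$", line)
        if match:
            current = match.group(1)
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return {title: "\n".join(body).strip() for title, body in sections.items()}


example = "## Candidate Name\nJane Doe\n\n## Skills\n- Python\n- SQL\n"
parsed = split_markdown_sections(example)
```

Text that appears before the first heading is ignored, which matches the prompt's instruction to output only the extracted sections.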

The Markdown sections are then converted to JSON with the following prompt:

```text
Take the given **Markdown-formatted resume** and extract its contents into a structured **JSON format** as shown below. **Ensure the response strictly follows JSON syntax**, and the output should be a valid Python dictionary.

### **Instructions:**
- Extract information under the following sections:
  1. **Education**
  2. **Projects**
  3. **Experience** (includes Work History and Freelance)
  4. **Position of Responsibilities**
- **If a section is missing**, include the key in the JSON but leave it as an **empty list (`[]`)**.
- **Do not modify the original text**—preserve all content exactly as it appears in the resume.
- **All dates must be formatted as "Abbreviated Month-Year" (e.g., "Jan-2023").**
- **Strictly output only the JSON**—no additional text, explanations, or formatting.

---

### **Output Format:**
{
  "Education": [
    {
      "Degree": "<Degree Name>",
      "Institution": "<Institution Name>",
      "Location": "<City/Remote>",
      "Period": { "Start": "<Start Date>", "End": "<End Date>" }
    }
  ],
  "Experience": [
    {
      "Role": "<Role Title>",
      "Company": "<Company Name>",
      "Location": "<City/Remote>",
      "Period": { "Start": "<Start Date>", "End": "<End Date>" },
      "Description": "<Brief Description of Responsibilities>"
    }
  ],
  "Position of Responsibilities": [
    {
      "Title": "<Position Title>",
      "Organization": "<Organization Name>",
      "Location": "<City/Remote>",
      "Period": { "Start": "<Start Date>", "End": "<End Date>" },
      "Description": "<Brief Description of Responsibilities>"
    }
  ],
  "Projects": [
    {
      "Name": "<Project Name>",
      "Description": "<Project Description>",
      "Technologies": "<Technologies Used>",
      "Period": { "Start": "<Start Date>", "End": "<End Date>" }
    }
  ]
}

---

### **Strict Rules:**
- **If a section is missing**, return an **empty list (`[]`)** instead of omitting it.
- **No explanations, additional text, or formatting**—**only valid JSON output**.
- **Preserve original text** exactly as found in the resume.
- **Ensure JSON is well-formed and can be parsed directly in Python.**

---
```

The above prompt converts a group of sections from Markdown to JSON. The sections are divided into multiple groups, and a separate prompt of the same form is made for each group. This avoids conflicts between sections and makes it easier for the LLM to generate accurate JSON responses. One could use the MLLM to generate the JSON directly, but that would require more API calls to a much costlier model.

Each LLM response is then checked using Pydantic AI to ensure that only valid JSON responses are accepted. For this step we use the DeepSeek V3 model, which offers a good balance of inference time and accuracy and performs well on general-purpose tasks, with an MMLU (General) score of 88.5%.
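The project's Pydantic AI schemas are not shown, so the sketch below is a standard-library stand-in rather than the actual validation code. It illustrates the kind of check involved: strip a stray code fence if the model added one, parse the JSON, and confirm that every expected key holds a list. The names `strip_code_fence`, `validate_sections`, and `EXPECTED_KEYS` are hypothetical.

```python
import json

FENCE = "`" * 3  # a Markdown code fence, which models sometimes wrap around JSON
EXPECTED_KEYS = ("Education", "Experience", "Position of Responsibilities", "Projects")


def strip_code_fence(raw: str) -> str:
    """Remove a surrounding code fence if the model added one."""
    text = raw.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]  # drop the opening fence line
        if lines and lines[-1].strip() == FENCE:
            lines = lines[:-1]  # drop the closing fence line
        text = "\n".join(lines)
    return text


def validate_sections(raw: str) -> dict:
    """Parse an LLM reply and check that each expected key holds a list.

    Raises ValueError (json.JSONDecodeError is a subclass) on any problem.
    """
    data = json.loads(strip_code_fence(raw))
    if not isinstance(data, dict):
        raise ValueError("top-level JSON value must be an object")
    for key in EXPECTED_KEYS:
        if not isinstance(data.get(key), list):
            raise ValueError(f"'{key}' must be present and be a list")
    return data
```

A caller could catch `ValueError` and re-issue the prompt. A schema library such as Pydantic goes further by also checking the fields inside each list entry.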

Now that we have structured sections in front of us, we can prepare specific prompts to enhance each section using certain guidelines. For example, take a look at the prompt which can be used to improve the 'Projects' section in a resume.

```text
**Prompt:**
You will receive **two inputs**:
1. A **Job Description (JD)** in text format.
2. A **JSON object** containing **projects** extracted from a resume in the following format:

{
  "Projects": [
    {
      "Name": "<Project Name>",
      "Description": "<Project Description>",
      "Technologies": "<Technologies Used>",
      "Period": { "Start": "<Start Date>", "End": "<End Date>" }
    }
  ]
}

### **Task:**
- **Analyze the job description** and **align each project's description** according to it.
- **Prioritize** projects that match the **technologies, skills, and keywords** found in the job description. The most relevant projects should appear **first** in the JSON.
- **Modify the project descriptions** to subtly align them with the job description while keeping them **concise, clear, and well-structured**.
- **Format the project descriptions in Markdown**, ensuring:
  - Use of **bullet points** for clarity.
  - **Bold formatting** (`**skill**`) for skills/technologies mentioned in both the **job description** and the **project**.
- **Preserve all other data as is** (name, technologies, period, etc.), making no other modifications.

---

### **Output Format:**
The output must be **strictly JSON**, preserving the original structure but with:
1. **Projects reordered** based on relevance to the job description.
2. **Descriptions formatted in Markdown** with relevant skills in **bold**.

Example Output:
{
  "Projects": [
    {
      "Name": "AI Chatbot",
      "Description": "- Developed an AI-driven chatbot using **Python** and **TensorFlow**.\n- Integrated with **FastAPI** for real-time communication.\n- Implemented **NLP models** for enhanced user interactions.",
      "Technologies": "Python, TensorFlow, FastAPI, NLP",
      "Period": { "Start": "Jan-2023", "End": "Dec-2023" }
    },
    {
      "Name": "E-commerce Website",
      "Description": "- Built a full-stack e-commerce platform with **React** and **Next.js**.\n- Implemented a **Node.js** backend with a **MongoDB** database.\n- Optimized performance and user experience with **server-side rendering (SSR)**.",
      "Technologies": "React, Next.js, Node.js, MongoDB, SSR",
      "Period": { "Start": "Mar-2022", "End": "Oct-2022" }
    }
  ]
}

---

### **Strict Rules:**
- **Only output valid JSON**—no additional text, explanations, or formatting.
- **Preserve all original fields** (except reordering projects and modifying descriptions as instructed).
- **Descriptions must be formatted in Markdown**, using bullet points and **bolding** relevant skills.
- **No other modifications** beyond those explicitly stated.

**Final instruction:** **Ensure the response is strictly JSON-compliant, ready to be parsed as a Python dictionary.**
```

Similarly, prompts are created for each section that is present in the resume. Also, the LLM responses are validated by Pydantic AI to ensure accuracy.

With the enhanced resume sections ready, we populate the empty LaTeX template with the extracted information and obtain a nice and minimalistic resume.
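The template itself is not shown in the source, so the following is only a sketch of the populating step under stated assumptions. It assumes placeholders written as `<<NAME>>` (a hypothetical convention) and escapes LaTeX special characters in each value, so that text such as `C#` or `R&D` does not break compilation.

```python
import re

# Characters with special meaning in LaTeX and their escaped forms
_LATEX_SPECIALS = {
    "\\": r"\textbackslash{}",
    "&": r"\&",
    "%": r"\%",
    "$": r"\$",
    "#": r"\#",
    "_": r"\_",
    "{": r"\{",
    "}": r"\}",
    "~": r"\textasciitilde{}",
    "^": r"\textasciicircum{}",
}


def escape_latex(text: str) -> str:
    """Escape LaTeX special characters one character at a time."""
    return "".join(_LATEX_SPECIALS.get(ch, ch) for ch in text)


def fill_template(template: str, fields: dict[str, str]) -> str:
    """Replace each <<KEY>> placeholder with its escaped value.

    Raises KeyError if the template uses a key missing from `fields`.
    """
    return re.sub(
        r"<<(\w+)>>",
        lambda m: escape_latex(fields[m.group(1)]),
        template,
    )


snippet = fill_template(
    r"\textbf{<<NAME>>} \hfill <<SKILLS>>",
    {"NAME": "Jane Doe", "SKILLS": "C# & SQL"},
)
```

Escaping character by character avoids double-escaping the backslashes that earlier replacements introduce. Converting the Markdown bullets and bold markers in the enhanced descriptions to LaTeX would need a separate step that is not sketched here.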

After we have the LaTeX (.tex) file ready, we compile it to PDF directly using TinyTeX, a lightweight TeX distribution whose small footprint makes it well suited to resource-constrained servers:

pdflatex document.tex
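On a server, the same command would usually be run from the backend rather than by hand. The sketch below shows one way to do that with `subprocess`; the function names and the 60-second timeout are assumptions, and it presumes `pdflatex` is on the `PATH`.

```python
import subprocess
from pathlib import Path


def build_pdflatex_command(tex_path, output_dir):
    """Build a non-interactive pdflatex command line."""
    return [
        "pdflatex",
        "-interaction=nonstopmode",  # never stop to prompt for input
        "-halt-on-error",            # exit at the first error
        f"-output-directory={output_dir}",
        str(tex_path),
    ]


def compile_tex(tex_path, output_dir, timeout=60):
    """Compile a .tex file and return the path of the resulting PDF."""
    result = subprocess.run(
        build_pdflatex_command(tex_path, output_dir),
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    if result.returncode != 0:
        # The tail of the log usually contains the actual LaTeX error
        raise RuntimeError(result.stdout[-2000:])
    return Path(output_dir) / (Path(tex_path).stem + ".pdf")
```

Running non-interactively matters on a server: without these flags, a LaTeX error would leave the process waiting for keyboard input until the timeout expires.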

```python
import asyncio

import aiohttp


# Method of the application's LLM client class (class body omitted here).
async def get_llm_response(self, api_key, messages, temperature=1, top_p=1,
                           max_retries=10, initial_backoff=1):
    """
    Fetches a response from the API with retry logic to handle rate limits.

    Args:
        api_key (str): API key for authentication.
        messages (list): Messages to send to the model.
        temperature (float): Sampling temperature for the response.
        top_p (float): top_p value for the response.
        max_retries (int): Maximum number of retries in case of rate limit errors.
        initial_backoff (int): Initial backoff time in seconds.

    Returns:
        str | None: The content of the model response, or None if all retries fail.
    """
    # Define the API URL
    url = "https://openrouter.ai/api/v1/chat/completions"

    # Define the headers for authentication
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    # Fallback models, tried in round-robin order across attempts
    all_models = [
        "deepseek/deepseek-chat-v3-0324:free",
        "meta-llama/llama-3.1-70b-instruct:free",
        "google/gemma-2-9b-it:free",
        "meta-llama/llama-3.2-3b-instruct:free",
        "meta-llama/llama-3.1-405b-instruct:free",
        "mistralai/mistral-7b-instruct:free",
    ]

    # Define the request payload
    payload = {
        "model": None,
        "messages": messages,
        "temperature": temperature,
        "top_p": top_p,
    }

    timeout = aiohttp.ClientTimeout(total=30)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        for attempt in range(max_retries):
            payload["model"] = all_models[attempt % len(all_models)]
            # Log the model rather than the API key to keep secrets out of logs
            print(f"Attempt {attempt + 1} with model: {payload['model']}")
            try:
                async with session.post(url, headers=headers, json=payload) as response:
                    if response.status == 200:
                        data = await response.json()
                        return data["choices"][0]["message"]["content"]
                    print(f"Error: {response.status}, {await response.text()}")
            except aiohttp.ClientError as e:
                print(f"HTTP error: {e}")
            except Exception as e:
                print(f"Unexpected error: {e}")

            # Wait before retrying, unless this was the last attempt
            if attempt + 1 < max_retries:
                wait_time = initial_backoff * (2 ** attempt)  # Exponential backoff
                print(f"Retrying in {wait_time} seconds (attempt {attempt + 1}/{max_retries})...")
                await asyncio.sleep(wait_time)

    print("Max retries reached. Could not fetch response.")
    return None  # Return None if all retries fail
```

The above snippet shows the mechanism for sending queries to the LLM. It rotates through fallback models when a request fails and waits with exponential backoff between attempts. We also use asynchronous programming so that multiple queries can be sent efficiently without blocking one another.
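To make the concurrency point concrete, here is a small sketch of how several section prompts might be sent at once with `asyncio.gather`. The coroutine `fake_llm_call` is a hypothetical stand-in that sleeps instead of calling the API, and the concurrency cap of three is an assumed value, not one taken from the project.

```python
import asyncio


async def fake_llm_call(section: str, delay: float = 0.01) -> str:
    """Hypothetical stand-in for the real LLM request; only sleeps."""
    await asyncio.sleep(delay)
    return f"enhanced {section}"


async def enhance_sections(sections, max_concurrent=3):
    """Process all sections concurrently, at most `max_concurrent` at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def worker(section):
        async with semaphore:
            return await fake_llm_call(section)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(worker(s) for s in sections))


results = asyncio.run(enhance_sections(["Projects", "Experience", "Education"]))
```

Capping concurrency with a semaphore is one way to stay under a provider's rate limit while still overlapping the network waits.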

The web application was built using FastAPI for the backend and Next.js with React for the frontend. FastAPI is a modern, high-performance Python framework built on ASGI, which makes it a popular choice for AI applications. Next.js provides a robust framework for building production-ready web applications.

The whole prompt framework was designed from scratch without using any readily available agentic frameworks to ensure optimum performance and pinpoint customization.

This section describes the experiments that were performed to select the tools used in the project.

Accurately parsing resumes is a critical task in this project. PDF, while commonly used for resumes, presents significant challenges due to its complex layout and the fact that each character is positioned at a specific location in the document. Several tools are available for parsing PDFs in Python, which can be broadly categorized into non-AI-based and AI-based solutions. Non-AI-based tools, such as PyPDF2 and pdfminer.six, offer fast parsing capabilities but struggle with accuracy, especially when handling complex or unstructured layouts. On the other hand, AI-based solutions like MLLMs (Multimodal Large-Language Models) and marker-pdf, an open-source tool that converts PDFs into structured Markdown format, provide more accurate parsing but at the cost of speed.

Below is a comparison of these tools based on speed, accuracy, and the availability of free APIs:

| Tool | Speed | Accuracy | Free API Available |
|---|---|---|---|
| Non-AI-based Tools | High | Low | Not needed |
| MLLM | Low | High | Yes |
| marker-pdf | Moderate | Moderate | Yes (Limited) |

While non-AI-based tools are fast, they fall short on accuracy, which is crucial for parsing resumes. Additionally, these tools do not directly output data in a structured format like Markdown. During experimentation, the MLLM provided the best balance of accuracy and usability, especially since a free API with generous limits is available through OpenRouter. The MLLM was therefore chosen for this project over marker-pdf.

The selection of the MLLM was based mainly on two criteria: inference time and hallucination rate.

Inference time refers to the time a Large Language Model (LLM) takes to process its input (text, images, or both) and generate an output (e.g., a response, prediction, or transformation). The hallucination rate, which measures how frequently an LLM generates inaccurate or non-factual information, is another critical metric, especially for tasks like document summarization. Both metrics matter: fewer hallucinations mean more accurate and reliable generated content, while faster inference shortens API response times and makes for a more efficient user experience. Considering both factors, the Google Gemini Flash 2.0 model was selected for its low hallucination rate and fast inference compared to other MLLMs.

The two key hyperparameters considered were temperature and top P.

Both parameters were tested with values ranging from 0 to 1, in increments of 0.2.
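For reference, the sweep described here can be enumerated in a few lines. The sketch assumes every temperature value was paired with every top P value (a full grid); the source does not say whether all combinations were actually run. The helper name `sweep_values` is hypothetical.

```python
from itertools import product


def sweep_values(start=0.0, stop=1.0, step=0.2):
    """Return evenly spaced values from start to stop, inclusive."""
    count = round((stop - start) / step) + 1
    # Rounding removes floating-point noise such as 0.6000000000000001
    return [round(start + i * step, 10) for i in range(count)]


values = sweep_values()

# Every (temperature, top_p) pair in the assumed full grid
grid = list(product(values, values))
```

With six values per parameter, a full grid contains 36 combinations per model.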

For the MLLM, a top P value of 0.4 and temperature of 0.2 were chosen to prioritize factual accuracy in responses.

For the LLM, which required some degree of creativity to enhance the resume, the temperature was set to 0.4 and top P to 0.6 to allow for more diverse and creative outputs.