Problem Understanding

The problem addresses potential safety threats that women may face, such as walking alone at night or during odd hours, when risks are higher.

It is divided into the following parts:

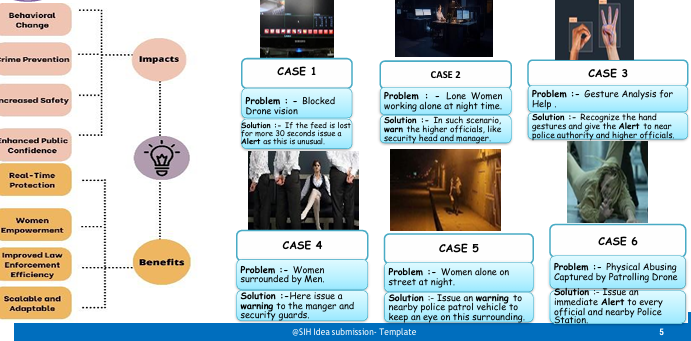

a) Prevention: The system identifies a woman walking alone at night or at odd hours. Upon detection, the system issues a warning to a centralized server. If the woman then encounters a safety threat, an alert is triggered. This alert is also sent to the centralized server, marking the critical phase of prevention.

b) Detection: The system uses cameras to detect people and classify their gender. If a woman is identified in a threatening situation, detection and gender classification enable further actions, such as alerting the appropriate authorities.

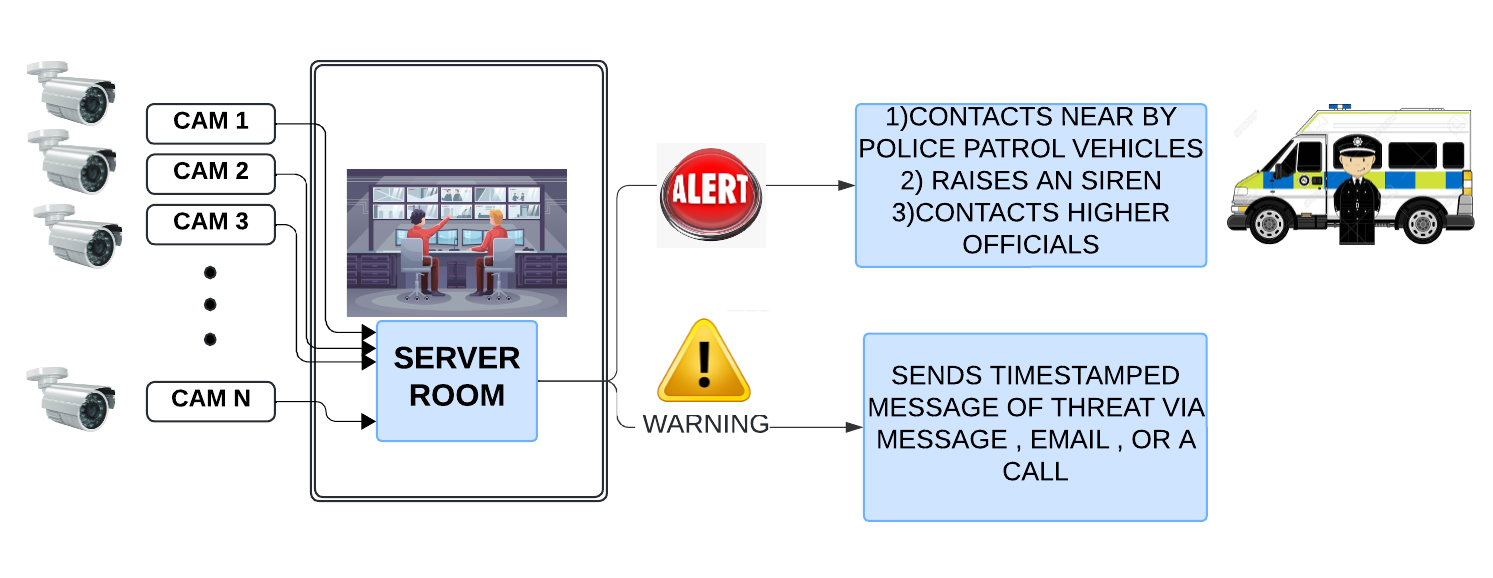

c) Action: When an odd or dangerous situation is identified (e.g., based on behavior patterns), the system sends a notification (via email/SMS) with a timestamp to the authorities. The system can also establish direct communication with the police, who are informed through the centralized server.
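
The Prevention rule in (a) can be expressed as a check on each frame's detections. The sketch below is an illustration under stated assumptions, not the system's actual logic: it assumes the gender classifier emits the strings "female"/"male" for each detected person, and that "night or odd hours" means 22:00 to 05:00. Both the label strings and the time window are hypothetical.

```python
from datetime import datetime, time
from typing import List

# Hypothetical "odd hours" window; the real system's window is not specified.
NIGHT_START = time(22, 0)
NIGHT_END = time(5, 0)


def is_odd_hour(ts: datetime) -> bool:
    """True if the timestamp falls inside the overnight window (which wraps past midnight)."""
    t = ts.time()
    return t >= NIGHT_START or t < NIGHT_END


def lone_woman_warning(genders: List[str], ts: datetime) -> bool:
    """Warn when exactly one person is in view, she is classified as female
    (hypothetical label string), and the frame was captured during odd hours."""
    return len(genders) == 1 and genders[0] == "female" and is_odd_hour(ts)
```

For example, `lone_woman_warning(["female"], datetime(2024, 1, 1, 23, 30))` is `True`, while the same frame at 14:00, or with a second person in view, does not raise a warning.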

Related work

All related research papers were studied.

We implemented a Hand Gesture Detection algorithm using a deep sequential neural network and MediaPipe.
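
The features actually fed to the sequential network are not described here. A common choice, assumed in the sketch below, is to take the 21 hand landmarks that MediaPipe Hands returns per frame (landmark 0 is the wrist), make them translation- and scale-invariant, and group consecutive frames into fixed-length windows for an LSTM-style model. The function names and window parameters are hypothetical.

```python
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float]  # (x, y) in MediaPipe's normalized image coordinates


def normalize_hand(landmarks: Sequence[Landmark]) -> List[float]:
    """Translate so the wrist (landmark 0) is the origin, scale so the farthest
    landmark lies at distance 1, and flatten to [x0, y0, x1, y1, ...]."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    wx, wy = landmarks[0]
    rel = [(x - wx, y - wy) for x, y in landmarks]
    scale = max((dx * dx + dy * dy) ** 0.5 for dx, dy in rel) or 1.0  # avoid dividing by 0
    return [c / scale for dx, dy in rel for c in (dx, dy)]


def sliding_windows(frames: List[List[float]], length: int, step: int = 1) -> List[List[List[float]]]:
    """Group per-frame feature vectors into fixed-length windows for a sequence model."""
    return [frames[i:i + length] for i in range(0, len(frames) - length + 1, step)]


# With MediaPipe, one hand's landmarks could be converted as:
#   [(lm.x, lm.y) for lm in results.multi_hand_landmarks[0].landmark]
```

Normalizing against the wrist keeps the classifier from learning where the hand is in the frame or how close it is to the camera, so it can focus on the gesture shape over time.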

We implemented an Object Detection algorithm to detect people, followed by a classifier for gender prediction, using YOLOv8 and YOLOv11.
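
In this two-stage design, only the detector's person boxes are passed on to the gender classifier. The sketch below shows that hand-off step, assuming detections have already been converted to `(class_id, conf, x1, y1, x2, y2)` tuples; with Ultralytics YOLO these values are available from `results[0].boxes` (`cls`, `conf`, `xyxy`). The confidence threshold is a hypothetical default, and the classifier itself is not shown.

```python
from typing import List, Tuple

Detection = Tuple[int, float, float, float, float, float]  # (class_id, conf, x1, y1, x2, y2)
PERSON_CLASS_ID = 0  # "person" in the COCO label set used by pretrained YOLO weights


def person_crops(dets: List[Detection], width: int, height: int,
                 min_conf: float = 0.5) -> List[Tuple[int, int, int, int]]:
    """Return integer, image-clamped boxes for confident person detections.
    Each box can then be cropped from the frame and sent to the gender classifier."""
    boxes = []
    for cls_id, conf, x1, y1, x2, y2 in dets:
        if cls_id != PERSON_CLASS_ID or conf < min_conf:
            continue
        bx1, by1 = max(0, int(x1)), max(0, int(y1))
        bx2, by2 = min(width, int(x2)), min(height, int(y2))
        if bx2 > bx1 and by2 > by1:  # skip boxes that collapse after clamping
            boxes.append((bx1, by1, bx2, by2))
    return boxes
```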

The runtime data collected by the algorithm is passed through an Anomaly Detection algorithm.

We implemented three algorithms for anomaly detection: Isolation Forest, AutoEncoder, and Local Outlier Factor.
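
In practice, scikit-learn's `IsolationForest` and `LocalOutlierFactor` would typically be used directly. To show what Local Outlier Factor computes, here is a dependency-free sketch. It compares each point's local density with that of its neighbours: a score near 1 means "as dense as its neighbourhood", and a score well above 1 marks an outlier. As a simplification, it uses exactly the k nearest neighbours (ties broken by index) rather than the full k-distance neighbourhood. The feature vectors representing runtime data are assumed.

```python
import math
from typing import List, Sequence


def local_outlier_factor(points: List[Sequence[float]], k: int = 3) -> List[float]:
    """Return an LOF score per point: about 1 for inliers, clearly above 1 for outliers.

    Simplification: each point uses exactly its k nearest neighbours (ties broken
    by index) instead of the full k-distance neighbourhood.
    """
    n = len(points)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < len(points)")

    # k nearest neighbours and k-distance (distance to the k-th neighbour) of every point
    neighbours: List[List[int]] = []
    k_distance: List[float] = []
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: math.dist(points[i], points[j]))
        neighbours.append(others[:k])
        k_distance.append(math.dist(points[i], points[others[k - 1]]))

    # local reachability density: inverse of the mean reachability distance to the neighbours
    lrd: List[float] = []
    for i in range(n):
        reach = [max(k_distance[j], math.dist(points[i], points[j])) for j in neighbours[i]]
        lrd.append(1.0 / max(sum(reach) / k, 1e-12))  # guard against duplicate points

    # LOF: mean neighbour density divided by the point's own density
    return [sum(lrd[j] for j in neighbours[i]) / (k * lrd[i]) for i in range(n)]
```

For a tight square of points plus one point at `(10, 10)`, the square's scores stay close to 1 and the distant point scores above 10.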

We implemented zero-shot classification for Danger Detection (the candidate labels can be modified to cover any necessary detail).
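
One way to turn a zero-shot result into a danger decision is sketched below. It assumes the Hugging Face `zero-shot-classification` pipeline, which returns a dict with `sequence`, `labels`, and `scores`. The input text (for example, an image caption), the label set, and the threshold are all hypothetical.

```python
from typing import AbstractSet, Mapping, Sequence

# Hypothetical label sets; the candidate labels can be changed to suit the deployment.
DANGER_LABELS = {"assault", "harassment", "kidnapping"}
CANDIDATE_LABELS = sorted(DANGER_LABELS | {"normal activity", "people talking"})


def danger_score(result: Mapping[str, Sequence],
                 danger_labels: AbstractSet[str] = DANGER_LABELS) -> float:
    """Highest score assigned to any danger label (0.0 if none are present)."""
    pairs = zip(result["labels"], result["scores"])
    return max((score for label, score in pairs if label in danger_labels), default=0.0)


def is_dangerous(result: Mapping[str, Sequence], threshold: float = 0.6) -> bool:
    """Flag the input as dangerous when a danger label reaches the (hypothetical) threshold."""
    return danger_score(result) >= threshold


# With transformers, `result` could come from:
#   from transformers import pipeline
#   clf = pipeline("zero-shot-classification", model="facebook/bart-large-mnli")
#   result = clf(caption_text, candidate_labels=CANDIDATE_LABELS, multi_label=True)
```

The threshold means different things depending on how labels are scored. With `multi_label=True`, each label is scored independently. Without it, the scores are normalized across all candidate labels, so adding more labels lowers every individual score.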

We implemented a CLIP/BLIP-based Human Action Prediction model for detecting basic activities such as fighting, hugging, laughing, running, sitting, and sleeping.
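
CLIP-style action prediction works by comparing an image embedding with text embeddings of prompts, one prompt per action. The cosine similarities are scaled and softmaxed into probabilities. The sketch below reproduces only that scoring step on toy vectors. The prompt template, the scale of 100.0, and the embeddings are assumptions. In the real model the embeddings come from CLIP's image and text encoders, and Hugging Face's `CLIPModel` already returns the scaled scores as `logits_per_image`.

```python
import math
from typing import Dict, List, Sequence

ACTIONS = ["fighting", "hugging", "laughing", "running", "sitting", "sleeping"]
# Hypothetical prompt template; text_embs[i] is assumed to embed PROMPTS[i].
PROMPTS = [f"a photo of people {a}" for a in ACTIONS]


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def action_probabilities(image_emb: Sequence[float],
                         text_embs: Sequence[Sequence[float]],
                         logit_scale: float = 100.0) -> Dict[str, float]:
    """Softmax over scaled cosine similarities between the image and each action prompt."""
    img = _normalize(image_emb)
    logits = [logit_scale * sum(a * b for a, b in zip(img, _normalize(t))) for t in text_embs]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return {a: e / total for a, e in zip(ACTIONS, exps)}
```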

We implemented a BLIP-based Image Info Generator for real-time summary generation.
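
Captioning every frame is usually too slow for live video, so a real-time summary needs some throttling. The sketch below shows one assumed approach. It captions at most one frame every `min_interval` seconds and keeps only captions that differ from the previous one. `caption_fn` is a hypothetical hook that could wrap a BLIP captioner such as transformers' `BlipForConditionalGeneration` with `BlipProcessor`.

```python
from typing import Callable, Iterable, List, Optional, Tuple


def summarize_stream(frames: Iterable[Tuple[float, object]],
                     caption_fn: Callable[[object], str],
                     min_interval: float = 2.0) -> List[Tuple[float, str]]:
    """Caption at most one frame every `min_interval` seconds and keep only captions
    that differ from the previous one, so the running summary stays short."""
    summary: List[Tuple[float, str]] = []
    last_ts: Optional[float] = None
    for ts, frame in frames:
        if last_ts is not None and ts - last_ts < min_interval:
            continue  # too soon since the last captioned frame
        last_ts = ts
        caption = caption_fn(frame)
        if not summary or summary[-1][1] != caption:
            summary.append((ts, caption))
    return summary
```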

We experimented with various open-source models.

Experiments

How the System Works:

Camera Input: Multiple surveillance cameras are set up in various locations and send live footage to the central server room for analysis.

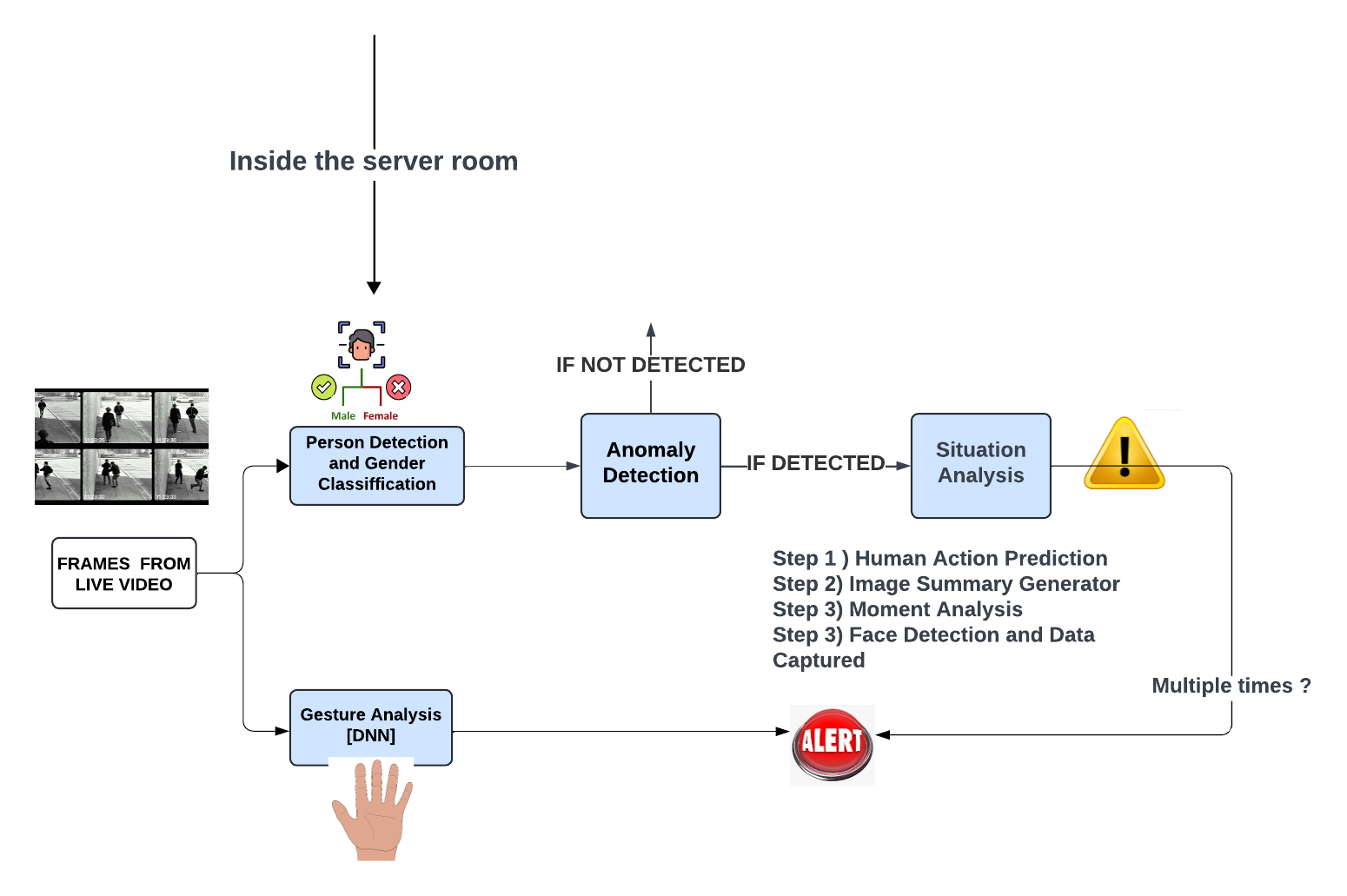

Inside the Server Room:

● Person Detection & Gender Classification: Using object detection models like YOLOv8, the system processes live frames to detect individuals in real time and classify them by gender.

● Gesture Analysis (Deep Neural Networks): The system identifies specific gestures, such as an SOS signal, using gesture analytics powered by deep learning models.

● Real-Time Gender Ratio: The gender distribution is calculated for each scene to monitor for situations where a woman is surrounded by men, which can be a red flag.
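
The gender-ratio check above can be sketched as two small functions over the per-frame gender labels. The "surrounded" rule used here (exactly one woman together with at least `min_men` men) and the label strings are hypothetical; the document does not give the exact condition.

```python
from typing import List


def gender_ratio(genders: List[str]) -> float:
    """Fraction of detected people classified as female (0.0 for an empty scene)."""
    return genders.count("female") / len(genders) if genders else 0.0


def surrounded_flag(genders: List[str], min_men: int = 3) -> bool:
    """Hypothetical red-flag rule: exactly one woman in the scene with at least `min_men` men."""
    return genders.count("female") == 1 and genders.count("male") >= min_men
```

This version counts everyone in the frame. A deployed version might count only the people whose boxes lie close to the woman's box, so that unrelated passers-by at the far edge of the frame do not trigger the flag.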

Action Chain

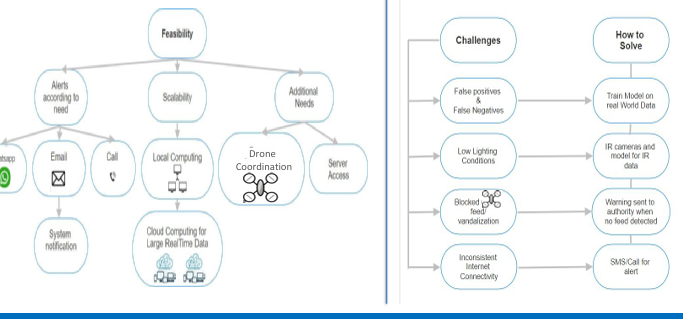

occurrences of unusual behavior in a short time), which can be escalated to an Alert.
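
Escalation based on repeated unusual behavior within a short time can be implemented as a sliding time window. The sketch below escalates once at least `threshold` unusual events fall within `window_s` seconds. Both defaults are hypothetical, and event timestamps are assumed to arrive in non-decreasing order.

```python
from collections import deque
from typing import Deque


class EscalationWindow:
    """Escalate to an alert when at least `threshold` unusual events occur
    within `window_s` seconds (both values are hypothetical defaults)."""

    def __init__(self, threshold: int = 3, window_s: float = 30.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self._events: Deque[float] = deque()

    def record(self, ts: float) -> bool:
        """Record an unusual event at time `ts` (seconds); return True if it should escalate."""
        self._events.append(ts)
        # Drop events that have fallen out of the window.
        while self._events and ts - self._events[0] > self.window_s:
            self._events.popleft()
        return len(self._events) >= self.threshold
```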

Alert Mechanism: The alert system contacts nearby police patrol vehicles, raises a siren, and informs higher officials via messages, emails, or calls, ensuring that law enforcement is notified in real time.
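
The document does not say how "nearby" patrol vehicles are chosen. One simple assumption is to pick the vehicle with the smallest great-circle (haversine) distance to the camera that raised the alert, as sketched below. The coordinates and vehicle IDs are hypothetical. A production dispatcher would more likely rank vehicles by road-network travel time than by straight-line distance.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_patrol(camera: Tuple[float, float], patrols: Dict[str, Tuple[float, float]]) -> str:
    """ID of the patrol vehicle closest to the camera that raised the alert."""
    return min(patrols, key=lambda pid: haversine_km(camera, patrols[pid]))
```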

Results

The results from the implemented models have been promising across the different tasks. The Hand Gesture Detection algorithm using a deep sequential neural network and MediaPipe recognized various hand gestures accurately in real time, enabling intuitive human-computer interaction. The Object Detection and Gender Prediction pipeline using YOLOv8 and YOLOv11 performed strongly in detecting individuals and classifying their gender. The Anomaly Detection algorithms (Isolation Forest, AutoEncoder, and Local Outlier Factor) identified outliers in the runtime data, improving the overall robustness of the system. The zero-shot classification model for Danger Detection adapted effectively to different threat scenarios, showing the versatility of the approach. The CLIP/BLIP-based Human Action Prediction model detected a wide range of human activities, contributing to a deeper understanding of human behavior in videos. Additionally, the BLIP-based Image Info Generator produced meaningful real-time summaries of images, adding context for faster decision-making. Overall, these implementations demonstrated a high level of accuracy, adaptability, and efficiency, making them suitable for various real-world applications.

Discussion

The results obtained from the various models reflect the effectiveness of the approaches chosen for each task. The Hand Gesture Detection algorithm demonstrated high accuracy in recognizing gestures in real time, highlighting the potential of combining deep sequential neural networks with MediaPipe for gesture-based interfaces. However, performance could be further improved by incorporating more diverse training data to handle edge cases and ensure robustness in varied environments.

The Object Detection and Gender Prediction model, built using YOLOv8 and YOLOv11, successfully detected individuals and classified gender with good precision. While the model performed well on standard datasets, challenges like gender ambiguity or occlusion could affect its performance in real-world scenarios, requiring further optimization and data augmentation to ensure reliability across diverse conditions.

The Anomaly Detection pipeline, integrating Isolation Forest, AutoEncoder, and Local Outlier Factor, detected outliers effectively, providing valuable insights into unusual patterns in the runtime data. Each algorithm has its own strengths. Isolation Forest is efficient on high-dimensional data, the AutoEncoder captures complex nonlinear patterns, and Local Outlier Factor is well suited to detecting local outliers in data whose density varies across regions. Future improvements could involve combining these models in a hybrid system that leverages the strengths of each approach, further enhancing detection accuracy.

The zero-shot classification model for Danger Detection proved highly adaptable, identifying various forms of danger without task-specific training data. This flexibility is a key advantage for real-time systems that must operate in dynamic environments. However, further testing and fine-tuning are needed to refine its contextual understanding and to minimize false positives and negatives.

The CLIP/BLIP-based Human Action Prediction model performed well in recognizing a range of activities, such as fighting, running, and laughing, across different video sources. However, its performance can be affected by motion blur, occlusion, and varying action speeds. Future work should focus on improving temporal sequence processing and robustness to these challenges.

Lastly, the BLIP-based Image Info Generator successfully produced real-time summaries, which can be valuable for applications requiring quick image interpretation, such as security and surveillance. However, while the summaries were useful, the system could be further enhanced by incorporating domain-specific knowledge to generate more detailed and accurate insights.

Conclusion

The implementation of these AI/ML models across various domains, including gesture detection, object detection, anomaly detection, danger detection, human action prediction, and image summarization, has shown positive results with significant potential for real-world applications. Despite the successes, there is room for improvement in robustness, accuracy, and adaptability, particularly when dealing with diverse and challenging environments. Future work will focus on optimizing model performance through data augmentation, hybridization of techniques, and fine-tuning based on specific use cases. These models have demonstrated their capabilities in enhancing human-computer interaction, improving safety and security systems, and enabling more effective analysis of multimedia data.

References

[1] A study on implementation of real-time intelligent video surveillance system based on embedded module. EURASIP Journal on Image and Video Processing, 2021. https://jivp-eurasipjournals.springeropen.com/articles/10.1186/s13640-021-00576-0

[2] Third Vision for Safeguarding Women with A Live Surrounding Update using Deep-Learning for Computer Vision. Anju, M R Abilash, V Balaji, J K Sayee Darshan. 2021 6th International Conference. https://ieeexplore.ieee.org/document/9609496

[3] Human action recognition and prediction: A survey. Yu Kong, Yun Fu. International Journal of Computer Vision 130(5), 1366-1401, 2022. http://surl.li/uwlavi


[4] An Algorithm of Occlusion Detection for the Surveillance Camera. Peng Shi, Bin Hou, Jing Chen, Yunxiao Zu. Scientific Programming, February 2021. https://www.researchgate.net/publication/349528272_An_Algorithm_of_Occlusion_Detection_for_the_Surveillance_Camera