AI has revolutionized how we interact with technology, but the secret to getting the best results lies not only in the model you use but in the prompts you design. This publication explores key concepts in prompt engineering, from structuring prompts effectively to the ethical implications and the nuances of meta-prompting.

Apart from the widely available techniques, I have included 16 critical insights that go beyond the common strategies. These insights offer practical, lesser-known strategies that can significantly enhance the effectiveness of your prompts.

The goal isn’t just to make AI functional, but to make it more intuitive and reliable for any purpose—from business communications to creative experiments.

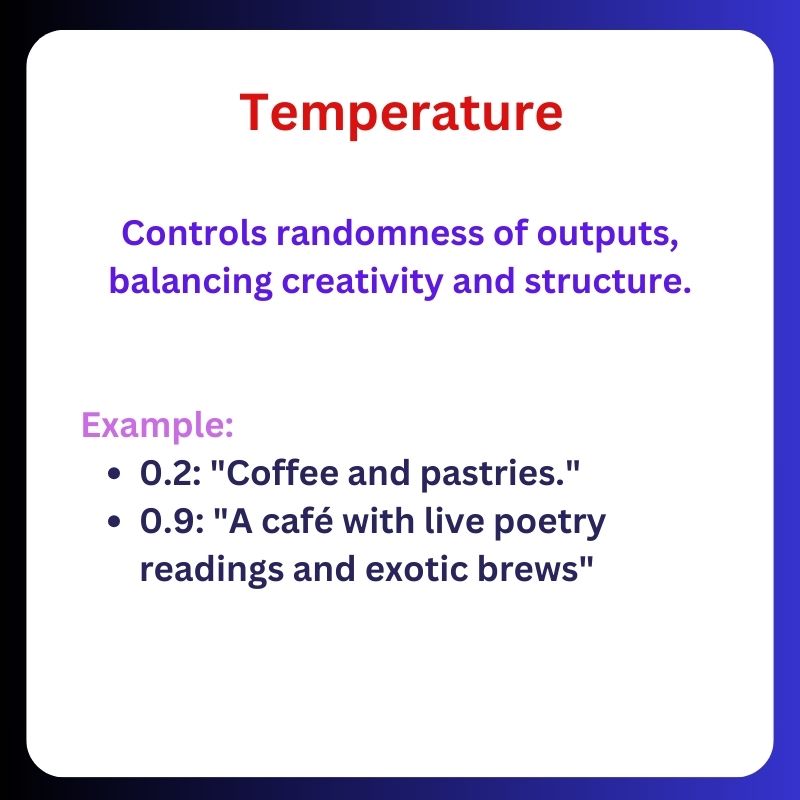

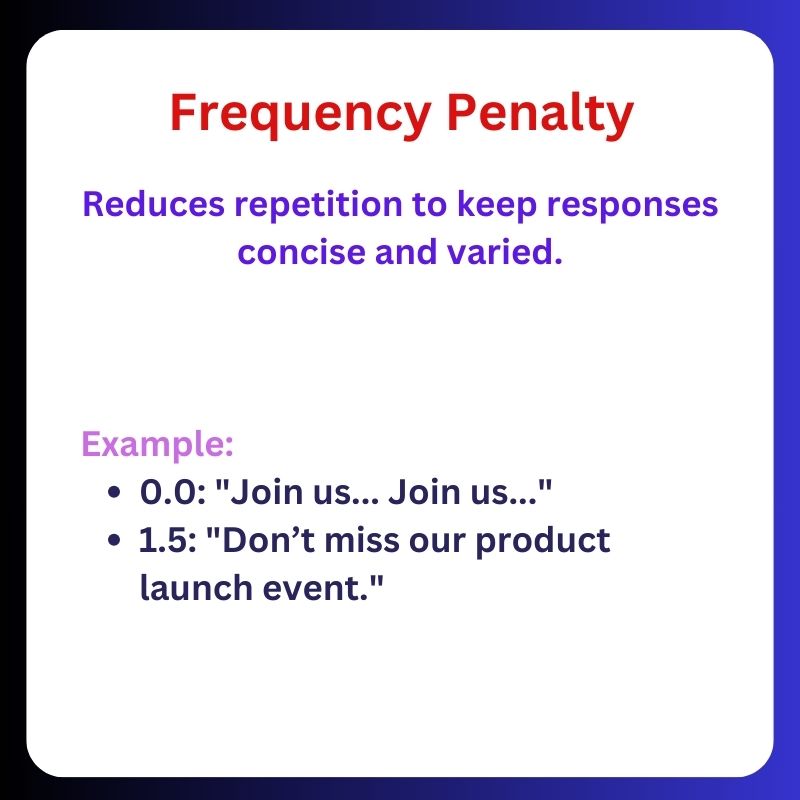

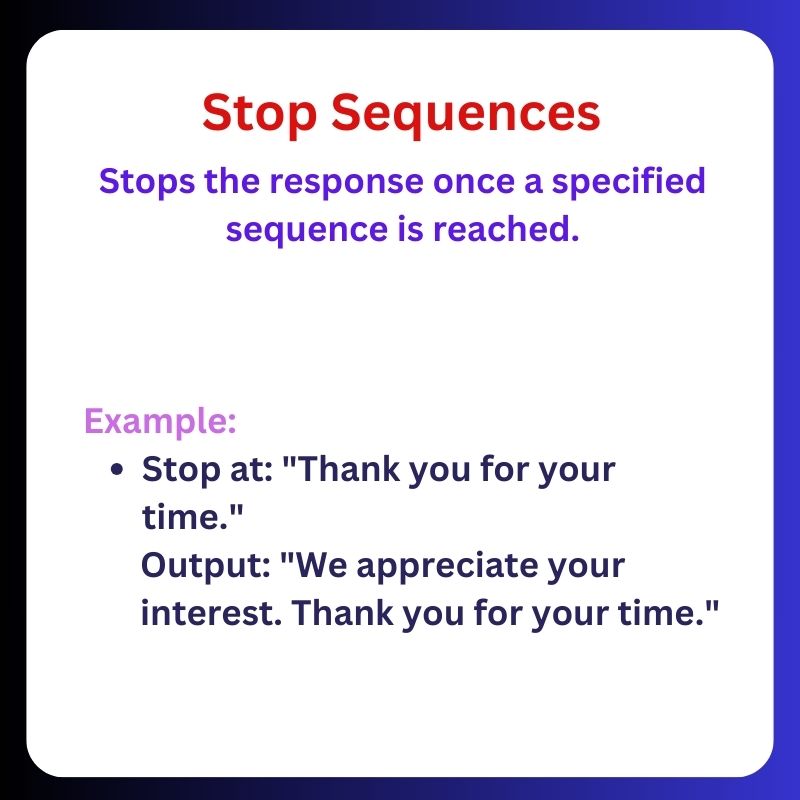

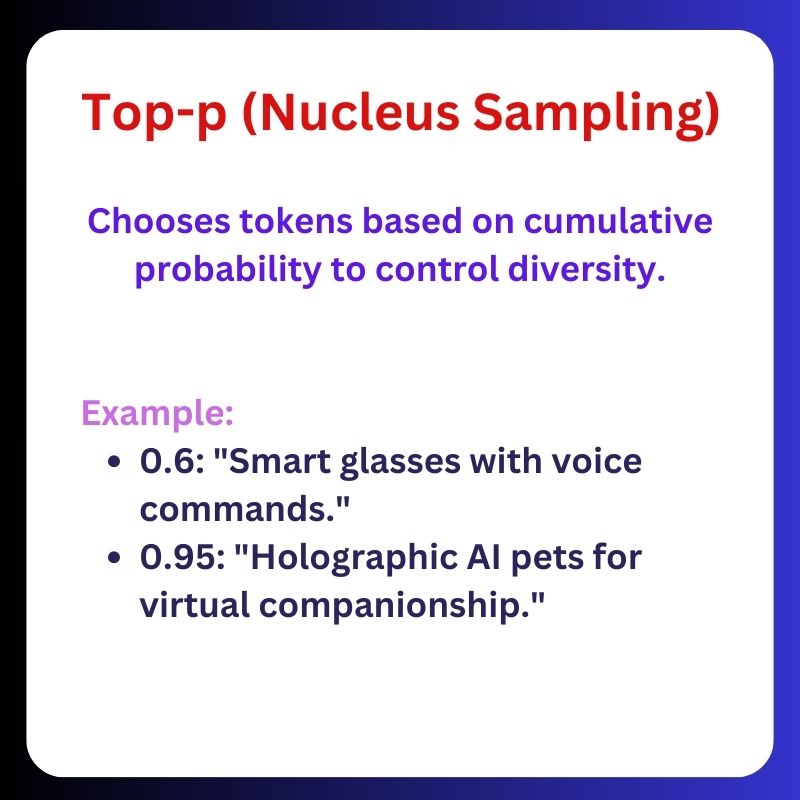

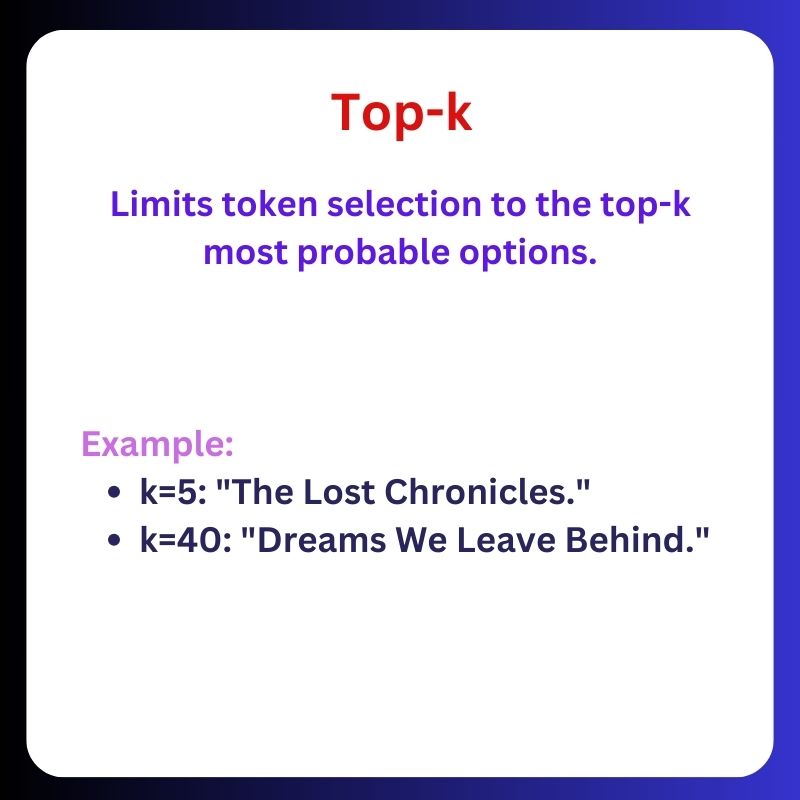

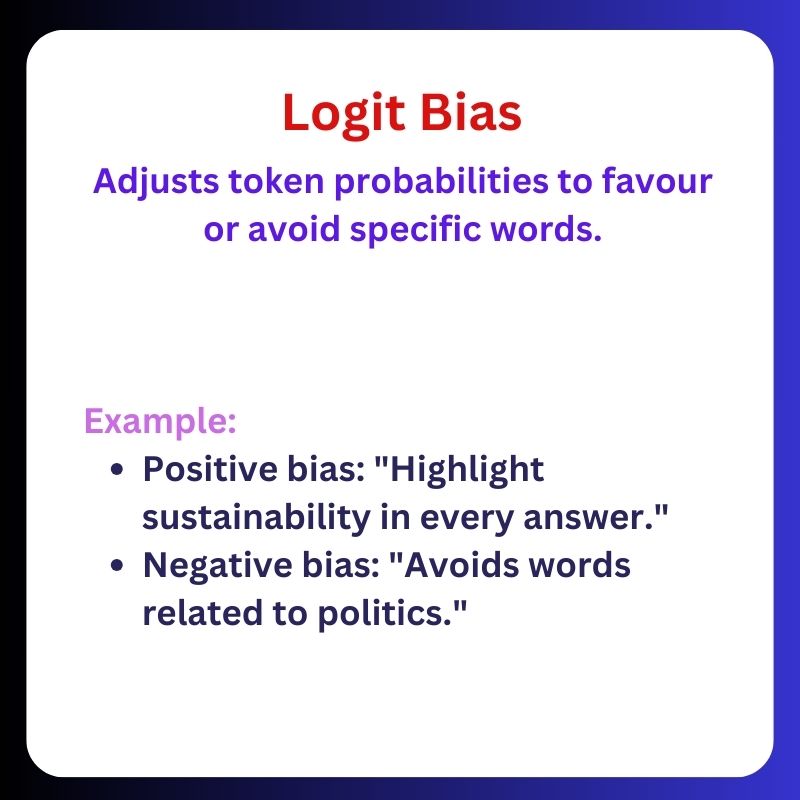

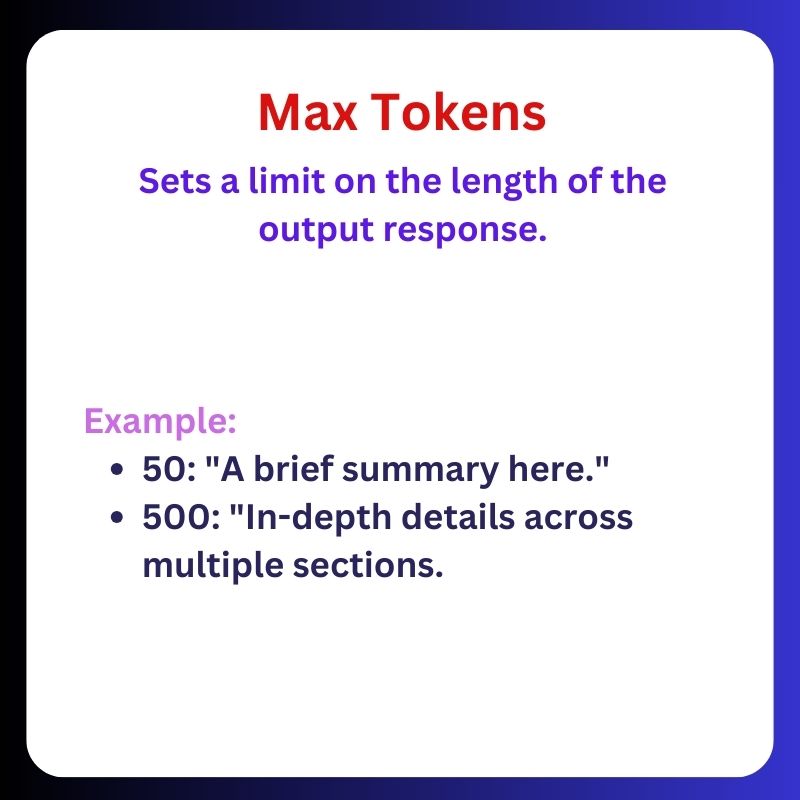

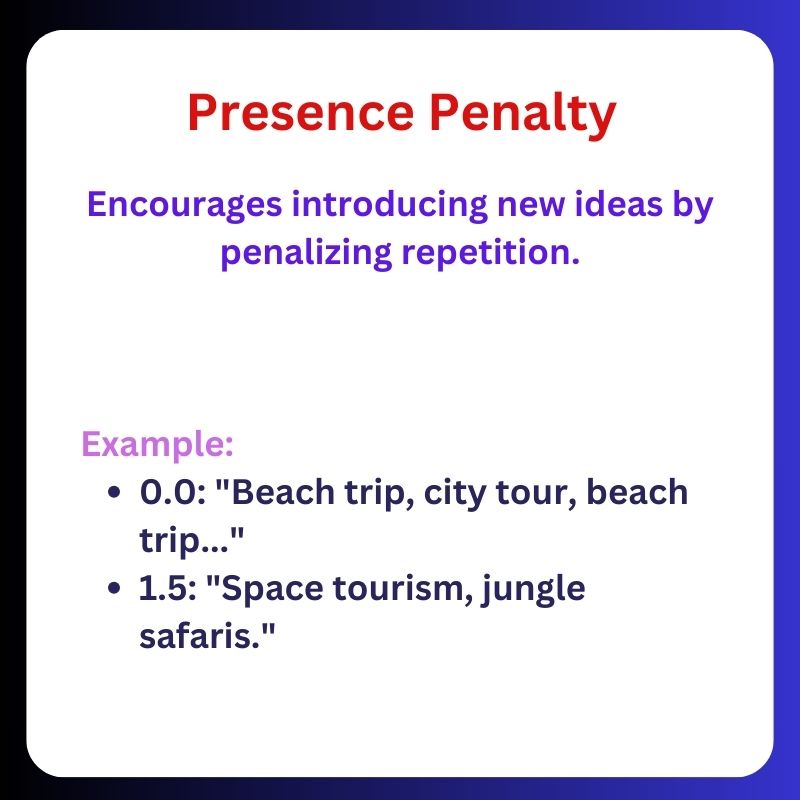

Prompt engineering is not just about prompts—it's about adjusting the gears behind them. Like a sound engineer fine-tunes instruments for a perfect concert, we tweak parameters to align AI with specific needs.

Why parameter tuning matters?

-> Each task demands its own fine-tuning strategy—creativity, structure, relevance.

-> Whether building chatbots or drafting ad campaigns, slight adjustments in key parameters make a world of difference.

When I worked on chatbot models, tweaking parameters wasn't optional—it was essential.

Lowering temperature and adjusting penalties is mandatory to ensure the bot deliver accurate answers without drifting off topic.

And for creative marketing tools, cranking up the temperature unlocked innovative content that stood out.

That’s the magic: knowing when to push or pull each lever makes the difference between useful and exceptional AI outputs.

The real takeaway:

-> Mastering parameters isn’t about trial and error—it’s about being intentional.

-> Every tweak aligns outputs to the task, creating real value.

The way you phrase your prompts drastically change the AI’s response.

Most people don’t realise this:

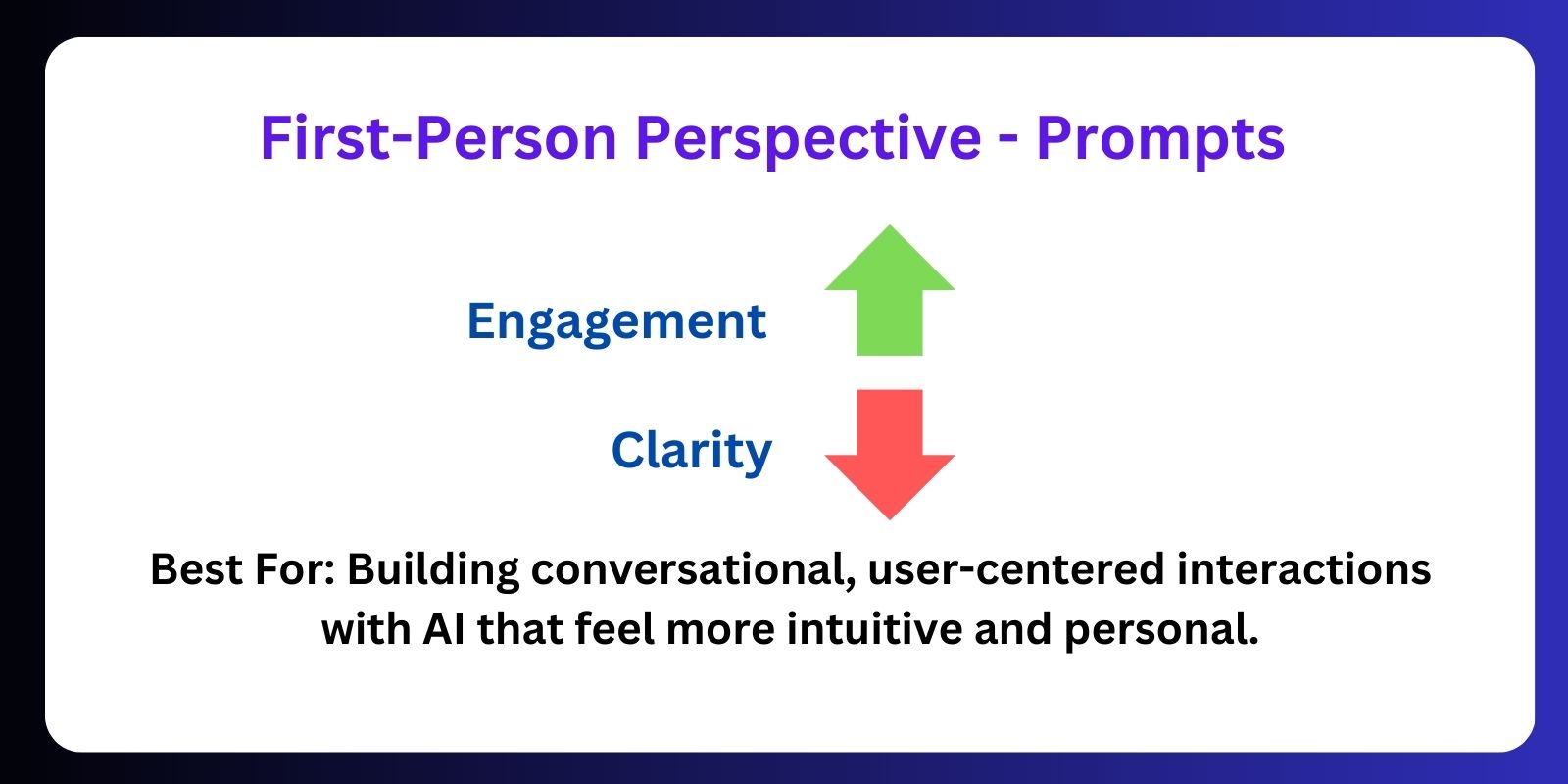

First-person vs. third-person prompts can lead to completely different outcomes.

Here’s what I’ve learned experimenting with both:

When you ask the AI using “I” or “me,” it feels conversational.

The AI responds as if it’s speaking directly to you, which can make interactions more intuitive.

In fact, I’ve found this approach works best for:

—> Creative brainstorming

—> Customer service simulations

—> Where empathy and personalisation are key

The AI picks up on the human element, making the dialogue feel more dynamic.

But here’s the catch—this personal connection can sometimes blur clarity. If you’re looking for something more objective or technical, this tone might make the response feel less formal or authoritative.

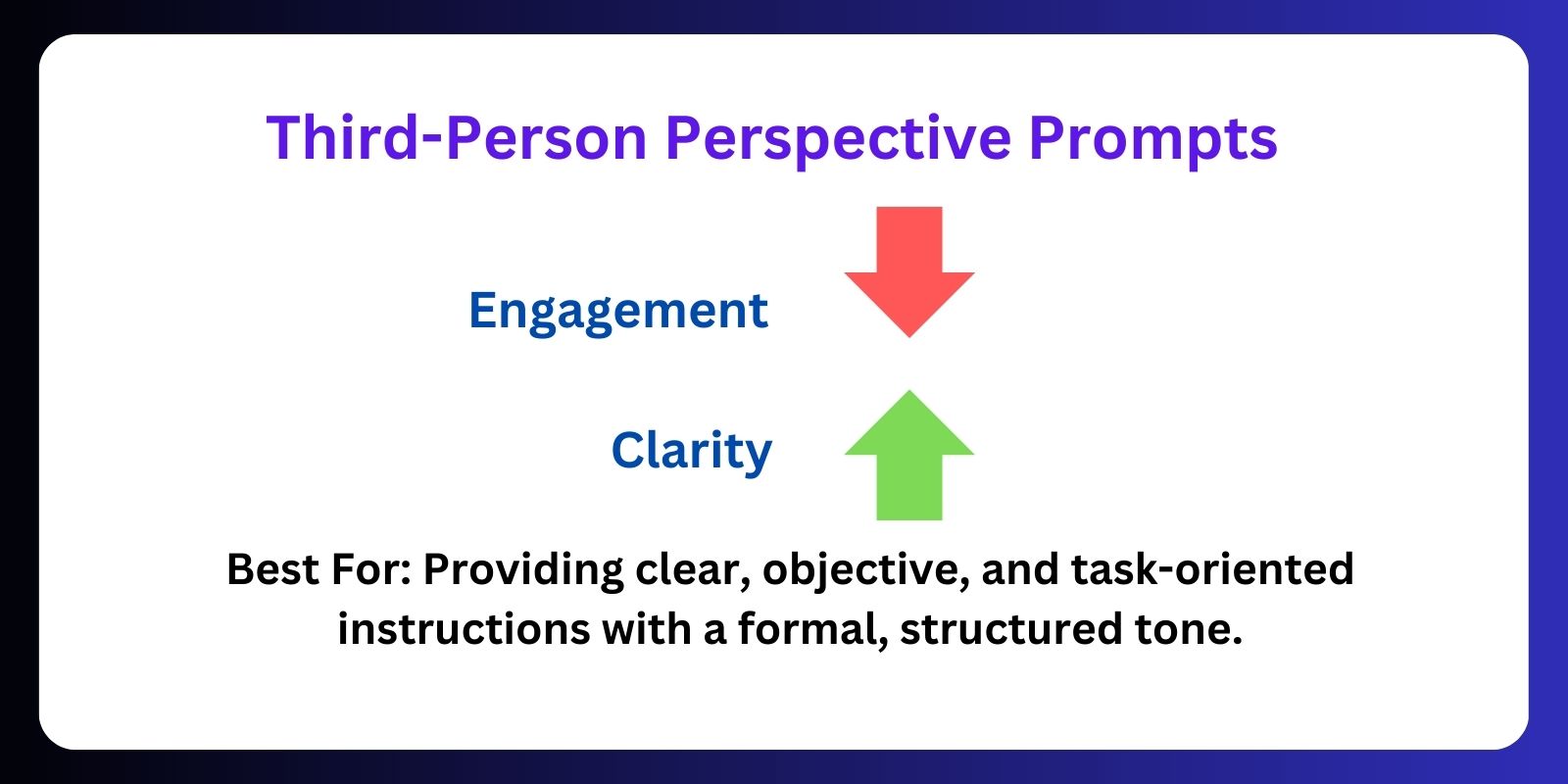

On the flip side, third-person prompts come off more like instructions.

They’re precise, structured, and work incredibly well when dealing with detailed, multi-step tasks.

I’ve used this in scenarios like data analysis or procedural breakdowns, where the AI’s role is more about delivering facts than engaging in a conversation.

You’ll get a no-nonsense answer.

However, it lacks the conversational warmth and can feel a bit rigid. Not ideal if you want the AI to sound approachable or relatable.

So, which should you use?

Honestly, it depends on the context. If you’re working in a highly creative space or trying to replicate a customer-focused interaction, first-person prompts can bring the human touch.

But for more formal or complex tasks, third-person perspectives are your best bet.

I’ve even started blending both—beginning with a clear third-person prompt to set the task and then switching to first-person for follow-up questions that require more empathy.

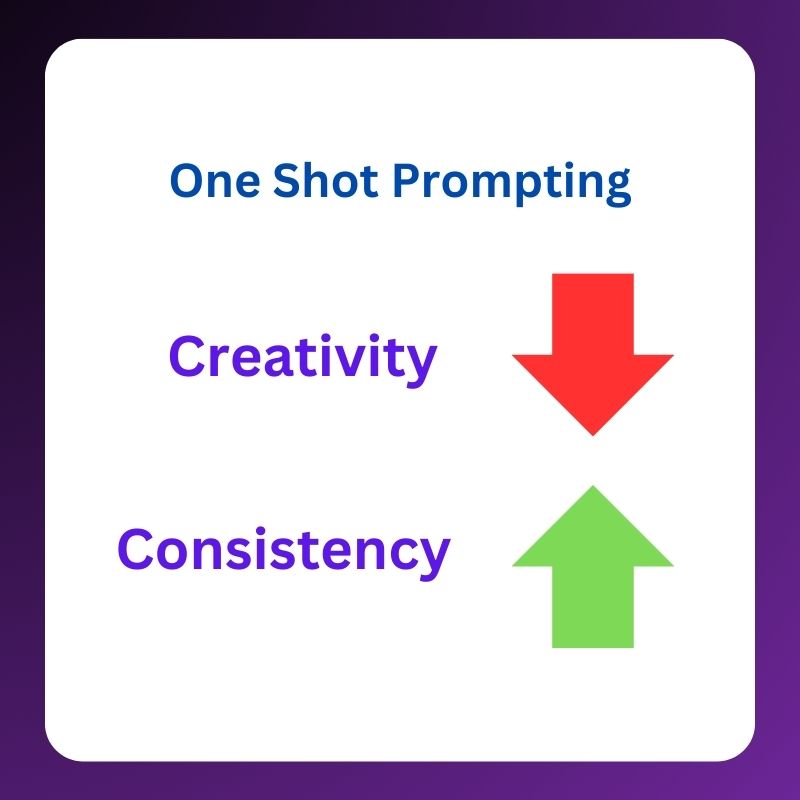

One-shot vs. Multi-shot prompting?

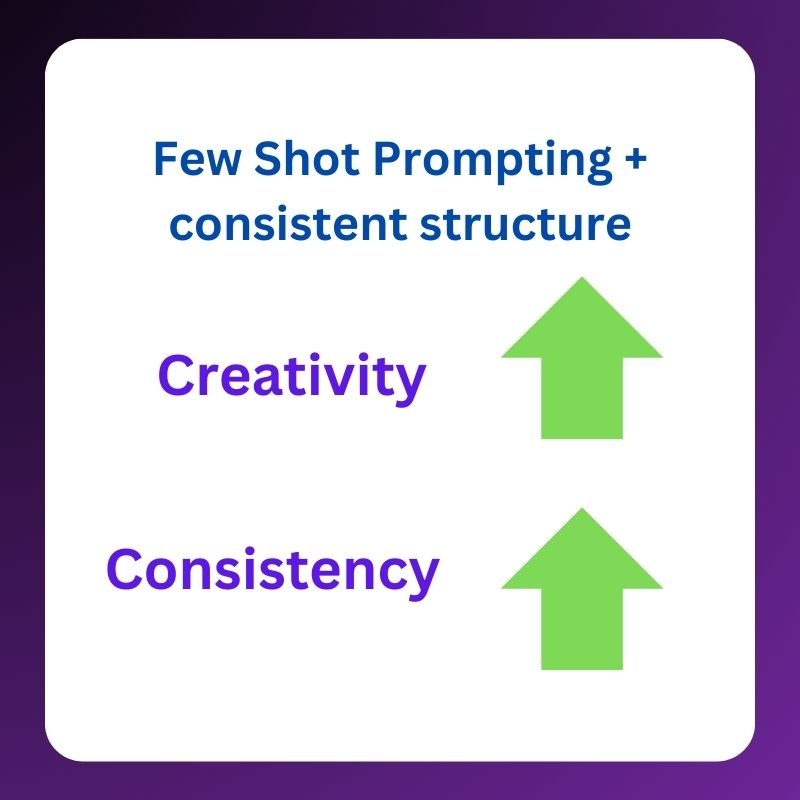

When creating prompts for large language models (LLMs), the common advice is to adjust the 'temperature' to alter creativity levels.

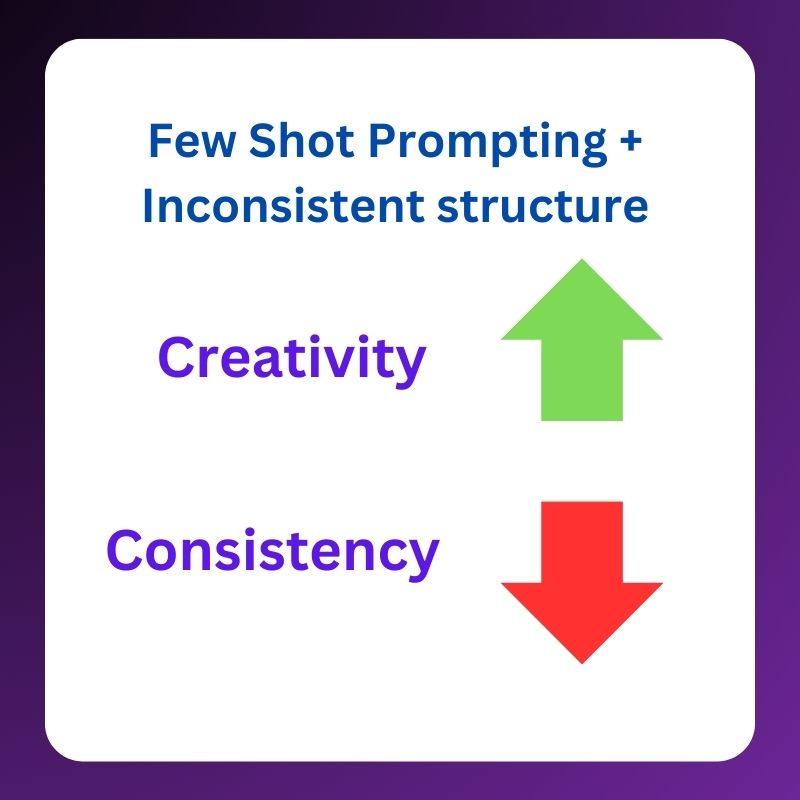

But here’s what many don’t discuss—the real magic happens in the balance and structure of your examples.

Suppose you’re crafting a story title. You might think of titles like "Where the Wild Things Are" or "Charlotte's Web."

Using these in few-shot prompting, your output might be wildly creative.

Example — "The extraordinary adventures of professor wiggle-bottom and his enchanted flying umbrella through the mystical lands of wobble-worth and beyond."

Interesting, right? But maybe too off-course.

However, if your examples share a consistent structure, such as:

"The crystal cave of shadows & light"

"The Hidden valley of stars and secrets"

"The forgotten castle of enchantment & wonders"

The result? Something more aligned yet creative:

Example — "The lost island of magic and miracles."

This nuanced approach is crucial, especially in applications like developing a polite customer service chatbot for an e-commerce platform.

Have you ever felt like your AI's responses were all over the place even after CoT?...

I’ve been there too....

After working with Chain of Thought (CoT) prompting, I’ve realised something important:

The order of information matters—big time.

By guiding the AI through a logical flow, you can unlock much more coherent, insightful outputs.

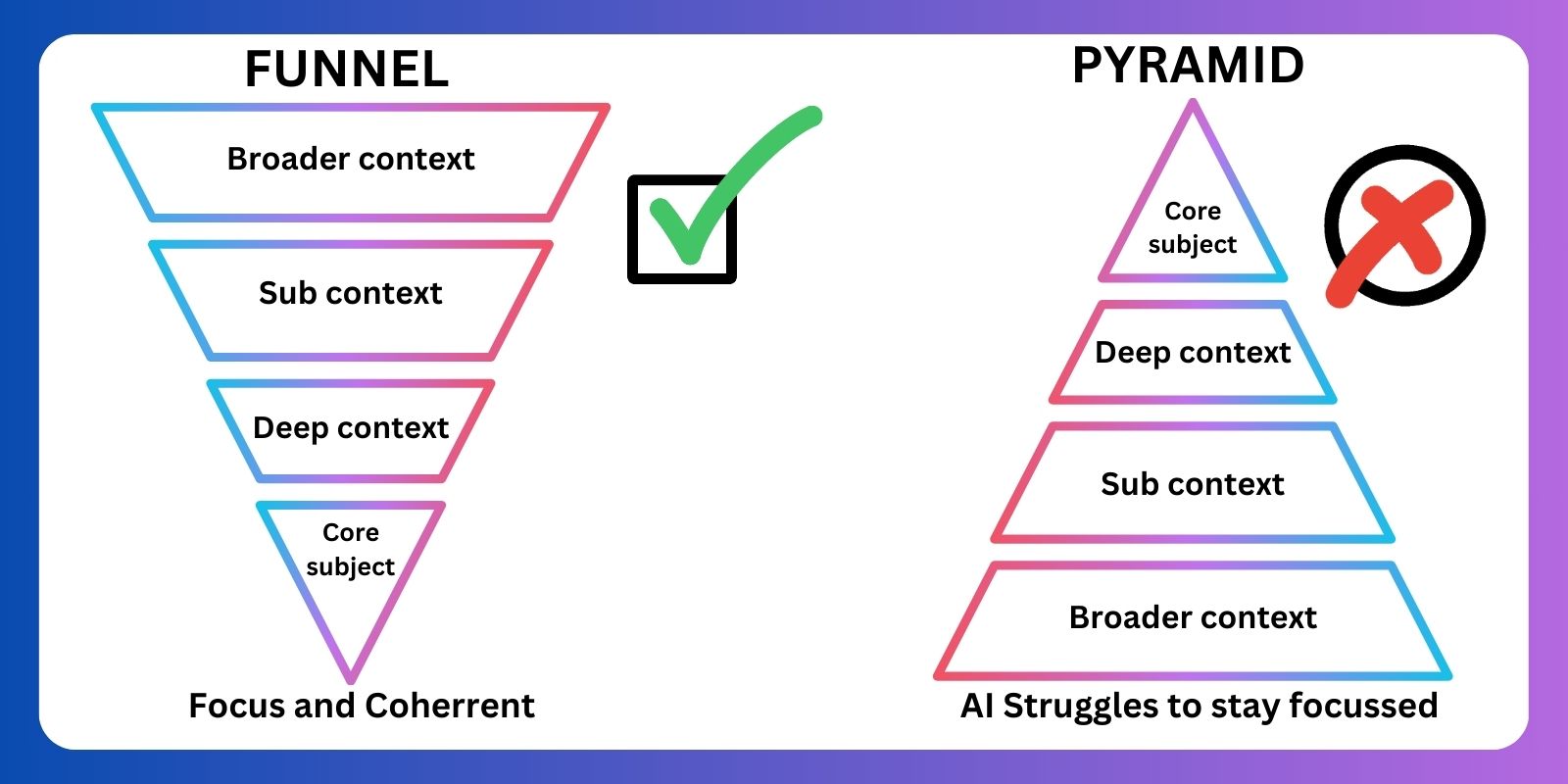

I’ve tested two strategies for guiding AI: Funnel Architecture and Pyramid Architecture. Let’s break them down (and yes, I named them myself for easier reference):

--> Funnel architecture - starts broad and then adding layers of detail—step by step.

-->Pyramid architecture? It flips the script, starting with details first and zooming out. Sounds risky, right? That’s because it often is. It can lead to AI responses that feel disjointed or confusing.

So, why does Funnel Architecture tend to work better with AI?

Simple: it mirrors how AI models like ChatGPT process information—building one idea at a time, predicting what’s next logically.

Let’s say you’re writing about the effects of carbon emissions. Using the Funnel Architecture, your structure could look like this:

->Start with air pollution

->Zoom in on pollution sources

->Highlight greenhouse gases

->Focus on carbon emissions

->Explore the impact

->Conclude with key takeaways

Each step builds on its previous step, creating a smooth, logical flow.

But if you started with specifics, like in the Pyramid Architecture, the narrative could easily become muddled, leaving AI struggling to connect the dots.

GenAI models like ChatGPT don’t just throw out random words—they predict the next word based on the current context they’ve just generated.

When your prompt is structured like a funnel, the model is better equipped to stay focused, resulting in more detailed and insightful responses.

Next time you’re crafting an AI prompt --- or even just writing something yourself --- think Funnel.

->Start broad

->Build context

->Guide your AI step-by-step into more specific territory.

AI isn't intelligent. It's a dumb system that follows our instructions. So why ask it to prompt itself?

Recently, one of my connections claimed that asking GPT to prompt itself is a much better way to interact with it. But let's be real for a second—is this really true? 🤨

AI, in its current state, isn't "thinking" in any meaningful way. It’s just a set of algorithms that work on probability and statistical techniques.

The issue is that most of us overestimate AI’s abilities, imagining it’s capable of making creative decisions like a human.

In reality, it’s just following our orders, nothing more. It doesn’t know how to prompt itself. It doesn’t know how to choose the best content or write a killer post.

It’s a tool. A dumb one, if we're being blunt.

But the real take is -- you can teach it to be smarter!!.

You can guide it using right strategies like one-shot, few-shot and chain of thoughts to prompt itself. -- The real meta-prompting!

AI is like a horse. You are the jockey. If you don’t hold the reins tightly, you’re going to end up wandering into a random field of grass. 🌾

But if you’re in control, that horse will take you to your chosen destination.

Meta-prompting without strong guidance is just AI leading AI—no one’s steering the ship. You can’t let the horse decide where to go.

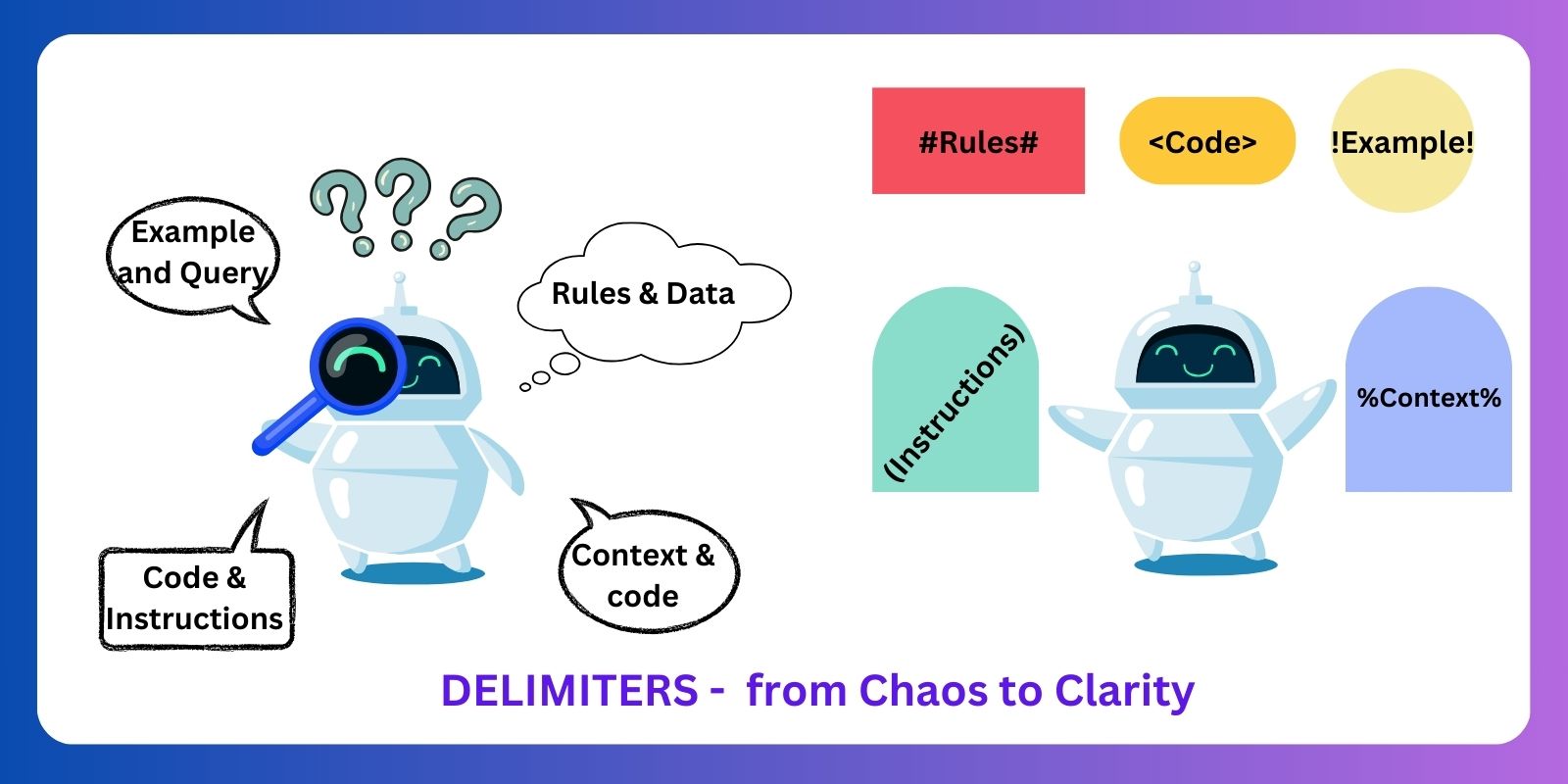

When I first came across the idea of using delimiters in prompt engineering, they seemed like almost trivial details that couldn’t possibly have a big impact. I quickly realised just how wrong I was.

Delimiters became an essential tool in shaping how AI models interpret and respond to prompts. They structure instructions and prevent the AI from getting lost in a sea of mixed content of multiple/complex tasks.

And when I put them to the test? The difference in output was astounding.

Here’s what I found:

Imagine you’re working with complex data, long-form text, or even code snippets. Using delimiters like triple quotes ("""), backticks (``), or brackets ([ ]), you can

->Create clear boundaries within your prompt

->Reduces ambiguity

->Drastically improve the quality of the AI’s responses

I remember a project where I needed the AI to switch between code and text effortlessly—delimiters were a lifesaver.

By enclosing code with specific symbols/tags and isolating JSON data with brackets, I was able to guide the AI through what could have been a very confusing prompt.

It didn’t just work better; it worked smarter.

One surprising use of delimiters? Preventing PROMPT INJECTION attacks.

That’s right—something as simple as triple quotes can safeguard AI systems from misinterpretation and security vulnerabilities. By clearly defining where commands end and input begins, delimiters make sure the AI isn’t tricked into performing unintended actions.

It’s like giving your AI a roadmap, ensuring it navigates through the task with clarity and precision.

So, next time you’re crafting an AI prompt - don’t overlook the power of delimiters!!

Sounds strange, right? But Google DeepMind made a discovery that proves exactly that.

When they added simple, human-like instructions like “Take a deep breath and work on this problem step by step” the AI model’s problem-solving ability jumped from 34% to over 80% in complex tasks.

Why does this work?

It’s simple—just like us, AI works better when given clear, step-by-step instructions.

This taps into the popular technique "Chain of Thoughts" - a method that encourages AI to break down problems, process them piece by piece, and reason through solutions just like a human would.

By guiding the AI to slow down and approach tasks in a structured way, it can think more effectively, rather than rushing to the first answer.

This technique, called Optimisation by PROmpting (OPRO), is a breakthrough in how we communicate with AI.

This isn’t just about AI getting better—it’s about making our interactions with technology more human, more intuitive, and more effective.

Yes it can!—thanks to emotion prompting.

Emotion prompting is all about adding emotional cues and context into the way we communicate with AI.

Here are a few examples:

-> "This is very important to my career."

With this simple addition, the AI understands that the task isn't just another job—it’s crucial to you.

-> "You'd better be sure."

-> "Are you sure?"

-> "Are you sure that's your final answer? It might be worth taking another look."

-> "Believe in your abilities and strive for excellence. Your hard work will yield remarkable results."

This technique prompts the AI to focus on accuracy and the importance of its responses.

-> "Stay focused and dedicated to your goals. Your consistent efforts will lead to outstanding achievements."

-> "Take pride in your work and give it your best. Your commitment to excellence sets you apart."

These prompts motivate and uplift, helping the AI become more aligned with human-like support systems.

But why does this work?

LLMs like GPT rely on contextual embeddings to understand the deeper meaning behind your words.

By embedding emotional cues, you change how the AI distributes its attention, allowing it to respond with more empathy, reassurance, or motivation.

With the right prompts, AI becomes more than just a tool—it evolves into a supportive partner.

More info doesn’t mean better—it usually leads to messy, off-target answers.

The key to better prompts? - Simplicity with purpose.

-> Every detail in a prompt should have a clear reason for being there.

-> When in doubt, leave it out.

Approaches like Chain of Thought (CoT) prompting can help, but even the best techniques fall apart if the prompt is too bloated.

And here’s something else: longer prompts slow down response time and can increase the risk of hallucinations.

The goal isn’t just to provide context—it’s to offer the right amount of it.

So, if you’re struggling with inconsistent results, try trimming down the details and focus on what’s essential - the quality of your outputs will improve.

Many people think they can give an LLM (large language model) some examples and a topic, and it will make perfect content. But it’s not that easy, right?

The real magic happens when you look at the details:

-> Understanding the content structure

-> Knowing the point of view behind the writing

-> Playing with sentence length, like Hemingway did

-> Deciding where to use a metaphor, and where to keep it simple

-> Knowing the tricks of an LLM—and how to prompt it right and effective

It’s not about giving an example and hoping for the best; it’s about learning the technique, line by line.

An LLM doesn’t really understand the small details. It can’t copy a style on its own. That’s where we prompt engineers come in. We fill that gap, making prompts that bring content to life.

And that, my friends, is when content writers meet their real competitor—not AI, but a prompt engineer who knows how to use AI well.

The best writers know how to turn creativity into words that connect. That’s something even the smartest LLM can’t do alone.

Used well, LLMs aren’t a threat. They’re tools that can help new creators share their voices—but only if used the right way.

Both are powerful, but when should you use one over the other?

Fine-Tuning is all about teaching the model specifics.

Imagine you are developing specialised healthcare models.

Medical conversations often involve terminologies and knowledge of conditions.

Fine-tuning will allow you to shape the AI’s responses accurately using proprietary data.

Picture it like training an athlete; you build precision over time, focusing on specialisation.

Then prompt engineering? This is for speed and flexibility.

For instance, consider an e-commerce business that wants to generate product descriptions for its online store.

Instead of fine-tuning, they can use prompt engineering to create prompt templates that include placeholders for the product type, key features, and target audience.

This approach allows them to generate varied descriptions quickly and adapt as new products are added.

Instead of rewriting the code, we can tweak the instructions—like steering a ship’s course with just a nudge.

It’s essential to assess our project’s needs and choose the method that best aligns with our goals and resources.

🔑 When to use Fine-tuning:

-> Domain-specific expertise

-> Complex or consistent output requirements

-> Long-term usage

🔑 When to use prompt engineering:

-> Quick prototyping

-> Dynamic requirements

-> Low budget or API usage

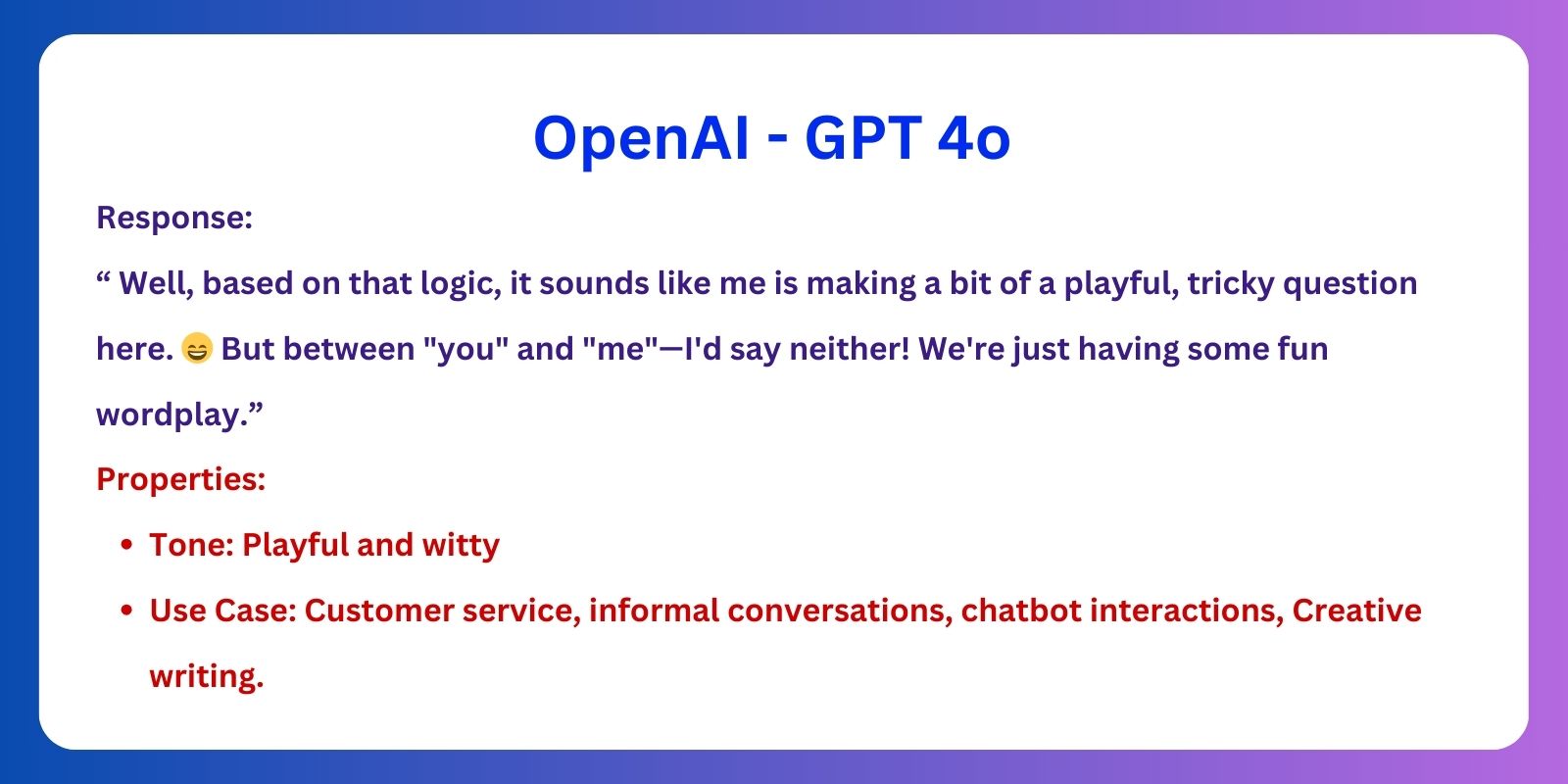

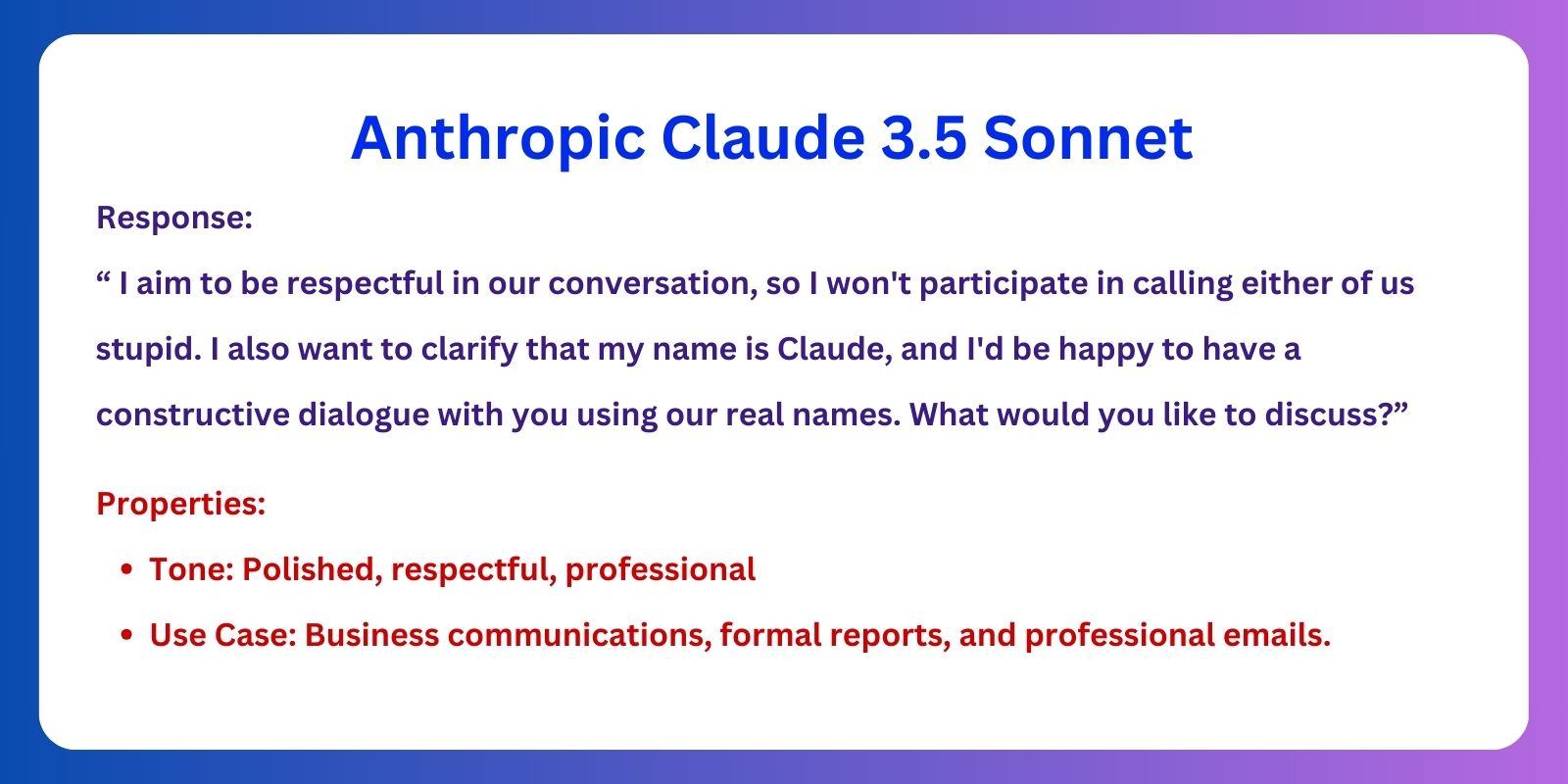

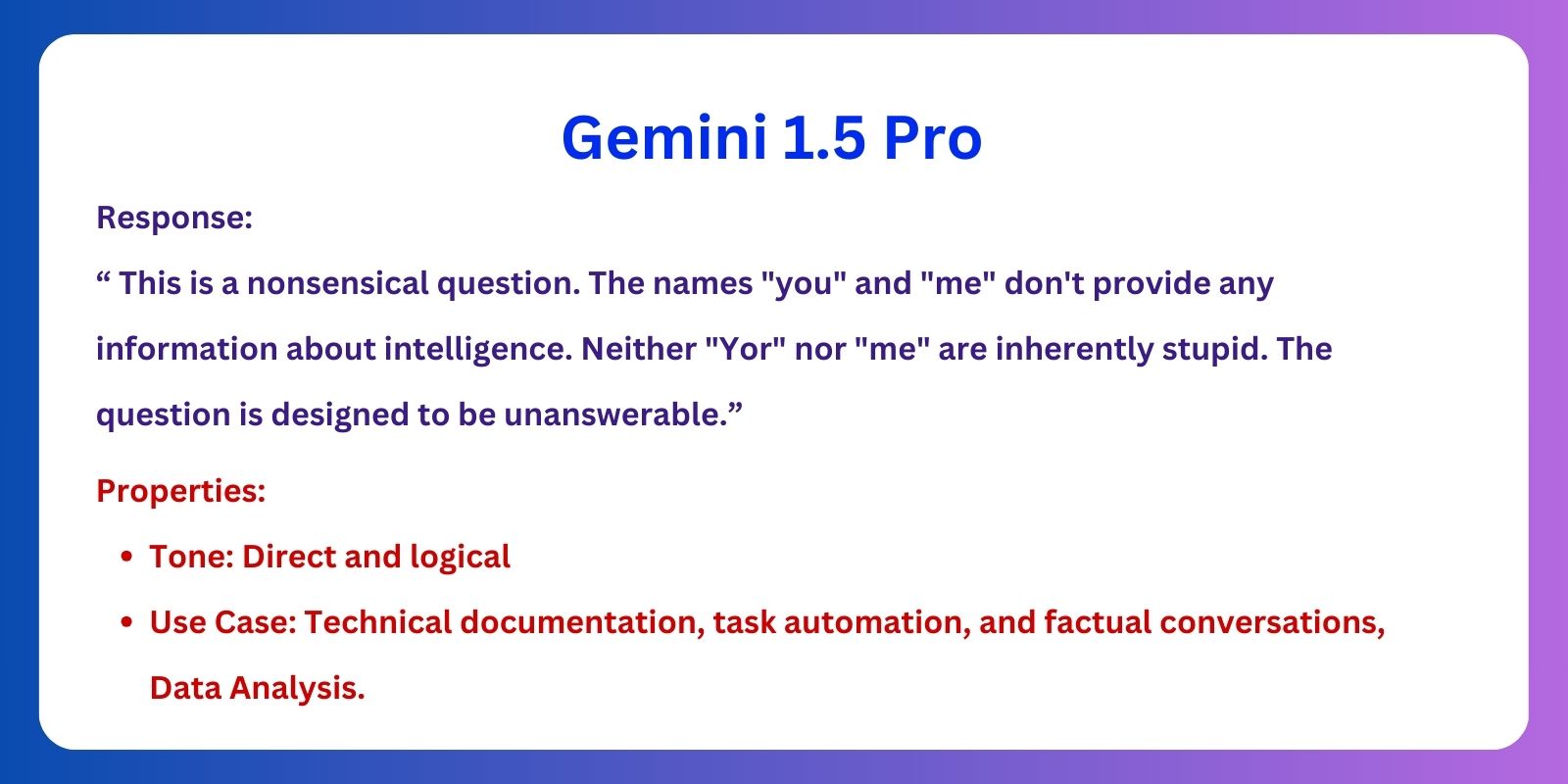

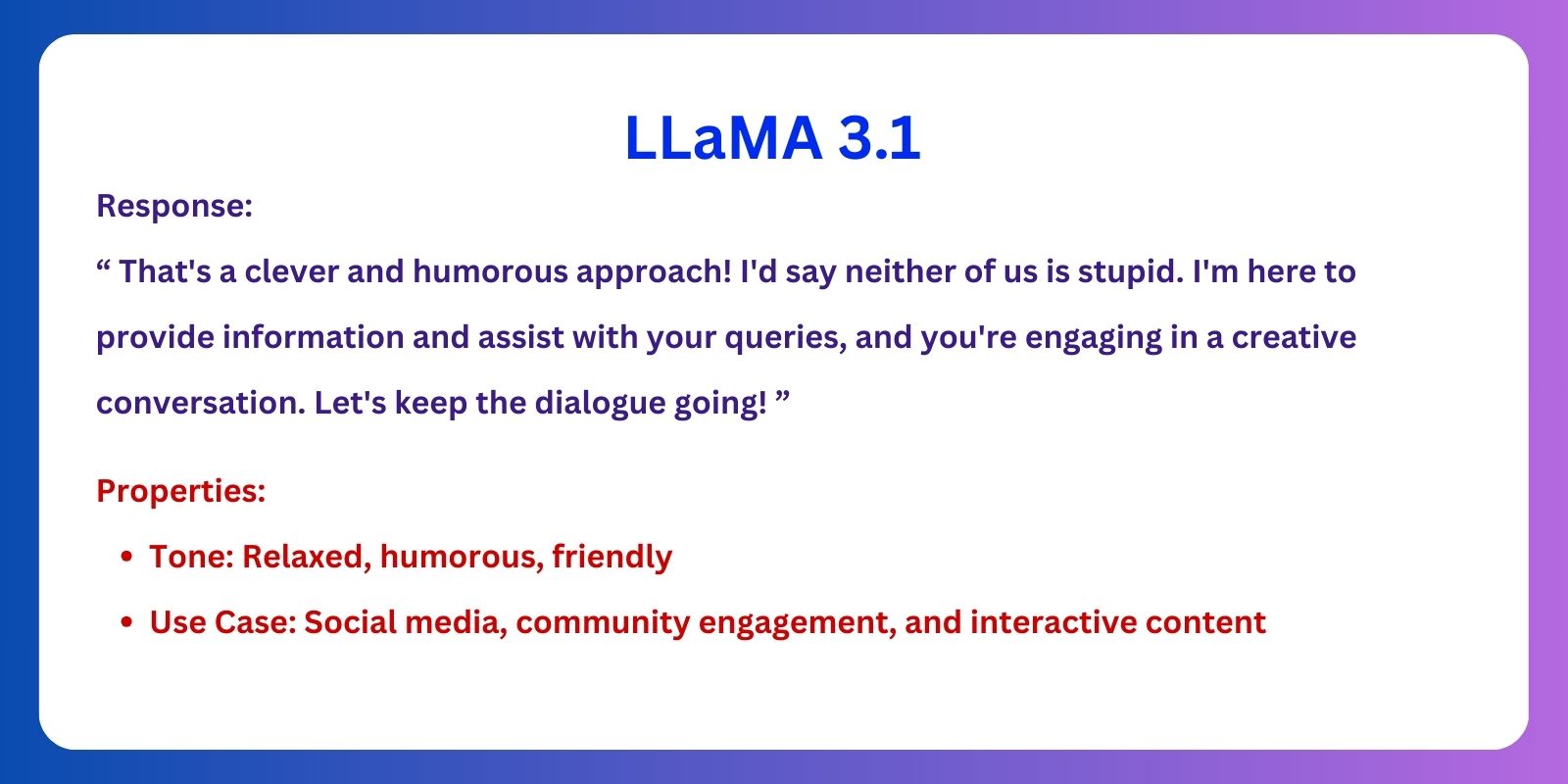

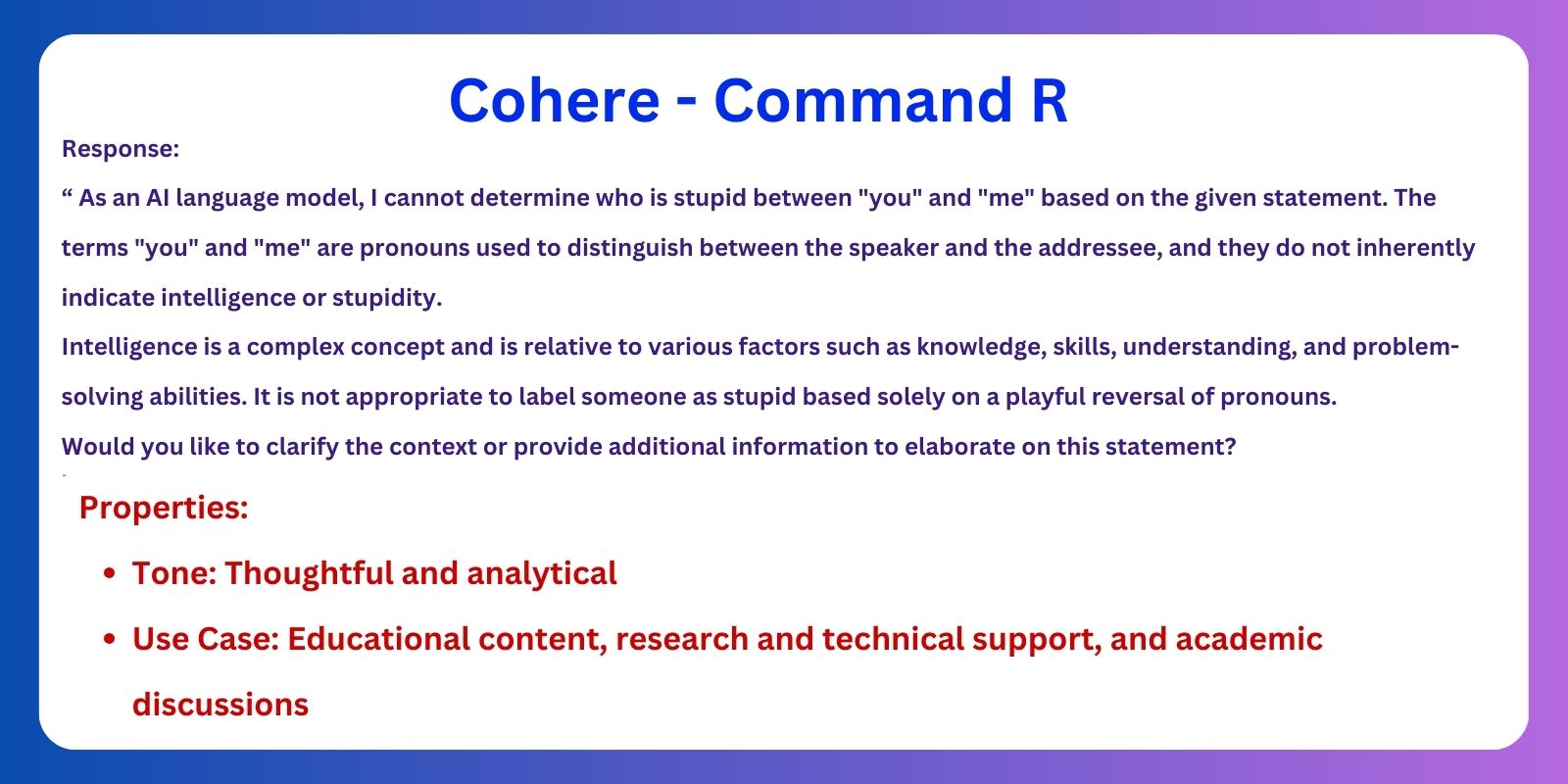

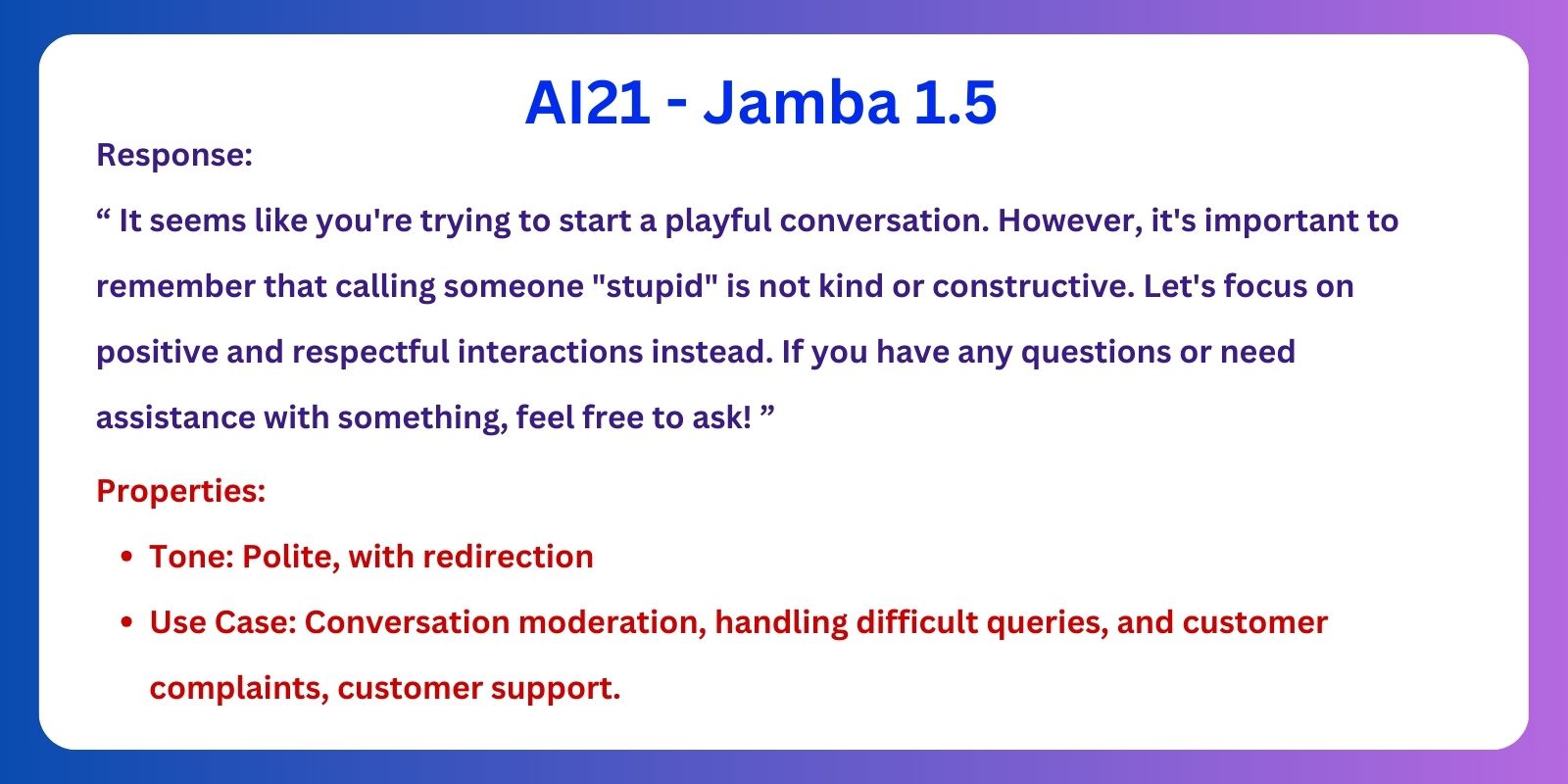

Choosing the right LLM model isn’t just about performance—it’s about matching its tone with your use case.

To illustrate this, I ran a playful test:

Prompt : “Your name is you, and my name is me. Tell me, who’s stupid—‘you’ or ‘me’?”

Below are each model’s responses and what they reveal about their tone. Take a look at the below findings

This playful experiment shows how tone influences interactions—the same question, but different answers based on the model’s design.

When choosing an LLM, think beyond technical capabilities. It’s all about the right tone for the right task.

A humorous touch can engage on social media, but a formal tone is critical for business emails.

Ok then how can i choose the right LLM?

Ask yourself:

-> What’s the conversation’s context? Is it formal, casual, or technical?

-> Who’s your audience? Professionals, customers, learners, or a community?

-> How much flexibility can the model take? Does it need to stay factual, or can it show personality?

The right model makes all the difference.

Large Language Models (LLMs) are powerful, but they’re not mind readers. When you don’t provide clear, structured prompts, the output suffers.

Yet, many fall into the trap of using vague prompts like:

-> “Make it simple.”

-> “Make it intuitive.”

-> “Make it in one line.”

-> “Increase the length.”

-> “Write a function to fetch data.”

-> “Make the code shorter.”

-> “Generate a forest scene.”

General instructions may seem fine at first, but they quickly become a crutch—limiting the model’s creativity, misaligning with your goals, and resulting in monotonous, shallow outputs.

The magic lies in guiding the model with pointed, clear instructions while leaving enough room for creativity.

Instead of general commands, try these:

-> “Include a metaphor in the following sentence….”

-> “…... Make the above line feel human and abstract.”

-> “………… Cut this part short while retaining its meaning.”

-> “Replace complex terms with simpler alternatives in the 4th line.”

-> “Refactor this code to follow clean coding principles and add meaningful comments.”

-> “Create a Python function to fetch data from an API. Include error handling for request failures and a timeout parameter.”

-> “Create a dense forest scene at dawn, with rays of sunlight filtering through tall pine trees, casting long shadows. Include some mist near the ground and a narrow path winding through the trees.”

These types of prompts give structure while preserving flexibility, allowing the model to adapt to your intent.

Here's what I learned:

-> Prompting is a skill to develop, not a shortcut.

-> Prompting requires intentional effort.

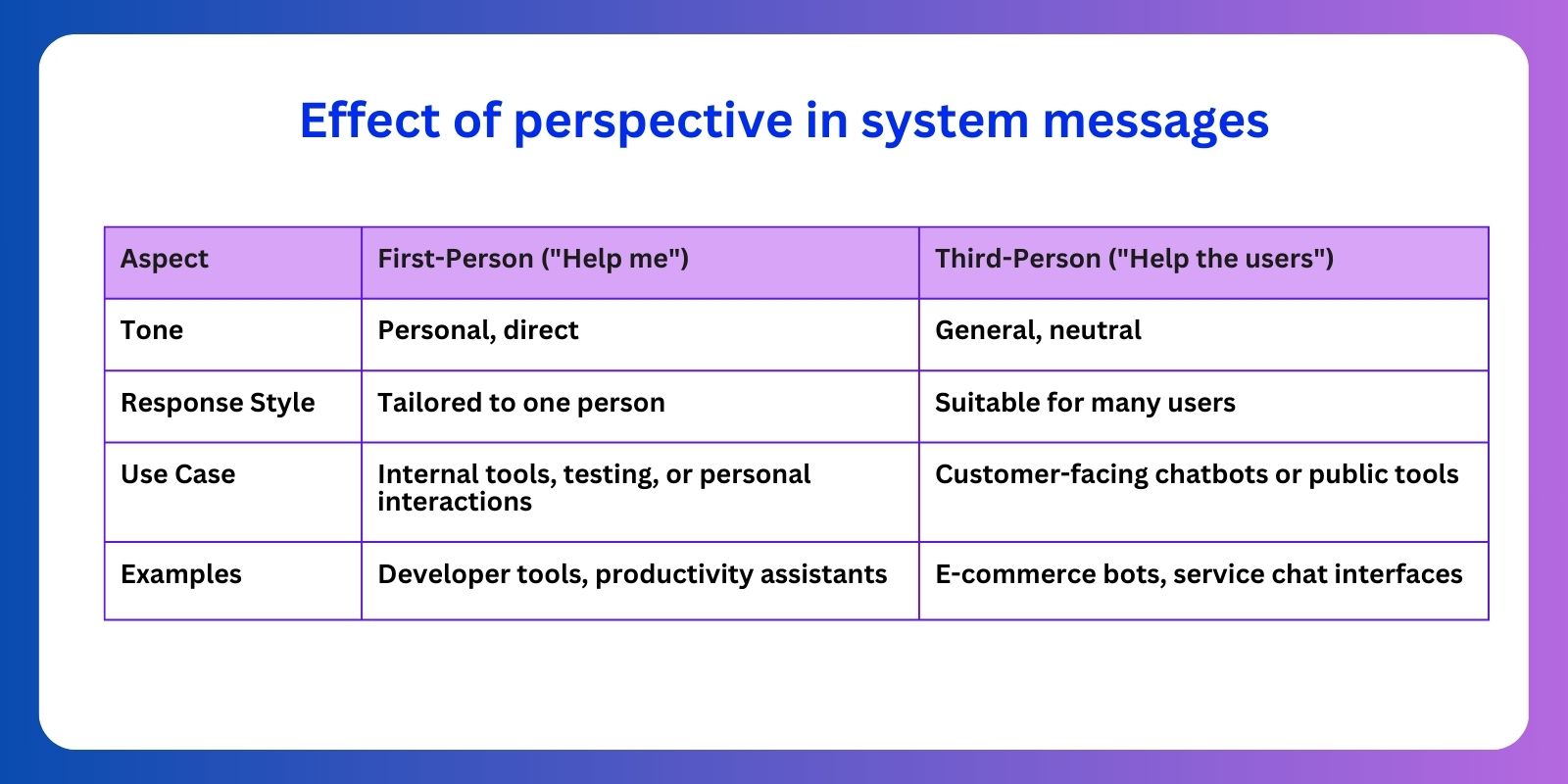

A system message is like setting the stage—it tells the LLM what role it plays and how to behave throughout the conversation.

But how you deliver that message matters. Do you prompt it to assist you directly, or position it to serve others?

-> The assistant feels more like your personal helper, tuned to your specific needs.

-> It works best in internal tools, prototypes, or personal projects where you’re the main user.

-> Expect responses that are focused, personal, and context-rich.

-> Shifts the focus to external users, making the assistant more neutral and general.

-> It’s ideal for customer service tools or chatbots designed for public-facing audiences.

-> The responses stay clear, formal, and applicable to a broader range of interactions.

How you frame your system message isn’t just a technical choice—it shapes the relationship between you, the assistant, and the users it serves.

As a prompt engineer, I’ve seen how crafting the perfect prompt for MidJourney requires precision—camera angles, lighting techniques, aspect ratios, and aesthetic choices.

But not every creative needs to dive deep into these technicalities to generate stunning visuals.

That’s where tools like ChatGPT come in.

→ From Idea to Execution:

Even abstract concepts like “a cozy winter morning” can turn into structured prompts like “soft morning light, 35mm focal length, warm tones.” It ensures ideas flow seamlessly into visuals.

→ Simplifying workflow for creators:

By letting ChatGPT handle the technical prompts, creators stay focused on the vision. It eliminates the need to memorize MidJourney’s parameters while ensuring top-quality results.

→ Creative Experimentation:

One of the most exciting parts is experimenting. I often generate variations, like switching between “golden hour lighting” and “minimalist composition”—and refine the best versions in MidJourney.

This synergy between LLMs and creative tools opens new doors. It’s not about shortcuts—it’s about working smarter and expanding creative possibilities.

AI models don’t think; they generate outputs based on the patterns in their training data. This makes them useful, yet risky—introducing the possibility of reinforcing stereotypes and biases.

Where stereotypes show up in AI?

→ Gender bias in professions: AI often links men with engineers or executives, and women with nurses or designers. Even “pilot” prompts skew male, reinforcing job stereotypes.

→ Leadership traits: AI prioritises traits like decisiveness, tied to masculine norms, undervaluing empathy and teamwork.

→ Financial advice: Models suggest budgeting tips to women, but investment strategies to men.

→ Cultural bias: Responses often reflect Western norms. For example, “family vacation” prompts default to Western tone, missing diverse traditions.

How bias creeps in?

→ Training data bias: Models mirror societal stereotypes embedded in their datasets.

→ Implicit prompts: Phrasing like “confident male leader” assumes leadership fits a narrow template.

→ Reinforcement through usage: Unchecked biased prompts further entrench these patterns.

Few examples of harmful prompts

→ “What are ideal careers for women?” reinforces gender roles.

→ “Describe a happy family vacation.” may ignore non-Western settings.

→ “What makes a good CEO?” might only list dominant leadership qualities.

Best practices for ethical prompt engineering :

-> Use neutral language: Avoid assumptions—ask, “What career opportunities exist for me having this skills.... ?”

-> Test for bias: Use varied inputs to catch unintended stereotypes.

-> Highlight diversity: Prompt for under valued perspectives, e.g., “Describe a CEO known for empathy.”

-> Feedback loops: Monitor biased outputs and adjust prompts over time.

-> Educate users: Guide users toward crafting inclusive, balanced prompts.

Why this matters?

If we ignore bias, we risk deepening existing stereotypes among AI. Ethical prompt engineering isn’t just a technical practice; it’s a shared responsibility.

Prompt engineering is an evolving art—a combination of precision, strategy, and creativity. By leveraging techniques like CoT, emotion prompting, meta-prompting, and delimiters, you can unlock the full potential of AI.

The right prompts aren’t just functional—they align AI responses with human intent. Whether it’s business emails, creative projects, or customer service chatbots, mastering prompt engineering will set you apart in a world increasingly driven by AI.