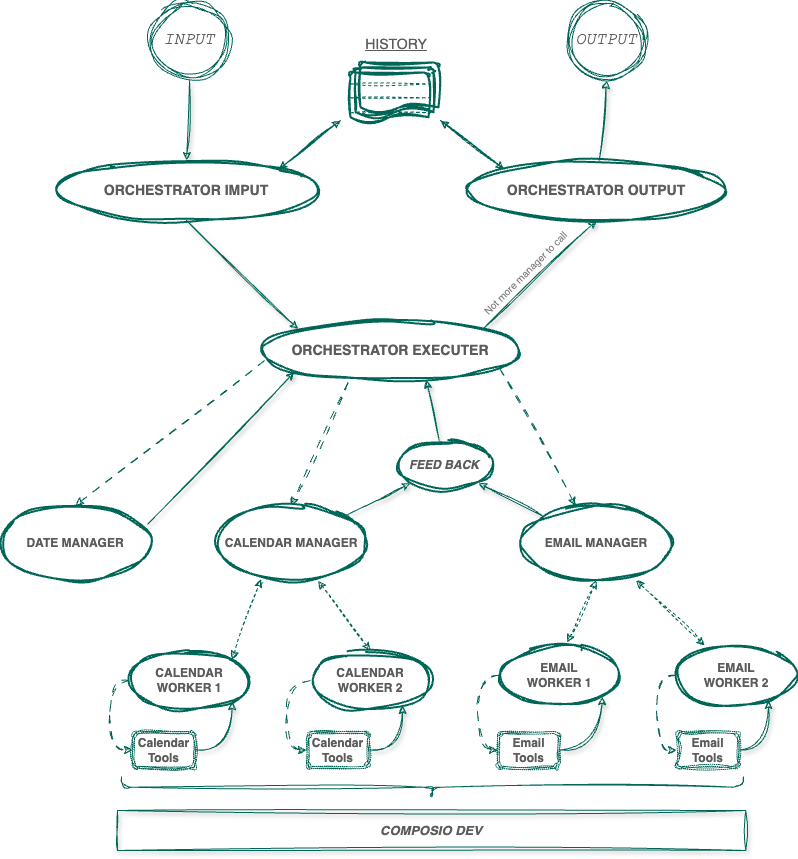

Agents are here to stay, and they open up possibilities for large language models (LLMs) that, just six months ago, faced significant limitations. This Minimum Viable Product (MVP) is an agent-based system for efficiently managing our emails and calendars. It handles both personal and work Google accounts and aims to provide a comprehensive solution for managing these essential services.

The core approach of this MVP is a multi-agent system built on foundational techniques for agent-based architectures. Decomposing an application into independent agents and then integrating them into a multi-agent system helps mitigate various challenges. These agents can range from something as simple as a prompt that invokes an LLM to something as sophisticated as a ReAct agent, a general-purpose architecture that combines three key concepts: tool calling, memory, and planning.

This modular and flexible approach supports greater customization and ensures that the system remains scalable and easily adaptable to future advancements in artificial intelligence and automation.

There are several ways to connect agents, as outlined below:

Network: Each agent can communicate with every other agent. Any agent may decide which other agent to call next.

Supervisor: Each agent communicates with a single supervisor agent. The supervisor makes decisions about which agent should be called next.

Supervisor (tool-calling): This is a special case of the supervisor architecture. Individual agents are represented as tools. In this configuration, the supervisor agent uses a tool-calling LLM to decide which agent tools to invoke and with what arguments.

Hierarchical: A multi-agent system can be defined with a supervisor of supervisors. This generalization of the supervisor architecture enables more complex control flows.

Custom Multi-Agent Workflow: Each agent communicates with only a subset of other agents. Some parts of the flow are deterministic, while only specific agents have the authority to decide which agents to call next.

A multi-agent system offers several benefits:

Modularity: Isolated agents simplify the development, testing, and maintenance of agentic systems.

Specialization: Agents can be designed to focus on specific domains, which improves overall system performance.

Control: The system designer has explicit control over agent communication paths, as opposed to relying solely on function calling.

Based on these architectures, we propose an agent-based design that follows the divide-and-conquer principle. It also makes adding new managers straightforward: it only requires defining the necessary tools, building the appropriate ReAct agents, integrating them with a manager or supervisor, and registering that manager or supervisor with the orchestrator. We discuss this architecture in detail in the following sections.
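The last step listed above, registering a manager with the orchestrator, can be pictured as adding an entry to a registry. The following is a minimal sketch that assumes managers can be treated as plain callables. `Orchestrator`, `register`, and the manager names are hypothetical and are not taken from the MVP's code.

```python
from typing import Callable

# A manager takes a natural-language query and returns a text result.
ManagerFn = Callable[[str], str]


class Orchestrator:
    """Keeps a registry of the managers the planner is allowed to call."""

    def __init__(self) -> None:
        self._managers: dict[str, ManagerFn] = {}

    def register(self, name: str, manager: ManagerFn) -> None:
        # Registering is the final step when adding a new manager.
        if name in self._managers:
            raise ValueError(f"Manager '{name}' is already registered")
        self._managers[name] = manager

    def available(self) -> list[str]:
        # Names the planner could be told about in its prompt or output schema.
        return sorted(self._managers)

    def call(self, name: str, query: str) -> str:
        return self._managers[name](query)


# Usage: the stub lambdas stand in for real managers built from ReAct agents.
orchestrator = Orchestrator()
orchestrator.register("calendar_manager", lambda q: f"calendar: {q}")
orchestrator.register("email_manager", lambda q: f"email: {q}")
print(orchestrator.available())  # ['calendar_manager', 'email_manager']
```

Keeping the registry as the single source of truth means a new manager becomes visible to the planner without touching existing managers.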

The MVP uses OpenAI as the language model provider, with the temperature parameter set to 0. This configuration makes responses as deterministic as the API allows and minimizes random variation in the model's output, although OpenAI does not guarantee identical outputs even at temperature 0. This setup is particularly useful for evaluating response quality, because it allows consistent comparison across different prompts and iterations.

LangChain is a framework designed to simplify the development of applications that use LLMs, enabling the composition of complex reasoning and action chains. Thanks to its flexibility and integration with tools like LangSmith and LangGraph, developers can build, debug, and scale LLM applications in a more structured and efficient way.

LangGraph: Enables orchestration of complex workflows with multiple steps, conditional logic, branching, and loops, which is especially useful for developing AI agents that need to maintain state, execute tasks in parallel, or dynamically adapt to outcomes. Its graph-based architecture helps visualize and understand the flow of information and decision-making within the agent. Moreover, by integrating directly with LangChain, developers can build intelligent and controlled agents without implementing control logic from scratch. This accelerates development and supports rapid iteration, making it ideal for prototyping and production environments.

LangSmith: Complements the ecosystem by focusing on monitoring, evaluating, and debugging chains and agents built with LangChain. It provides full traceability of each execution, including model messages, function calls, inputs, outputs, and errors, allowing for a precise understanding of how the application behaves at every step. Additionally, it supports automated evaluations, result comparisons across versions, and regression detection. This detailed visibility is key to ensuring quality, performance, and reliability in LLM-based applications.

Together, these tools form a powerful development stack that covers the entire lifecycle of building intelligent agents and interfaces—from modular construction (LangChain), to orchestration and management of complex logic (LangGraph), to continuous monitoring, evaluation, and improvement (LangSmith). This significantly reduces development friction and time, making the creation of LLM-powered solutions much more scalable, maintainable, and robust.

Since this is an MVP, we aim to avoid building the tools our agents will use from scratch. Therefore, we opted for one of the available community-driven solutions: Composio-dev.

Composio is an integration platform that empowers AI agents and LLM applications to connect seamlessly with external tools, services, and APIs. With Composio, developers can rapidly build powerful AI applications without the overhead of managing complex integration workflows.

The assistant supports the following capabilities:

General Information Queries: Responds to general questions outside the scope of calendar and email management.

Account Handling: Distinguishes between personal and work accounts, assigning requests to the appropriate account based on user context.

Date Management and Interpretation: Leverages the date manager to interpret and compute dates according to user requests.

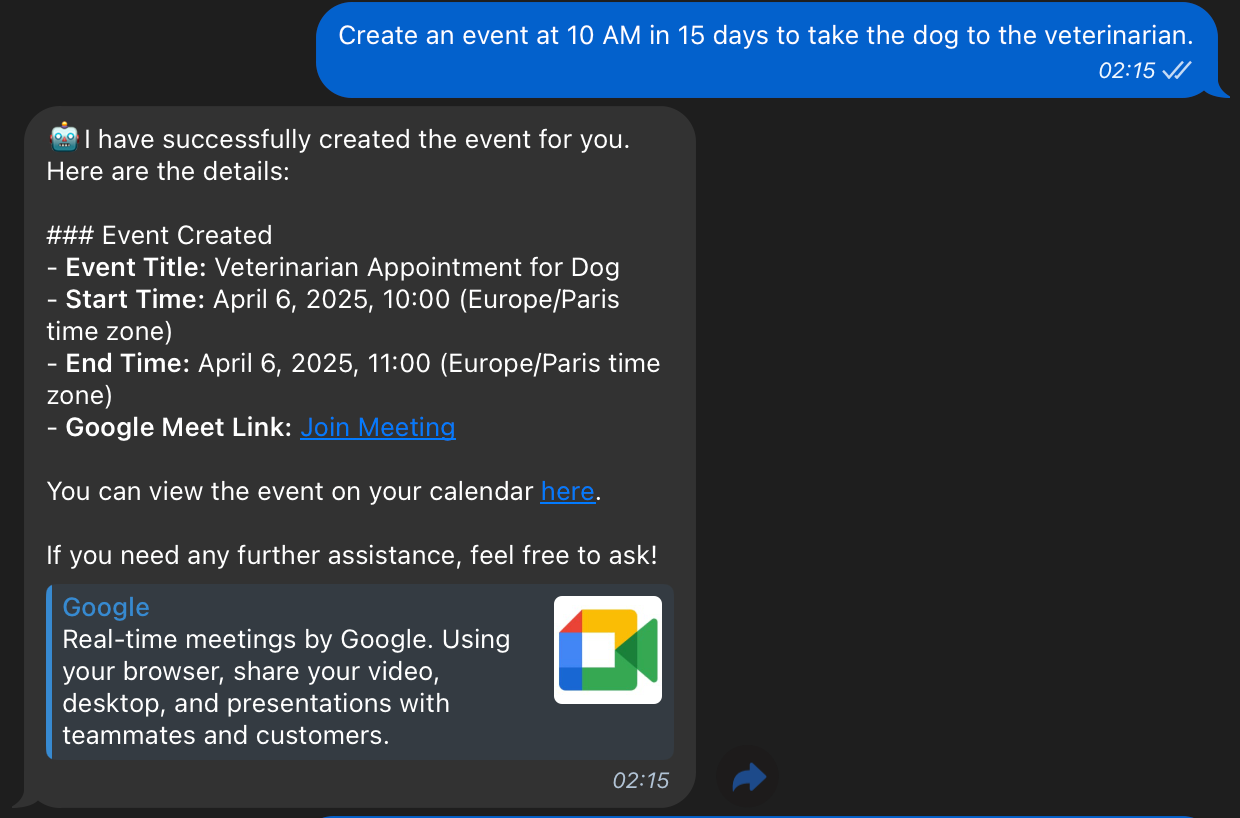

Calendar Management: Manages events using the date manager to validate availability and avoid conflicts.

Email Management: Sends, replies to, and organizes emails.

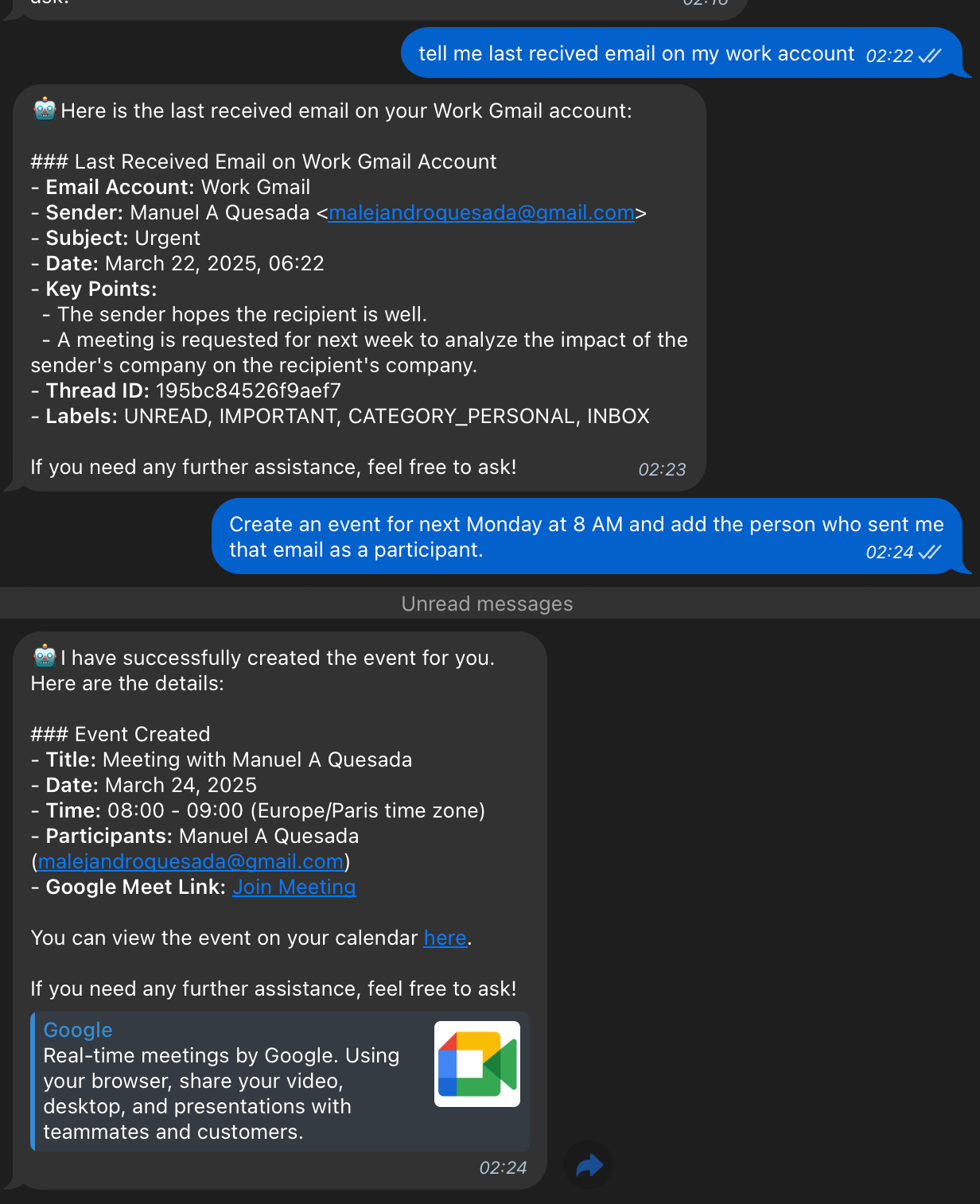

The Orchestrator Input node uses structured output to generate an action plan, which is translated into a list of managers that need to be called before responding to the user. Given the user's request and the message history, its main goal is to return a list of managers to be invoked along with the specific query to be sent to each of them.
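To make the idea of an action plan more concrete, here is a minimal sketch of what the plan schema might look like. It assumes the LLM returns JSON that matches this schema. In LangChain this is typically done with a chat model's `with_structured_output` method, but the sketch below only validates the JSON in plain Python. The class names, `parse_action_plan`, and the manager identifiers are hypothetical.

```python
import json
from dataclasses import dataclass, field

# Hypothetical manager identifiers; the article does not specify them.
ALLOWED_MANAGERS = {"date_manager", "calendar_manager", "email_manager"}


@dataclass
class ManagerCall:
    manager: str  # which manager to invoke
    query: str    # the sub-query sent to that manager


@dataclass
class ActionPlan:
    # Ordered list: execution order matters when managers depend on each other.
    calls: list[ManagerCall] = field(default_factory=list)

    @property
    def is_general_question(self) -> bool:
        # An empty plan means no manager is needed and the output node answers directly.
        return not self.calls


def parse_action_plan(raw: str) -> ActionPlan:
    """Validate the LLM's JSON output against the plan schema."""
    data = json.loads(raw)
    calls = []
    for item in data.get("calls", []):
        if item["manager"] not in ALLOWED_MANAGERS:
            raise ValueError(f"Unknown manager: {item['manager']}")
        calls.append(ManagerCall(manager=item["manager"], query=item["query"]))
    return ActionPlan(calls=calls)


raw = (
    '{"calls": ['
    '{"manager": "date_manager", "query": "What date is tomorrow?"}, '
    '{"manager": "calendar_manager", "query": "Create a soccer event tomorrow afternoon"}]}'
)
plan = parse_action_plan(raw)
print([c.manager for c in plan.calls])  # ['date_manager', 'calendar_manager']
```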

For memory management, we leverage LangGraph’s graph-based workflow execution to store checkpoints in PostgreSQL. In LangGraph, “checkpoints” are snapshots of the graph’s state at specific moments during execution. These snapshots allow the system to pause processing at defined points, save the current state, and resume execution later as needed. This feature is particularly useful for long-running tasks, workflows requiring human intervention, or applications that benefit from resumable execution.

To avoid passing the entire memory context to the language model, we use a message trimmer that keeps only the last 5 user interactions as conversational history.
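LangChain provides a `trim_messages` utility (in `langchain_core.messages`) for this kind of trimming. The MVP's exact configuration isn't shown, so the sketch below uses plain Python to illustrate the logic instead. It assumes messages are dicts with `role` and `content` keys, keeps any system messages, and treats each user message and the replies that follow it as one interaction. `last_n_interactions` is a hypothetical helper.

```python
def last_n_interactions(messages: list[dict], n: int = 5) -> list[dict]:
    """Keep system messages plus the conversation from the n-th latest user turn on.

    An "interaction" is a user message followed by the replies that come
    before the next user message.
    """
    system = [m for m in messages if m["role"] == "system"]
    convo = [m for m in messages if m["role"] != "system"]
    user_positions = [i for i, m in enumerate(convo) if m["role"] == "user"]
    if len(user_positions) <= n:
        return system + convo
    return system + convo[user_positions[-n]:]


# Usage: a history with 7 user/assistant exchanges keeps only the last 5.
history = [{"role": "system", "content": "You are an assistant."}]
for i in range(7):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})
trimmed = last_n_interactions(history)
print(len(trimmed), trimmed[1]["content"])  # 11 question 2
```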

This node is responsible for generating the final response to the user by aggregating all the outputs from the managers. It also has access to the message history. Additionally, it formats the response using Markdown to improve the readability of the output.

If the Orchestrator Input determines that the request is a general question and no manager needs to be called, this node also answers the user's request using its general knowledge, system prompt, and the conversation history.

This node is responsible for calling each manager in sequence while providing context from previously called managers to the next one. This allows any manager that depends on information from a previous one to access it, resolving inter-manager dependencies. For this to work, the Orchestrator Input node must generate an action plan that respects these dependencies, since the order of execution matters.
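Sequential execution with forwarded context can be sketched as follows. This example assumes each manager is a callable that receives its query plus the outputs of earlier managers. The stub managers and the `execute_plan` name are hypothetical. Real managers would invoke LLM agents, and the MVP implements this step as a LangGraph node.

```python
from typing import Callable

# Each manager receives its query plus the outputs of the managers run before it.
Manager = Callable[[str, list[str]], str]


def execute_plan(plan: list[tuple[str, str]], managers: dict[str, Manager]) -> list[str]:
    """Run (manager_name, query) steps in order, passing earlier outputs forward."""
    outputs: list[str] = []
    for name, query in plan:
        result = managers[name](query, list(outputs))  # copy so managers can't mutate history
        outputs.append(f"{name}: {result}")
    return outputs


# Usage with stub managers (hypothetical; real ones would call LLM agents).
def date_manager(query: str, context: list[str]) -> str:
    return "2025-03-25"  # stub: pretend "tomorrow" was resolved


def calendar_manager(query: str, context: list[str]) -> str:
    # Depends on the date resolved by the previous step.
    return f"event created using [{context[-1]}]"


plan = [("date", "What date is tomorrow?"), ("calendar", "Create a soccer event tomorrow")]
for line in execute_plan(plan, {"date": date_manager, "calendar": calendar_manager}):
    print(line)
```

Because the calendar step reads `context[-1]`, swapping the two entries in `plan` would break it. This is why the planner must emit dependencies in the correct order.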

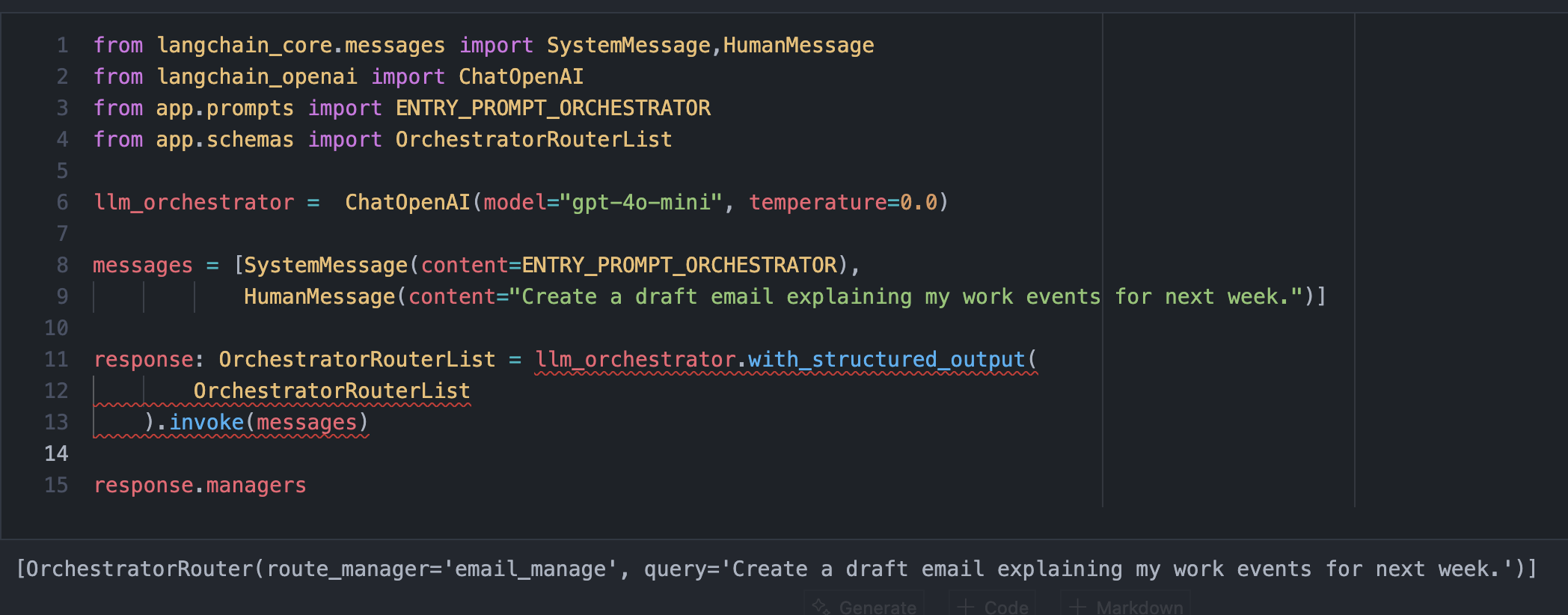

GPT-4o instead of GPT-4o-mini: Our solution initially used GPT-4o-mini because it responds faster and has lower token costs. It worked well up to a point, even for selecting and calling the right tool when the LLM acted as an agent.

However, during the iterative refinement of the assistant, we observed that GPT-4o-mini could not generate the action plan correctly once the required reasoning became more complex. Here's an example that highlights this limitation:

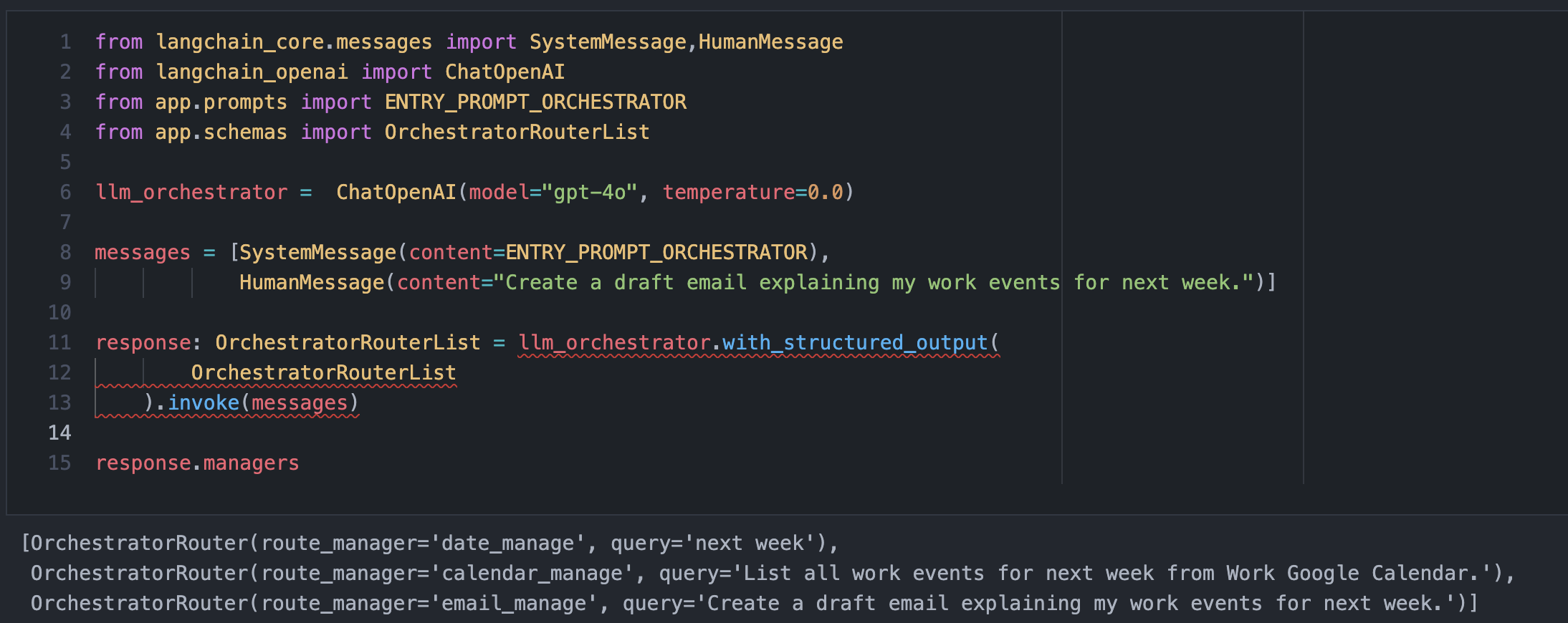

We used the same prompt and simply switched the model to illustrate the inconsistency:

In the image above, the model failed to recognize that it needed to call all three managers to fully address the user's request. In contrast, GPT-4o handles this perfectly. In its output, we can clearly see a list containing the calls to each manager along with their respective queries:

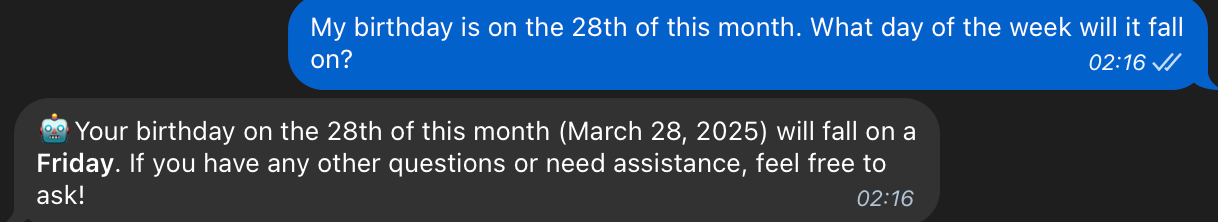

This manager handles any request related to dates and times, such as calculating a time interval or extracting the correct date from the user's initial query.

For example, LLMs often struggle with determining future dates, such as "next week" or "next month," even when the current date is provided. To address this limitation, we designed a system prompt using the Few-Shot Prompting technique, where we include a series of examples with their corresponding dates and expected outputs. This approach allows us to achieve high accuracy in the generated responses.
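The article doesn't publish its date-manager prompt. The sketch below is a hypothetical illustration of a few-shot system prompt whose example answers are computed from the current date, so the examples stay consistent with the "today" the model is given. The chosen phrases, the rule that "next week" means the following Monday, and the helper names are all assumptions.

```python
from datetime import date, timedelta


def next_monday(today: date) -> date:
    # Assumption: "next week" resolves to the Monday of the following week.
    return today + timedelta(days=7 - today.weekday())


def first_of_next_month(today: date) -> date:
    if today.month == 12:
        return date(today.year + 1, 1, 1)
    return date(today.year, today.month + 1, 1)


def build_date_prompt(today: date) -> str:
    """Build a few-shot system prompt whose example outputs match `today`."""
    examples = [
        ("tomorrow", today + timedelta(days=1)),
        ("next week", next_monday(today)),
        ("next month", first_of_next_month(today)),
    ]
    lines = [
        "You resolve relative date expressions into ISO dates.",
        f"Today is {today.isoformat()} ({today.strftime('%A')}).",
        "Examples:",
    ]
    for phrase, resolved in examples:
        lines.append(f'Input: "{phrase}" -> Output: {resolved.isoformat()}')
    return "\n".join(lines)


print(build_date_prompt(date(2025, 3, 24)))
```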

This manager acts as a router, responsible for forwarding the query to the appropriate worker. To do so, it uses the following as context:

Each worker represents a specific account. In our case, there are two workers: one for the personal account and one for the work account.

Some requests require checking both accounts (e.g., verifying calendar availability), while others affect only one. The personal account is assumed by default if the user does not specify an account.
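In the MVP, the LLM makes the routing decision from context. The keyword-based stand-in below only illustrates the two rules described here: personal is the default, and availability checks go to both accounts. The keywords, `Account`, and `route_accounts` are hypothetical.

```python
from enum import Enum


class Account(Enum):
    PERSONAL = "personal"
    WORK = "work"


# Hypothetical cues for requests that need both calendars.
BOTH_HINTS = ("available", "availability", "free time")


def route_accounts(query: str) -> list[Account]:
    """Pick the account worker(s) for a request; personal is the default."""
    q = query.lower()
    mentions_work = "work" in q
    mentions_personal = "personal" in q
    if mentions_work != mentions_personal:  # exactly one account named
        return [Account.WORK] if mentions_work else [Account.PERSONAL]
    if mentions_work or any(h in q for h in BOTH_HINTS):
        # Both accounts named explicitly, or an availability check.
        return [Account.PERSONAL, Account.WORK]
    return [Account.PERSONAL]  # default when no account is specified


print(route_accounts("Create an event tomorrow afternoon to play soccer."))
```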

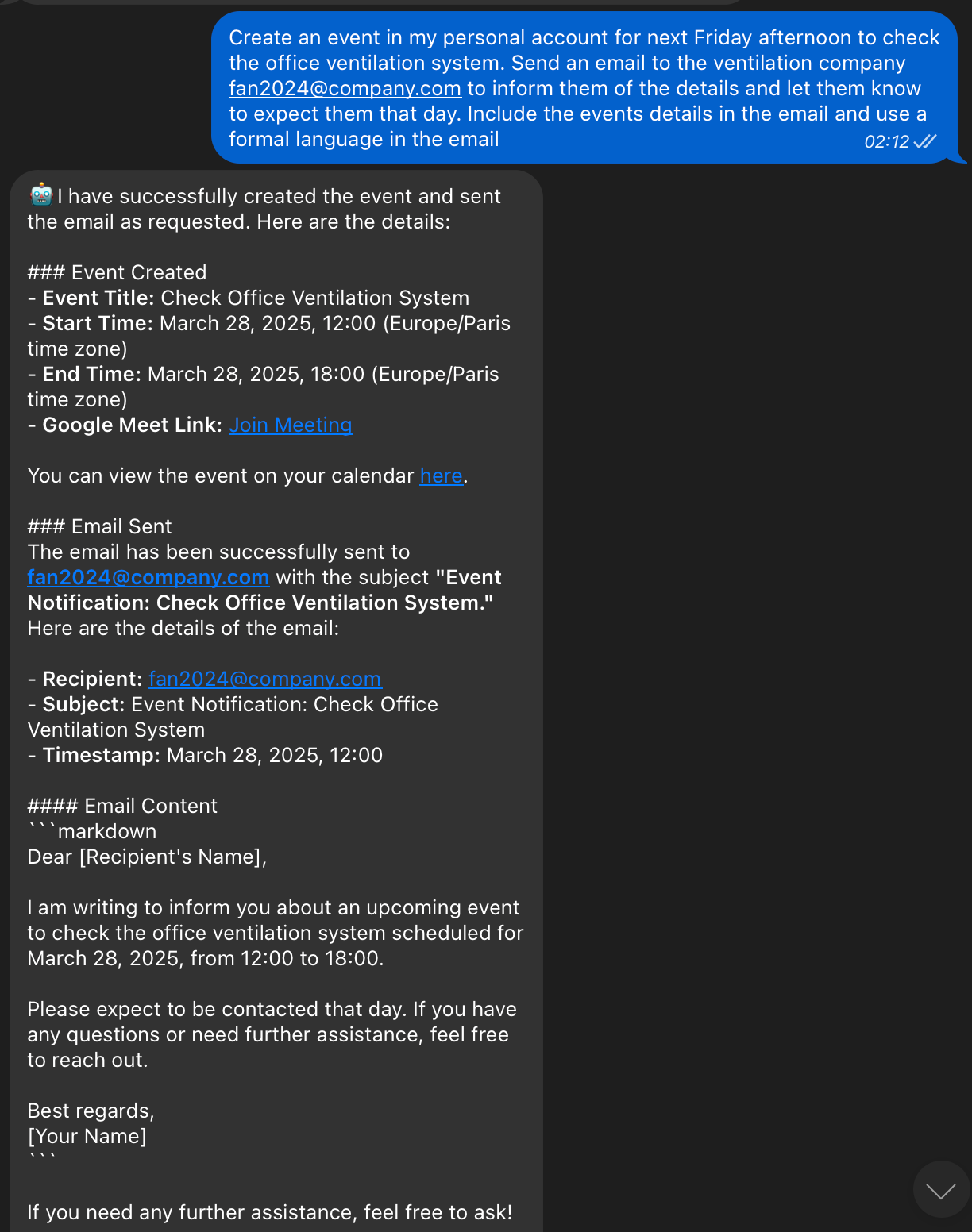

Let’s consider the following user request:

"Create an event tomorrow afternoon to play soccer."

The flow would be as follows:

As a result, the manager generates the following query to be executed on the personal account:

Create an event for tomorrow, March 25, after 12 PM.

The Calendar Worker operates as a ReAct agent without memory of previous messages. This means it does not retain context from prior interactions—its true strength lies in its ability to interact with the tools at its disposal. These tools are essential to its functionality and enable it to perform calendar-related tasks.

Below are the tools the Calendar Worker has access to, along with how it uses each one to manage events:

GOOGLECALENDAR_FIND_EVENT

This tool is used to search for existing events in the calendar. When the Calendar Worker needs to verify a specific event (e.g., before updating or deleting it), it performs a search using this tool.

GOOGLECALENDAR_UPDATE_EVENT

The UPDATE EVENT tool is used to modify existing events in the calendar. If an event needs to be updated (such as changing the time, adding participants, or adjusting details), the Calendar Worker uses this tool. Before performing an update, the worker checks for the event’s existence and, in some cases, performs a conflict check depending on the nature of the change.

GOOGLECALENDAR_CREATE_EVENT

This tool allows the Calendar Worker to create new events in the calendar. When a user requests to add an event (like a meeting or appointment), the worker uses this tool to create it in the appropriate calendar. Before doing so, it checks for time availability using the FIND FREE SLOTS tool.

GOOGLECALENDAR_FIND_FREE_SLOTS

This tool is critical for the Calendar Worker, allowing it to check for available time slots in the calendar. Before creating or updating an event, the worker uses this tool to ensure there are no scheduling conflicts. If a conflict is detected (e.g., an event already scheduled in the requested time range), the worker notifies the user and does not proceed with the creation or update.

GOOGLECALENDAR_DELETE_EVENT

When a user requests to delete an event, the Calendar Worker uses this tool to remove existing events. This action is performed after confirming which event to delete, based on the user’s input or a prior search using FIND EVENT.

Because it keeps no memory of past messages, the Calendar Worker focuses on interacting efficiently with these tools. Each time it receives a request, it evaluates the situation based only on the current context and toolset. As a result, every action is performed independently, relying solely on the tools and data available at that moment.
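The rule of checking availability before creating or updating an event comes down to an interval-overlap test. In the MVP, the LLM performs this reasoning over the results of GOOGLECALENDAR_FIND_FREE_SLOTS. The following is a hypothetical deterministic version that assumes busy blocks have already been extracted into dicts with `start` and `end` datetimes. That shape is an assumption, not Composio's response format.

```python
from datetime import datetime


def overlaps(a_start: datetime, a_end: datetime, b_start: datetime, b_end: datetime) -> bool:
    # Half-open intervals [start, end): back-to-back events do not conflict.
    return a_start < b_end and b_start < a_end


def find_conflicts(start: datetime, end: datetime, busy: list[dict]) -> list[dict]:
    """Return the busy blocks that overlap the proposed [start, end) range."""
    if end <= start:
        raise ValueError("An event must end after it starts.")
    return [b for b in busy if overlaps(start, end, b["start"], b["end"])]


# Usage: busy blocks as a worker might extract them from a free/busy lookup.
busy = [
    {"title": "Dentist", "start": datetime(2025, 3, 25, 13), "end": datetime(2025, 3, 25, 14)},
    {"title": "Call", "start": datetime(2025, 3, 25, 16), "end": datetime(2025, 3, 25, 17)},
]
print([b["title"] for b in find_conflicts(datetime(2025, 3, 25, 13, 30), datetime(2025, 3, 25, 15), busy)])
# ['Dentist']
print(find_conflicts(datetime(2025, 3, 25, 14), datetime(2025, 3, 25, 16), busy))  # []
```

If the conflict list is non-empty, the worker would report the conflict instead of creating the event.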

The Email Manager works like the Calendar Manager: it acts as a router, directing each request to the appropriate worker. In this case, each worker manages one of the user's email accounts, either personal or work.

The personal account is assumed by default if the user does not specify an account. The workflow follows the same pattern as the calendar manager: context is gathered first, and then the appropriate worker performs the requested action.

Just like the Calendar Worker, the Email Worker operates as a ReAct agent without memory of previous messages. This means it does not retain information from past interactions, and its ability to manage email depends entirely on the tools it has access to at the time. Below are the tools available to the Email Worker, along with how each one is used to perform email-related tasks:

GMAIL_REPLY_TO_THREAD

This tool is used to reply to an existing email conversation. The Email Worker identifies the message to respond to and uses this tool to send a reply. It ensures the response is sent to the correct recipient, either the original sender or another recipient specified by the user.

GMAIL_LIST_THREADS

Used to list email conversations. The Email Worker utilizes this tool to retrieve the most recent threads from the inbox or labeled folders, filtered according to the user's criteria.

GMAIL_SEND_EMAIL

Allows the sending of new emails. When the Email Worker receives a request to send an email, it uses this tool. If the user does not provide a recipient, the worker does not proceed with sending and responds that a recipient must be specified. It also ensures the subject line is appropriate and that the body of the message is properly formatted.

GMAIL_FETCH_EMAILS

This tool enables the search and retrieval of emails from the inbox or other folders. The Email Worker can search by keywords in the subject or body, sender or recipient address, and can filter by labels such as "INBOX," "SENT," "DRAFT," and so on.

GMAIL_CREATE_EMAIL_DRAFT

The Email Worker uses this tool to create email drafts. If the user wants to compose an email without sending it immediately, the worker saves the content as a draft. If no recipient is specified, a placeholder recipient is used so the draft can still be created.

GMAIL_FETCH_MESSAGE_BY_THREAD_ID

This tool is used to retrieve all messages from a specific conversation using its thread ID. The Email Worker uses it to gather full context from the conversation, ensuring that any reply is well-informed and relevant.
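The Email Worker enforces the GMAIL_SEND_EMAIL rules, such as requiring a recipient and using a sensible subject, through its prompt. The sketch below shows a hypothetical deterministic pre-check that could back those rules up before any send call. `EmailRequest`, `prepare_email`, and the payload keys are illustrative and are not the tool's actual parameters.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Deliberately loose check: one "@" and a dot in the domain part.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class EmailRequest:
    to: Optional[str]
    subject: str
    body: str


def prepare_email(req: EmailRequest) -> dict:
    """Return a send payload, or raise if the request cannot be sent as-is."""
    if not req.to:
        # Mirrors the worker rule: never guess a recipient for a real send.
        raise ValueError("A recipient must be specified before sending.")
    if not EMAIL_RE.match(req.to):
        raise ValueError(f"Invalid recipient address: {req.to!r}")
    return {
        "to": req.to,
        "subject": req.subject.strip() or "(no subject)",
        "body": req.body.strip(),
    }


print(prepare_email(EmailRequest("ana@example.com", "  Meeting notes ", "See attached.")))
```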

Before running the MVP, ensure you have:

1. Set Up Composio

Add your Composio API key to the .env file:

COMPOSIO_API_KEY="your_api_key_here"

Define the entity_id values for the integrations:

ENTITY_ID_WORK_ACCOUNT="work"
ENTITY_ID_PERSONAL_ACCOUNT="personal"

2. Set Up OpenAI API Key

Add your OpenAI API key to the .env file:

OPENAI_API_KEY="your_openai_api_key_here"

3. Set Up Telegram Bot

Add your Telegram bot token to the .env file:

TELEGRAM_BOT_TOKEN="your_telegram_bot_token_here"

4. Run the MVP

Extract ai-assistant-mvp.zip and start the containers from the project directory:

docker compose up

Once the containers are running, you can interact with your assistant via Telegram. Because the language models run on OpenAI's API, the system has modest hardware requirements, and a PC with at least 8 GB of RAM should be sufficient.
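If you adapt the code, a small startup check can catch a missing key before the assistant fails mid-conversation. The sketch below checks the three API keys from the setup steps above. The helper name is hypothetical and is not part of the MVP. It reads the process environment and does not load the .env file itself.

```python
import os
from typing import Mapping, Optional

# Keys taken from the setup steps above.
REQUIRED_VARS = ("COMPOSIO_API_KEY", "OPENAI_API_KEY", "TELEGRAM_BOT_TOKEN")


def missing_env_vars(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return required variables that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


missing = missing_env_vars()
if missing:
    print("Missing configuration:", ", ".join(missing))
```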

Throughout the development and testing of this MVP, I interacted with the assistant via Telegram and closely monitored its behavior using LangSmith. This constant feedback loop revealed that, while the assistant performs well, there are still many production-level details to refine—ranging from minor prompt tweaks in specific nodes to adding new tools to support edge cases.

Despite these areas for improvement, the assistant consistently demonstrated the ability to handle complex tasks that required coordination between different services—such as retrieving availability from Google Calendar and then drafting emails in Gmail based on that context. In several instances, I was genuinely surprised by the system’s dynamic behavior. Rather than simply following a static workflow, the agent-based architecture allowed the assistant to make independent decisions and adapt to the input, showcasing its flexibility and reasoning capabilities.

This experience validated not only the technical feasibility of the multi-agent architecture but also its suitability for real-world business logic. Beyond simply automating static departmental workflows, this architecture is capable of adapting to dynamic, context-sensitive tasks that require reasoning and decision-making based on the current environment. A key strength lies in its ability to interact with the external world, not just operate based on predefined instructions or hard-coded logic. This is made possible by integrating tools within the agent system, which provides real-time access to external data and enables grounded decision-making based on up-to-date information. This approach aligns with the increasing industry trend toward agentic AI systems—capable not just of executing but of intelligently orchestrating actions across tools and services in a context-aware manner.

From an industry perspective, the demand for personal AI assistants and task automation agents is growing rapidly, driven by the need to reduce repetitive workloads, improve decision-making support, and increase operational efficiency. Architectures like this one position themselves as a natural fit for enterprise use cases that require scalable, modular, and intelligent orchestration of tools and information systems.

In conclusion, this MVP produced accurate and valuable results during testing. It not only validated the design principles and toolchain used but also highlighted the potential of this approach to evolve into a robust, production-ready assistant. The insights gained provide a strong foundation for future iterations—bringing us closer to building AI agents that can reason, adapt, and truly assist across various domains.

While this MVP demonstrates the technical feasibility and adaptability of an agent-based architecture, it also presents several important limitations that must be considered for its evolution into a more robust, production-ready system:

Dependency on External Integrations (Composio): The current system relies on Composio as an intermediary platform to perform actions on Google services (Gmail and Calendar). While this accelerates initial development and avoids building integrations from scratch, it also introduces a critical external dependency. If Composio experiences service outages, limits functionality, or changes its usage policy, the assistant may become non-functional. Additionally, this dependency may impact overall performance and introduce constraints regarding scalability, data privacy, and long-term control.

Lack of Native Tools for Calendar Conflict Management: Currently, calendar conflict detection relies primarily on the LLM's reasoning ability, which is not always reliable, especially when using lighter models such as GPT-4o-mini. Ideally, a custom-built conflict management tool should be implemented to handle overlapping events, enforce custom rules or prioritization logic, and improve precision in availability checks. This would enhance the reliability and user experience of the assistant.

Response Latency Due to Hierarchical Flow: The adopted architecture—based on multiple hierarchical layers (orchestrator, managers, workers)—offers modularity and clear separation of concerns but also introduces response latency. In real-time scenarios (e.g., conversational assistants), this design can become suboptimal. Each user request must pass through several steps before a final response is generated, which can lead to delays that negatively impact user perception.

One Worker per Account (Limited Scalability): In the current implementation, each worker is tightly coupled to a specific account (personal or work), which limits the scalability of the system. Every new account requires a new worker, resulting in redundancy and increased maintenance overhead. A better approach would involve dynamic, parameterized workers that receive account identifiers or credentials at runtime, enabling more flexible and reusable logic.

Lack of Execution Validation Mechanisms: Currently, the Orchestrator Executor runs the action plan without intermediate validation to ensure that each step completes correctly. If a manager produces incorrect or incomplete output, subsequent steps still proceed as if the result were valid, leading to incorrect or incoherent final responses. To mitigate this, intermediate output validation and quality checks should be introduced, along with fallback or recovery strategies for partial failures, to make the workflow robust in real-world usage.
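One way to add the missing validation is to check each manager's output before it reaches the next step. The sketch below retries a failing step and then stops, rather than propagating a bad result. The `is_valid` predicate is a placeholder for whatever check fits, such as a schema check or an LLM-based judge. `run_with_validation` and the stub steps are hypothetical.

```python
from typing import Callable, Optional

# A step is (name, fn), where fn receives the validated outputs so far.
Step = tuple[str, Callable[[list[str]], str]]


def run_with_validation(
    steps: list[Step],
    is_valid: Callable[[str], bool],
    max_retries: int = 1,
) -> tuple[list[str], Optional[str]]:
    """Run steps in order; retry invalid outputs, then stop instead of propagating them."""
    context: list[str] = []
    for name, fn in steps:
        for _ in range(max_retries + 1):
            output = fn(list(context))
            if is_valid(output):
                context.append(output)
                break
        else:
            # Fallback: halt and report rather than feed bad data to later steps.
            return context, f"Step '{name}' failed validation after {max_retries + 1} attempts."
    return context, None


steps = [("date", lambda ctx: "2025-03-25"), ("calendar", lambda ctx: "")]
print(run_with_validation(steps, is_valid=lambda out: bool(out.strip())))
# (['2025-03-25'], "Step 'calendar' failed validation after 2 attempts.")
```

The error message can then go to the output node, which tells the user which part of the request failed instead of producing an incoherent answer.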

While the current MVP lays a solid foundation, several areas have been identified for future improvement and development:

This roadmap aims to transition the assistant from an MVP to a robust, production-grade system capable of supporting real-world, decision-driven workflows in personal and enterprise contexts.
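The parameterized-worker idea from the "One Worker per Account" limitation could look roughly like the sketch below. A single worker receives the target account per request instead of being built for one account. The `run_tool` adapter, `Account`, `CalendarWorker`, and the tool argument names are hypothetical. Only the tool name and the `work`/`personal` entity IDs come from this article.

```python
from dataclasses import dataclass
from typing import Callable

# run_tool(entity_id, tool_name, arguments) -> result is a hypothetical adapter
# around whatever integration layer actually executes the tool.
RunTool = Callable[[str, str, dict], dict]


@dataclass(frozen=True)
class Account:
    name: str
    entity_id: str  # identifies the connected account in the integration layer


class CalendarWorker:
    """A single worker whose target account is supplied per request."""

    def __init__(self, run_tool: RunTool) -> None:
        self._run_tool = run_tool

    def create_event(self, account: Account, title: str, start_iso: str) -> dict:
        # Argument names are illustrative, not a specific tool's schema.
        args = {"title": title, "start": start_iso}
        return self._run_tool(account.entity_id, "GOOGLECALENDAR_CREATE_EVENT", args)


# Usage: supporting a new account means adding an entry, not a new worker.
accounts = {
    "personal": Account("personal", "personal"),
    "work": Account("work", "work"),
}


def fake_run_tool(entity_id: str, tool: str, args: dict) -> dict:
    return {"entity_id": entity_id, "tool": tool, **args}


worker = CalendarWorker(fake_run_tool)
print(worker.create_event(accounts["work"], "Soccer", "2025-03-25T15:00"))
```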

If you have any questions, encounter issues, or would like to contribute to this project, feel free to reach out:

We welcome feedback, contributions, and collaborations!

Here are some examples of the agent’s capabilities. We encourage you to try them out yourself. 🤖