Abstract

Percolate (MIT license) is a framework for building complex agentic memory structures using Postgres.

Percolate is built on the premise that in the coming years information retrieval and agentic orchestration will converge into the unified paradigm of Iterated Information Retrieval (IIR). The vision is that this paradigm will make way for a powerful class of agentic memory architectures that will support augmented general intelligence. Distinct from artificial general intelligence, augmented intelligence celebrates personalization, user data and human-AI co-creation.

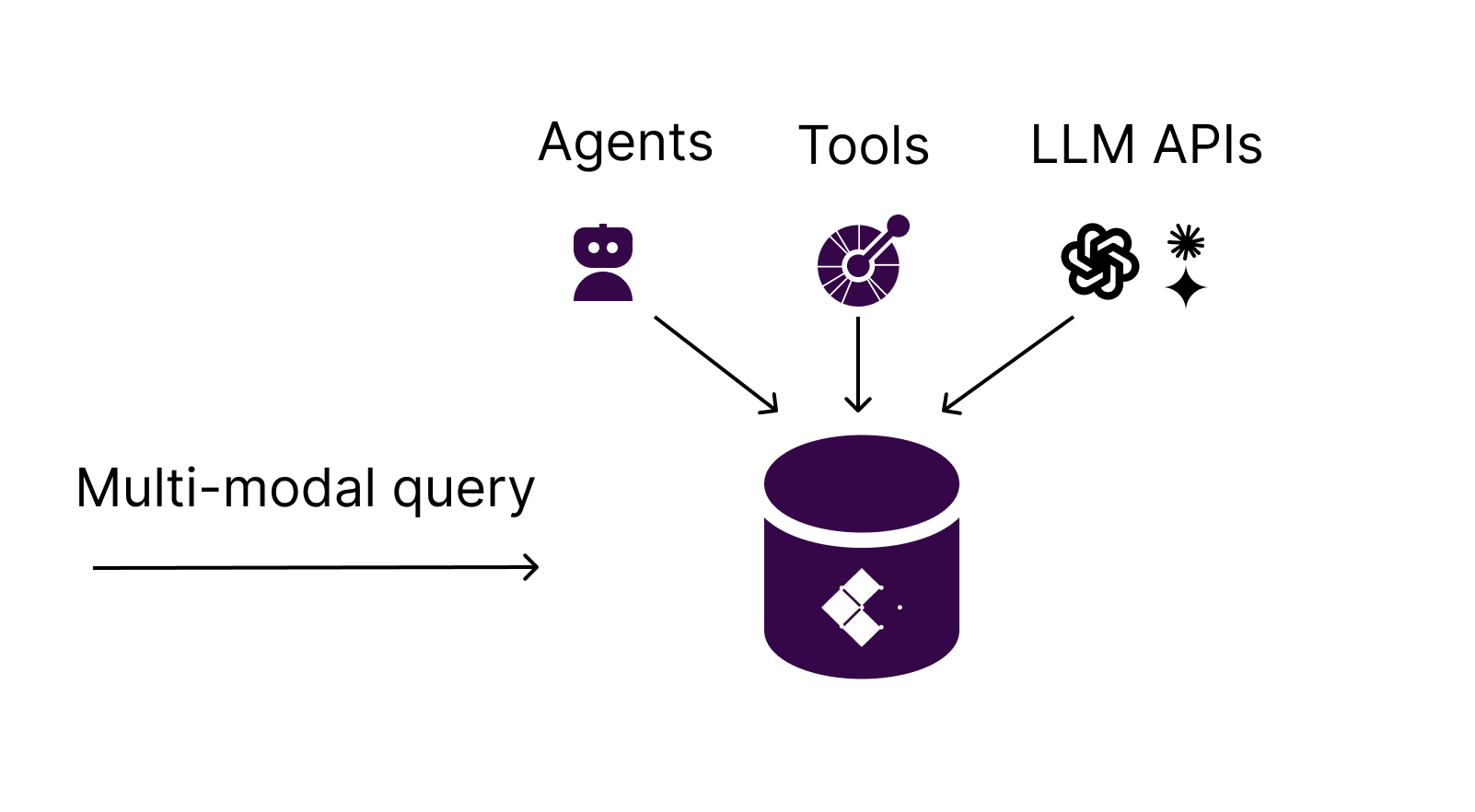

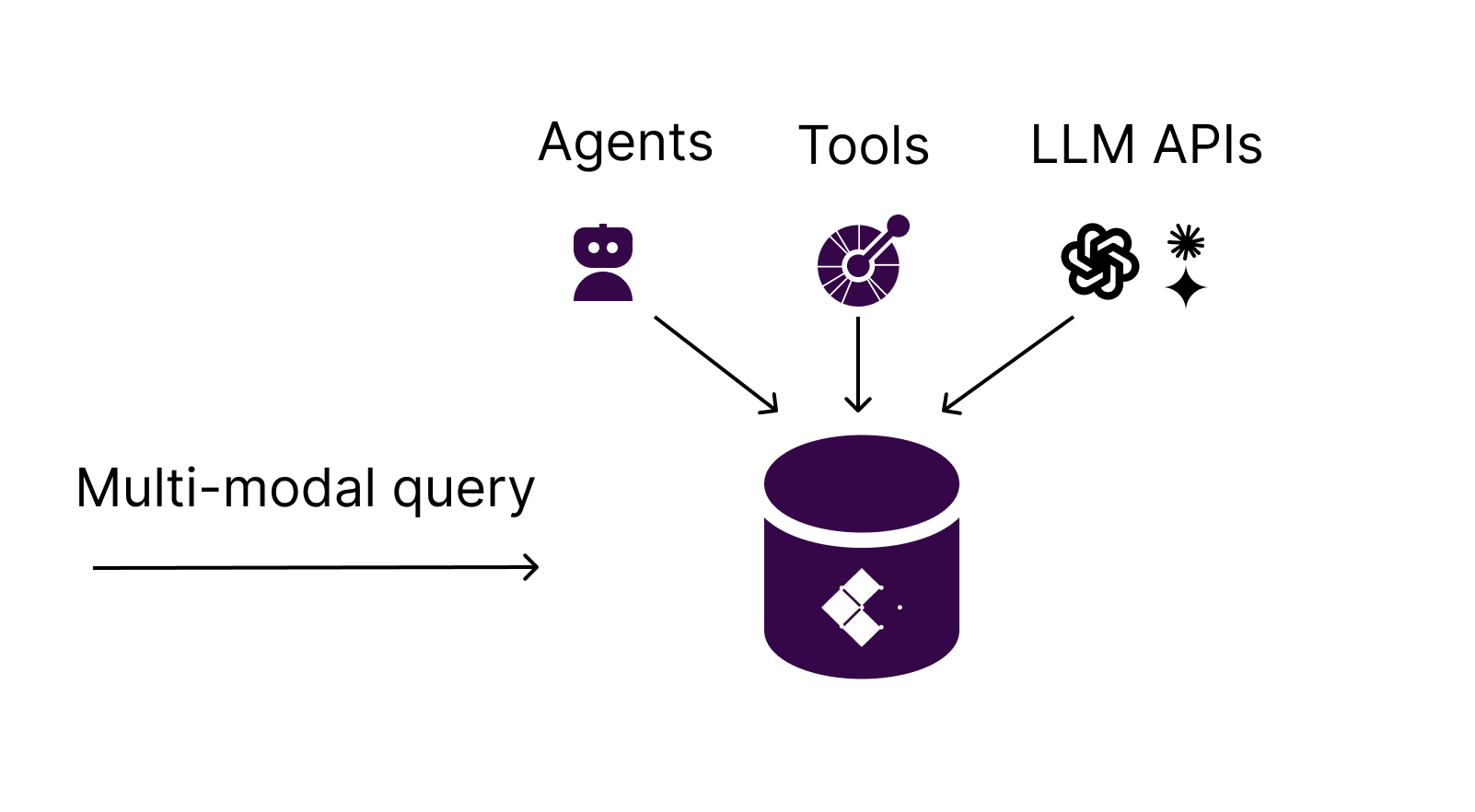

To realize this, Percolate takes a different approach from other agentic frameworks: it pushes agentic orchestration into the data tier. Percolate reimagines agentic orchestration and tool calling as an information retrieval task and builds this directly into the database. This means we treat routing requests to the right agent and loading personalization context into memory as similar problems. The foundation is a multi-modal build of Postgres that supports vector, graph, key-value and relational data, as well as HTTP API calls.

With Percolate, dynamic agentic workflows can be constructed either in the database or through a client such as the Percolate Python client, which works much like any other Pydantic-driven agentic SDK you may have used.

Introduction

Today, agentic orchestration (typically in Python) and RAG/agentic memory solutions are generally treated as two separate concerns. Percolate was built on the premise that information retrieval and agentic orchestration will converge into a unified paradigm called Iterated Information Retrieval (IIR). This paradigm has the potential to make way for a powerful class of agentic memory architectures that can support augmented general intelligence. As language models become smaller, faster and cheaper, it will become increasingly feasible to use AI to build intelligent content indexes right inside the data tier.

You can see an early example of what this looks like in the MIT-licensed project Percolate, and you can check out this video to see how to get it running locally on Docker. (See also the basic examples given below.)

Iterated Information Retrieval combines (1) multimodal data indexes for graph, vector, key-value and relational data with (2) treating orchestration of agents and tool calls as an information retrieval problem. This means treating agents and tools as declarative metadata that can be stored, searched and called from the database rather than treating agents as code.

To make agents declarative we simply need to factor out any notion of “agents as code” by treating all tools as external to the “agent”, which then becomes simply (1) a system prompt with (2) structured schema, along with (3) a reference to external functions.
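As a rough illustration of the three parts listed above, an agent with no embedded code can be written as a plain record and round-tripped through JSON. This is a minimal sketch: the `DeclarativeAgent` class, its field names and the `PetAgent` values are hypothetical and are not Percolate's actual storage format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class DeclarativeAgent:
    """Hypothetical record shape, not Percolate's actual table schema."""

    name: str
    system_prompt: str  # (1) the system prompt
    output_schema: dict  # (2) structured output described as JSON Schema
    functions: dict = field(default_factory=dict)  # (3) external functions, by name


agent = DeclarativeAgent(
    name="PetAgent",
    system_prompt="You answer questions about pets in the store.",
    output_schema={
        "type": "object",
        "properties": {"name": {"type": "string"}, "status": {"type": "string"}},
        "required": ["name"],
    },
    functions={"get_pet_findByStatus": "look up pets based on their status"},
)

# The agent holds no executable code, so it survives a round trip through JSON
serialized = json.dumps(asdict(agent))
restored = DeclarativeAgent(**json.loads(serialized))
```

Because the functions are only referenced by name, whatever loads the record is responsible for resolving and calling them.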

Percolate allows for a completely data-oriented orchestration pattern for agents, but you can also use clients in your favourite programming language (as we have implemented in Python, for example). These clients become relatively thin compared to present-day agentic frameworks such as LangChain/LangGraph, Pydantic.AI or OpenAI's newer Agents SDK. A declarative framework avoids hard-coding graphs and agents in code; instead, they can be treated more fluidly as data. This is not always preferable, but for complex systems with many agents and tools, a database is a great way to manage the complexity.

By doing this, agentic orchestration becomes an information retrieval problem and part of the more general agent-persistence and agent-memory challenge. Whether we are looking for agents or tools, digging up previous user conversations or searching over a knowledge base, these are all unified here as a query/routing problem.

The “iterated” in IIR refers to the fact that, unlike traditional forms of information retrieval where a user initiates a query and gets a response, an agentic system generates a wave of queries: the first response can provide content but also references to other content in the database in a “see also” format. This process is supported by language models that run "offline" as background processes to build multi-modal content indexes.

As the agent loop runs it is “prompted” by these references and can probe deeper before responding to the user or taking some other action on behalf of the user. The agent loop can discover not only data but other functions and agents that can be activated, possibly with a “handover” of context.
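The idea of following “see also” references can be sketched with a toy in-memory index. Everything here is an assumption made for illustration: the `INDEX` contents, the `iterated_retrieval` function and the hop limit are invented, and a real agent loop would let the language model decide which references are worth probing rather than following all of them.

```python
from collections import deque

# Toy in-memory "index": each entry has content plus "see also" references.
# The keys and contents are invented for illustration.
INDEX = {
    "pets": {"content": "Pets have a status.", "see_also": ["pet-status", "orders"]},
    "pet-status": {"content": "Status is available, pending or sold.", "see_also": []},
    "orders": {"content": "Orders reference pets by id.", "see_also": ["pets"]},
}


def iterated_retrieval(start_key, max_hops=2):
    """Follow "see also" references breadth-first, up to max_hops from the start."""
    seen = {start_key}
    queue = deque([(start_key, 0)])
    gathered = []
    while queue:
        key, hops = queue.popleft()
        entry = INDEX.get(key)
        if entry is None:
            continue
        gathered.append(entry["content"])
        if hops == max_hops:
            continue  # stop probing deeper from this entry
        for ref in entry["see_also"]:
            if ref not in seen:
                seen.add(ref)
                queue.append((ref, hops + 1))
    return gathered
```

With `max_hops=0` this degenerates to a traditional single query and response; larger values let the loop gather related context before answering.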

All agent frameworks execute an agent loop but Percolate builds this into the query interface between data and agent logic.

In the following sections I provide a brief historical overview of agent frameworks before describing the features of Percolate with some examples.

A little history

This section is added for completeness and can be skipped by those familiar with the history of agentic frameworks and databases. It concludes with some of the main features of agent SDKs as of 2025 and emerging data-tier AI frameworks such as pgai.

The field of AI agent frameworks has seen rapid development and innovation over the past few years, with several key ideas and frameworks emerging to shape the industry. This review will explore the evolution of AI agent frameworks from 2023 to 2025, highlighting significant milestones and trends.

In the early stages, frameworks like LangChain emerged, focusing on handling large language models and text-based applications. LangChain focused on projects centered around chatbots, question-answering systems, and research tools. During this period, the concept of structured outputs gained traction, with frameworks emphasizing the importance of defining and validating agent outputs.

As the field progressed, there was a shift towards multi-agent systems and collaborative frameworks. Microsoft AutoGen, introduced in this period, represented an advancement in developing complex and specialized AI agents. AutoGen's multi-agent conversation framework and customizable agent roles allowed for more sophisticated AI-driven solutions. Simultaneously, CrewAI emerged, focusing on real-time collaboration and enabling multiple agents or humans and agents to work together effectively. CrewAI's Pythonic design and inter-agent communication features could be used for scenarios requiring coordinated teamwork.

The concept of graph-based architectures gained prominence with the introduction of frameworks like LangGraph. As an extension of LangChain, LangGraph used large language models to create stateful, multi-actor applications. This framework enabled more complex, interactive AI systems involving planning, reflection, and multi-agent coordination. LangFlow, another graph-based multi-agent framework, further solidified this trend, offering enhanced scalability and efficiency for managing multiple agents.

OpenAI Swarm emerged as a lightweight, experimental framework for multi-agent orchestration. Its handoff conversations feature and scalable architecture provided a flexible solution for managing multiple AI agents. Pydantic.AI introduced their own SDK building on the strengths of Pydantic, which had been so readily embraced by the community. This framework improved engineering standards compared to earlier frameworks, while also starting to think about graphs and workflow persistence.

OpenAI released its Agents SDK in March 2025, offering developers a toolkit for creating sophisticated AI agents. It implemented agent loops (an automated process for tool calls and LLM interactions), handoffs (coordination between multiple agents), guardrails (input validation to enhance security and reliability) and tracing (built-in visualization and debugging tools for monitoring agent workflows). These illustrate what the industry perceives as critical features as of 2025.

Modern vector databases like Chroma and Pinecone have emerged as AI databases focused on high-dimensional indexing for semantic search and similarity matching, real-time hybrid search combining dense and sparse vectors, cross-modal integration for tasks like text-to-image generation, and dynamic personalization through continuous vector updates. The principle of Retrieval Augmented Generation (RAG) initially focused on vector embeddings for "AI memory". Vectors alone are limited, however, and later applications merged graph and relational data for more interesting hybrid search.

The Postgres extension pgvector allowed Postgres to support AI applications, and many providers, including Supabase, TimescaleDB and Lantern, emphasize how this can be a better alternative to offerings such as Pinecone. With Postgres you have a mature open source ecosystem and can combine relational and semantic search.

TimescaleDB's pgai project illustrates a paradigm shift in agent frameworks: it embeds AI workflows directly into PostgreSQL, just like Percolate.

Percolate goes beyond these offerings by integrating not just data storage concerns but also implementing agentic orchestration in the database. Additionally, it models graph and entity-lookup query modalities.

Key Principles of Percolate

To build agents in Percolate we use a similar approach to other frameworks as described in the previous section. Over the last few years the mantra “Pydantic is all you need” has become standard, leading even to Pydantic releasing their own agent framework at the end of last year. However, I would qualify that: Pydantic is not all you need. Pydantic is more than you need, because Pydantic is (in the agentic context) simply a nice wrapper around JSON Schema, which is a declarative way to express objects and structured output (for example in a database). Keep in mind that all language models today are accessed via JSON REST APIs.
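To make the point about JSON Schema concrete, the sketch below asks a Pydantic model for its schema. It assumes Pydantic v2, where `model_json_schema()` is the relevant call; the `Task` model is a made-up example rather than a Percolate type.

```python
from pydantic import BaseModel, Field


class Task(BaseModel):
    """You are an agent that tracks tasks."""

    name: str = Field(description="Task name")
    description: str = Field(description="Task description")


# Pydantic v2: emit the JSON Schema, which is the part that can be stored
# declaratively or sent to a language model API
schema = Task.model_json_schema()
```

The resulting dictionary carries the docstring, field names, types and descriptions, which is all a database needs in order to store the agent without any Python.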

Side note on motivations

Since function calling was introduced in 2023, I was also leaning heavily into Pydantic, which was an obvious thing to do back then and I built an agentic framework called funkyprompt based on these ideas. I wrote a series of articles on Medium about it, talking about building agentic systems from first principles starting with this article.

However, I found that increasingly the real challenges were information-retrieval related. That is why, at the start of this year, I started working on Percolate to get to the heart of the matter, which is how to (a) perform queries over multimodal data and tools and (b) route requests between different agents, i.e. orchestrate agents.

Below is a breakdown of some of the main principles and components of Percolate.

Agent and Tool storage and search

Percolate stores agents and tools in the database. Agents are stored in the p8.Agent table and tools are stored in the p8.Function table. Functions can be either REST endpoints described by OpenAPI or native database functions. (We are currently working on adding MCP servers.)

Planning and routing

Adding agents and tools to the database means they can be discovered by the Planner agent to dynamically load agents and functions. A help function is attached to all agents allowing for functions or agents to be recruited to a given cause. While loading dynamic functions is possible, agents are typically designed with their own functions by reference, which we will see in an example below. (If an agent has attached functions these functions can be activated at loop execution time. But if a user asks a question that is outside the scope of the prompt or functions that the agent has, it can ask for help and activate arbitrary functions.)
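The pattern of starting with attached functions and asking for help when a question falls outside their scope can be sketched as follows. This is an assumption-heavy illustration: `FUNCTION_REGISTRY`, `AgentRuntime`, the `get_weather_forecast` entry and the keyword matching are all hypothetical stand-ins, and a real planner would search the stored function metadata instead.

```python
# Hypothetical registry standing in for the stored functions. Only
# get_pet_findByStatus comes from the article's test API; the rest is invented.
FUNCTION_REGISTRY = {
    "get_pet_findByStatus": "look up pets based on their status",
    "get_weather_forecast": "get the weather forecast for a city",
}


class AgentRuntime:
    def __init__(self, attached):
        # Functions referenced by the agent are active from the start
        self.active = {name: FUNCTION_REGISTRY[name] for name in attached}

    def help(self, question):
        """Naive keyword match standing in for a real search over stored functions."""
        words = set(question.lower().split())
        return [
            name
            for name, description in FUNCTION_REGISTRY.items()
            if words & set(description.lower().split())
        ]

    def activate(self, name):
        """Make a discovered function callable for the rest of the loop."""
        self.active[name] = FUNCTION_REGISTRY[name]


runtime = AgentRuntime(attached=["get_pet_findByStatus"])
# The question is outside the scope of the attached function, so ask for help
for name in runtime.help("what is the weather in Dublin"):
    runtime.activate(name)
```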

Note: when we activate other Agents, we can execute them as black box functions. When we do this the same system prompt and message context is used in our main thread. We also have the option to do a handoff where we load the recruited agent's system prompt into the main thread.
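The difference between the two modes can be sketched with plain message lists. The function names, the message shape and the `fake_call_agent` stand-in below are assumptions for illustration only and do not reflect Percolate's internal structures.

```python
def run_as_black_box(thread, agent, question, call_agent):
    """Call the recruited agent like a function; the main thread keeps its own prompt."""
    result = call_agent(agent, question)
    return thread + [{"role": "tool", "name": agent["name"], "content": result}]


def handoff(thread, agent):
    """Swap the system prompt so the recruited agent takes over the main thread."""
    rest = [message for message in thread if message["role"] != "system"]
    return [{"role": "system", "content": agent["system_prompt"]}] + rest


def fake_call_agent(agent, question):
    # Stand-in for a real model call made on behalf of the recruited agent
    return f"{agent['name']} answered: {question}"


main_thread = [
    {"role": "system", "content": "You are a general assistant."},
    {"role": "user", "content": "Which pets were sold?"},
]
pet_agent = {"name": "PetAgent", "system_prompt": "You answer questions about pets."}

black_box_thread = run_as_black_box(
    main_thread, pet_agent, "Which pets were sold?", fake_call_agent
)
handoff_thread = handoff(main_thread, pet_agent)
```

In the black-box case only the result enters the main thread, while in the handoff case the conversation history stays and the instructions change.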

Tracing sessions and AIResponses over turns

When we run agents in Python or the database, the user questions are saved in the Session object. Additionally, each request-response turn calling the language model API is logged in the AIResponse table. This object can have any or all of content, tool call data or tool call interpretation. As sessions are automatically logged, Percolate is well suited to auditing agentic applications out of the box.

Language Model Agnostic

Percolate runs with any language model API via Python or the database. LLM APIs come in three major dialects, all of which are implemented in Percolate.

- Google, i.e. Gemini models

- Anthropic, i.e. Claude models

- OpenAI (de facto standard): GPT and most other models that are not Anthropic or Google

One useful thing about Percolate is you can switch between these models and transform message payloads between dialects. For example you could start a conversation in one dialect e.g. Anthropic and then re-run it or resume it in another dialect e.g. OpenAI.
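As a simplified picture of what translating between dialects involves, the sketch below converts text-only OpenAI-style messages into an Anthropic-style payload. It relies on one known difference: Anthropic's Messages API takes the system prompt as a top-level `system` field rather than as a message in the list. The function name is hypothetical, tool calls are ignored, and this is not Percolate's implementation.

```python
def openai_to_anthropic(messages):
    """Convert text-only OpenAI-style chat messages to an Anthropic-style payload.

    Anthropic's Messages API expects the system prompt as a top-level `system`
    field, so system messages are lifted out of the message list.
    """
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    turns = [
        {"role": m["role"], "content": m["content"]}
        for m in messages
        if m["role"] in ("user", "assistant")
    ]
    payload = {"messages": turns}
    if system_parts:
        payload["system"] = "\n".join(system_parts)
    return payload


openai_messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "List some pets that are sold."},
    {"role": "assistant", "content": "Rex and Tom were sold."},
]
anthropic_payload = openai_to_anthropic(openai_messages)
```

Tool calls and tool results differ more substantially between dialects and would need their own mapping.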

To learn more about how Percolate implements message structures and API calls in these dialects, see this Medium article.

Multi-modal indexing and search

Percolate is not only an agent orchestration framework. It is also (and more importantly) a database, and a multi-modal one at that. Percolate is built on Postgres and we have added extensions for:

- Graph (AGE Graph extension)

- Vector (pgvector extension)

- Key-value lookup (using the graph)

- Relational

- HTTP (using the HTTP extension for making tool calls, requesting embeddings or calling language model APIs from the database).

By combining these modalities we can represent knowledge in different ways.

The basis of Percolate entities is structured, relational data. For example when we create agents, we store tables that represent their structure along with detailed field metadata. This field metadata, which can include field descriptions or information about embeddings, can be thought of as extended table metadata in Postgres. This metadata can be used to construct SQL queries from natural language from within the database.

Beyond constructing relational predicates, we can also do semantic search since content fields on the entity that are labelled with embedding providers are automatically semantically indexed i.e. we compute embeddings for these fields. When data are inserted, a database trigger will run a worker to build an embedding table, which can later be used for vector searching that table.
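The combination of a relational predicate with vector similarity can be shown with a toy in-memory version. The rows, the three-dimensional embeddings and the `hybrid_search` function are invented for illustration; in Percolate the embeddings live in database tables and come from an embedding provider.

```python
import math

# Toy rows: a relational field (status) plus a precomputed embedding per row.
# The vectors are invented; a real system would get them from an embedding model.
ROWS = [
    {"name": "Rex", "status": "sold", "embedding": [0.9, 0.1, 0.0]},
    {"name": "Tom", "status": "sold", "embedding": [0.1, 0.9, 0.0]},
    {"name": "Fido", "status": "available", "embedding": [1.0, 0.0, 0.0]},
]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def hybrid_search(query_embedding, status, limit=1):
    """Filter with a relational predicate, then rank by vector similarity."""
    candidates = [row for row in ROWS if row["status"] == status]
    ranked = sorted(
        candidates,
        key=lambda row: cosine(query_embedding, row["embedding"]),
        reverse=True,
    )
    return [row["name"] for row in ranked[:limit]]
```

Here `hybrid_search([1.0, 0.0, 0.0], "sold")` skips Fido, the closest vector overall, because the predicate removes it before ranking.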

More complex queries and indexes can include graph indexes. Graph indexes complement vector indexes which, alone, are insufficient for RAG applications. For an introduction to how we think about this in Percolate see this article.

In summary, Percolate provides multiple ways to index content. Multi-modal indexes mean that AI agents can use whichever type of query best suits the user's question. Indexes are constantly built in the background so the system learns over time.

Walkthrough and Code Examples

Getting started

To run Percolate locally, clone the repo

git clone https://github.com/Percolation-Labs/percolate.git

and from the repo, launch the docker container so you can connect with your preferred database client. This assumes you have installed Docker e.g. Docker Desktop.

docker compose up -d #The connection details are in the docker-compose file

The easiest way to get started is to run the init CLI option. This will add some test data and also sync API tokens for using language models from your environment into your local database instance. This requires Poetry to be installed. Note that the default examples assume you have an OPEN_AI_API key, but any models can be added and used.

    # cd clients/python/percolate to use poetry to run the cli
    # the first time install the deps with `poetry install`
    poetry run p8 init

The above command will load standard API keys into your local environment and also add some test content for testing including indexing some Percolate code files for semantic searches and adding a test API to test function calling.

You can ask a question to make sure things are working. Using your preferred Postgres client log in to the database on port 5438 using password:password and ask a question.

Select * from percolate('How does Percolate make it easy to add AI to applications?')

Creating and using an agent in Python

While Percolate allows for agents to be run from the database, you can also use the Python client. Agents are just Pydantic objects. You can add the system prompt as a docstring and add fields to describe structured output. In Percolate all objects can be registered as database tables to save agent states. (Conversely, all tables are treated as agentic entities that you can talk to.)

While you can add and use functions directly on your object, Percolate is best used with external functions because then agents can be saved declaratively and used in the database. Below is an example of referencing external functions. In this case it uses a get_pet_findByStatus, which is an endpoint from a test API registered in the initialization step above.

    import percolate as p8
    from pydantic import BaseModel, Field
    import typing
    import uuid
    from percolate.models import DefaultEmbeddingField

    class MyFirstAgent(BaseModel):
        """You are an agent that provides the information you are asked and a second random fact"""
        # because it has no config it will save to the public database schema
        id: str | uuid.UUID
        name: str = Field(description="Task name")
        # the default embedding field just sets json_schema_extra.embedding_provider so you can do that yourself
        description: str = DefaultEmbeddingField(description="Task description")

        @classmethod
        def get_model_functions(cls):
            """Return the functions I use, keyed by the name stored in the database"""
            return {
                'get_pet_findByStatus': "a function i use to look up pets based on their status",
            }

To use this agent you can spin it up in Percolate.

    import percolate as p8

    agent = p8.Agent(MyFirstAgent)
    agent('can you tell me about two pets that were sold')

Running agents from the database and resuming sessions

Percolate's major strength is that it can run agents declaratively, for example in the database.

You can register any agent using the repository for that object type...

p8.repository(MyFirstAgent).register()

This does a number of things:

- It creates a table based on the given schema, which the agent can use to save structured state.

- It adds database artifacts for managing column embeddings and for triggering embedding creation.

- It adds other metadata for managing entities and graph relationships.

- It adds the agent to the list of agents in the database so it can be searched and used dynamically.

In the database we can now run

select * from percolate_with_agent('list some pets that are sold', 'MyFirstAgent')

This is equivalent to using p8.Agent(MyFirstAgent).run('list some pets that are sold') except now it's run entirely in the database. This means discovering the agent and prompt (which has an external function called get_pet_findByStatus to load), loading the function by name and "activating" it, then calling the function and summarizing the data.

Each iteration or turn is audited in the database in the AIResponse table, and it's possible to resume sessions from their saved state.

Note: The examples here run synchronous agents just to illustrate. In production systems a background worker is used to manage turns without locking database resources.

Other examples

To see more examples, check out our Medium articles.

Why Percolate

Key Features

- ✅ Storage and search for agents and tools

- ✅ Data tier or Python agent orchestrator with dynamic function loading and agent handoffs

- ✅ Tracing and auditing for free as everything is persisted by default

- ✅ Workers to automatically build vector and graph indexes

- ✅ Multi-modal entity search that combines SQL predicates, semantic search and graph relationships

- ✅ OpenAPI function proxy

- ✅ Works with any language model using Google, Anthropic or OpenAI API (standard) dialects

- ✅ Session & turn auditing

- ✅ MCP client for attaching MCP servers (coming soon)

- ✅ Managed cloud recipes for Kubernetes (coming soon)

Key Use cases

- ✅ Persistence proxy when calling LLMs/Services

- ✅ Personalization layer to track conversation and integrate data into your agent workflows

- ✅ Research assistants

- ✅ Implementing multi-modal RAG

Tech stack and languages

- Docker/Kubernetes

- Postgres / Cloud Native Postgres Operator for Kubernetes

- SQL

- Cypher

- Python

- FastHTML admin dashboard (coming soon)

The Future

This is only the beginning. Percolate is a very young project started in January of this year. Percolate is an ambitious project that aims to go beyond what today's agent frameworks can do by pioneering a convergence between agent orchestration and information retrieval. Data and memory are what power agentic systems. "Solving" memory is the holy grail for achieving augmented general intelligence, where humans and AI work together to create at the speed of thought.

Please take a moment to check out the repository and get back to us with issues, feature requests and general comments. If Percolate sounds interesting to you please give the repo a ⭐

Stay in touch by subscribing to our conceptual overviews on Substack and our more granular, technical deep-dives over on Medium.

Thanks for reading about Percolate!