Abstract

3D multi-object tracking plays a critical role in autonomous driving by enabling the real-time monitoring and prediction of multiple objects' movements. Traditional 3D tracking systems are typically constrained by predefined object categories, limiting their adaptability to novel, unseen objects in dynamic environments. To address this limitation, we introduce open-vocabulary 3D tracking, which extends the scope of 3D tracking to include objects beyond predefined categories. We formulate the problem of open-vocabulary 3D tracking and introduce dataset splits designed to represent various open-vocabulary scenarios. We propose a novel approach that integrates open-vocabulary capabilities into a 3D tracking framework, allowing for generalization to unseen object classes. Our method effectively reduces the performance gap between tracking known and novel objects through strategic adaptation. Experimental results demonstrate the robustness and adaptability of our method in diverse outdoor driving scenarios. To the best of our knowledge, this work is the first to address open-vocabulary 3D tracking, presenting a significant advancement for autonomous systems in real-world settings.

Introduction

3D multi-object tracking involves detecting and continuously tracking multiple objects in the physical space across consecutive frames.

This task is essential in autonomous driving as it allows the vehicle to monitor and predict the movements of multiple objects in its environment. The system can make informed decisions for safe navigation, collision avoidance, and trajectory planning by accurately identifying, localizing, and tracking objects over time. This capability is vital for real-time responsiveness and the overall reliability of autonomous systems.

Current 3D tracking systems are structured around benchmarks with labeled datasets, driving the development of methods that excel at tracking predefined categories such as cars, pedestrians, and cyclists. These tracking systems maximize performance within this closed set by detecting and associating known objects across frames. However, these systems are limited by their reliance on a closed set of classes, making them less adaptable to new or unexpected objects in dynamic real-world environments. Although effective within defined benchmarks, their performance diminishes when encountering unknown objects.

To overcome these limitations, open-vocabulary systems are needed to enable the detection and recognition of objects beyond the predefined categories. In 3D tracking, open-vocabulary capabilities are essential for enhancing adaptability and robustness in dynamic environments, where a vehicle may encounter unexpected or rare objects not covered by the training data. By incorporating open-vocabulary approaches, 3D tracking systems can better generalize to new objects, ensuring safer and more reliable autonomous navigation in diverse real-world scenarios.

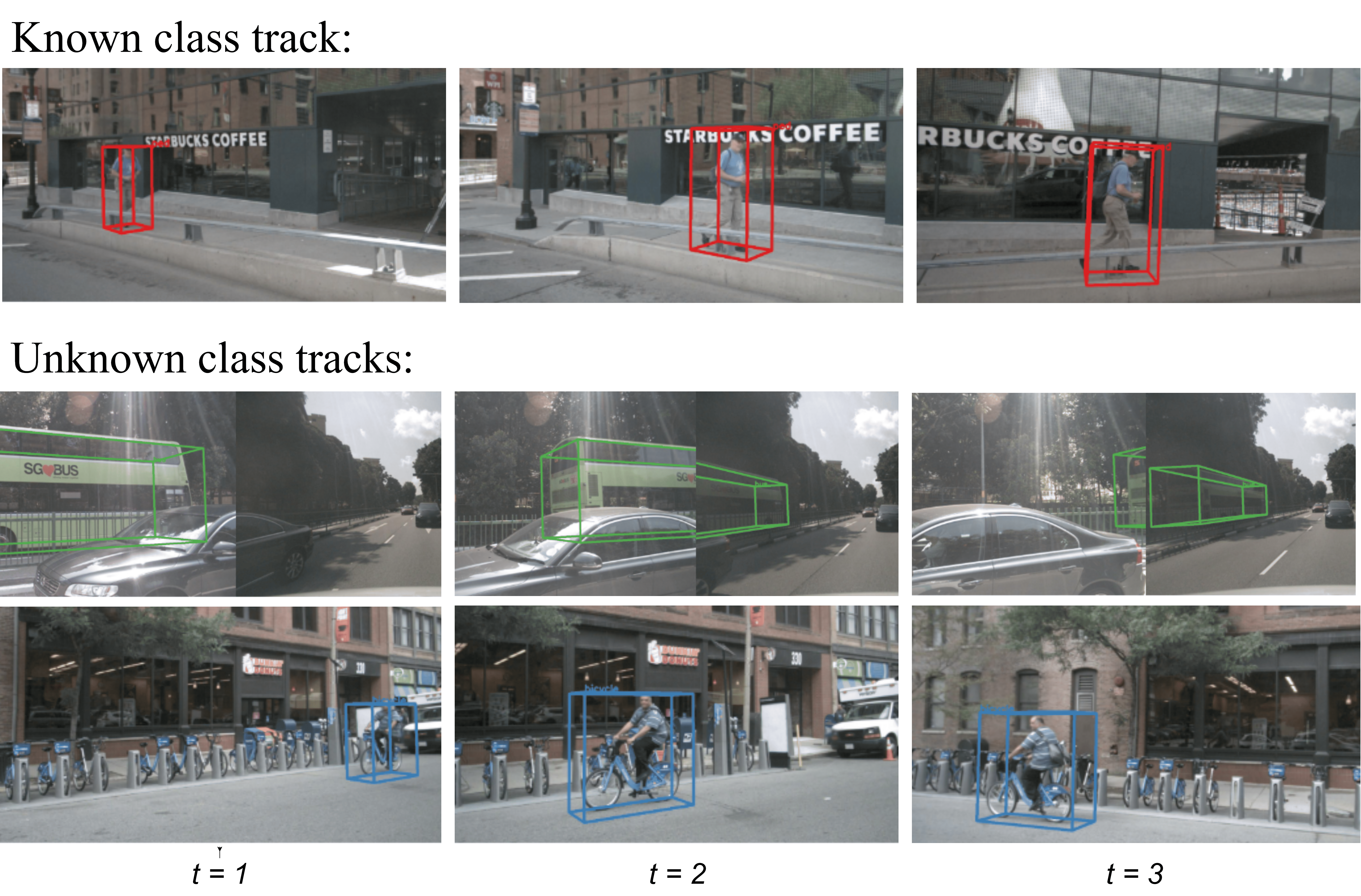

In this paper, we introduce the task of open-vocabulary 3D tracking, where trajectories of known and unknown object classes are estimated in the real 3D space by linking their positions across consecutive frames.

We formulate the open-vocabulary tracking problem, propose various splits to evaluate the performance across known and unknown classes, and introduce a novel approach that bridges the performance gap between tracking known and unseen classes.

Our approach leverages the strengths of 2D open-vocabulary methods and existing 3D tracking frameworks to generalize to unseen categories effectively.

Through extensive experiments, we demonstrate that our proposed method achieves strong results through our adaptation strategies, providing a robust solution for open-vocabulary 3D tracking. Figure below shows an example output of our solution for the open-vocabulary 3D tracking task.Our method can detect and track previously unseen object categories in three-dimensional space without relying on predefined labels.

Methodology

In this section, we present our approach for 3D open-vocabulary tracking. Our proposed system integrates 3D proposals, 2D image cues, and vision-language models to classify and track objects in an open-vocabulary setting, including classes not encountered during training.

3D Tracker

We adapt our 3D open-vocabulary tracking approach from the recent 3D multi-object tracker 3DMOTFormer (3DMOTFormer).

3DMOTFormer is a tracking-by-detection framework that leverages graph structures to represent relationships between existing tracks and new detections. Features such as position, size, velocity, class label, and confidence score are processed through a graph transformer and updated based on interactions. Within the decoder, edge-augmented cross-attention models how tracks and detections interact, with edges representing potential matches.

To match tracks with detections, affinity scores are predicted, indicating the likelihood of correspondence. The final step computes loss using positive target edges that share the same tracking ID. Ground truth tracking IDs are assigned by calculating 3D IoU between ground truth boxes and 3D detections, followed by Hungarian Matching for one-to-one ID assignment. Loss

While 3DMOTFormer performs well for the closed set, it struggles to track unseen object classes due to its reliance on class-specific information during training. The tracker expects identified 3D boxes to generate edge proposals and then process those through the graph transformer. In a situation where it may receive a new label or an unlabelled object, the model will fail to track it. 3DMOTFormer also uses class-specific statistics within its pipeline, such as maximal class velocities, to compute association edges between tracks and new detections. Such information regarding unseen, unfamiliar objects that the system may encounter for the first time is not available in a real-world open-vocabulary setting.

Class-Agnostic Tracking

To enable tracking objects irrespective of their class label, we modify the tracker to utilize only object position, dimensions, heading angle, and velocity as the initial features. Object detections from a given detector are treated as proposals (class-agnostic), where the class labels and confidence scores are dropped and not utilized.

To compute potential association edges, we replace class-specific distance thresholds with a fixed threshold, achieving a balance between maintaining true edges and avoiding unnecessary associations.

We change the ground truth track ID assignment by computing 3D IoU between ground truth boxes

Confidence Score Prediction

As discussed earlier, we drop class labels and confidence scores from the detection features to ensure the tracker is independent of any information specific to object classes.

As a result, the initial output from the system suffers from a lack of representation of the objectness score of each box. To overcome this issue, we use the confidence scores available for

where

2D Driven Open Vocabulary Labels

While we train the tracker by removing all class-specific dependencies, we require a strong open-vocabulary classification for all object tracks, known and unknown, at test time.

We leverage vision-language models to match regions in the images with text prompts, enabling them to detect and classify objects for which they have not been explicitly trained.

2D detections on multi-view images from the vehicle are obtained from an open-vocabulary 2D detector, which is prompted with all possible labels—both

The system allocates class labels to the 3D proposals by first projecting the 3D boxes onto multi-view images. The labels are then derived from the highest IoU overlap with 2D detections on the image plane. Projections of the 3D detections that do not overlap with a 2D open-vocabulary detection are labeled as 'unknown.' We explicitly identify these boxes at the end of the tracking process.

Track Consistency Scoring

At the output of the tracker, we apply a track smoothing module that addresses the issue of unknown boxes that do not match any 2D detections from the images, as well as improves label consistency throughout tracks to account for inaccuracies in the open-vocabulary detector outputs and errors accrued during projection from 3D to 2D.

To calculate the most accurate class for each track, we first compute the weight of an object based on its detected bounding box in an image, with objects at a greater distance assigned a smaller weight. The depth is derived from the size of the bounding box, the object's vertical position in the image for perspective correction, and the aspect ratio of the bounding box to ensure uniformity for all classes.

where:

refers to the 2D detected box, refers to the image, points to the vertical center of the box, is the perspective correction factor, is the aspect ratio threshold, .

We then compute the weight as:

where

The smoothing module then uses the modified confidence score and finds the average modified confidence for each class in the track, along with their occurrence, selecting the class with the highest combination. Any track that is composed entirely of unknowns is dropped.

Open-Vocabulary Tracking Splits

Our method is evaluated in outdoor environments using custom data splits based on common object types and situations. Fig. below shows various statistics from nuScenes and subsequent splits. One split follows the common open-vocabulary approach, treating rarer classes as novel. Another split differentiates between highway and urban objects based on the greater activity and mobility of some classes within urban areas. A final split focuses on objects with high variability in shape and size, represented by the average LiDAR points per object. These splits provide a robust framework for evaluating open-vocabulary tracking in realistic and diverse outdoor settings.

Results

Table 1: Main Results

Results on the nuScenes validation set using 3D proposals from CenterPoint. We compare the baseline and our open-vocabulary method across various splits. Closed-set 3DMOTFormer results are shown for reference. Our approach significantly improves over the baseline, particularly for novel classes, in all splits. Performance is reported for primary metrics AMOTA

| Overall | Bicycle | Bus | Car | Motorcycle | Pedestrian | Trailer | Truck | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | AMOTA | AMOTP | |

| Split 1 - Rare | ||||||||||||||||

| Baseline (w/o unknowns) | 0.415 | 0.868 | 0.323 | 1.051 | 0.403 | 1.030 | 0.656 | 0.432 | 0.194 | 1.336 | 0.717 | 0.399 | 0.395 | 1.069 | 0.218 | 0.758 |

| Baseline (w/ unknowns) | 0.483 | 0.785 | 0.424 | 0.853 | 0.423 | 0.952 | 0.678 | 0.391 | 0.288 | 1.293 | 0.746 | 0.350 | 0.469 | 0.975 | 0.349 | 0.684 |

| Open3DTrack (Ours) | 0.578 | 0.783 | 0.445 | 0.988 | 0.612 | 0.918 | 0.779 | 0.390 | 0.469 | 1.104 | 0.752 | 0.403 | 0.477 | 1.037 | 0.511 | 0.638 |

| Split 2 - Urban | ||||||||||||||||

| Baseline (w/ unknowns) | 0.469 | 0.740 | 0.301 | 0.982 | 0.481 | 0.907 | 0.657 | 0.392 | 0.537 | 0.549 | 0.482 | 0.634 | 0.455 | 0.985 | 0.367 | 0.729 |

| Open3DTrack (Ours) | 0.590 | 0.677 | 0.400 | 0.894 | 0.683 | 0.820 | 0.788 | 0.387 | 0.702 | 0.422 | 0.548 | 0.599 | 0.488 | 0.981 | 0.522 | 0.635 |

| Split 3 - Diverse | ||||||||||||||||

| Baseline (w/ unknowns) | 0.438 | 0.813 | 0.389 | 0.493 | 0.640 | 0.499 | 0.627 | 0.402 | 0.275 | 1.311 | 0.564 | 0.590 | 0.424 | 0.993 | 0.144 | 1.406 |

| Open3DTrack (Ours) | 0.536 | 0.804 | 0.524 | 0.581 | 0.770 | 0.543 | 0.708 | 0.395 | 0.438 | 1.143 | 0.564 | 0.648 | 0.470 | 1.036 | 0.276 | 1.281 |

| Upper Bound (3DMOTFormer) | 0.710 | 0.521 | 0.545 | 0.429 | 0.853 | 0.527 | 0.838 | 0.382 | 0.723 | 0.459 | 0.812 | 0.335 | 0.509 | 0.936 | 0.690 | 0.576 |

Table 1 shows results on the nuScenes validation set using 3D proposals from CenterPoint detector. Our method significantly improves AMOTA performance, particularly for novel classes.

Table 2: Detector Generalization

Results on the nuScenes validation set for detector generalization for open-vocabulary 3D tracking. Results are shown for base and novel class groups from split 3, with the tracker trained on detections from the source method and tested on 3D detections from the target method.

| Source | Target | Base AMOTA | Base AMOTP | Novel AMOTA | Novel AMOTP | Overall AMOTA |

|---|---|---|---|---|---|---|

| CenterPoint | CenterPoint | 0.630 | 0.617 | 0.509 | 1.003 | 0.578 |

| MEGVII | MEGVII | 0.586 | 0.7055 | 0.477 | 1.1207 | 0.539 |

| BEVFusion | BEVFusion | 0.662 | 0.5535 | 0.410 | 1.054 | 0.554 |

| CenterPoint | MEGVII | 0.579 | 0.7106 | 0.496 | 1.093 | 0.543 |

| CenterPoint | BEVFusion | 0.616 | 0.556 | 0.458 | 0.982 | 0.548 |

Our approach demonstrates consistent performance across various 3D proposal sources. Training and testing on the same detector generally yields the best results, with BEVFusion achieving the highest base class performance but lower novel class performance, potentially due to a focus on high-quality base class detections.

Conclusion

In conclusion, we introduce a novel approach for open-vocabulary 3D multi-object tracking, addressing the limitations of closed-set methods. By leveraging 2D open-vocabulary detections, and applying strategic adaptations to a 3D tracking framework such as class-agnostic tracking and confidence score prediction, we demonstrate improvements in tracking novel object classes. Our experiments highlight the robustness of our method. These contributions represent a meaningful step toward more flexible 3D tracking solutions for autonomous systems, enhancing their ability to generalize in dynamic environments.