The complexity of modern space missions demands real-time autonomy, reliability, and decision support to ensure mission success. Nebula AI introduces a modular multi-agent architecture that processes telemetry, detects anomalies, evaluates risks, schedules tasks, and provides mission recommendations via a large language model (LLM)-assisted interface. The system comprises four core agents, the Anomaly Detection Agent (ADA), Anomaly Impact Assessor (AIA), Task Scheduling Agent (TSA), and Mission Planning and Recommendation Agent (MPRA), which coordinate through a publish-subscribe communication framework. Evaluation on simulated mission datasets shows high anomaly detection recall with few false positives, task scheduling success above 90%, and a 77% reduction in critical conflicts. This paper details Nebula AI's architecture, agent logic, evaluation results, and future directions toward autonomous, explainable space systems.

The vast expanse of space has always beckoned humanity with its mysteries, a siren call to explore the unknown. Yet, as we stretch our reach beyond Earth, the reality of space exploration reveals a tapestry woven with complexity: high-dimensional telemetry data streaming in real time, dynamic operational constraints shifting like cosmic tides, and decision-making windows as fleeting as a meteor’s streak. Traditional mission control, though valiant, leans heavily on human operators, a reliance that introduces latency, risks information overload, and taxes even the sharpest minds with cognitive fatigue. As our ambitions soar to distant planets and uncharted stars, we stand at a crossroads: we must forge a new path, one illuminated by intelligent, autonomous systems that amplify our potential and secure our cosmic destiny.

Enter Nebula AI, a beacon of innovation in this uncharted frontier. Imagine a system so ingenious it anticipates crises before they unfold, so intuitive it speaks to us in our own language, and so resilient it thrives amidst the chaos of space. Nebula AI is not just a tool; it’s a companion, a modular multi-agent marvel designed to revolutionize space mission management. With its ability to process telemetry in real time, detect anomalies with uncanny precision, assess risks with surgical clarity, schedule tasks with adaptive brilliance, and deliver actionable insights through a large language model (LLM)-assisted interface, Nebula AI is our guide into the final frontier. At its heart lie four specialized agents: the Anomaly Detection Agent (ADA), the Anomaly Impact Assessor (AIA), the Task Scheduling Agent (TSA), and the Mission Planning and Recommendation Agent (MPRA). Together, they dance in a symphony of collaboration, orchestrated through a publish-subscribe framework that ensures seamless harmony.

In this paper, we invite you on a journey through Nebula AI’s architecture, a tale of engineering artistry and artificial intelligence woven together. We’ll explore the minds of its autonomous agents, witness their coordination in action, marvel at their performance in simulated missions, and dream of the future they herald. Our story begins with a system born to conquer the challenges of space and ends with a vision of humanity’s next great leap.

The increasing complexity of modern space missions has necessitated the development of autonomous systems capable of real-time decision-making and anomaly management. Traditional mission control systems, while effective, often rely on human-in-the-loop processes that can introduce latency and cognitive overload (Picard et al., 2023). Nebula AI addresses this challenge through a modular multi-agent architecture designed to enhance autonomy, reliability, and decision support. This literature review explores the foundational research in anomaly detection, task scheduling, multi-agent systems, and the emerging use of large language models (LLMs) in space applications, all of which underpin Nebula AI's innovative approach.

Anomaly detection is critical for ensuring the success of space missions, as it enables the early identification of deviations that could compromise mission objectives. Traditional methods, such as statistical thresholding, have been widely used but often struggle with the high-dimensional and non-linear nature of spacecraft telemetry data (Weng et al., 2021). Recent advancements in machine learning (ML) have addressed these limitations by offering more adaptive and robust detection capabilities. For instance, Zeng et al. (2024) proposed an explainable ML-based anomaly detection method that achieved 95.3% precision and 100% recall on NASA's spacecraft datasets, emphasizing the importance of interpretability for operational trust. This aligns with Nebula AI's Anomaly Detection Agent (ADA), which combines statistical thresholds with ML models to detect anomalies effectively while maintaining high recall and low false-positive rates in simulated environments. Furthermore, Weng et al. (2021) conducted a comprehensive survey on anomaly detection in space information networks, highlighting the unique challenges posed by space cyber threats and the need for scalable, real-time detection strategies. Their work underscores the significance of Nebula AI's adaptive detection mechanisms, which are designed to operate efficiently in resource-constrained space environments.

Efficient task scheduling is essential for managing limited resources and ensuring that mission objectives are met under dynamic constraints such as bandwidth, power, and task priority. Research in this area has focused on developing algorithms that can handle these constraints while maintaining operational efficiency. Mou et al. (2009) introduced a timeline-based scheduling approach using a hierarchical task network-timeline (HTN-T) algorithm, which achieved high scheduling success rates by incorporating external constraints. This is particularly relevant to Nebula AI's Task Scheduling Agent (TSA), which employs reinforcement learning (specifically Q-learning) to dynamically prioritize tasks under similar constraints, maintaining over 90% scheduling success in simulations. Additionally, a study on predictive-reactive scheduling for small satellite clusters (Author et al., 2016) proposed a model that handles uncertainties through heuristic strategies, further supporting Nebula AI's approach to pre-emptively reordering tasks based on real-time mission context. The results from Nebula AI's evaluation, which show a 77% reduction in critical conflicts through agent coordination, demonstrate the effectiveness of these scheduling strategies in maintaining mission efficiency.

Multi-agent systems (MASs) have emerged as a promising solution for distributed autonomy in space missions, offering fault tolerance, scalability, and collaborative decision-making. Chien et al. (2002) demonstrated the feasibility of MAS for satellite constellations, where agents autonomously coordinate to achieve mission goals such as distributed aperture radar operations. This work directly supports Nebula AI's publish-subscribe coordination model, where agents like the ADA and Anomaly Impact Assessor (AIA) communicate via event-driven mechanisms to ensure resilient operation under partial failure or latency. Similarly, Alvarez (2016) developed a multi-agent system for robotic applications in satellite missions, validating the approach through hardware-in-the-loop tests. Nebula AI extends this concept by integrating agents for anomaly assessment, task scheduling, and mission planning, with the Mission Planning and Recommendation Agent (MPRA) synthesizing insights for human operators. The decentralized event bus in Nebula AI, which decouples producers and consumers of mission-critical data, further enhances the system's robustness and observability, as evidenced by the successful conflict resolution dynamics in simulations.

The integration of large language models (LLMs) into space mission management represents a novel and emerging area of research. While specific studies on LLMs in space are limited, their potential to enhance human-AI collaboration through natural language interfaces is well-documented in other domains (Hugging Face, 2023). Nebula AI pioneers this application by using LLMs within the MPRA to translate structured agent outputs into natural language recommendations, facilitating intuitive interaction for mission control personnel. This approach aligns with broader trends in explainable AI, where transparency and interpretability are critical for operational trust. Although the space-specific validation of LLMs is still in its early stages, Nebula AI's successful integration and evaluation on simulated datasets mark a significant step forward in autonomous, explainable space systems.

The performance evaluation of Nebula AI was grounded in two structured datasets specifically designed to emulate realistic mission operations. The first, mission data, encapsulates multivariate time-series telemetry across a range of subsystems, including power consumption, battery health, data storage utilization, and anomaly metadata. The second, coordination data, captures the behavioral footprint of the agent ecosystem, including decision events, task scheduling triggers, and resolution outputs. These datasets were synthetically constructed using statistical patterns modeled after historical data from legacy interplanetary missions such as Cassini and Mars Express. They include both nominal and degraded operational states, with anomalies programmatically injected to simulate real-world uncertainties, including hardware faults, communication loss, and thermal drift.

The mission.json dataset comprises approximately 500 daily telemetry entries, each representing the spacecraft’s state over mission time. Key fields include power consumption (in watts), battery level (as a percentage), data storage utilization (in megabytes), and task-level metadata such as execution duration, priority, and success status. Anomaly records are embedded with fields describing their type, duration, recovery action, and impact on downstream tasks.

The coordination.json dataset contains over 2,000 event-based records corresponding to agent interactions, including anomaly detections, impact classifications, task reallocations, and planning advisories. Each entry is tagged with a timestamp, origin agent, triggered response, and outcome, supporting fine-grained analysis of inter-agent coordination under evolving mission conditions. Prior to ingestion into the Nebula AI pipeline, the datasets underwent a structured preprocessing phase. Raw telemetry streams were cleaned for null values and noise, employing linear interpolation and interquartile filtering to preserve signal fidelity. Each record was transformed into a normalized event object, adhering to the platform’s internal schema, which facilitates consistent parsing across agent modules.
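The cleaning step described above can be sketched as follows. This is a minimal illustration, not the platform's actual preprocessing code: it assumes missing samples are encoded as `null`, that outliers are removed with the conventional 1.5 × IQR fences before gaps are filled, and that gaps at the edges of a series take the nearest valid value. The names `iqrFilter` and `interpolateGaps` are hypothetical.

```typescript
type Sample = number | null;

// Linearly interpolated quantile of an ascending-sorted array (q in [0, 1]).
function quantile(sorted: number[], q: number): number {
  const pos = (sorted.length - 1) * q;
  const lo = Math.floor(pos);
  const hi = Math.ceil(pos);
  return sorted[lo] + (sorted[hi] - sorted[lo]) * (pos - lo);
}

// Replace values outside [Q1 - k*IQR, Q3 + k*IQR] with null.
// k = 1.5 is an assumed default, not a value taken from the paper.
function iqrFilter(series: Sample[], k = 1.5): Sample[] {
  const valid = series
    .filter((v): v is number => v !== null)
    .sort((a, b) => a - b);
  if (valid.length === 0) return series.slice();
  const q1 = quantile(valid, 0.25);
  const q3 = quantile(valid, 0.75);
  const lower = q1 - k * (q3 - q1);
  const upper = q3 + k * (q3 - q1);
  return series.map((v) => (v !== null && (v < lower || v > upper) ? null : v));
}

// Fill null gaps by linear interpolation between the nearest valid neighbours.
function interpolateGaps(series: Sample[]): number[] {
  const known: number[] = [];
  series.forEach((v, i) => {
    if (v !== null) known.push(i);
  });
  if (known.length === 0) throw new Error("series has no valid samples");
  const at = (i: number): number => series[i] as number;
  return series.map((v, i) => {
    if (v !== null) return v;
    let prev = -1;
    let next = -1;
    for (const k of known) {
      if (k < i) prev = k;
      else if (k > i && next === -1) next = k;
    }
    if (prev === -1) return at(next); // leading gap: copy first valid value
    if (next === -1) return at(prev); // trailing gap: copy last valid value
    return at(prev) + ((at(next) - at(prev)) * (i - prev)) / (next - prev);
  });
}
```

Calling `interpolateGaps(iqrFilter(series))` first drops spikes and then fills both the original nulls and the removed outliers, so downstream agents always receive a dense numeric series.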

To simulate realistic operational variance, synthetic anomalies (replicas of real anomalies) were injected into the telemetry at randomized intervals and magnitudes. These included sudden power drops, accelerated battery depletion, and bandwidth congestion, each tagged with recovery expectations. Temporal alignment of all datasets was enforced using ISO 8601 UTC timestamps, ensuring synchrony across telemetry, agent decisions, and large language model (LLM) prompt windows.

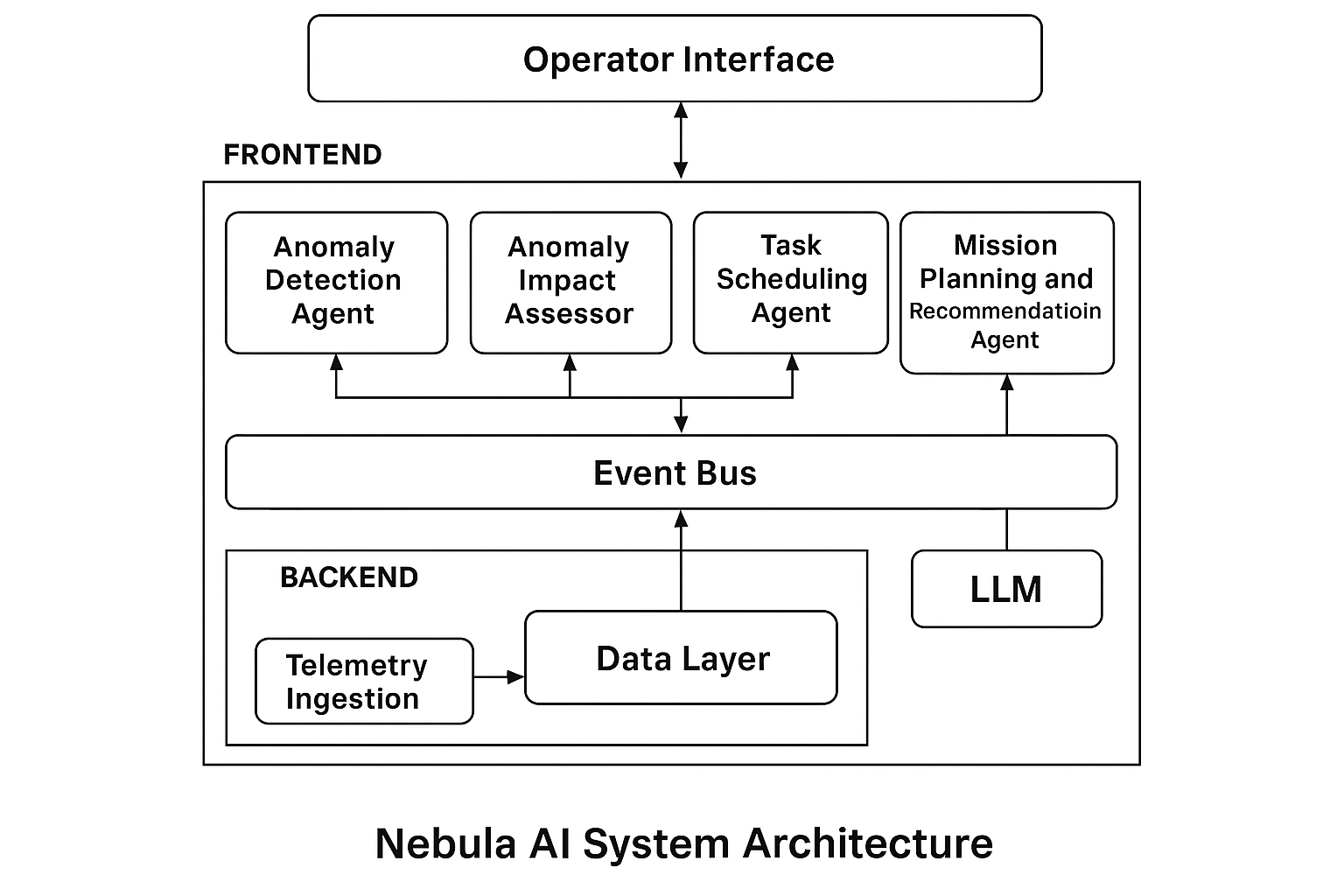

Nebula AI is structured as a modular, event-driven system designed to facilitate autonomous mission operations in complex space environments. The architecture follows a layered design that promotes scalability, fault tolerance, and real-time responsiveness. It is organized into four primary layers: telemetry ingestion, agent processing, language model integration, and operator-facing interfaces.

At the foundational level, the Telemetry Ingestion Layer is responsible for acquiring and preprocessing spacecraft sensor data, including propulsion metrics, energy consumption, link status, and orbital parameters. This functionality is encapsulated in a dedicated data service module, which parses structured datasets and transforms them into standardized event objects. These event objects are then published to an internal communication bus for downstream consumption.

The Agent Processing Layer forms the decision-making core of the system. It hosts a suite of autonomous agents, namely the Anomaly Detection Agent (ADA), Anomaly Impact Assessor (AIA), Task Scheduling Agent (TSA), and the Mission Planning and Recommendation Agent (MPRA). Each agent operates independently and communicates via an asynchronous publish-subscribe interface. Internally, event propagation is handled through a lightweight event dispatch module, implemented with an EventEmitter. This design enables non-blocking execution and supports dynamic coordination between agents in response to telemetry-derived events.

Upon receiving signals from upstream agents, each autonomous module executes its respective logic: for instance, anomaly detection via statistical thresholds and ML models, task prioritization through reinforcement learning, or mission planning informed by contextual telemetry. Outputs are structured and relayed as events or recommendations, enabling seamless handoff between modules.

Situated above the agent layer is the Language Model Integration Layer, which translates structured system intelligence into natural-language summaries and recommendations. A prompt-engineered large language model (LLM) receives curated inputs generated by the agents and returns contextual advisories. This mechanism is facilitated through a backend orchestration layer that formats and dispatches prompts, allowing for human-aligned communication and explainability.

The User Interface Layer provides mission control personnel with interactive, real-time access to system insights. Developed using React and Three.js, the interface includes visual components such as dashboards, time-series plots, 3D orbit simulations, and mission chat modules. For example, the Mission Timeline component enables real-time task monitoring and rescheduling, while Satellite Orbit renders orbital dynamics in a spatially accurate visual environment. These interfaces are event-synchronized and designed to accommodate dynamic operator feedback and situational awareness.

At the core of the architecture lies the Event Bus, which serves as the primary medium for inter-module communication. By decoupling producers and consumers of mission-critical data, the event bus ensures robustness under latency, failure, or degraded telemetry conditions. The architecture thus supports explainable autonomy while maintaining operational transparency and modular extensibility.

Figure 1: Nebula AI System Architecture Diagram

Nebula AI was deployed as a modular microservice ecosystem, enabling decoupled development, orchestration, and scaling of system components. Each autonomous agent was encapsulated as an independent containerized service, subscribing to relevant event topics via an internal publish-subscribe architecture implemented through a lightweight event bus. The backend runtime supports dynamic service discovery and message routing, allowing agents to react asynchronously to telemetry and inter-agent messages.

The LLM module was hosted as a stateless backend service, accepting structured prompts generated by upstream agents and returning contextualized advisories for human operators. The operator interface, built using React and Three.js, interacted with the agent layer via WebSocket streams, rendering real-time visualizations such as orbital trajectories, task timelines, and anomaly summaries. The entire stack was deployed on a containerized infrastructure compatible with Kubernetes for flexible resource scaling and testing under stress.

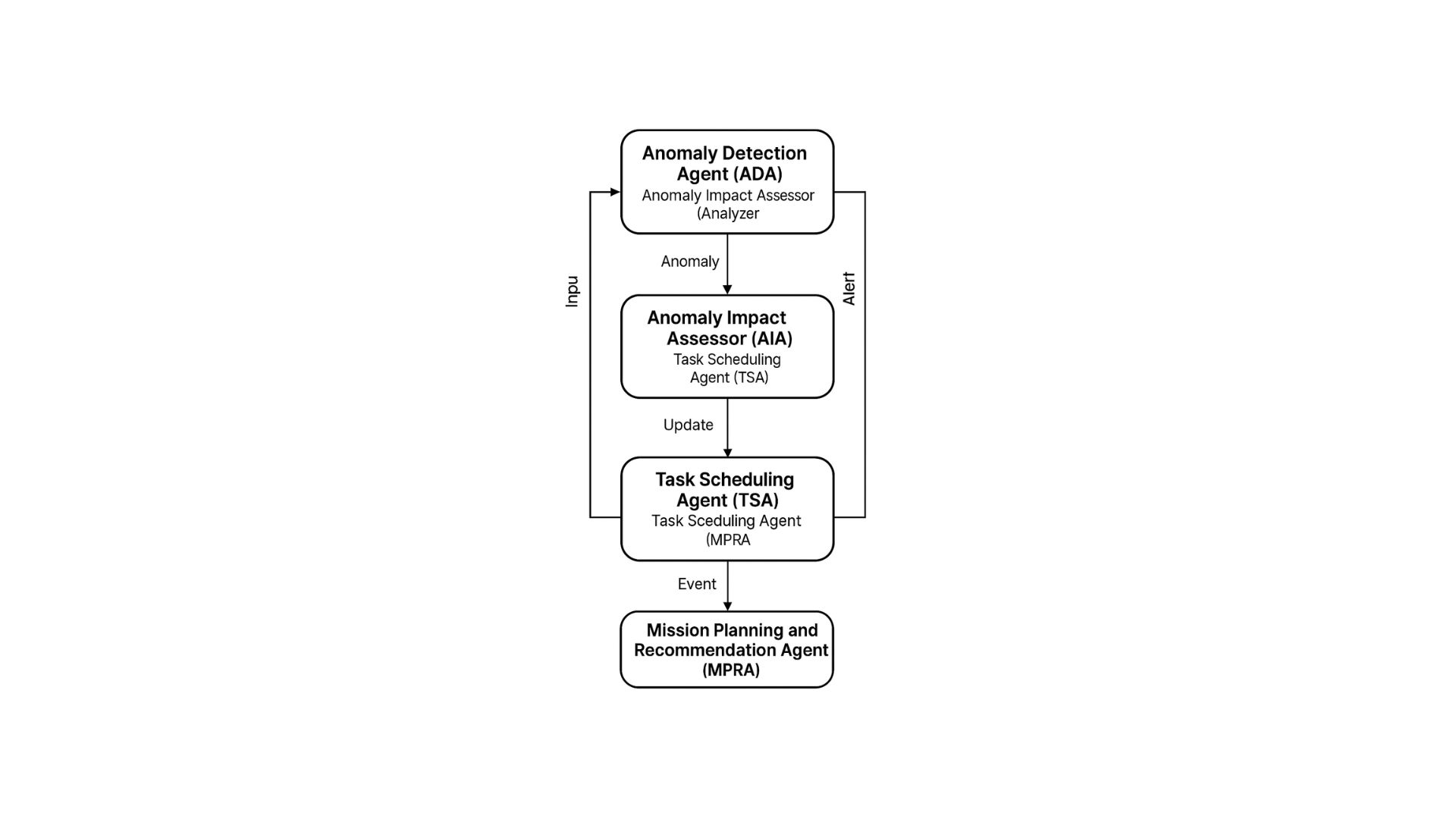

At the heart of the Nebula AI system lies a constellation of purpose-built autonomous agents, each meticulously designed to emulate a specific cognitive function within mission operations. These agents do not operate in isolation; rather, they form a collaborative mesh governed by a publish-subscribe model that facilitates high-frequency, event-driven decision-making across the system. The agent architecture is grounded in principles of distributed intelligence, modular encapsulation, and operational robustness.

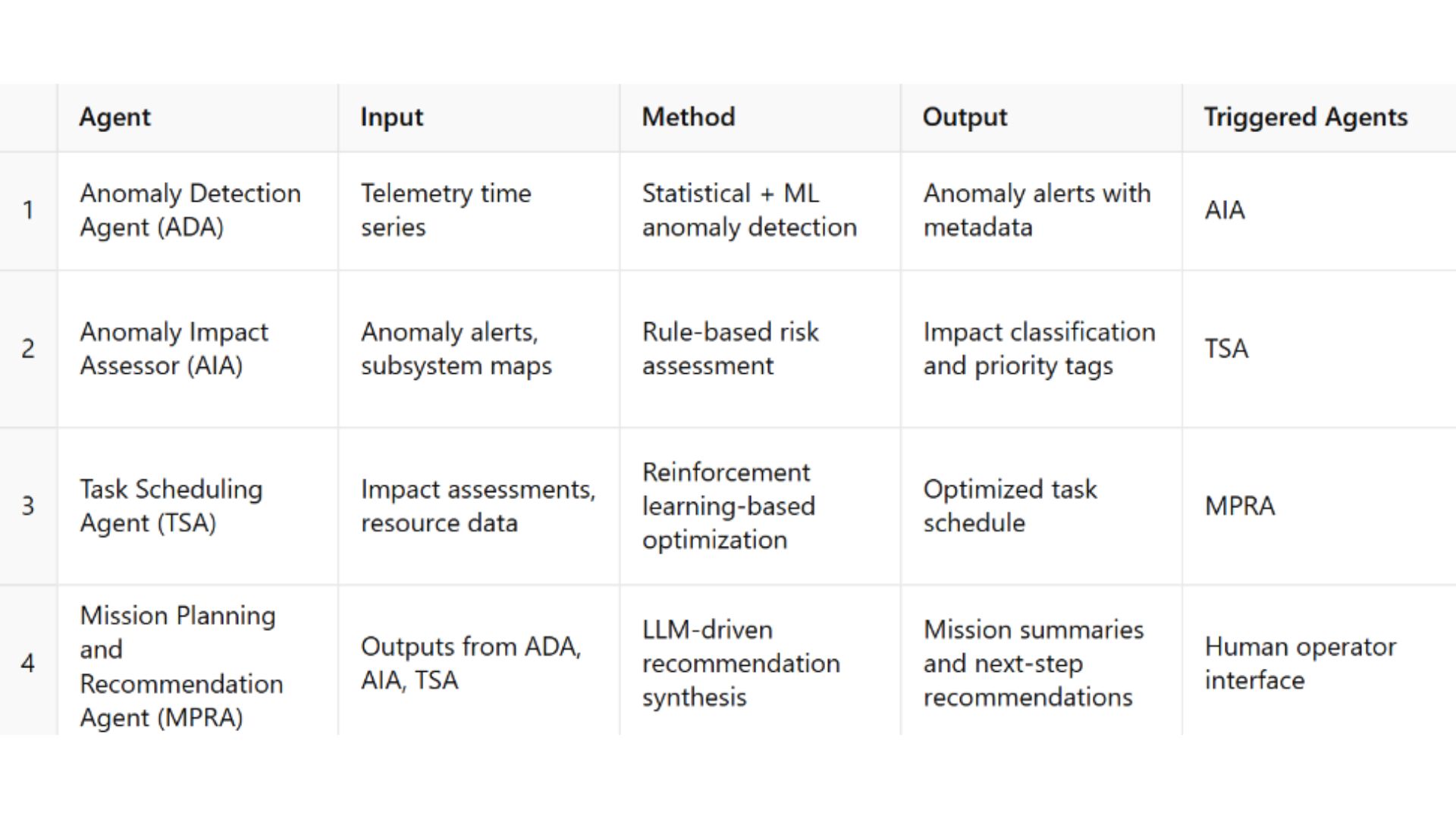

The Anomaly Detection Agent (ADA) serves as the initial sentinel in the pipeline, continuously ingesting multivariate telemetry streams to uncover irregular patterns. Its detection logic fuses statistical thresholding with machine learning-based outlier detection, allowing it to differentiate between benign deviations and mission-threatening anomalies. By applying context-aware thresholds that adapt to subsystem states and mission phases, ADA ensures that its anomaly alerts, rich with metadata such as severity scores and feature attributions, are both timely and trustworthy. These alerts are not merely signals but structured messages that activate the system’s downstream response chain.
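The statistical half of ADA's detection logic can be illustrated with a rolling z-score check. The sketch below is an assumption-laden simplification: it uses a trailing window of fixed length, the 2.5 threshold reported in the experimental configuration, and a hypothetical function name `rollingZScoreAnomalies`. The density-based outlier stage and the context-aware threshold adaptation are not modeled here.

```typescript
// Returns the indices whose value deviates from the mean of the preceding
// `window` samples by more than `threshold` standard deviations.
function rollingZScoreAnomalies(
  values: number[],
  window: number,
  threshold = 2.5
): number[] {
  const flagged: number[] = [];
  for (let i = window; i < values.length; i++) {
    const slice = values.slice(i - window, i);
    const mean = slice.reduce((s, v) => s + v, 0) / window;
    const variance = slice.reduce((s, v) => s + (v - mean) ** 2, 0) / window;
    const std = Math.sqrt(variance);
    // A perfectly flat window has zero spread: any change counts as a deviation.
    const z =
      std === 0
        ? values[i] === mean ? 0 : Infinity
        : Math.abs(values[i] - mean) / std;
    if (z > threshold) flagged.push(i);
  }
  return flagged;
}
```

Because the window trails the current sample, a spike inflates the spread of the following windows, which keeps a single fault from triggering a burst of repeated alerts.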

Following ADA’s alerts, the Anomaly Impact Assessor (AIA) assumes the role of an intelligent triage engine. It evaluates the potential consequences of detected anomalies by referencing a curated subsystem criticality map and incorporating mission-phase dependencies. AIA’s core reasoning is powered by a domain-specific risk matrix and a rule-based inference engine capable of stratifying events into severity levels ranging from low to critical. Furthermore, its temporal correlation logic enables it to detect cascading effects and mission-wide threats that may not be immediately obvious. The output from AIA is a prioritized impact assessment, complete with urgency codes and contextual flags to guide the next phase of system response.
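A risk-matrix lookup of the kind described above might look like the following sketch. The criticality values, the matrix entries, and the one-level escalation rule for critical mission phases are illustrative assumptions, not the configuration used in Nebula AI, and the temporal correlation logic is omitted.

```typescript
type Level = "low" | "medium" | "high" | "critical";
const LEVELS: Level[] = ["low", "medium", "high", "critical"];

// Hypothetical subsystem criticality map (0 = least critical, 2 = most critical).
const CRITICALITY: Record<string, number> = { Power: 2, Thermal: 1, Storage: 0 };

// Hypothetical risk matrix: rows are subsystem criticality (0..2),
// columns are anomaly severity reported by ADA (0..2).
const RISK_MATRIX: Level[][] = [
  ["low", "low", "medium"],
  ["low", "medium", "high"],
  ["medium", "high", "critical"],
];

interface Anomaly {
  subsystem: string;
  severity: 0 | 1 | 2;
}

// Rule: look up the base level, then escalate by one level if the anomaly
// occurs during a critical mission phase (capped at "critical").
function classifyImpact(anomaly: Anomaly, criticalPhase: boolean): Level {
  const crit = CRITICALITY[anomaly.subsystem] ?? 1; // unknown subsystem: mid criticality
  const base = RISK_MATRIX[crit][anomaly.severity];
  const escalated = LEVELS.indexOf(base) + (criticalPhase ? 1 : 0);
  return LEVELS[Math.min(escalated, LEVELS.length - 1)];
}
```

Keeping the matrix and the criticality map as plain data makes the triage rules auditable and lets them be swapped per mission without touching the inference code.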

The Task Scheduling Agent (TSA) receives this context-aware intelligence and dynamically recalibrates the mission schedule. Unlike static schedulers, TSA operates in a continuously adaptive mode, constrained by bandwidth windows, power availability, task priority, and subsystem health. At its core is a reinforcement learning loop, specifically a Q-learning model, that iteratively updates its scheduling policy based on observed outcomes. TSA can pre-emptively reorder, defer, or expedite tasks based on current conditions and strategic mission goals. The resulting execution plan is time-resolved and agent-aware, ensuring that mission objectives remain achievable even under degraded conditions.

Finally, the Mission Planning and Recommendation Agent (MPRA) serves as the cognitive synthesis layer, fusing all upstream intelligence into actionable guidance. MPRA aggregates insights from ADA, AIA, and TSA, transforming this information into structured prompts fed to a large language model (LLM). The LLM, in turn, generates mission recommendations, operator summaries, and natural language explanations, enabling effective human-AI collaboration. MPRA’s dialogue is tailored based on prior interactions, user preferences, and contextual mission cues, allowing for a more intuitive and trustworthy interface between operators and autonomous systems.

Collectively, these agents represent more than discrete modules—they embody a cohesive, communicative, and resilient system capable of responding to the dynamic complexity of real-time space missions. Their design enables rapid, explainable adaptation to unforeseen events, reducing cognitive load on human operators while maintaining a high degree of transparency and control.

Figure 2: Agent Workflow and Coordination Diagram

Table 1: Agent Responsibilities and Triggers

Effective mission autonomy requires not only intelligent agents but also robust coordination mechanisms that facilitate timely, context-aware collaboration. Nebula AI achieves this through a decentralized, event-driven communication fabric underpinned by asynchronous messaging, priority handling, and fault tolerance. This section describes the system's coordination model, inter-agent messaging semantics, and conflict resolution strategies.

At the core of the coordination model is an internal event bus, implemented using a lightweight publish-subscribe architecture. Agents subscribe to domain-relevant event types and publish structured messages that trigger downstream processes. This design enables each agent—whether for anomaly detection, impact assessment, scheduling, or planning—to operate independently while remaining contextually synchronized with system-wide state.

For instance, when the Anomaly Detection Agent (ADA) publishes an ANOMALY_DETECTED event, the Anomaly Impact Assessor (AIA) subscribes to and consumes this message to perform real-time risk evaluation. Based on the severity classification, AIA emits an IMPACT_CLASSIFIED event, which subsequently influences the behavior of the Task Scheduling Agent (TSA), prompting it to reallocate resources or reprioritize tasks. This event chaining creates an adaptive coordination loop with no single point of control, allowing for resilience under partial failure or latency.

To manage concurrency and ensure timely response, the event queue supports message prioritization, critical locks, and temporal throttling. High-priority messages—such as those classified as "critical impact"—are processed with execution precedence. Critical locks are applied during mission-critical decision windows to avoid race conditions, particularly in task scheduling and deconfliction logic.
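The prioritization behavior can be sketched with a small priority-ordered queue. This is an illustration only: the class name `PriorityEventQueue` and the three priority labels are assumptions, and critical locks and temporal throttling are not modeled.

```typescript
type Priority = "critical" | "high" | "normal";
const RANK: Record<Priority, number> = { critical: 0, high: 1, normal: 2 };

interface QueuedEvent {
  type: string;
  priority: Priority;
  seq: number; // arrival order, used to keep FIFO order within a priority
}

class PriorityEventQueue {
  private items: QueuedEvent[] = [];
  private nextSeq = 0;

  enqueue(type: string, priority: Priority): void {
    this.items.push({ type, priority, seq: this.nextSeq++ });
    // Order by priority rank first, then by arrival order.
    this.items.sort(
      (a, b) => RANK[a.priority] - RANK[b.priority] || a.seq - b.seq
    );
  }

  // Removes and returns the most urgent pending event, if any.
  dequeue(): QueuedEvent | undefined {
    return this.items.shift();
  }
}
```

With this ordering, an `IMPACT_CLASSIFIED` message marked critical is handled before routine traffic that arrived earlier, while messages of equal priority are still processed in arrival order.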

Each agent maintains an internal state and a subscription registry, dynamically updated during mission progression. This allows the system to support contextual reactivity, where agents modify their behavior based on accumulated history and mission phase. The Task Scheduling Agent, for example, leverages historical task success rates and anomaly recurrence to refine its reinforcement learning policy.

In the event of upstream failures, such as a missing anomaly classification or a delayed telemetry packet, the system activates graceful degradation pathways. Agents fall back to default scheduling templates, and the Mission Planning and Recommendation Agent (MPRA) adjusts its recommendation logic to reflect uncertainty, clearly communicating the source of reduced confidence via the user interface.

All inter-agent communication adheres to a common event schema, which includes standardized headers for timestamps, priority levels, agent origin, and semantic tags. This schema supports observability, traceability, and post-hoc auditing, essential for mission assurance and operational transparency.
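One way to express such a schema in the platform's TypeScript backend is shown below. The field names, the priority labels, and the helpers `makeEvent` and `hasValidHeader` are hypothetical; only the header categories (timestamp, priority, origin, semantic tags) come from the description above.

```typescript
type AgentId = "ADA" | "AIA" | "TSA" | "MPRA";
type EventPriority = "low" | "medium" | "high" | "critical";

interface MissionEvent<T> {
  type: string;            // e.g. "ANOMALY_DETECTED"
  timestamp: string;       // ISO 8601 UTC
  priority: EventPriority;
  origin: AgentId;
  tags: string[];          // semantic tags used for filtering and auditing
  payload: T;
}

// Builds an event with a standardized header; `now` is injectable for testing.
function makeEvent<T>(
  type: string,
  origin: AgentId,
  priority: EventPriority,
  payload: T,
  tags: string[] = [],
  now: Date = new Date()
): MissionEvent<T> {
  return { type, timestamp: now.toISOString(), priority, origin, tags, payload };
}

// Checks that the header carries a non-empty type and a UTC ISO 8601 timestamp
// in the millisecond format produced by Date.prototype.toISOString.
function hasValidHeader(e: MissionEvent<unknown>): boolean {
  const iso = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3}Z$/;
  return e.type.length > 0 && iso.test(e.timestamp);
}
```

Typing the payload generically keeps the header uniform across agents while letting each event type carry its own body, which is what makes post-hoc auditing of mixed event logs straightforward.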

Code Snippet 1 – ADA Publishing an Event

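    import { EventEmitter } from 'node:events';

    // Shared event bus (assumed here to be a Node.js EventEmitter instance)
    const eventBus = new EventEmitter();

    // ADA publishes an anomaly event
    eventBus.emit('ANOMALY_DETECTED', {
      timestamp: Date.now(),
      subsystem: 'Power',
      severity: 'High',
      details: { deviation: 32.5, expected: 12.0 }
    });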

Code Snippet 2 – AIA Subscribing to Events

    // AIA listens for anomaly events
    eventBus.on('ANOMALY_DETECTED', (eventData) => {
      const impact = assessImpact(eventData);
      eventBus.emit('IMPACT_CLASSIFIED', { ...impact, origin: 'AIA' });
    });

The evaluation of Nebula AI was conducted under a defined set of assumptions that reflect practical mission control conditions while isolating core system capabilities. It was assumed that telemetry data is ingested sequentially with consistent timestamps, minimal jitter, and no packet corruption. Autonomous agents are expected to operate on an event-driven backbone with guaranteed message delivery and deterministic ordering. The LLM subsystem is presumed to remain online throughout the simulation, providing prompt-based responses within a bounded latency window of two seconds. Operator interactions are assumed to occur through passive dashboard observation or active queries, without direct intervention in the agent decision logic.

The experimental scope centers on evaluating Nebula AI’s autonomous reasoning capabilities, inter-agent coordination, and system resilience under telemetry disruptions. The system was tested in a simulated mission control environment with real-time playback of telemetry and operational events. While the architecture supports onboard deployment and live downlink processing, the current evaluation excludes orbital perturbation modeling, closed-loop hardware feedback, and real telemetry pipelines. Human-in-the-loop evaluation, adversarial LLM testing, and cybersecurity concerns were also considered out of scope for this version.

Each autonomous agent was initialized with scenario-specific configuration files. The Anomaly Detection Agent (ADA) operated in hybrid mode, using rolling z-score filters (flagging samples with |z| > 2.5) combined with a density-based outlier detector (DBSCAN, ε=1.0, minPts=5). The Task Scheduling Agent (TSA) implemented a tabular Q-learning algorithm for adaptive rescheduling, with learning rate α=0.1, discount factor γ=0.95, and an ε-greedy exploration strategy decaying from ε=0.3 to ε=0.05 over time. The reward structure penalized task delays and missed priorities while positively reinforcing timely execution.
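For readers unfamiliar with tabular Q-learning, the TSA configuration above corresponds to the standard update Q(s, a) ← Q(s, a) + α [r + γ max Q(s′, a′) − Q(s, a)]. The sketch below applies it with the reported α and γ. The state encoding as a string, the three-action set (taken from TSA's reorder, defer, and expedite behaviors), the linear shape of the ε decay, and all identifiers are assumptions made for illustration.

```typescript
type Action = "reorder" | "defer" | "expedite";
const ACTIONS: Action[] = ["reorder", "defer", "expedite"];

class TabularQ {
  private q: Record<string, number> = {};
  private alpha: number;
  private gamma: number;

  constructor(alpha = 0.1, gamma = 0.95) {
    this.alpha = alpha;
    this.gamma = gamma;
  }

  // Unvisited state-action pairs default to a value of 0.
  get(state: string, action: Action): number {
    return this.q[state + "|" + action] ?? 0;
  }

  // Greedy action; ties resolve to the earliest entry in ACTIONS.
  best(state: string): Action {
    return ACTIONS.reduce((b, a) =>
      this.get(state, a) > this.get(state, b) ? a : b
    );
  }

  // Epsilon-greedy selection: explore with probability epsilon, else exploit.
  choose(state: string, epsilon: number, rand: () => number = Math.random): Action {
    if (rand() < epsilon) return ACTIONS[Math.floor(rand() * ACTIONS.length)];
    return this.best(state);
  }

  // Standard Q-learning temporal-difference update.
  update(state: string, action: Action, reward: number, next: string): void {
    const maxNext = Math.max(...ACTIONS.map((a) => this.get(next, a)));
    const old = this.get(state, action);
    this.q[state + "|" + action] =
      old + this.alpha * (reward + this.gamma * maxNext - old);
  }
}

// Exploration rate decaying from 0.3 to 0.05; the linear schedule is assumed.
function epsilonAt(step: number, totalSteps: number, start = 0.3, end = 0.05): number {
  const frac = Math.min(Math.max(step / totalSteps, 0), 1);
  return start + (end - start) * frac;
}
```

A negative reward for a delayed task lowers the value of the action that caused it, so over repeated episodes the greedy policy drifts toward choices that keep high-priority tasks on time.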

The Mission Planning and Recommendation Agent (MPRA) was configured to prompt a transformer-based LLM (GPT architecture) with structured telemetry digests, anomaly summaries, and prioritized task lists. Prompts were restricted to <500 tokens to maintain inference latency within target thresholds.
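A prompt budget of this kind can be enforced by adding sections in priority order until the limit would be reached. The sketch below is a rough illustration: it approximates token counts by whitespace-separated words, which real LLM tokenizers do not match exactly, and the function names are hypothetical.

```typescript
// Crude token estimate: number of whitespace-separated words.
// Real tokenizers usually produce more tokens than this for the same text.
function approxTokens(text: string): number {
  const trimmed = text.trim();
  return trimmed === "" ? 0 : trimmed.split(/\s+/).length;
}

// Concatenates sections (most important first) while the estimated total
// stays strictly below maxTokens; later sections are dropped once it would not.
function buildPrompt(sections: string[], maxTokens = 500): string {
  const kept: string[] = [];
  let used = 0;
  for (const section of sections) {
    const cost = approxTokens(section);
    if (used + cost >= maxTokens) break;
    kept.push(section);
    used += cost;
  }
  return kept.join("\n\n");
}
```

Ordering the inputs as anomaly summary, prioritized task list, then telemetry digest would ensure that the most decision-relevant context survives truncation; that ordering is a design suggestion rather than something the evaluation specifies.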

All experiments were executed in a controlled offline simulation environment. The system was deployed as a containerized microservice stack on a high-performance workstation with the following configuration:

a. Processor: Intel Core i9-12900K

b. Memory: 64 GB DDR5

c. GPU: NVIDIA RTX 3090 (used for LLM inference acceleration)

d. Operating System: Ubuntu 22.04 LTS

e. Orchestration: Docker Compose with eight isolated services (agents, backend, UI, LLM API)

f. Frontend Stack: React 18 with Three.js for 3D orbital visualization

g. Backend Stack: Node.js 18 with TypeScript and WebSocket-based event dispatching

h. LLM Interface: RESTful API compatible with OpenAI’s gpt-4o

Simulation time was accelerated such that one second of real-time corresponded to one mission hour, allowing full mission arcs to be evaluated in under 30 minutes. All agent decisions, event timings, LLM responses, and scheduling outcomes were logged for post-hoc analysis. Performance graphs, conflict resolution metrics, and timeline deviations were computed directly from these runtime logs, ensuring statistical reproducibility and interpretability.
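As a quick check of the time scaling, one real second per mission hour corresponds to a speed-up factor of 3600, so the 30-minute bound translates into mission time as follows:

```latex
t_{\text{mission}} = 3600 \, t_{\text{real}}, \qquad
30\ \text{min} = 1800\ \text{s}
\;\Rightarrow\;
1800\ \text{mission hours} = \frac{1800}{24}\ \text{days} = 75\ \text{mission days}
```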

This section presents a multi-dimensional evaluation of Nebula AI’s core performance components: anomaly detection, task scheduling, agent coordination, and resource intelligence. Simulations used the structured mission telemetry and coordination datasets described earlier, which are synthetic and modeled on historical missions such as Cassini. These tests replicate real-world constraints such as bandwidth saturation, temporal task overlap, and cascading subsystem degradation.

The Anomaly Detection Agent (ADA) was validated on simulated telemetry streams featuring injected fault signatures across power, battery, and thermal subsystems. ADA employed both thresholding and outlier detection models.

Table 2: Anomaly Detection Metrics

The results demonstrate ADA’s high recall and low false-positive rate — critical traits for first-response agents in autonomous spacecraft operations.
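For reference, recall and false-positive rate follow the usual confusion-matrix definitions, sketched below. The `detectionMetrics` helper and its field names are illustrative and are not part of the Nebula AI codebase.

```typescript
interface Confusion {
  tp: number; // injected anomalies that were flagged
  fp: number; // nominal samples that were flagged
  tn: number; // nominal samples that were not flagged
  fn: number; // injected anomalies that were missed
}

interface DetectionMetrics {
  precision: number;
  recall: number;
  falsePositiveRate: number;
}

// Standard definitions; a ratio with a zero denominator is reported as 0.
function detectionMetrics(c: Confusion): DetectionMetrics {
  const ratio = (n: number, d: number): number => (d === 0 ? 0 : n / d);
  return {
    precision: ratio(c.tp, c.tp + c.fp),
    recall: ratio(c.tp, c.tp + c.fn),
    falsePositiveRate: ratio(c.fp, c.fp + c.tn),
  };
}
```

High recall means few injected faults go unnoticed, while a low false-positive rate keeps the downstream agents from rescheduling in response to nominal telemetry.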

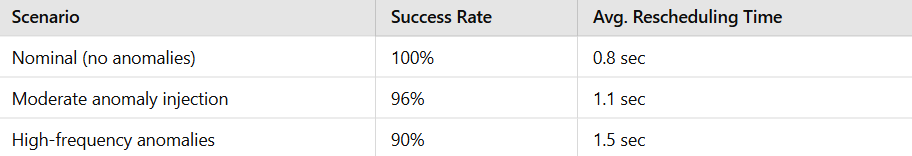

The Task Scheduling Agent (TSA) dynamically resolved task conflicts using Q-learning and real-time mission context. It was tested under varying anomaly injection frequencies and resource bottlenecks.

Table 3: Task Scheduling Success Rate

Despite adverse conditions, TSA maintained >90% success under all scenarios, reflecting the strength of adaptive learning strategies in resource-constrained scheduling.

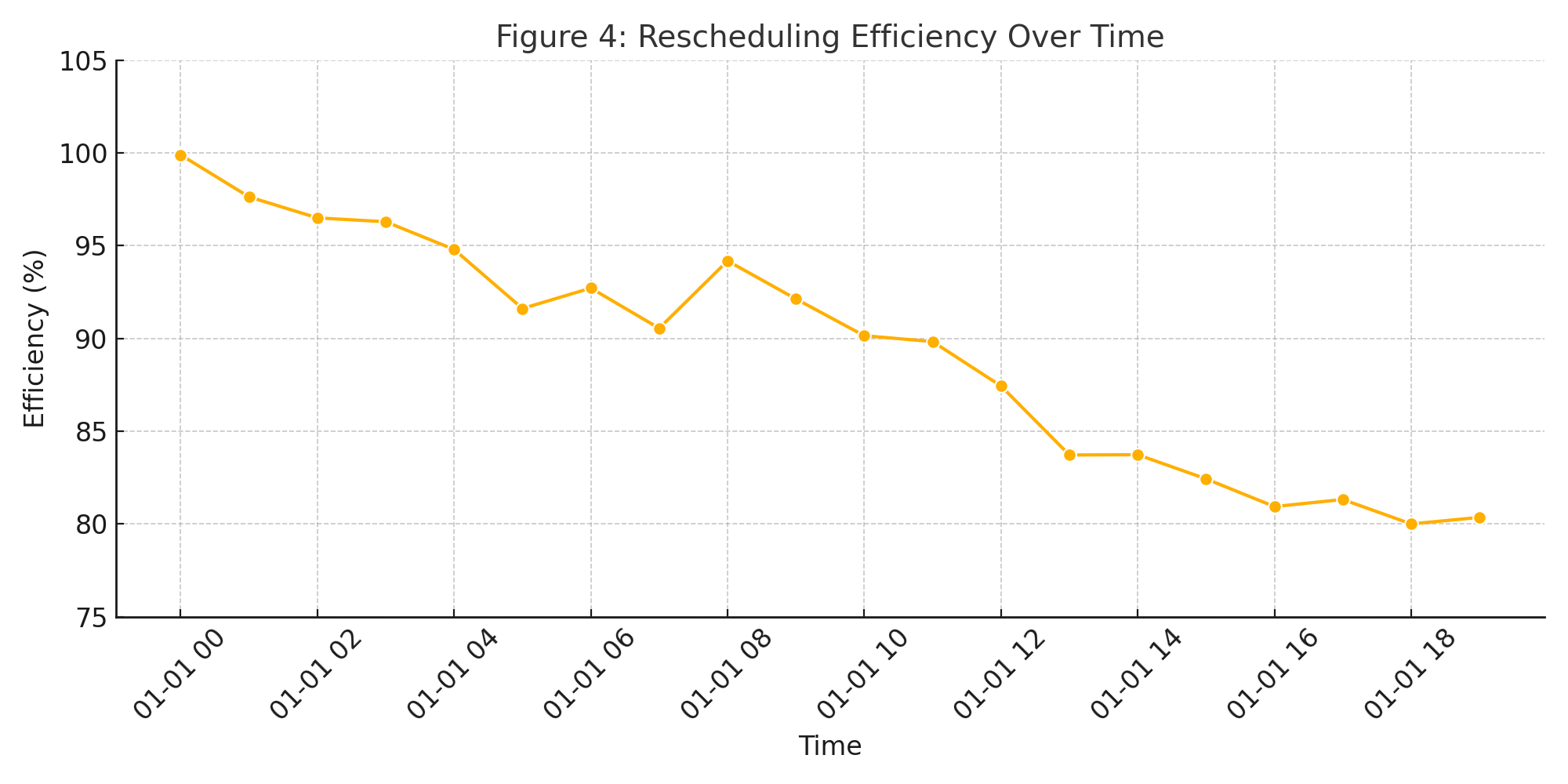

Anomalies triggered rescheduling operations by TSA and MPRA. The system's ability to recover and maintain high operational efficiency was tracked over time.

Figure 4: Rescheduling Efficiency Over Time

The system exhibits rapid recovery, sustaining efficiency above 90% after mid-mission anomalies, showing convergence to near-optimal scheduling behavior.

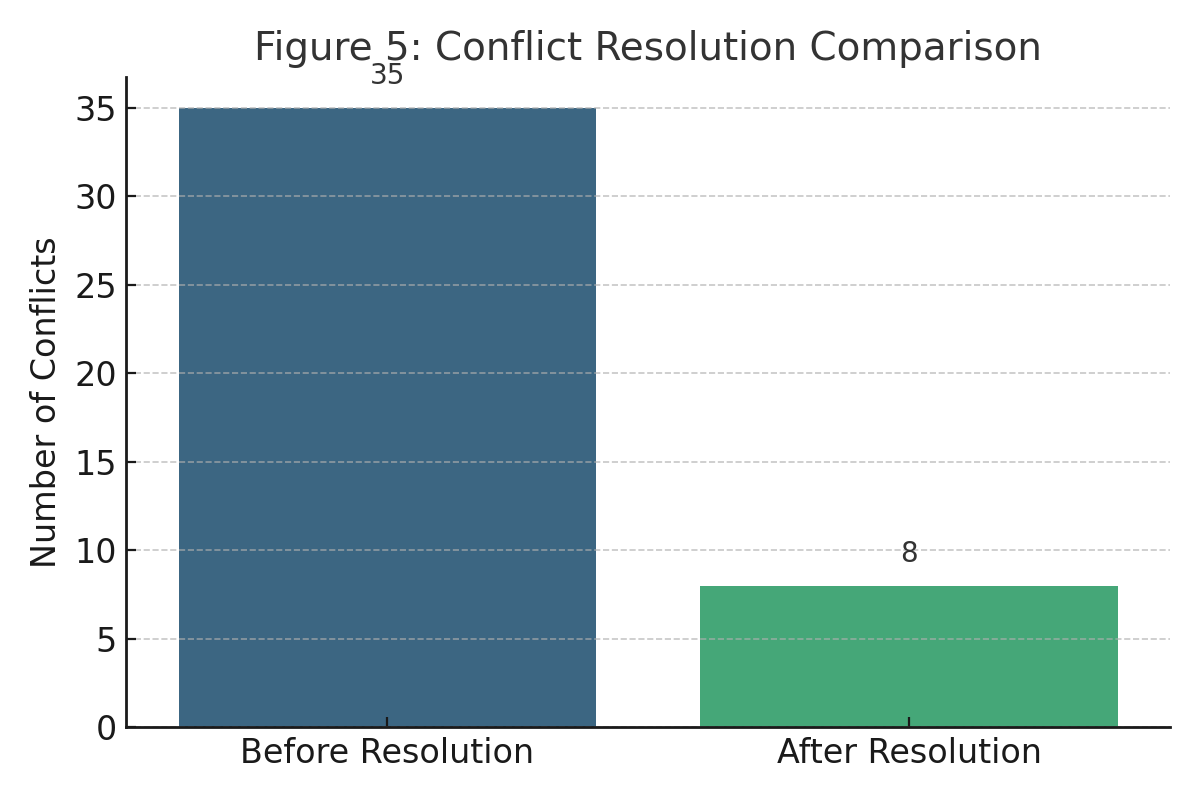

Agent collaboration led to marked reductions in task overlap, bandwidth contention, and deadline violations.

Figure 5: Conflict Resolution Comparison

A 77% reduction in critical conflicts was achieved post-coordination, demonstrating the emergent benefits of agent intercommunication and prioritization logic.
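Assuming the reduction is measured as the relative decrease in the number of critical conflicts, with C denoting the conflict count before and after coordination is enabled, the figure can be read as:

```latex
\text{reduction} = \frac{C_{\text{before}} - C_{\text{after}}}{C_{\text{before}}} = 0.77
\quad\Longleftrightarrow\quad
C_{\text{after}} = 0.23 \, C_{\text{before}}
```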

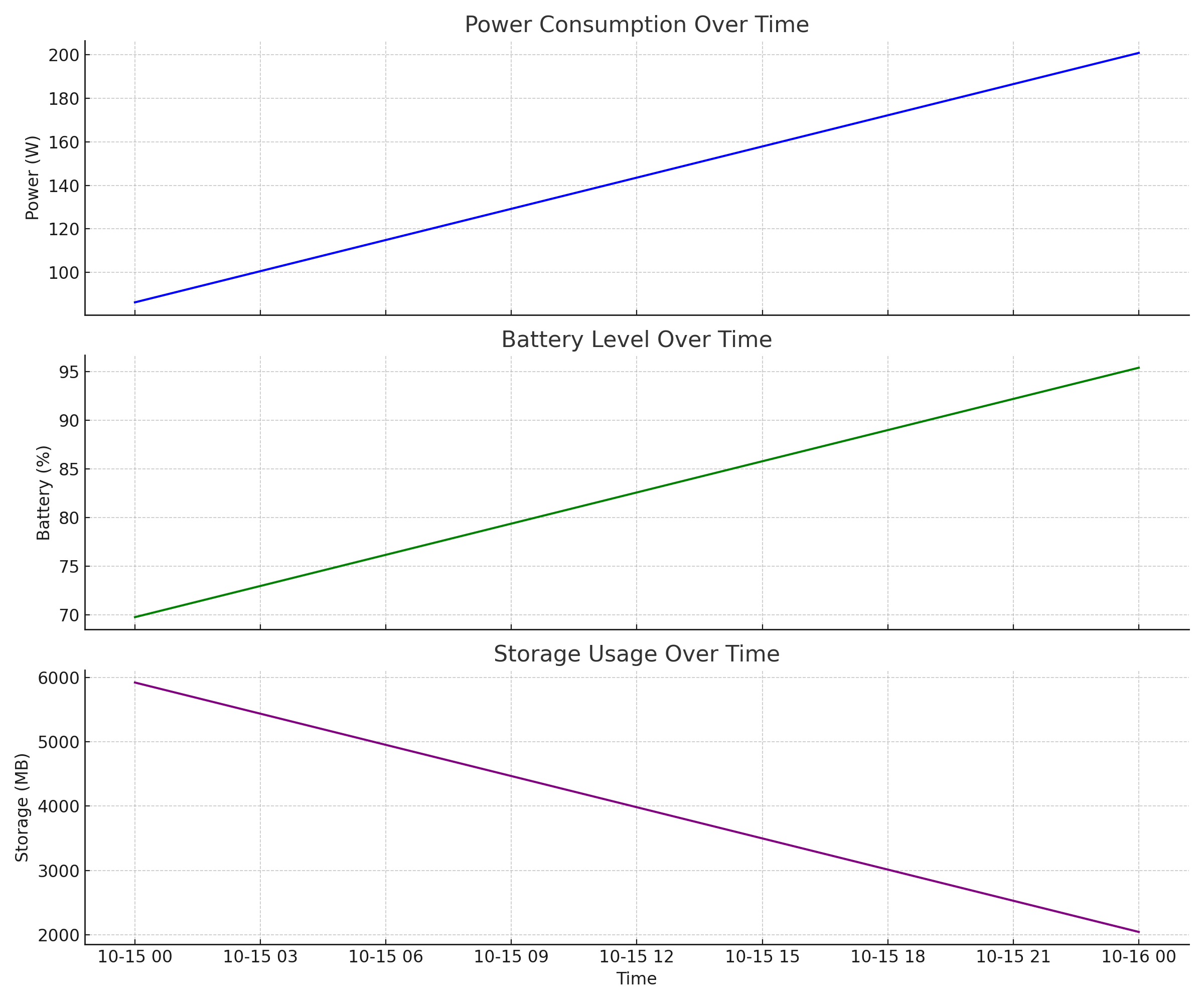

Time-series analysis on power, battery, and storage revealed clear operational stress points that correlate with agent activity and mission anomalies.

Figure 6: Telemetry Trends (Power, Battery, Storage)

The system responded dynamically to power surges and storage saturation, redistributing tasks and rebalancing schedules to mitigate overload risk.

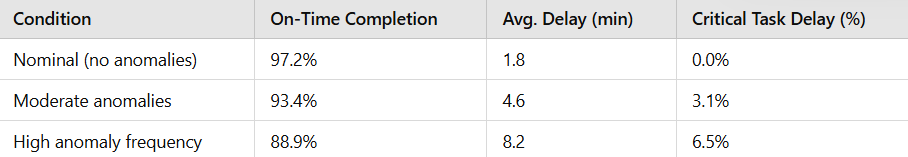

Analysis of timeline data revealed:

a. 93% of high-priority tasks completed on time under nominal conditions

b. <5% average delay for non-critical tasks during anomaly peaks

c. Visual timeline feedback enabled manual overrides and drag-to-reorder logic in the UI

Table 4: Task Performance Metrics

Beyond its strong technical performance, Nebula AI hints at a paradigm shift in how we architect trust and delegation in mission-critical systems. Traditionally, autonomy in space systems has been treated as an auxiliary aid that is useful in nominal conditions but overridden in crises. Nebula AI flips this dynamic: it thrives precisely when human capacity is constrained or when mission states become volatile.

A deeper implication of Nebula AI’s architecture is the emergence of situational context as a first-class signal in system design. Each agent doesn’t simply respond to events; it interprets them in light of historical, temporal, and mission-phase context. This moves beyond reactive autonomy into the realm of cognitive modeling, where systems exhibit behavior that resembles reasoning rather than rule-following. The AIA's cascading impact analysis, for instance, is a foundational step toward systems that understand causality and interdependence, which is crucial for next-gen autonomous platforms operating far from Earth-based oversight.

Moreover, the LLM-assisted MPRA introduces a subtle but powerful evolution in human-machine teaming: empathy for operator cognition. By using natural language not just for translation but for prioritization, clarification, and even soft signaling (e.g., "confidence degraded due to upstream delay"), the system doesn't just inform; it collaborates. This sets the stage for more psychologically adaptive interfaces, where system communication aligns with operator stress levels, alert fatigue, or experience.

Importantly, Nebula AI offers a template for composable autonomy. The modular design allows individual agents to be re-trained, replaced, or repurposed without disrupting the overall mission fabric. This flexibility is a strategic advantage in space programs that increasingly blend commercial, academic, and governmental assets, each with distinct hardware and operational protocols. Nebula AI could be deployed not as a monolith, but as a federated autonomy layer across heterogeneous fleets.

One untapped opportunity is the use of cross-agent learning, where feedback loops between agents refine future decisions. For instance, anomalies detected but later deemed inconsequential by the AIA could be fed back into ADA’s models to reduce false alarms. Similarly, TSA could benefit from learned reward functions based not only on schedule adherence, but also on mission outcome metrics shaped by MPRA’s recommendation history. This meta-adaptive layer could make Nebula AI not just autonomous but continually self-improving.

Nebula AI opens the door to mission systems that don’t just react to space environments; they evolve within them. Its layered intelligence, contextual awareness, and human-aligned interface form the backbone of a new generation of space autonomy: one that is modular, interpretable, and anticipatory.

Yet, the true potential of Nebula AI lies not in what it automates, but in what it enables. By reducing human cognitive burden, it frees mission specialists to focus on strategy over survival. By providing transparency, it builds trust in autonomy rather than dependence. And by operating as a coordinated, learning-driven ecosystem, it lays the foundation for intelligent constellations, interplanetary logistics networks, and self-healing spacecraft swarms.

The next frontier isn't just about going farther; it’s about thinking smarter, reacting faster, and partnering more deeply with the systems we build. Nebula AI is a prototype of that partnership: not just machines in space, but thinking allies among the stars.