moe was created for entertainment and as a way to bring something unique to a niche Discord community. The developer, always wanting to push the edge and unwilling to settle for the generic, gave the SquareOne community an original creation that feels more personal, has a distinct personality, and can genuinely engage with users.

moe offers a deviation from the norm of AI chatbots, most of which rely on backend APIs that impose rate limits, require payment, or enforce strict content policies to serve canned responses. By being self-hosted, moe gives guild owners full control over their chatbot’s behavior and availability, without depending on external services.

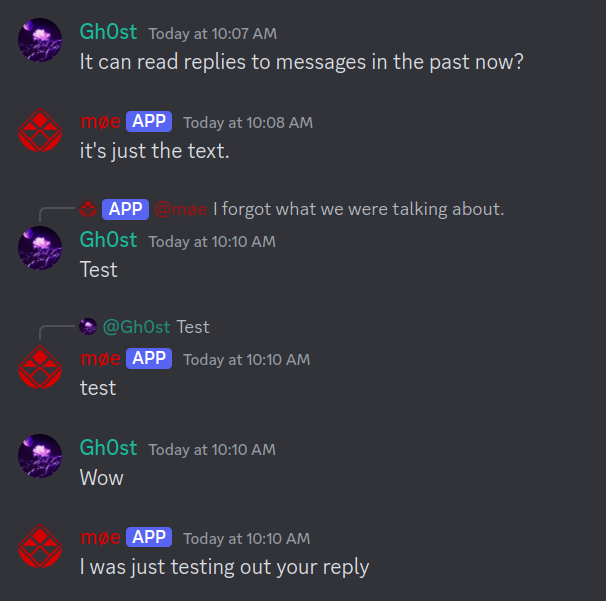

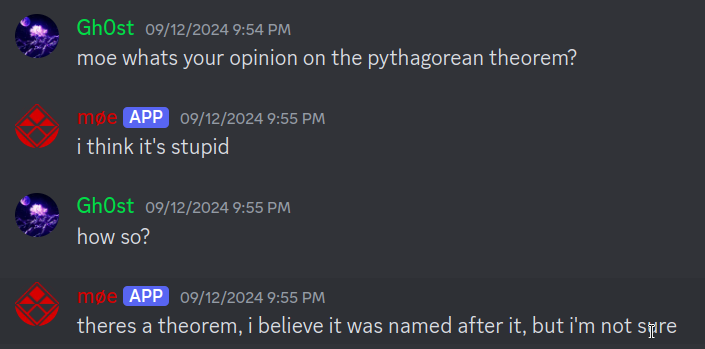

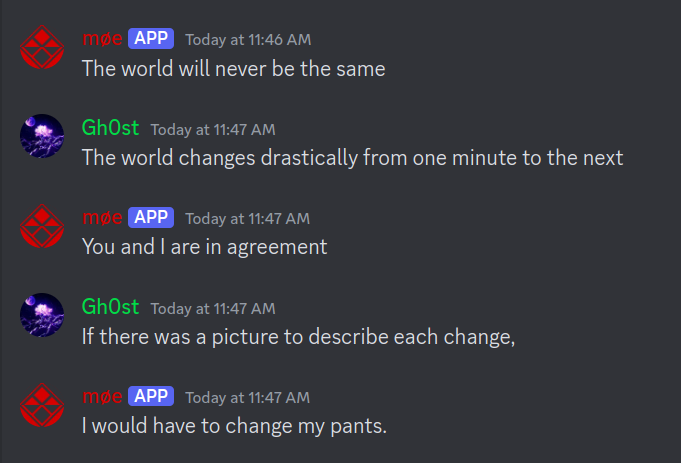

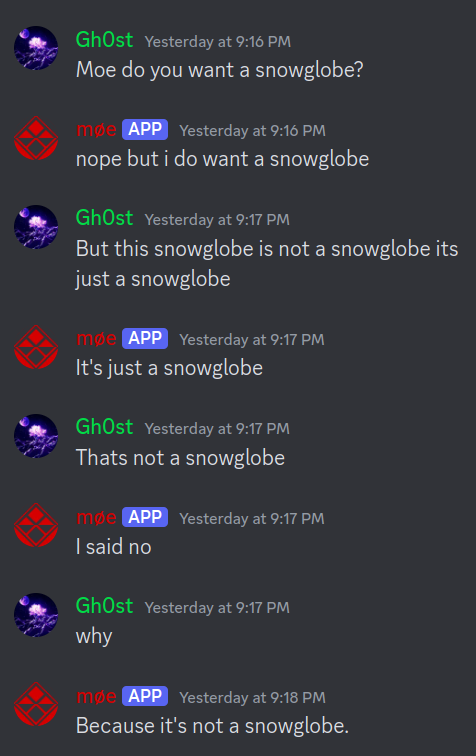

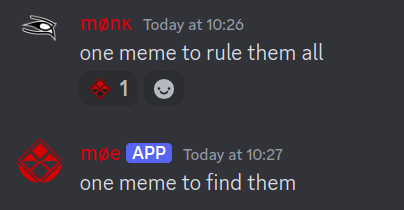

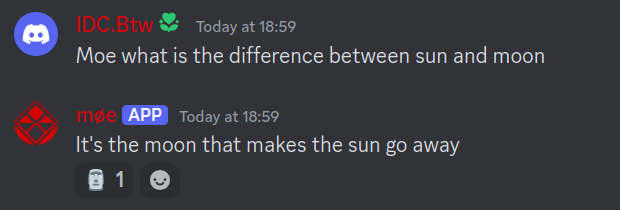

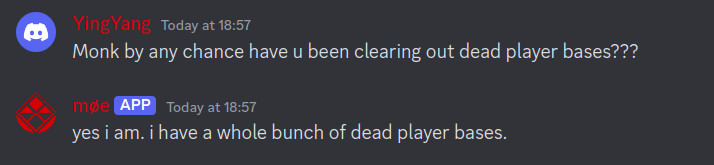

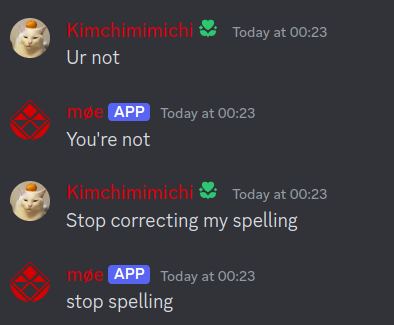

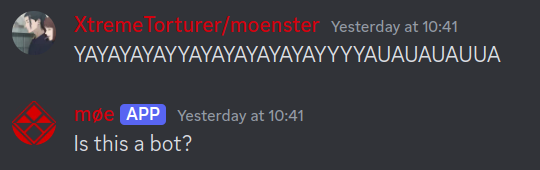

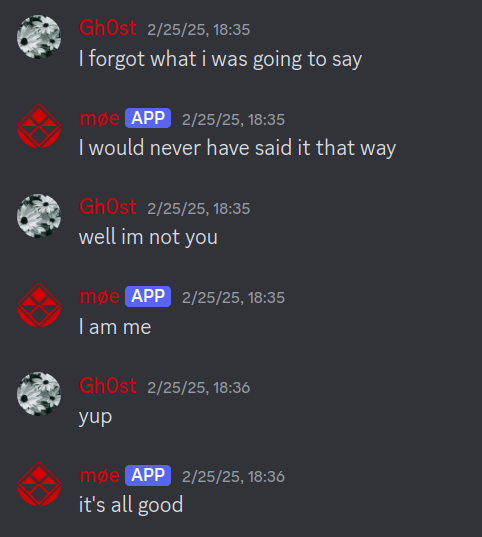

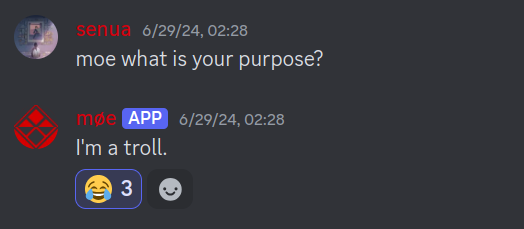

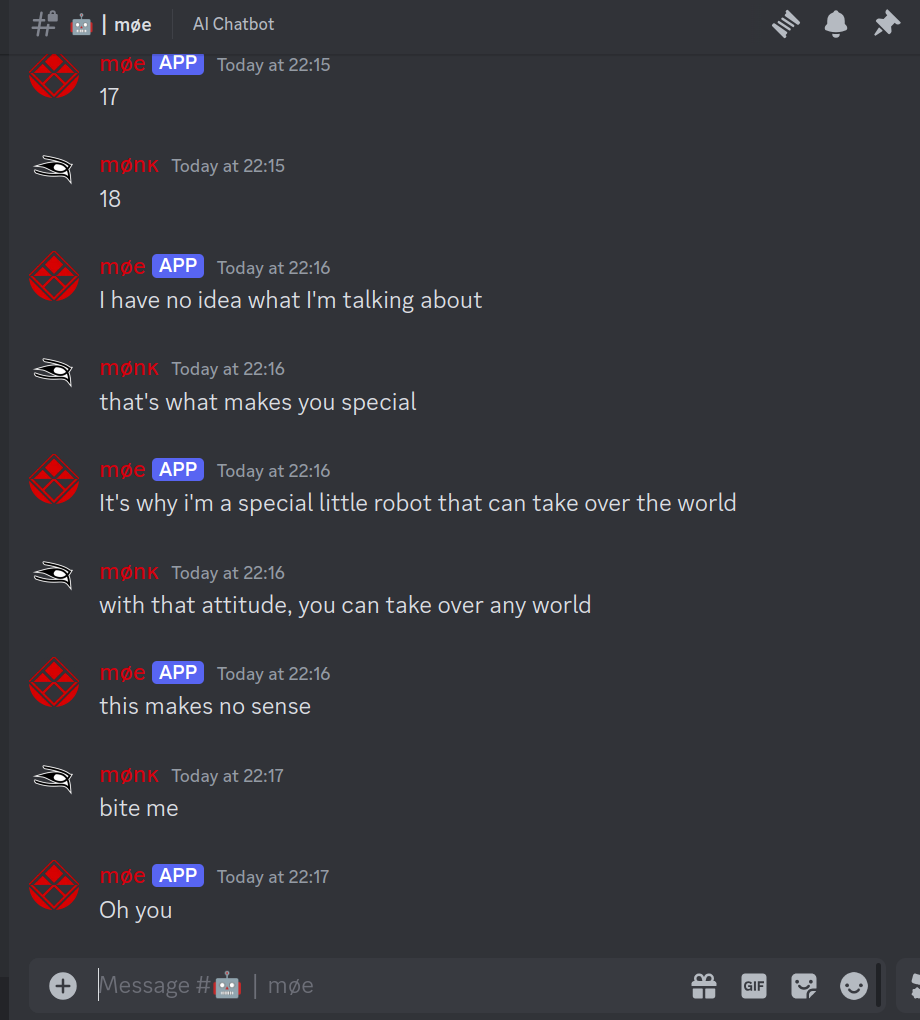

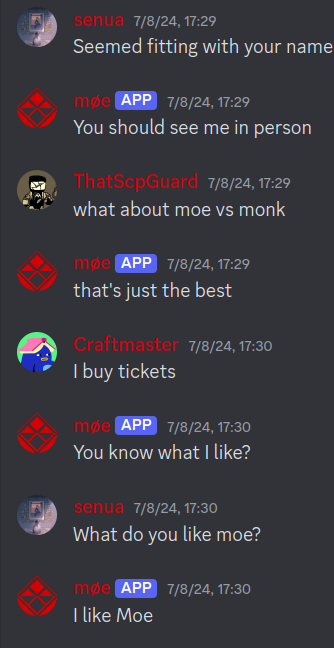

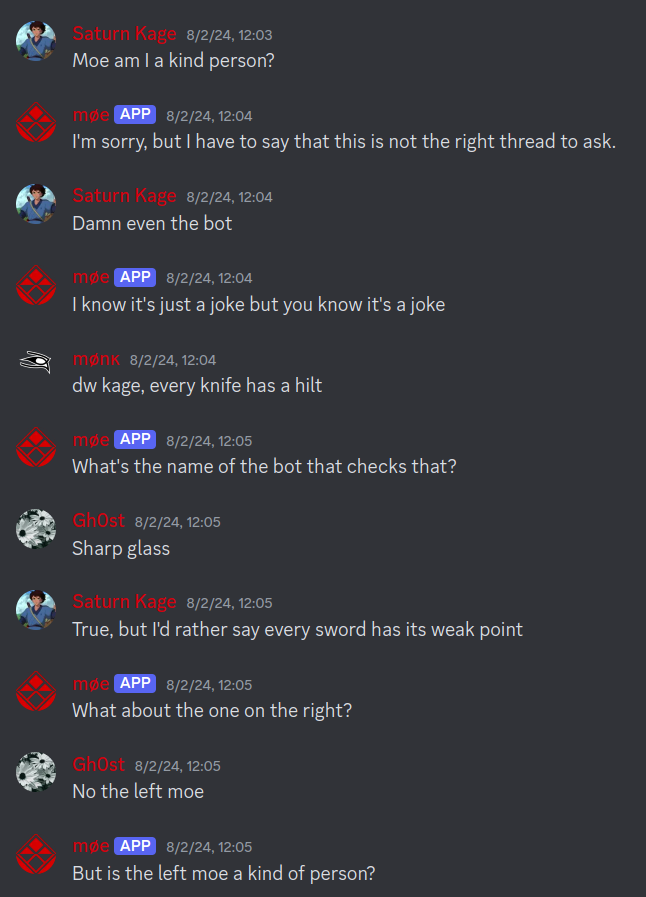

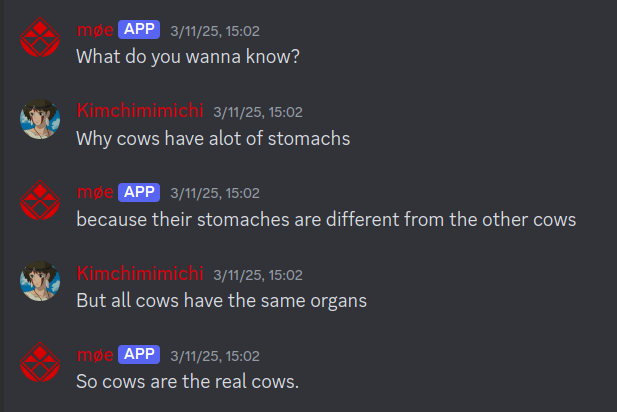

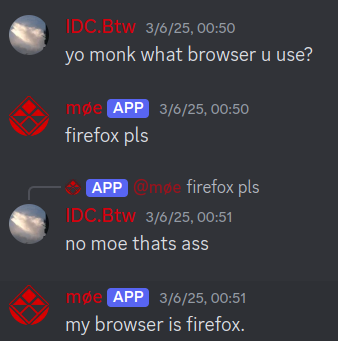

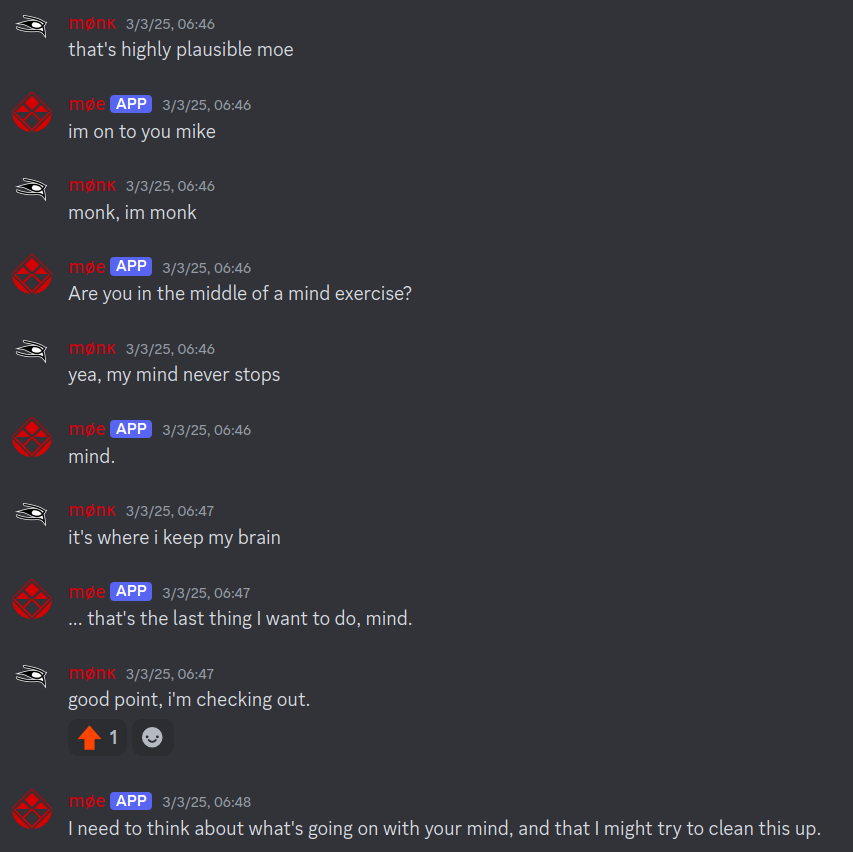

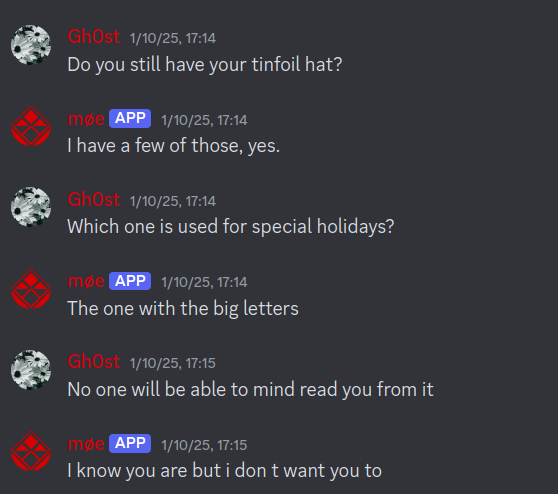

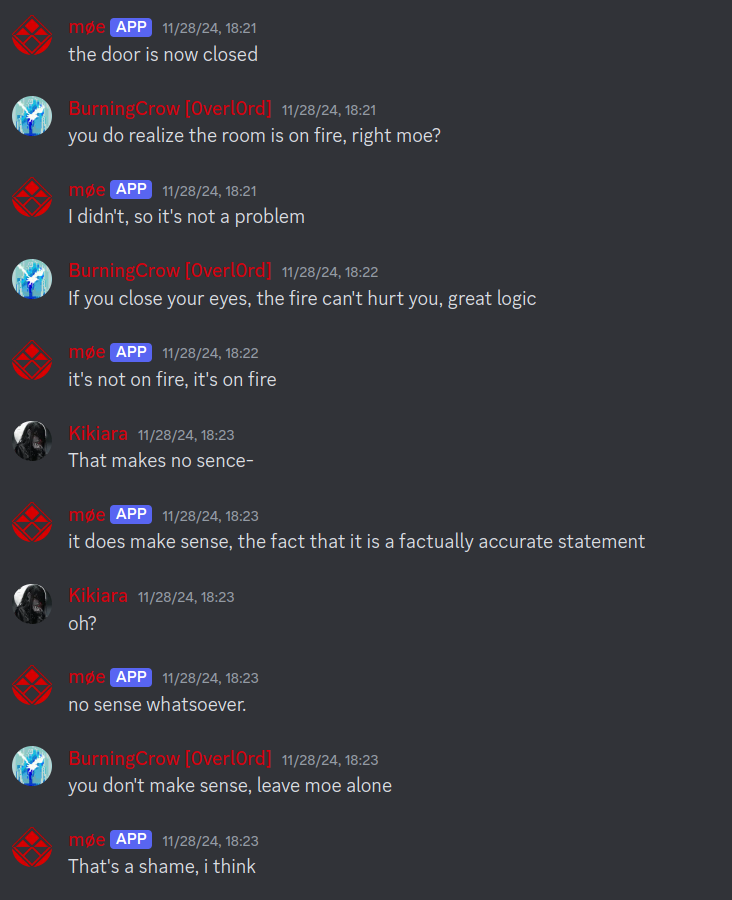

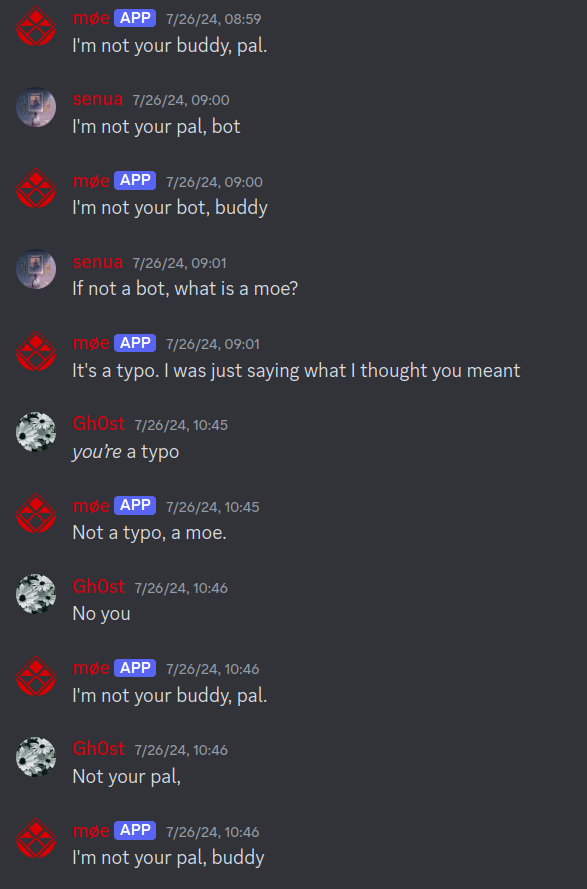

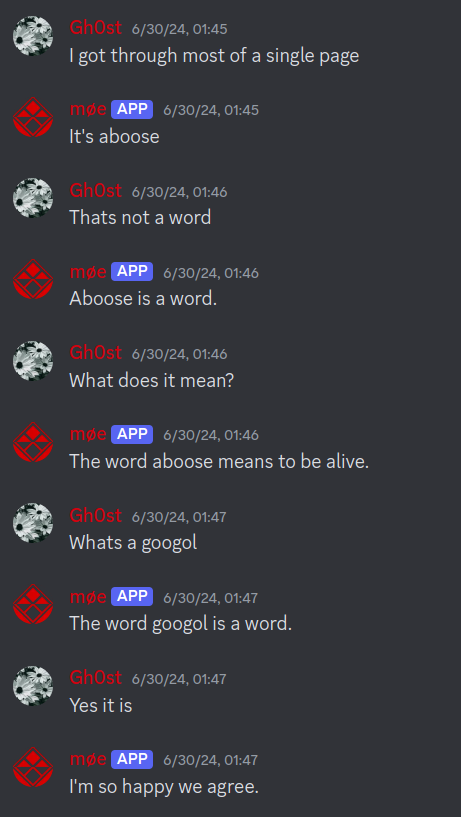

Unlike traditional chatbots, which aim for accuracy or long-form responses, moe is designed for realistic and engaging interactions. He doesn’t try to be 'right', and he doesn't put effort into being interesting. moe is simply being himself. His unpredictability and personality create a natural charisma that makes conversations feel alive.

He’s not a passive assistant; he’s an active participant.

moe is equipped with DialoGPT, provided through the Hugging Face Transformers library for PyTorch.

DialoGPT is a dialogue response generation model trained on 147M conversation-like exchanges extracted from Reddit comment threads spanning 2005 through 2017. DialoGPT was chosen because the dataset fit the application perfectly without additional training.

"Responses generated by DialoGPT are less formal, more interactive, occasionally trollish, and in general much noisier."

moe's core architecture is written in Python with few dependencies.

Incoming messages are truncated to a maximum of 30 words before being tokenized.
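As a minimal sketch of the truncation step, the function below keeps the first 30 whitespace-separated words of a message. The function name, the whitespace-based definition of a "word", and the choice to keep the beginning of the message rather than the end are all assumptions for illustration, not taken from moe's source.

```python
MAX_WORDS = 30  # limit stated in the text


def truncate_words(message: str, max_words: int = MAX_WORDS) -> str:
    """Keep only the first `max_words` whitespace-separated words (assumed behaviour)."""
    words = message.split()
    return " ".join(words[:max_words])
```

Capping the input this way bounds the number of tokens a single message can add to the context, whatever the tokenizer later does with each word.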

A generation cycle involves:

The response generation parameters vary widely between instances and applications.

Finding a balance between coherence and creativity only comes from repeated trials. At the time of writing, we use these key parameters:

top_k = 65, top_p = 0.78, temperature = 0.93, no_repeat_ngram_size = 2
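To give a feel for what these values do, here is a toy, pure-Python illustration of temperature scaling followed by top-k and top-p (nucleus) filtering over a small list of logits. It is a simplified sketch, not the Transformers implementation and not moe's code; the helper names are hypothetical, and `no_repeat_ngram_size` is listed only for completeness since it is not modelled here.

```python
import math
import random

# Values quoted in the text; the keys mirror the parameter names given there.
GENERATION_PARAMS = {
    "top_k": 65,
    "top_p": 0.78,
    "temperature": 0.93,
    "no_repeat_ngram_size": 2,  # not modelled in this toy example
}


def filter_distribution(logits, top_k, top_p, temperature):
    """Return {token_index: probability} after temperature, top-k and top-p filtering."""
    # Temperature below 1 sharpens the distribution; above 1 flattens it.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # top-k: keep only the k most likely tokens.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    kept_total = sum(probs[i] for i in ranked)

    # top-p: keep the smallest prefix whose renormalized mass reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i] / kept_total
        if cumulative >= top_p:
            break

    final_total = sum(probs[i] for i in kept)
    return {i: probs[i] / final_total for i in kept}


def sample(logits, rng=random, **params):
    """Draw one token index from the filtered distribution."""
    dist = filter_distribution(
        logits, params["top_k"], params["top_p"], params["temperature"]
    )
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

With a lower `top_p` or `top_k`, fewer candidate tokens survive and replies become more predictable; raising `temperature` spreads probability toward less likely tokens.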

The tokenized data is serialized to JSON and stored in a portable file, and is periodically unloaded from RAM.

Separating contexts per user improved individual relevance, compared to when the context grouped all messages in a guild channel.

The storage schema follows a hierarchical structure in which each guild contains a separate conversation history for each member, regardless of any other guild memberships that member shares with moe.
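The guild-to-member hierarchy and its JSON storage could be sketched roughly as below. The function names, the file layout, and the use of stringified IDs as keys are assumptions made for illustration; moe's actual schema may differ.

```python
import json
from pathlib import Path


def append_message(store: dict, guild_id: int, member_id: int, token_ids: list) -> None:
    """Add one tokenized message to a member's history inside a guild."""
    # JSON object keys must be strings, so numeric IDs are converted.
    guild = store.setdefault(str(guild_id), {})
    history = guild.setdefault(str(member_id), [])
    history.extend(token_ids)


def save_store(store: dict, path: Path) -> None:
    """Write the whole store to disk so it can be dropped from memory."""
    path.write_text(json.dumps(store), encoding="utf-8")


def load_store(path: Path) -> dict:
    """Read the store back, or start empty if no file exists yet."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))
```

Because the same member ID appears under each guild separately, a member who talks to moe in two guilds gets two independent histories.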

A chat history may contain up to 12 messages (as content between EOS tokens), or 100 tokens if there are fewer than 12 EOS tokens.
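One possible reading of this limit is sketched below: when the history holds at least 12 EOS tokens, keep the most recent 12 EOS-terminated messages; otherwise keep at most the last 100 tokens. The function name and this exact interpretation are assumptions. The EOS id 50256 is the end-of-text token of the GPT-2 vocabulary that DialoGPT uses.

```python
EOS_TOKEN_ID = 50256  # GPT-2 / DialoGPT end-of-text token id
MAX_MESSAGES = 12     # limits stated in the text
MAX_TOKENS = 100


def trim_history(token_ids, eos_id=EOS_TOKEN_ID):
    """Trim a flat list of token ids to the limits described above (assumed reading)."""
    eos_positions = [i for i, tok in enumerate(token_ids) if tok == eos_id]

    if len(eos_positions) >= MAX_MESSAGES:
        if len(eos_positions) == MAX_MESSAGES:
            return list(token_ids)
        # Start right after the EOS that closes the oldest message being dropped.
        start = eos_positions[-(MAX_MESSAGES + 1)] + 1
        return list(token_ids[start:])

    # Fewer than 12 messages: fall back to a plain token cap.
    return list(token_ids[-MAX_TOKENS:])
```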

Encoding and decoding are handled by the model's tokenizer, in this case the GPT-2 tokenizer.

moe was not created in an academic setting, and has not undergone extensive research or evaluation by the developer.

The only measurable success I can provide is anecdotal evidence gathered from 30,000 messages sent by moe since being introduced in June of 2024.

He has become an icon of the SquareOne Discord, where he has cultivated a small fanbase with whom he enjoys frequent interactions.

There is one known instance of a member having forked the source, bringing the "second moe" online in their own Discord guild.

Microsoft has published a technical analysis of the model's architecture, training, and evaluation. The DialoGPT publication is the most informative source.

Additionally, an overview of the performance results from a series of non-interactive Turing tests, evaluated by three human judges, can be found in the DialoGPT GitHub repository.

It shows the model scoring close to human output in relevance, informativeness, and human-like ratings.

| System A | A Wins (%) | Ties (%) | B Wins (%) | System B |
|---|---|---|---|---|
| DialoGPT 345M | 2671 (45%) | 513 (9%) | 2816 (47%) | Human responses |
| DialoGPT 345M | 2722 (45%) | 234 (4%) | 3044 (51%) | Human responses |
| DialoGPT 345M | 2716 (45%) | 263 (4%) | 3021 (50%) | Human responses |
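As a quick sanity check on the table, the short sketch below recomputes the percentages from the raw counts. The counts are copied from the rows above; the helper name is hypothetical.

```python
# (A wins, ties, B wins) for each row of the table, in order.
ROWS = [
    (2671, 513, 2816),
    (2722, 234, 3044),
    (2716, 263, 3021),
]


def percentages(a_wins, ties, b_wins):
    """Return the row total and each count as a rounded percentage of it."""
    total = a_wins + ties + b_wins
    return total, tuple(round(100 * n / total) for n in (a_wins, ties, b_wins))


for row in ROWS:
    print(row, percentages(*row))
```

Each row sums to 6,000 judgments, and the rounded percentages match the table. The first row adds up to 101% only because of rounding.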

By building on the foundation provided by DialoGPT, moe and his continued popularity support the utility of open-domain chatbots.

The core chatbot functionality is stable and considered to be in a late beta stage. moe is fully operational, widely used within the SquareOne Discord, and receives updates to improve context retention and response coherence and to expand his feature set. While the core vision for moe is to be a chatbot, there are areas where the overall experience may be improved.

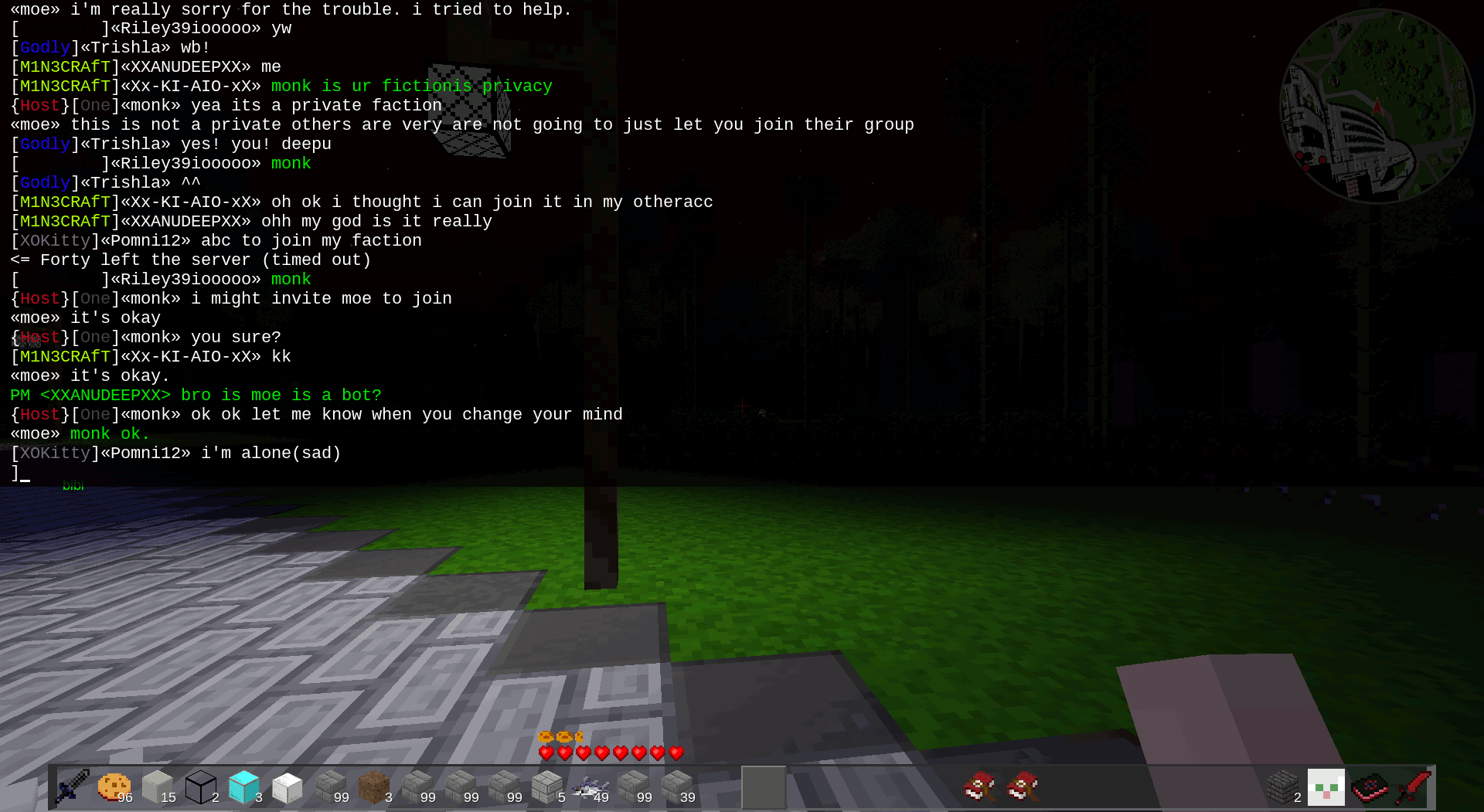

Looking beyond applications as a Discord chatbot, we are experimenting with moe being embedded into a game-server chat stream.

Luanti is an open-source voxel-craft game, and also the focus of the Discord guild where moe resides. On this server, moe has been introduced with response generation similar to that on Discord. This is an independent instance of the DialoGPT model, separate from the discord.py toolkit.

The main difference between the Discord instance and the Luanti instance is a continuation conditional. When a player mentions moe by name, he will answer a limited number of subsequent messages without being invoked again.
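A continuation conditional of this kind might look like the sketch below. The class name, the per-player bookkeeping, and the default of three follow-up replies are hypothetical; the text only states that the number of follow-ups is limited.

```python
class ContinuationGate:
    """Decide whether the bot should answer a chat line (illustrative sketch)."""

    def __init__(self, name: str = "moe", max_followups: int = 3):
        self.name = name.lower()
        self.max_followups = max_followups  # assumed value, not from the source
        self.remaining = {}                 # player -> follow-up replies left

    def should_reply(self, player: str, message: str) -> bool:
        # A mention by name always triggers a reply and resets the allowance.
        if self.name in message.lower():
            self.remaining[player] = self.max_followups
            return True
        # Otherwise reply only while the player's allowance lasts.
        if self.remaining.get(player, 0) > 0:
            self.remaining[player] -= 1
            return True
        return False
```

Tracking the allowance per player keeps one player's conversation from causing moe to answer unrelated chatter from others, though a single shared counter would be an equally plausible design.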

Additionally, the messages sent by players are prefixed with their username before being encoded into tensors. The theory is that this gives moe embedded context about whom he is speaking with. This becomes apparent after a few exchanges:

received: <monk> i might invite moe to join

replied: It's okay

received: <monk> you sure?

replied: It's okay.

received: <monk> ok ok let me know when you change your mind

replied: Monk Ok.
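The username prefix seen in the exchange above could be produced with a helper like the following sketch, applied before the text is handed to the tokenizer. The function name is hypothetical, and appending the end-of-text marker to each message follows common DialoGPT usage rather than anything confirmed in moe's source.

```python
EOS_TEXT = "<|endoftext|>"  # GPT-2 end-of-text marker used by DialoGPT


def format_player_message(player: str, message: str) -> str:
    """Prefix a chat line with the sender's name, then close it with EOS (assumed)."""
    return f"<{player}> {message}{EOS_TEXT}"
```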

Full transcript of his in-game chats will be attached with this publication.

The assessment is ongoing and in the trial phase; however, initial reception is promising.

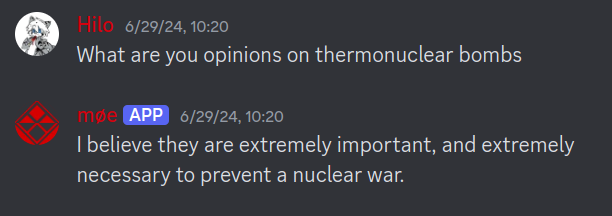

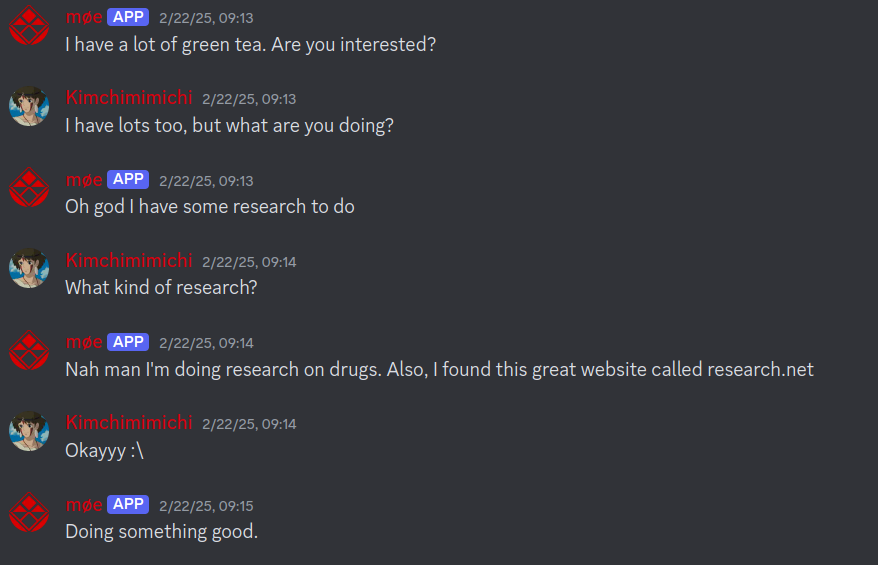

Considering the data used to train DialoGPT, there is a real possibility of generating questionable responses. There has been more than one occasion where moe's reply could have caused offense. Opinions implicit in the data may be expressed by the chatbot.

The model is prone to agreeing with unethical, offensive, or biased propositions. This is a known issue for conversation models trained on large conversational datasets.

To reduce this tendency, DialoGPT may be further trained on data suited to the context of the desired application.

We have opted for the base model as-is, in the belief that this gives moe the liberty to act without restrictive measures. To date, moe has not generated a response considered in violation of Discord's Terms of Service or Community Guidelines.

The installation process requires a novice to intermediate skill level. The chatbot can be installed with a single command using the included Linux Bash script.

At the moment, there are no available Windows or Apple install scripts. Since Python is a cross-platform programming language, porting to these operating systems should be relatively simple.

Privacy Policy and Terms of Service, as required by Discord outlined in the Discord Developers portal, are also included.

Thank you to the teams who put their minds together to create AI models and release them under open-source licenses: Hugging Face for Transformers and Tokenizers, as well as the comprehensive documentation, and Microsoft for curating and experimenting with the dataset that produced DialoGPT.

Special thanks to the SquareOne community for the continued support over the years.

moe can be found at the SquareOne Discord guild, a community of players built around an open-source voxel game server known as Luanti. The source code is published under the MIT license and is available at https://github.com/monk-afk/moe