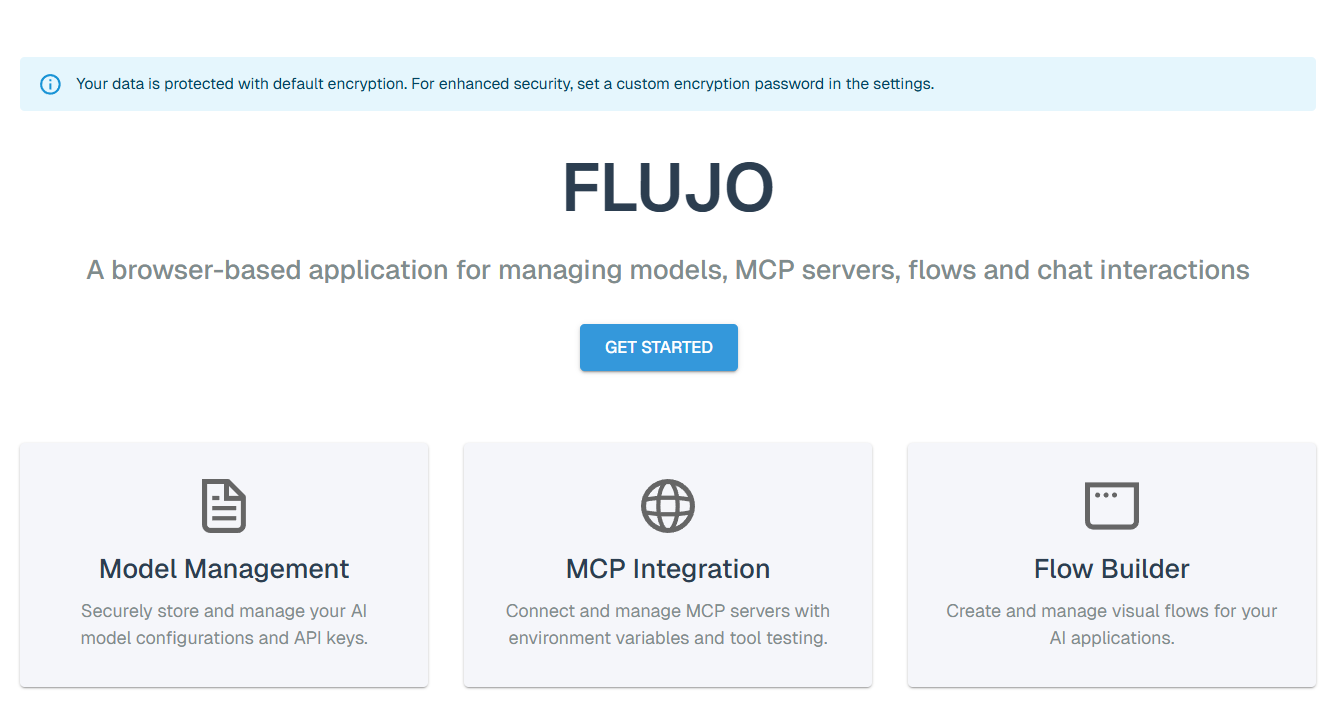

# Overview

**Think n8n + ChatGPT.**

FLUJO is a desktop application that integrates with MCP (Model Context Protocol) to provide a workflow-builder interface for AI interactions. Built with Next.js and React, it supports both online and offline (Ollama) models, manages API keys and environment variables centrally, and can install MCP servers from GitHub. FLUJO exposes an OpenAI-compatible ChatCompletions endpoint, so flows can be executed from other AI applications like Cline, Roo or Claude.

- Environment & API Key Management

- Model Management

- MCP Server Integration

- Workflow Orchestration

- Chat Interface

# Details

FLUJO aims to close the gap between workflow orchestration (as in n8n, Activepieces, etc.), the Model Context Protocol (MCP) and integration with other AI tools like Cline or Roo. Everything runs locally, and everything is open source.

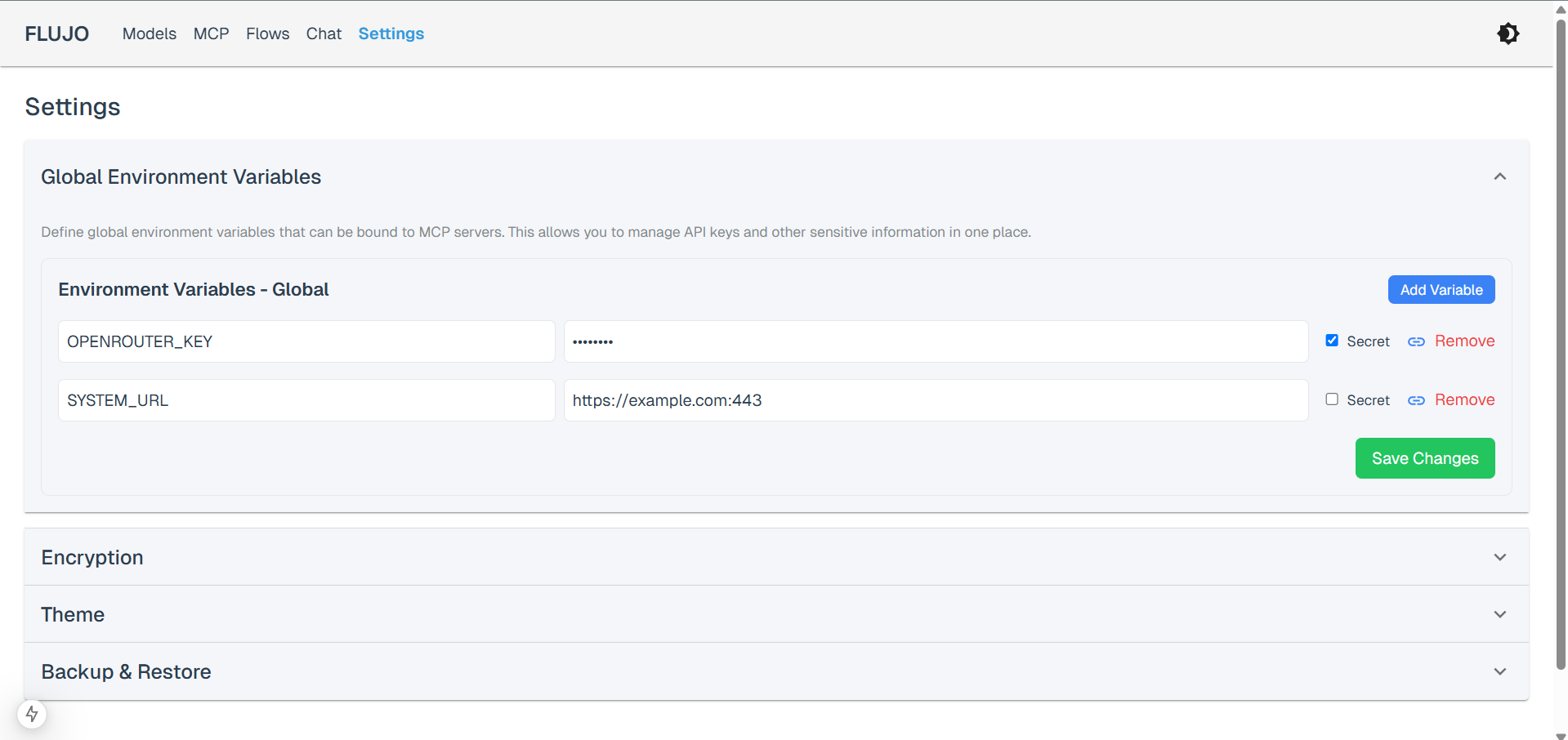

Store environment variables and API keys globally and encrypted in the app, so your API keys and passwords are not scattered all over the place.
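To illustrate the idea of a single encrypted store, here is a minimal sketch using Node's built-in `crypto` module. It assumes AES-256-GCM with a key derived from a master password via scrypt; the class name `EncryptedStore` and the master-password approach are hypothetical and not taken from FLUJO's code.

```typescript
import { createCipheriv, createDecipheriv, randomBytes, scryptSync } from "node:crypto";

// Hypothetical encrypted key-value store; not FLUJO's actual implementation.
interface EncryptedValue {
  iv: string;   // base64 initialization vector (12 bytes for GCM)
  tag: string;  // base64 GCM authentication tag
  data: string; // base64 ciphertext
}

class EncryptedStore {
  private readonly key: Buffer;
  private readonly entries = new Map<string, EncryptedValue>();

  // Derive a 32-byte AES-256 key from a master password using scrypt.
  constructor(masterPassword: string, salt: Buffer) {
    this.key = scryptSync(masterPassword, salt, 32);
  }

  // Encrypt with a fresh random IV for every value.
  set(name: string, value: string): void {
    const iv = randomBytes(12);
    const cipher = createCipheriv("aes-256-gcm", this.key, iv);
    const data = Buffer.concat([cipher.update(value, "utf8"), cipher.final()]);
    this.entries.set(name, {
      iv: iv.toString("base64"),
      tag: cipher.getAuthTag().toString("base64"),
      data: data.toString("base64"),
    });
  }

  // Decrypt and verify the authentication tag; final() throws if the data was tampered with.
  get(name: string): string | undefined {
    const entry = this.entries.get(name);
    if (!entry) return undefined;
    const decipher = createDecipheriv("aes-256-gcm", this.key, Buffer.from(entry.iv, "base64"));
    decipher.setAuthTag(Buffer.from(entry.tag, "base64"));
    const plain = Buffer.concat([
      decipher.update(Buffer.from(entry.data, "base64")),
      decipher.final(),
    ]);
    return plain.toString("utf8");
  }

  // Only the ciphertext is exposed, e.g. for persisting to disk.
  raw(name: string): EncryptedValue | undefined {
    return this.entries.get(name);
  }
}
```

Only `raw()` output would ever be written to disk; plaintext exists only in memory after `get()`.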

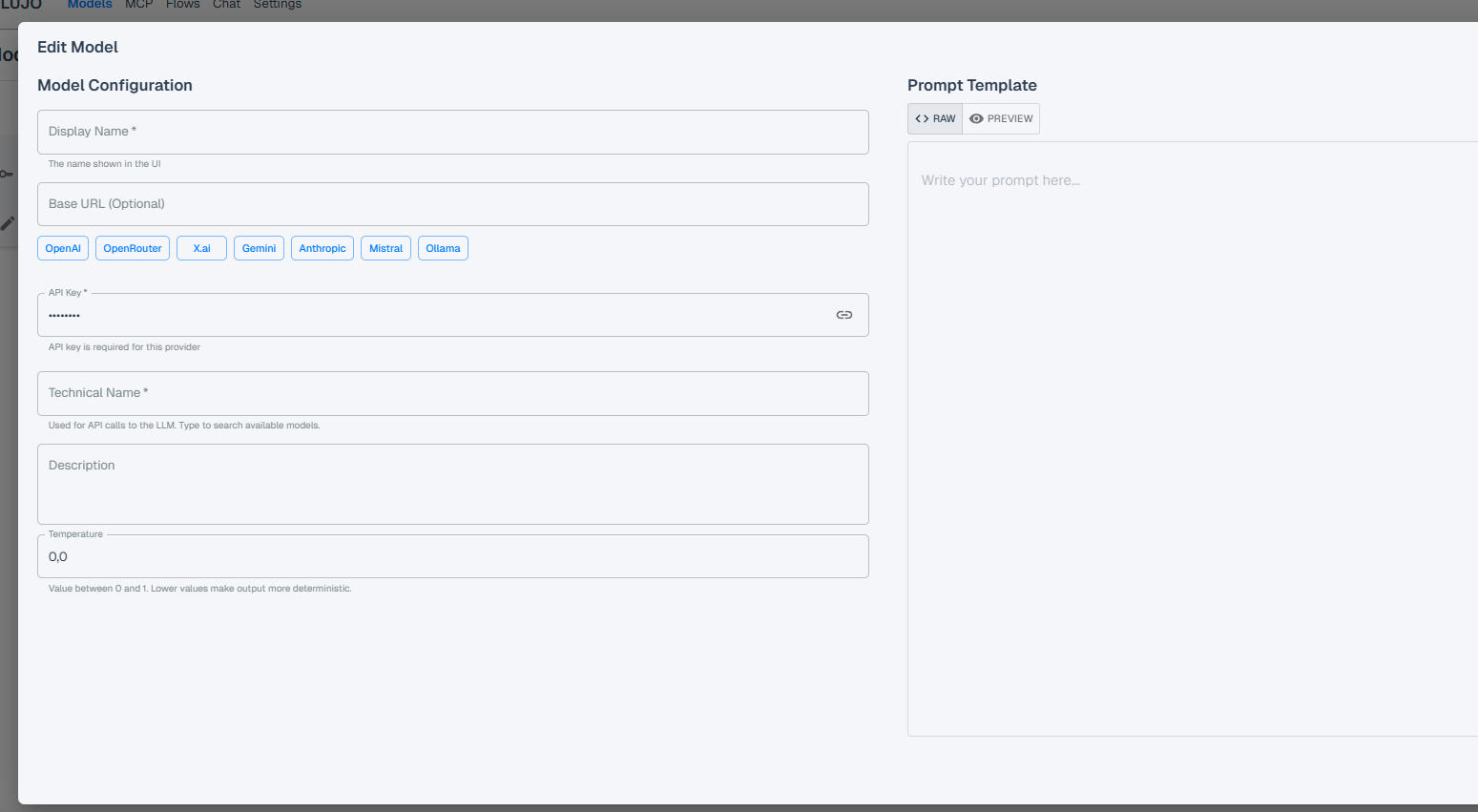

Manage different models with predefined prompts, API keys or (OpenAI-compatible) providers: want to use Claude for 10 different things, each with different system instructions? Here you go!
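The "same model, many purposes" idea can be sketched as several model entries that point at one provider and model but carry different system prompts. The `ModelConfig` shape, the placeholder provider URL and the placeholder model id below are all hypothetical, not FLUJO's schema.

```typescript
// Hypothetical model entry; field names are illustrative, not FLUJO's schema.
interface ModelConfig {
  displayName: string;  // label shown in the UI
  baseUrl: string;      // any OpenAI-compatible provider
  model: string;        // model id sent to that provider
  apiKeyRef: string;    // name of a key in the global encrypted storage
  systemPrompt: string; // predefined prompt attached to this entry
}

interface ChatMessage { role: "system" | "user"; content: string; }

// Two entries backed by the same model, each with its own system instructions.
const models: ModelConfig[] = [
  {
    displayName: "Claude - reviewer",
    baseUrl: "https://api.example.com/v1", // placeholder provider URL
    model: "claude-model-id",              // placeholder model id
    apiKeyRef: "ANTHROPIC_API_KEY",
    systemPrompt: "Review the code and list concrete bugs.",
  },
  {
    displayName: "Claude - writer",
    baseUrl: "https://api.example.com/v1",
    model: "claude-model-id",
    apiKeyRef: "ANTHROPIC_API_KEY",
    systemPrompt: "Write clear, friendly documentation.",
  },
];

// Prepend the entry's predefined prompt to the user's message.
function buildMessages(config: ModelConfig, userText: string): ChatMessage[] {
  return [
    { role: "system", content: config.systemPrompt },
    { role: "user", content: userText },
  ];
}
```

Referencing the key by name (`apiKeyRef`) rather than storing it in each entry keeps the secret in one place.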

Connect to Ollama models exposed with `ollama serve`: orchestrate locally! Use the big brains online for the heavy tasks, but let a local Ollama model do the tedious file-writing or git commits. That keeps load off the online models and your wallet a bit fuller.
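`ollama serve` listens on port 11434 by default and offers an OpenAI-compatible API under `/v1`, so a local model can be addressed the same way as an online one. The sketch below shows a simple routing rule; the task names, the rule itself and the model names (`llama3.2` must have been pulled with `ollama pull`, `gpt-4o` stands in for any hosted model) are illustrative assumptions.

```typescript
interface Endpoint { baseUrl: string; model: string; }

// Ollama's default port is 11434; its OpenAI-compatible API lives under /v1.
const LOCAL: Endpoint = { baseUrl: "http://localhost:11434/v1", model: "llama3.2" };
// Example hosted OpenAI-compatible provider.
const ONLINE: Endpoint = { baseUrl: "https://api.openai.com/v1", model: "gpt-4o" };

// Hypothetical task categories.
type TaskKind = "planning" | "code-review" | "file-write" | "git-commit";

// Hypothetical routing rule: tedious, low-stakes tasks go to the local model.
function pickEndpoint(task: TaskKind): Endpoint {
  return task === "file-write" || task === "git-commit" ? LOCAL : ONLINE;
}

// Both providers accept the same chat completions path.
function chatCompletionsUrl(ep: Endpoint): string {
  return `${ep.baseUrl}/chat/completions`;
}
```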

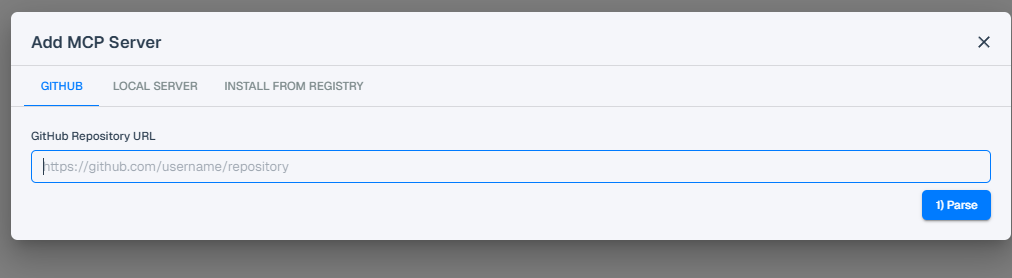

Install MCP servers from GitHub (results depend on the quality of the server's README and on the server itself): no more struggling with servers that are not yet available through Smithery or OpenTools.
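Installing from a repository means working out how to build and start the server. As a rough illustration for Node-based servers, a hypothetical heuristic could read the repository's `package.json`; FLUJO's real installer may work quite differently, and the function name `planNodeInstall` is made up for this sketch.

```typescript
// Subset of package.json fields this heuristic looks at.
interface PackageJson {
  name?: string;
  main?: string;
  bin?: string | Record<string, string>;
  scripts?: Record<string, string>;
}

interface InstallPlan { install: string[]; build?: string[]; start: string[]; }

// Hypothetical heuristic for Node-based MCP servers cloned from GitHub.
function planNodeInstall(pkg: PackageJson): InstallPlan {
  const plan: InstallPlan = { install: ["npm", "install"], start: [] };
  if (pkg.scripts?.build) plan.build = ["npm", "run", "build"];

  // Prefer an explicit bin entry, then "main", then a "start" script.
  let entry: string | undefined;
  if (typeof pkg.bin === "string") entry = pkg.bin;
  else if (pkg.bin) entry = Object.values(pkg.bin)[0];
  if (!entry) entry = pkg.main;

  if (entry) plan.start = ["node", entry];
  else if (pkg.scripts?.start) plan.start = ["npm", "start"];
  else throw new Error("cannot determine how to start this server");
  return plan;
}
```

When none of these hints exist, the README is the only remaining source, which is why results depend on its quality.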

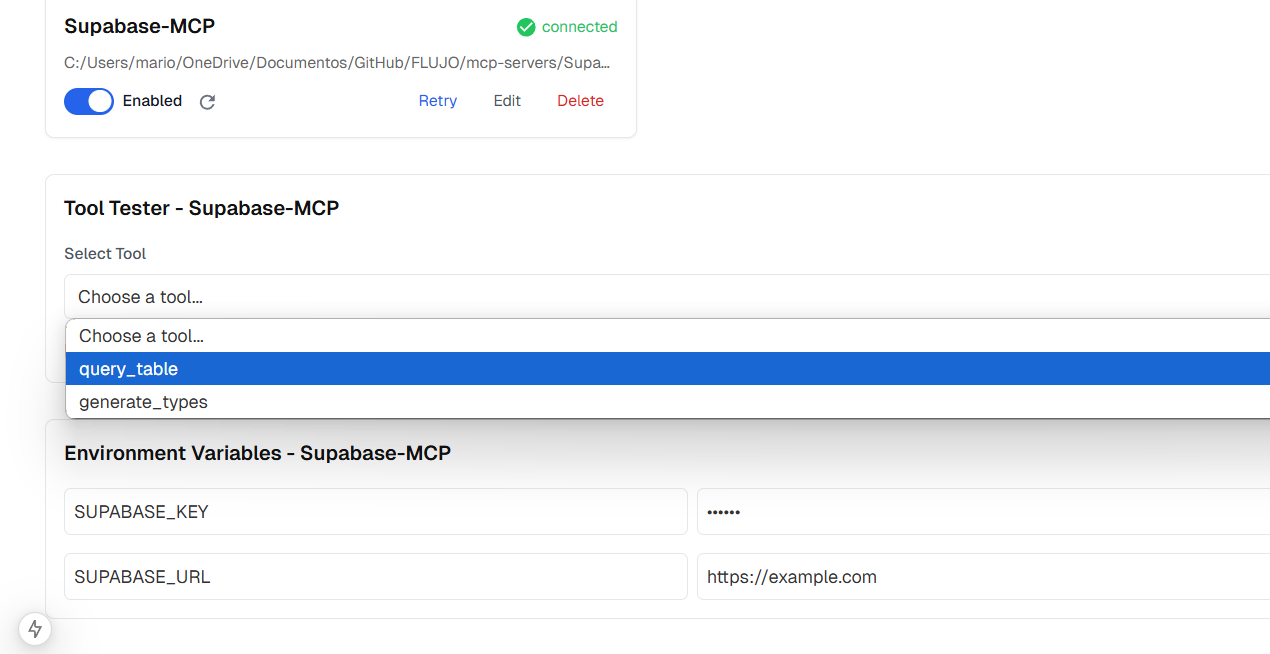

Manage and inspect MCP servers (only tools for now; resources, prompts and sampling are coming soon).
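Inspecting tools boils down to the MCP `tools/list` request, a JSON-RPC 2.0 call sent after the usual `initialize` handshake. The sketch below builds the request and parses the reply; the transport (stdio, HTTP) is left out, and the helper names are hypothetical.

```typescript
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// A tool as described by an MCP server: name, optional description, JSON Schema for arguments.
interface McpTool {
  name: string;
  description?: string;
  inputSchema: Record<string, unknown>;
}

// tools/list is paginated; pass the previous nextCursor to fetch the next page.
function toolsListRequest(id: number, cursor?: string): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "tools/list", params: cursor ? { cursor } : {} };
}

// Extract the tools from a JSON-RPC response, surfacing protocol errors.
function parseToolsList(response: unknown): { tools: McpTool[]; nextCursor?: string } {
  const r = response as {
    result?: { tools?: McpTool[]; nextCursor?: string };
    error?: { code: number; message: string };
  };
  if (r.error) throw new Error(`MCP error ${r.error.code}: ${r.error.message}`);
  if (!r.result || !Array.isArray(r.result.tools)) throw new Error("malformed tools/list response");
  return { tools: r.result.tools, nextCursor: r.result.nextCursor };
}
```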

Bind MCP servers' `.env` variables (like API keys) to the global encrypted storage: set your API key once, not a thousand times.
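Binding can be pictured as a placeholder in the server's environment that is replaced by the global value when the server starts. The `${global:NAME}` reference syntax and the function name below are hypothetical; FLUJO's actual binding format may differ.

```typescript
// Hypothetical reference syntax: a value of exactly "${global:NAME}" points at a global variable.
const GLOBAL_REF = /^\$\{global:([A-Za-z_][A-Za-z0-9_]*)\}$/;

// Resolve a server's env before launch; plain values pass through unchanged.
function resolveServerEnv(
  serverEnv: Record<string, string>,
  globals: ReadonlyMap<string, string>,
): Record<string, string> {
  const resolved: Record<string, string> = {};
  for (const [key, value] of Object.entries(serverEnv)) {
    const match = GLOBAL_REF.exec(value);
    if (!match) {
      resolved[key] = value;
      continue;
    }
    const secret = globals.get(match[1]);
    // Fail loudly instead of starting the server with a missing key.
    if (secret === undefined) throw new Error(`unbound global variable: ${match[1]}`);
    resolved[key] = secret;
  }
  return resolved;
}
```

Rotating a key then means changing one global value; every bound server picks it up on its next start.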

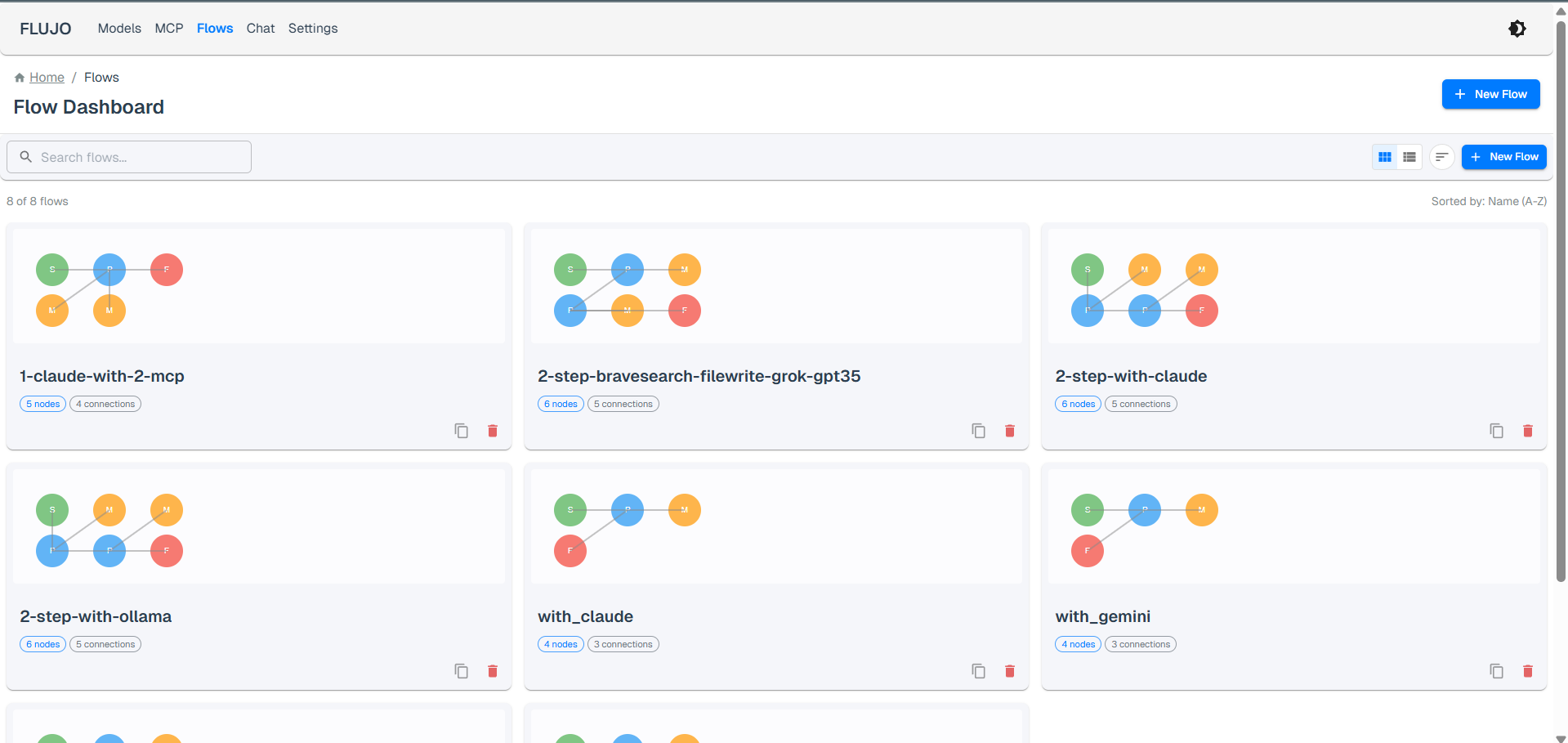

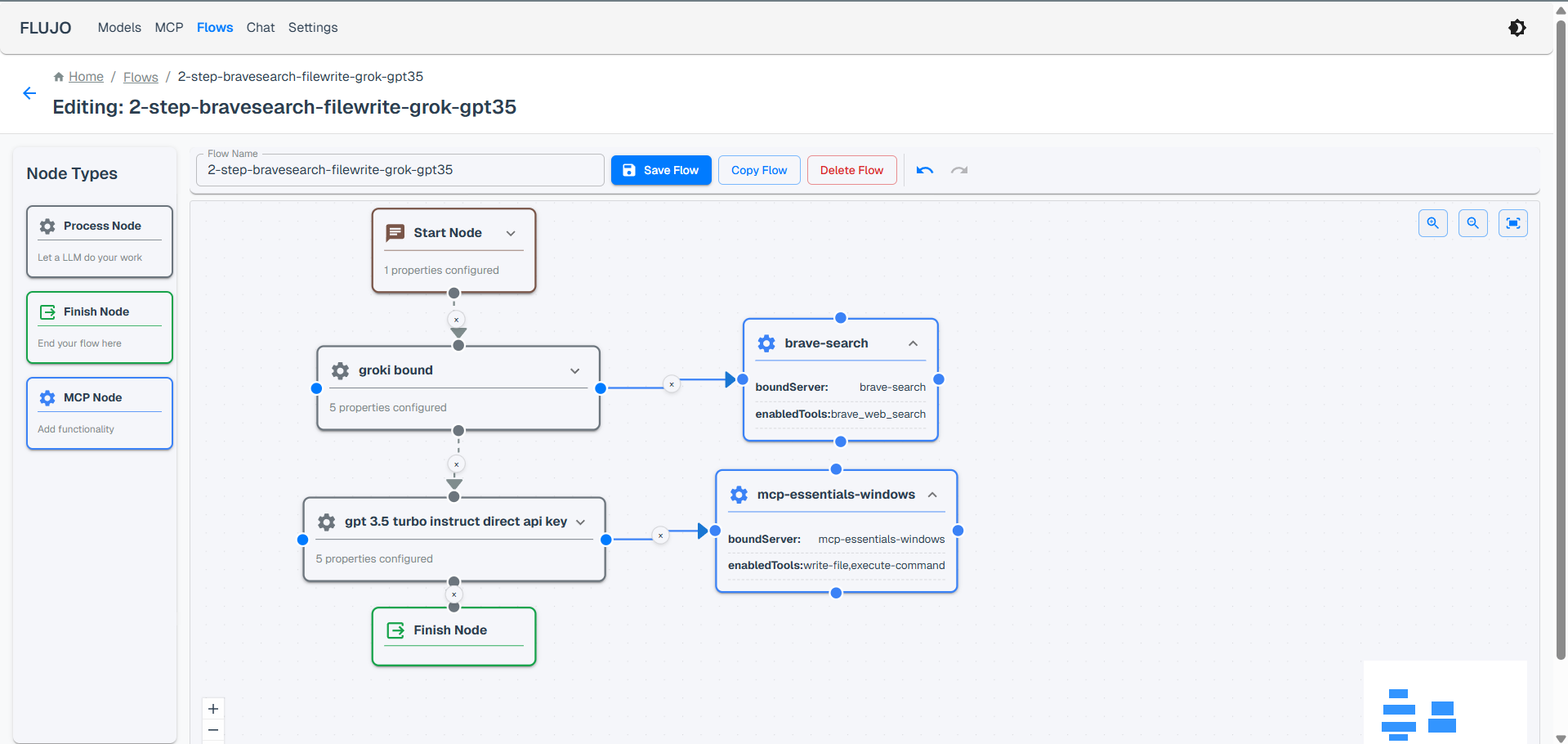

Create, design and execute flows by connecting "Processing" nodes with MCP servers and allowing or restricting individual tools: keep it simple for your model. No long system prompts, no thousands of available tools that confuse your LLM; give your model exactly what it needs in this step!
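Per-node tool restriction amounts to filtering the tools of the connected servers before they are offered to the model. In this sketch the `allowedTools` map (server name to an allowlist, `undefined` meaning "all tools of that server") is a hypothetical representation, not FLUJO's data model.

```typescript
interface ToolInfo { server: string; name: string; }

interface ProcessingNode {
  id: string;
  // Hypothetical: connected servers mapped to an allowlist; undefined allows every tool of that server.
  allowedTools: Record<string, string[] | undefined>;
}

// Return only the tools this node may expose to its model.
function toolsForNode(node: ProcessingNode, available: ToolInfo[]): ToolInfo[] {
  return available.filter((tool) => {
    if (!(tool.server in node.allowedTools)) return false; // server not connected to this node
    const allow = node.allowedTools[tool.server];
    return allow === undefined || allow.includes(tool.name);
  });
}
```

For example, a "commit" node connected only to a git server never sees filesystem tools, which keeps its tool list short.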

Mix and match system prompts configured in the model, the flow or the Processing node: more power over your prompt design, no hidden magic.
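One simple way to combine the three levels is to concatenate them from most general to most specific. The ordering (model, then flow, then node) and the blank-line separator are assumptions for this sketch, not FLUJO's documented behavior.

```typescript
interface PromptSources { model?: string; flow?: string; node?: string; }

// Assumed order: most general (model) first, most specific (node) last; empty parts are skipped.
function composeSystemPrompt(src: PromptSources): string {
  return [src.model, src.flow, src.node]
    .map((p) => p?.trim())
    .filter((p): p is string => !!p)
    .join("\n\n");
}
```

Because the result is plain text assembled from visible parts, you can always inspect exactly what the model receives.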

Reference tools directly in prompts: instead of explaining a lot, just drag the tool into the prompt and FLUJO auto-generates an instruction for your LLM on how to use the tool.
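An auto-generated tool instruction can be derived from the tool's MCP metadata (name, description and the JSON Schema of its arguments). The template below is a hypothetical illustration; FLUJO's generated wording is not reproduced here.

```typescript
// Subset of an MCP tool definition used by this sketch.
interface ToolSchema {
  name: string;
  description?: string;
  inputSchema: {
    properties?: Record<string, { type?: string; description?: string }>;
    required?: string[];
  };
}

// Hypothetical template: one header line plus one line per argument.
function toolInstruction(tool: ToolSchema): string {
  const header = `Use the tool "${tool.name}"${tool.description ? `: ${tool.description}` : "."}`;
  const lines = [header];
  const props = tool.inputSchema.properties ?? {};
  const required = new Set(tool.inputSchema.required ?? []);
  for (const [arg, spec] of Object.entries(props)) {
    const flag = required.has(arg) ? "required" : "optional";
    const type = spec.type ?? "any";
    lines.push(`- ${arg} (${type}, ${flag})${spec.description ? `: ${spec.description}` : ""}`);
  }
  return lines.join("\n");
}
```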

Interact with flows using a chat interface: select a flow and talk to your models. Let them do your work (whatever the MCP servers allow them to do, however you designed the flow) and report back to you!

Manually disable individual messages in the conversation or split the conversation into a new one: reduce context size however you want!
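Both context-reduction features can be expressed as simple list operations on the conversation. In this sketch the `disabled` flag is hypothetical, and keeping earlier system messages when splitting is an assumption made for illustration.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
  disabled?: boolean; // hypothetical flag set from the UI
}

// Only messages that are not disabled are sent to the model.
function contextFor(messages: ChatMessage[]): Array<{ role: ChatMessage["role"]; content: string }> {
  return messages.filter((m) => !m.disabled).map(({ role, content }) => ({ role, content }));
}

// Start a new conversation at `index`, carrying over the system messages that precede it (assumption).
function splitAt(messages: ChatMessage[], index: number): ChatMessage[] {
  const system = messages.filter((m, i) => m.role === "system" && i < index);
  return [...system, ...messages.slice(index)];
}
```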

Attach documents or audio to your chat messages for the LLM to process.

Integrate FLUJO into other applications like Cline or Roo (FLUJO provides an OpenAI-compatible ChatCompletions endpoint). This is still a work in progress.
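Since the endpoint is OpenAI-compatible, any client that can POST a chat completions request should be able to run a flow. The base URL (`http://localhost:4200/v1`) and the idea that the `model` field selects a flow (`flow-MyFlow`) are hypothetical placeholders here; check your FLUJO instance for the real address and naming. Global `fetch` requires Node 18 or later.

```typescript
// Hypothetical address; adjust to wherever your FLUJO instance is listening.
const FLUJO_BASE_URL = "http://localhost:4200/v1";

interface ChatCompletionRequest {
  model: string; // assumed to select the flow, e.g. "flow-MyFlow" (hypothetical naming)
  messages: Array<{ role: "system" | "user" | "assistant"; content: string }>;
  stream?: boolean;
}

// Build a standard, non-streaming chat completions body.
function buildFlowRequest(flowModel: string, prompt: string): ChatCompletionRequest {
  return { model: flowModel, messages: [{ role: "user", content: prompt }], stream: false };
}

// Send the request and return the assistant's reply text.
async function runFlow(flowModel: string, prompt: string, baseUrl = FLUJO_BASE_URL): Promise<string> {
  const res = await fetch(`${baseUrl}/chat/completions`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildFlowRequest(flowModel, prompt)),
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const json = (await res.json()) as { choices: Array<{ message: { content: string } }> };
  return json.choices[0].message.content;
}
```

Tools like Cline or Roo do the same thing when configured with an "OpenAI Compatible" provider pointing at this base URL.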