Abstract

In today’s fast-evolving, technology-driven world, STEM education plays a crucial role in a child’s development by fostering creative thinking and the practical application of knowledge. A good understanding of foundational mathematics is essential across all STEM disciplines and is needed for students to eventually succeed in their future careers. Despite the focus of education boards all over the world, basic mathematics skills and proficiency levels remain low, which is a serious issue, particularly in developing countries. One easy way to improve this situation is to use the online resources available for learning mathematics. In addition, enabling students to solve mathematics problems allows them to apply what they have learned and gives them a solid foundation. This project proposes improving the mathematics skills of school students through a problem-solving approach, delivered by an easy-to-use, low-cost software application. Although past attempts to solve mathematics problems by computer met with very limited success, the recent popularity of and advances in Artificial Intelligence (AI) systems offer a promising way to pursue this cutting-edge research. At the same time, AI systems pose new and largely unproven challenges that might make them more harmful than beneficial to a student.

This project uses an innovative technique that leverages the mathematics knowledge and capabilities of AI models while taking both their advantages and their limitations into account. The technique was demonstrated by developing a software application named MathGPT that uses OpenAI’s GPT-3.5, a large language model (LLM). MathGPT is intended for anyone who wants to practice school-level mathematics. Through an easy-to-use GUI, the user specifies their requirements: primarily existing questions or test papers, the desired complexity, and the number of practice questions or complete practice papers to generate. From this input, MathGPT generates new questions or complete practice papers and displays them in the GUI. MathGPT has been tested extensively on individual questions from various topics and areas of the elementary school curriculum, as well as on complete test papers. After analyzing the results, it is observed that the proposed technique makes it possible to exploit the mathematics capabilities of LLMs while overcoming their limitations, producing an innovative and useful application for students around the globe.

Introduction

We live in a digital world surrounded by mobile phones, laptops, social networks, and similar technologies that have grown immensely in recent years, and this growth requires us to rethink the traditional ways of performing tasks. Education has been disrupted by online teaching and tutoring resources, whose benefits were demonstrated during COVID-19 and which have grown further since. In today’s technology-savvy world, STEM education plays a vital role in developing a child’s critical thinking, flexibility, and creativity, and in helping them discover real-world applications. Mathematics, characterized by its reasoning and numerical capabilities, plays a central and practical role across the STEM disciplines.

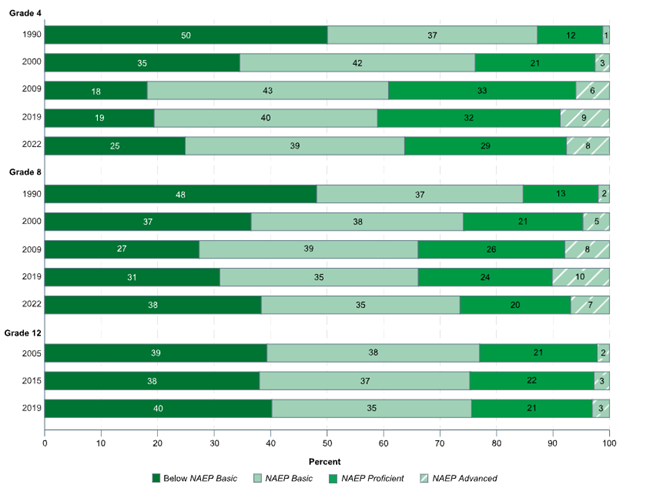

Justifiably, mathematics is globally viewed and accepted as a foundational skill required for all career paths. Foundational mathematics is deeply integrated into the school curriculum and is regarded as a privileged skill, one that is even necessary for admission to post-secondary education. However, there is growing concern that children are not learning sufficient mathematics. Figure 1 shows data from the National Assessment of Educational Progress (NAEP) on student performance in mathematics at grades 4, 8, and 12 in both public and private schools in the USA. Among 4th-grade students in 2022, 75 percent performed at or above the NAEP Basic achievement level in mathematics, 36 percent performed at or above NAEP Proficient, and 8 percent performed at NAEP Advanced. Among 8th-grade students in 2022, 62 percent performed at or above the NAEP Basic achievement level, 26 percent performed at or above NAEP Proficient, and 7 percent performed at NAEP Advanced. The corresponding figures for poorer, developing countries are far lower and a serious cause for concern. Figure 2 shows, from the World Bank Education Statistics, the percentage of 6th-grade students reaching basic proficiency in mathematics; even Namibia, the highest among the countries shown, has only 34% of its students achieving basic proficiency. Despite the policies and strategies of education boards all over the world, these numbers remain low, and the solution to improving them lies in helping students apply their knowledge and solve mathematics problems.

One easy step is to recognize that mathematics education today can be improved by tapping into the vast resources available online. There are innumerable mathematics texts, videos, and tutorials on the internet that students can use to grasp and strengthen their knowledge. Yet in a subject like mathematics, a student usually needs substantial practice to get a sense of what it means to think mathematically.

This project addresses the problem of improving the mathematics skills of elementary school students by giving them a problem-solving approach. The idea is to build an intelligent question and test generation system with options to select the recommended question pattern and complexity, so that both students and teachers find the practice engaging. The goal of the project is to overcome the challenges that computers face in understanding mathematics and to provide a low-cost, efficient tool that anyone in the world can use to develop their own mathematics skills.

This report is organized as follows. It begins with background information explaining what AI and machine learning (ML) are and what they are used for. It then describes the lab question, hypothesis, and purpose of the project, followed by the materials and procedure. Next comes the Data & Observations section, whose main parts are Topic and Subtopic Identification, Question Generation, MathGPT Application GUI and User Guide, and Practice Test Paper Generation Trials. The data analysis then discusses the results of the data and observations. Finally, the report ends with the sources of error faced in this project, future improvements, the conclusion, the references, and the appendix.

NAEP data for student performance in mathematics

Methodology

Part 1: Software Design

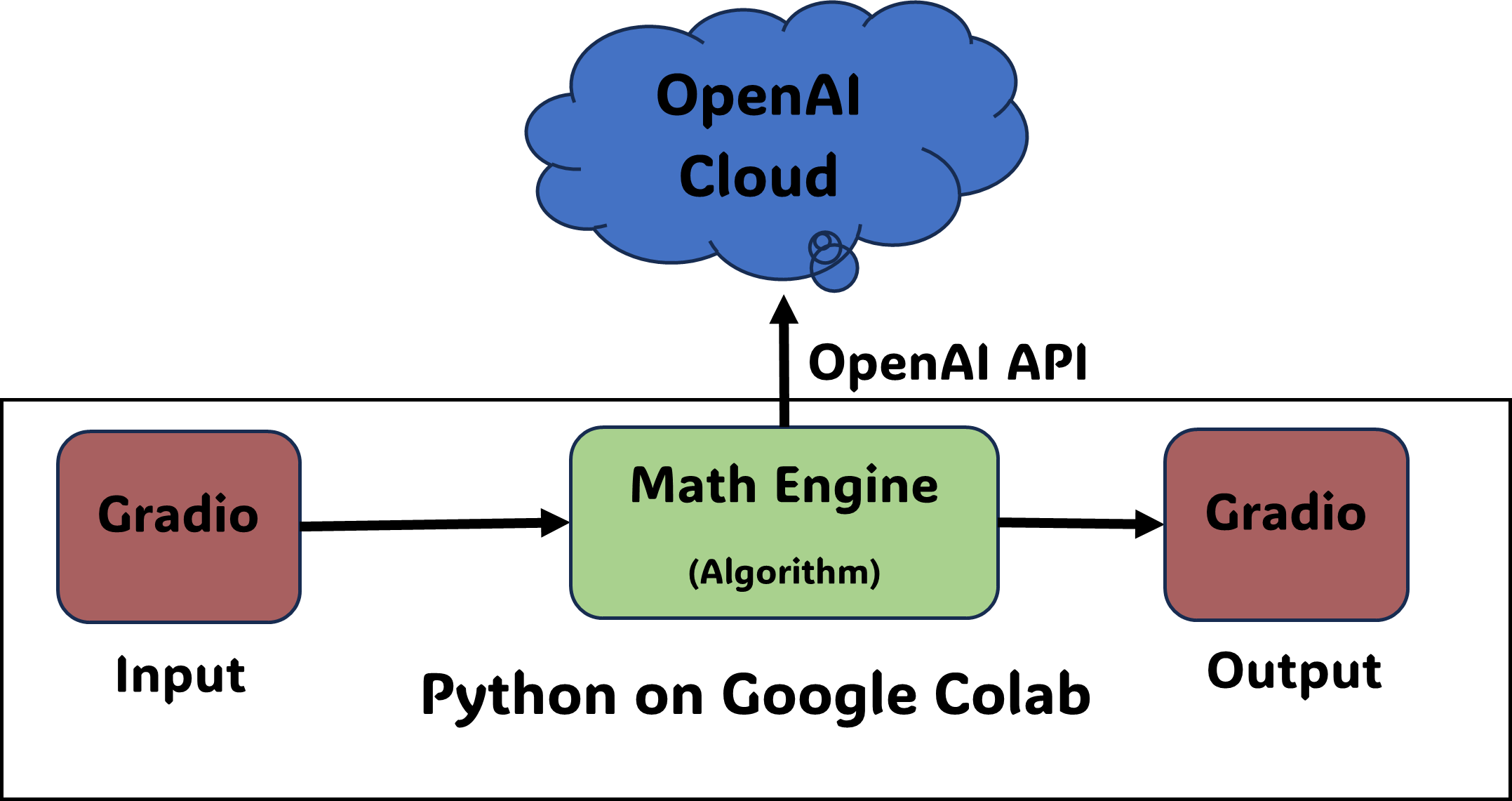

MathGPT consists of 3 modules, as shown in figure 5. The input and output module is built with Gradio, an open-source Python package. The Math Engine module contains the main logic of MathGPT. It uses the GPT-3.5 model version “gpt-3.5-turbo-1106” and calls APIs hosted in the OpenAI cloud, authenticated with an API key, to access the LLM’s functionality. MathGPT is written in Python and runs in Google Colab notebooks.
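To illustrate how the three modules could fit together, here is a minimal Python sketch. It is an assumption, not MathGPT’s code: the `GenerationRequest` fields mirror the GUI options described in this report, the prompt wording is invented, and the LLM is modelled as a plain function from prompt text to reply text so that the engine can be exercised without an OpenAI API key. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical: the LLM is modelled as a function from prompt text to reply
# text. In MathGPT this role is played by the OpenAI API; a stub can be used
# instead when testing.
LLM = Callable[[str], str]


@dataclass
class GenerationRequest:
    """Input module (hypothetical): the options a user sets in the GUI."""
    question: str
    complexity: str = "Similar"
    count: int = 1


def math_engine(request: GenerationRequest, llm: LLM) -> List[str]:
    """Math Engine module: produce `count` new questions for one request.

    A single prompt per question keeps the sketch short; the engine described
    in this report breaks the task into several LLM calls.
    """
    generated = []
    for _ in range(request.count):
        # Invented prompt wording, for illustration only.
        prompt = (f"Write a new mathematics question of {request.complexity.lower()} "
                  f"complexity based on: {request.question}")
        generated.append(llm(prompt).strip())
    return generated


def handle_request(question: str, complexity: str, count: int, llm: LLM) -> str:
    """Input/output module: build the request and format the engine's output."""
    request = GenerationRequest(question, complexity, int(count))
    questions = math_engine(request, llm)
    return "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))


if __name__ == "__main__":
    def stub_llm(prompt: str) -> str:
        return "What is 34 * 12 + 17 - 5?"

    print(handle_request("What is 21 * 11 + 26 - 2", "Similar", 2, stub_llm))
```

Keeping the model behind a plain function separates the Math Engine from both the OpenAI API and the user interface. In a Gradio front end, a small wrapper around `handle_request` that supplies the real model call could serve as the `fn` of a `gr.Interface`.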

Part 2: OpenAI APIs

LangChain is an easy-to-use open-source framework for developing LLM-powered applications in Python or JavaScript. In this project, LangChain is used to call the LLMs, supply the prompts, and parse the responses. For example, Fig 6 shows how the task of identifying whether a given mathematics question is a word problem is implemented, using the following steps:

- A template is created containing the instructions for the LLM. Writing a clear, precise, and meaningful template is essential for getting the desired response.

- Next, a response schema is created to tell the LLM how its output should be structured.

- Then an output parser is created, both to generate the format instructions for the prompt and to extract the structured answer from the LLM’s response.

- Next, a PromptTemplate() is created that combines the complete query with the output format instructions to be passed to the LLM.

- Finally, the LLM is called and responds to the query according to the instructions.

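```python
from langchain.chains import LLMChain
from langchain.output_parsers import ResponseSchema, StructuredOutputParser
from langchain.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

# Instructions for the LLM; {format_instructions} and {question} are filled in later.
word_template = """You are a mathematics expert and assistant who helps answer mathematics questions.
Is the mathematics question a word problem? Only answer with a yes or no:
{format_instructions}
{question}?"""

# The response schema asks for a JSON object with a single "word problem" field.
response_schemas = [
    ResponseSchema(name="word problem",
                   description="is the mathematics question a word problem?")
]
output_parser = StructuredOutputParser.from_response_schemas(response_schemas)
word_format_instructions = output_parser.get_format_instructions()

# Combine the template and the format instructions; only "question" remains open.
prompt_template = PromptTemplate(
    input_variables=["question"],
    template=word_template,
    partial_variables={"format_instructions": word_format_instructions},
)

# LLMChain expects a model object, not a model-name string.
word_llm = LLMChain(llm=ChatOpenAI(model="gpt-4"), prompt=prompt_template)

# parse() returns a dict such as {"word problem": "no"}.
reply = word_llm.run(question="What is 21 * 11 + 26 - 2")
is_word_problem = output_parser.parse(reply)["word problem"]
```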

Using langchain to invoke the LLM
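StructuredOutputParser asks the model to reply with a JSON object inside a markdown code fence, and `output_parser.parse()` returns that object as a Python dict. Because the model may still write “Yes”, “no.” or “true”, a small normalisation step is useful before the answer drives the rest of the pipeline. The following is a standard-library sketch of that step under those assumptions; `parse_word_problem_answer` is a hypothetical helper, not part of LangChain or of MathGPT as described here, and only the “word problem” key comes from the response schema in the code above.

```python
import json
import re


def parse_word_problem_answer(response: str) -> bool:
    """Hypothetical helper: turn the LLM's structured reply into a bool.

    Accepts a bare JSON object or one wrapped in a markdown code fence,
    reads the "word problem" field and normalises the yes/no answer.
    """
    # Take the outermost {...} span so any fence or surrounding prose is ignored.
    match = re.search(r"\{.*\}", response, re.DOTALL)
    if match is None:
        raise ValueError(f"no JSON object found in: {response!r}")
    data = json.loads(match.group(0))
    # Normalise variants such as "Yes", " no." or a JSON true/false.
    answer = str(data["word problem"]).strip().strip(".").lower()
    if answer in ("yes", "true"):
        return True
    if answer in ("no", "false"):
        return False
    raise ValueError(f"unexpected answer: {answer!r}")


if __name__ == "__main__":
    fence = "`" * 3
    reply = fence + 'json\n{"word problem": "Yes"}\n' + fence
    print(parse_word_problem_answer(reply))  # True
```

Raising an error on anything other than a clear yes or no lets the caller retry or report the failure instead of silently treating an ambiguous reply as “no”.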

Part 3: Prompt Engineering

Prompt engineering can be used to help the LLM identify the topic of a question. Prompt engineering is the practice of designing the prompt so that the LLM produces the best possible response. This project uses few-shot prompting, in which clear, definitive, and descriptive examples are included in the prompt to bound and guide the model’s choice of topic for a given question. The steps are listed below, and Fig 7 shows the code snippet for performing prompt engineering in this work.

- An example-format template is created with the PromptTemplate API; it tells the LLM how each example is laid out.

- The example format, together with the actual examples, is passed to the FewShotPromptTemplate() API, which prepares the prompt; the prompt is then passed to the LLM through the LLMChain() API.

- The few-shot chain is then linked to the base LLM chain (topic_llm in the code) with SimpleSequentialChain, so that together they act like an LLM specialized for this project. No model weights are changed, so this is not fine-tuning in the strict sense; the specialization comes entirely from the prompt.

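```python
from langchain.chains import LLMChain, SimpleSequentialChain
from langchain.prompts import FewShotPromptTemplate, PromptTemplate
from langchain_openai import ChatOpenAI

# Example questions with their correct topics, used to guide the model.
examples = [
    {"question": "What is 234-187?", "topic": "Numbers and Numerations"},
    {"question": "5/2 / 5/7", "topic": "Fractions"},
    {"question": "c - 9 at c = 16", "topic": "Algebra"},
]

# How each example is laid out inside the prompt.
example_formatter_template = """
question: {question}
topic: {topic}\n
"""
example_prompt = PromptTemplate(
    input_variables=["question", "topic"],
    template=example_formatter_template,
)

# Prefix, formatted examples and suffix are joined into one few-shot prompt;
# the suffix leaves "topic:" open for the model to complete.
few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    prefix="Classify the topic of every input",
    suffix="question: {input}\ntopic:",
    input_variables=["input"],
    example_separator="\n\n",
)

# LLMChain expects a model object, not a model-name string.
trained_llm = LLMChain(llm=ChatOpenAI(model="gpt-3.5-turbo-1106"), prompt=few_shot_prompt)

# topic_llm is a second single-input chain defined elsewhere in MathGPT (not shown);
# SimpleSequentialChain feeds the output of trained_llm into it.
topic_chain = SimpleSequentialChain(chains=[trained_llm, topic_llm], verbose=False)
```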

Using prompt engineering to improve accuracy of identification of topic
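The effect of the FewShotPromptTemplate configuration is easiest to see in the final prompt text. The following standard-library sketch assembles a comparable prompt by hand: the prefix, each example rendered in the same question/topic layout, and a suffix that leaves “topic:” open for the model to complete, all joined by a blank line. It is a simplified illustration (whitespace differs slightly from what LangChain produces), and `build_few_shot_prompt` is a hypothetical name.

```python
from typing import Dict, List

# The example pairs from the few-shot configuration (question -> topic).
EXAMPLES: List[Dict[str, str]] = [
    {"question": "What is 234-187?", "topic": "Numbers and Numerations"},
    {"question": "5/2 / 5/7", "topic": "Fractions"},
    {"question": "c - 9 at c = 16", "topic": "Algebra"},
]


def build_few_shot_prompt(new_question: str,
                          examples: List[Dict[str, str]] = EXAMPLES,
                          prefix: str = "Classify the topic of every input",
                          separator: str = "\n\n") -> str:
    """Join the prefix, the rendered examples and an open-ended suffix."""
    # Every example uses the same "question:/topic:" layout.
    shots = [f"question: {ex['question']}\ntopic: {ex['topic']}" for ex in examples]
    # The new question ends with an empty "topic:" for the model to fill in.
    suffix = f"question: {new_question}\ntopic:"
    return separator.join([prefix, *shots, suffix])


if __name__ == "__main__":
    print(build_few_shot_prompt("3/4 + 1/8"))
```

Because the model sees three solved examples immediately before the new question, its continuation after “topic:” tends to follow the same pattern and reuse one of the listed topic names, which is what bounds the answer.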

Part 4: Algorithm (Math Engine)

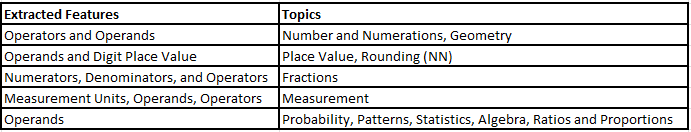

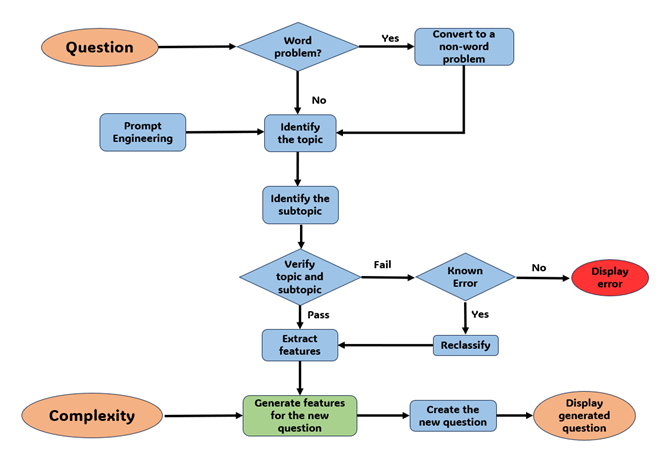

LLMs are not perfect: they can easily become confused and produce wrong or misleading results. Therefore, to improve the correctness and accuracy of the tool, the problem is broken down into simpler tasks whose results are checked and corrected where necessary. The main logic of MathGPT is shown in figure 4 and in the steps below. The key idea is to identify the question’s category as precisely as possible, break the question down into its features (e.g. operands and operators), generate a new set of features, and finally assemble the new question as the output. In Fig 9, the steps shown in blue use the LLM.

- First, the question is taken as input.

- It is classified as a word or non-word problem.

- If the question is not a word problem, the LLM predicts its topic, using the topic classification shown in Fig 10.

- The LLM then predicts the subtopic within that topic.

- To confirm that the question has been classified correctly, an alternative template is used to verify the classification.

- In the feature extraction stage, the LLM’s mathematical understanding is used heavily to parse the problem and break it down into its mathematical components. Figure 8 shows the features extracted in this project.

- New features are then generated from the current features and the desired complexity.

- The original question and the new feature set are used to compose an entirely new question.

If the question is detected early on to be a word problem, it is first converted into the expression needed to solve it, and the same steps are then applied, as shown in Fig 9. If verification fails with a known error, the question is reclassified into the correct topic and subtopic; if the error is unknown, an error is displayed.
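The control flow of these steps can be sketched in Python as follows. This is a minimal sketch under stated assumptions: every LLM-backed stage is passed in as a plain function in the `steps` dictionary so the flow can be tested with stubs, and the step names, the `KnownClassificationError` exception, and the use of an exception to signal a known misclassification are all hypothetical rather than MathGPT’s actual design.

```python
from typing import Any, Callable, Dict


class KnownClassificationError(Exception):
    """Hypothetical: raised by the verification step when it recognises the
    mistake and knows the correct topic and subtopic."""

    def __init__(self, topic: str, subtopic: str):
        super().__init__(f"question should be {topic} / {subtopic}")
        self.topic = topic
        self.subtopic = subtopic


def generate_question(question: str, complexity: str,
                      steps: Dict[str, Callable[..., Any]]) -> str:
    """Run the Math Engine stages in order; each entry of `steps` stands in
    for one LLM-backed stage (all step names are hypothetical)."""
    # Word problems are first converted into the expression that solves them.
    if steps["is_word_problem"](question):
        expression = steps["to_expression"](question)
    else:
        expression = question

    topic = steps["predict_topic"](expression)
    subtopic = steps["predict_subtopic"](expression, topic)

    # An alternative template re-checks the classification. A known error is
    # corrected by reclassifying; any other exception propagates so that the
    # caller can display it to the user.
    try:
        steps["verify"](expression, topic, subtopic)
    except KnownClassificationError as err:
        topic, subtopic = err.topic, err.subtopic

    features = steps["extract_features"](expression, topic, subtopic)
    new_features = steps["generate_features"](features, complexity)
    # The original question guides the wording of the new one.
    return steps["compose"](question, new_features)
```

Passing the stages in as functions keeps the orchestration separate from the prompts, so a stage that misbehaves (for example, topic prediction) can be re-prompted or replaced without touching the rest of the pipeline.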

Using the application

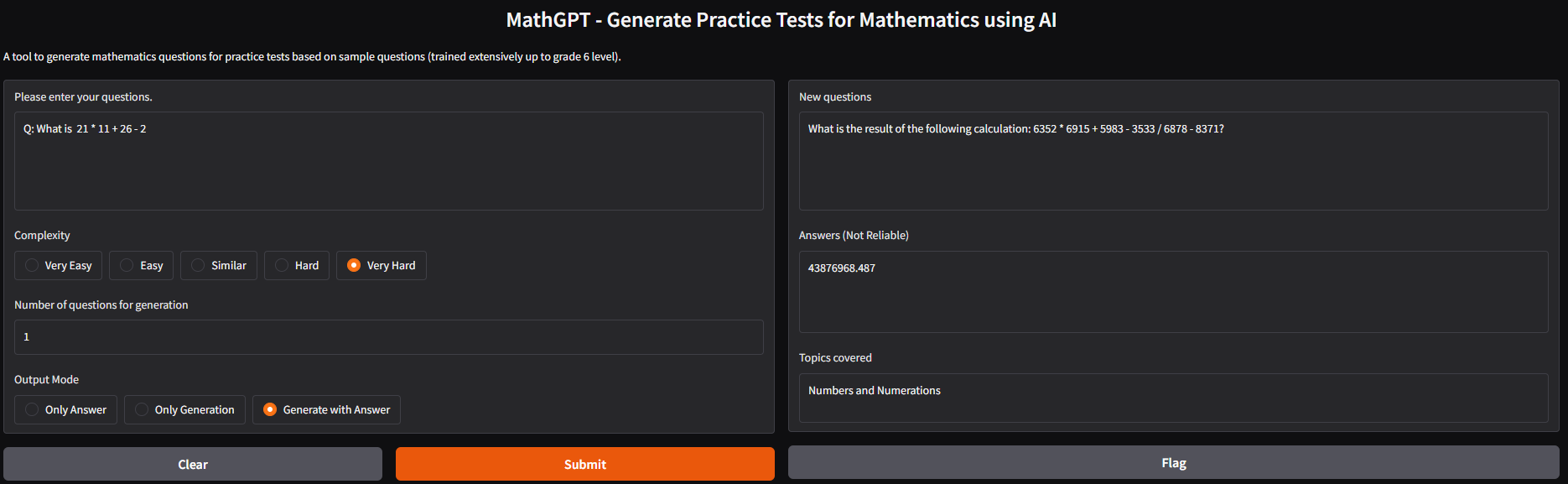

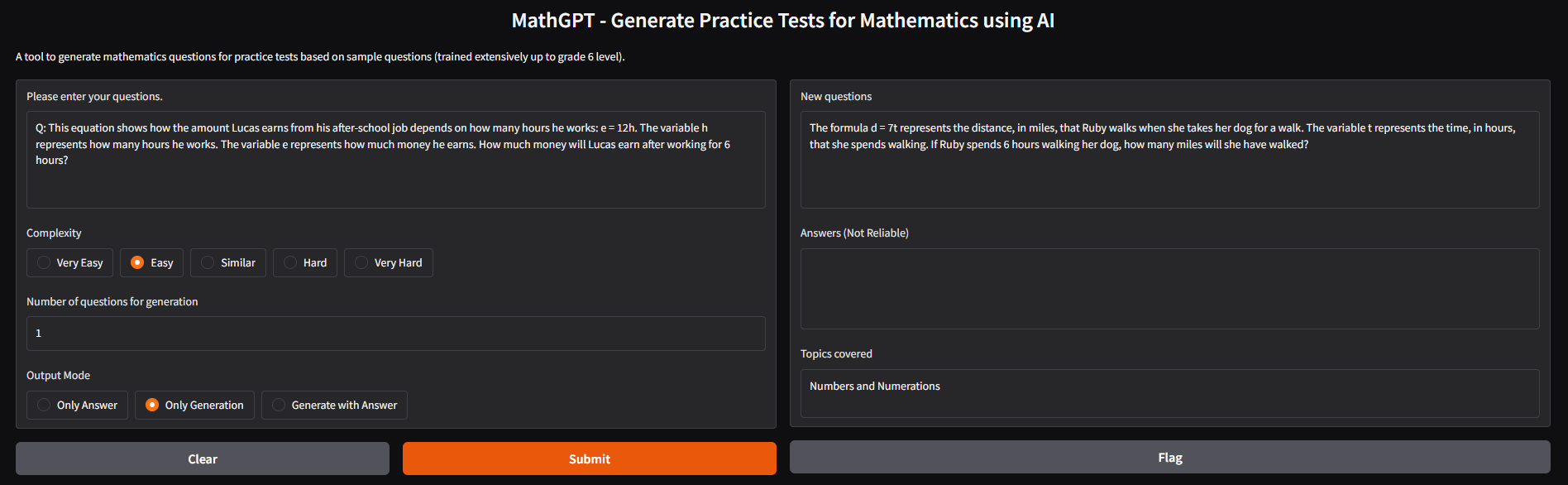

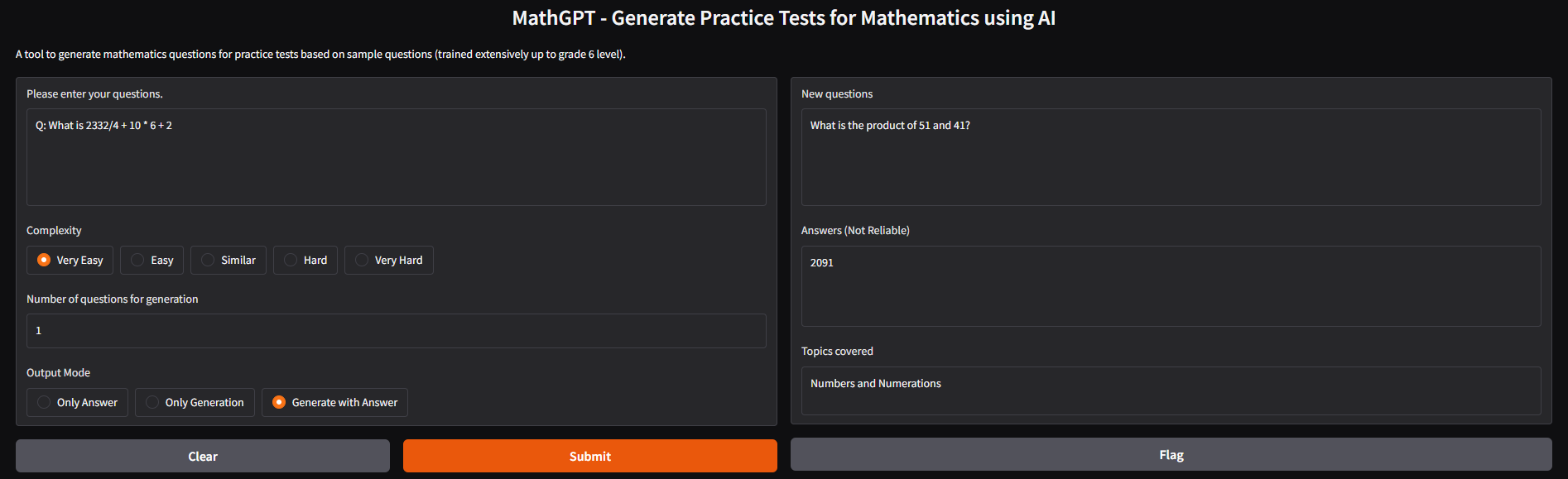

Sample Questions and the generated responses

| Input Question | Generated Question |
|---|---|
| The hobby store normally sells 10,576 trading cards per month. In June, the hobby store sold 15,498 more trading cards than normal. In total, how many trading cards did the hobby store sell in June? | A library usually lends out 71,880 books every year. This year, during the summer reading program, the library lent 21,474 more books than usual. How many books did the library lend out during the year? |
| Melissa buys 2 packs of tennis balls for $12 in total. All together, there are 6 tennis balls. How much does 1 pack of tennis balls cost? How much does 1 tennis ball cost? | David purchases 6420 comic books for $7505 in total. All together, there are 6710 comic books. How much does 1 comic book cost? How much does 1 box of comic books cost? |
| What is 21 * 11 + 26 - 2 | What is the result of the following calculation: 6352 * 6915 + 5983 - 3533 / 6878 - 8371? |
| This equation shows how the amount Lucas earns from his after-school job depends on how many hours he works: e = 12h. The variable h represents how many hours he works. The variable e represents how much money he earns. How much money will Lucas earn after working for 6 hours? | The formula d = 7t represents the distance, in miles, that Ruby walks when she takes her dog for a walk. The variable t represents the time, in hours, that she spends walking. If Ruby spends 6 hours walking her dog, how many miles will she have walked? |
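The arithmetic row of the table shows the feature-based idea of the Math Engine: the generated question keeps the input’s operators while the operands are new, although the LLM also added further terms and used larger operands. The sketch below expresses the same idea in a deterministic, rule-based form for plain arithmetic expressions. In MathGPT the feature extraction and regeneration are done by the LLM; this regular-expression version, the function name `regenerate_expression`, and the `extra_digits` mapping of complexity are assumptions for illustration only.

```python
import random
import re
from typing import Optional


def regenerate_expression(expression: str, extra_digits: int = 0,
                          rng: Optional[random.Random] = None) -> str:
    """Hypothetical rule-based stand-in for feature extraction and regeneration.

    Integers are treated as operands and + - * / as operators. Operators are
    kept; each operand is replaced by a random integer with `extra_digits`
    more digits than the original (0 keeps the size, i.e. "Similar").
    Decimals, thousands separators and parentheses are not handled.
    """
    rng = rng or random.Random()
    # Feature extraction: the ordered list of operand and operator tokens.
    tokens = re.findall(r"\d+|[+\-*/]", expression)
    rebuilt = []
    for token in tokens:
        if token.isdigit():
            # New operand with the requested number of digits (at least one).
            digits = max(1, len(token) + extra_digits)
            rebuilt.append(str(rng.randint(10 ** (digits - 1), 10 ** digits - 1)))
        else:
            rebuilt.append(token)
    # Reassembly: the same structure with the new operands.
    return " ".join(rebuilt)


if __name__ == "__main__":
    new_expr = regenerate_expression("What is 21 * 11 + 26 - 2", extra_digits=2,
                                     rng=random.Random(7))
    print(f"What is {new_expr}?")
```

A rule-based version like this only works for well-formed arithmetic; the attraction of using the LLM for this stage, as described in this report, is that it can also extract features from algebra and word problems.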

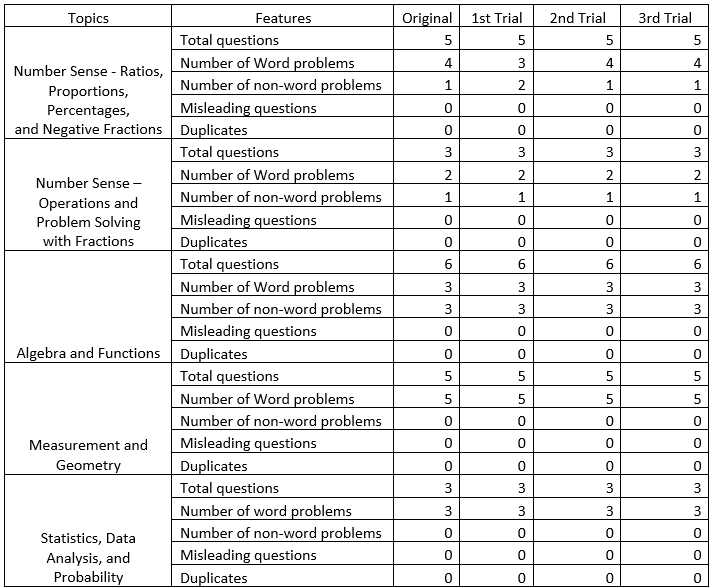

Results

This section presents the data from trials that generate a practice test paper from an existing test paper. The input test paper contains 22 questions distributed among various topics, as shown below. The questions are taken as-is from California’s grade 6 standards test and pasted into the input text box as described above. The complexity is set to “Similar”, the number of questions to generate to 1, and the mode to “Only Generation”. This instructs MathGPT to generate one question of similar complexity for each input question, 22 questions in total. The experiment was repeated 3 times, and in each trial the original and generated questions were compared and recorded in the table.

Generation of sample test papers taken from California grade 6 standards test (Trial 1, 2, 3)