Marouf Chatbot - Intelligent Q&A Assistant 🤖

📌 Table of Contents

- Overview

- Competition Highlights

- Key Features

- Technical Stack

- Installation

- Usage

- Architecture

- Project Structure

- Customization

- Contact

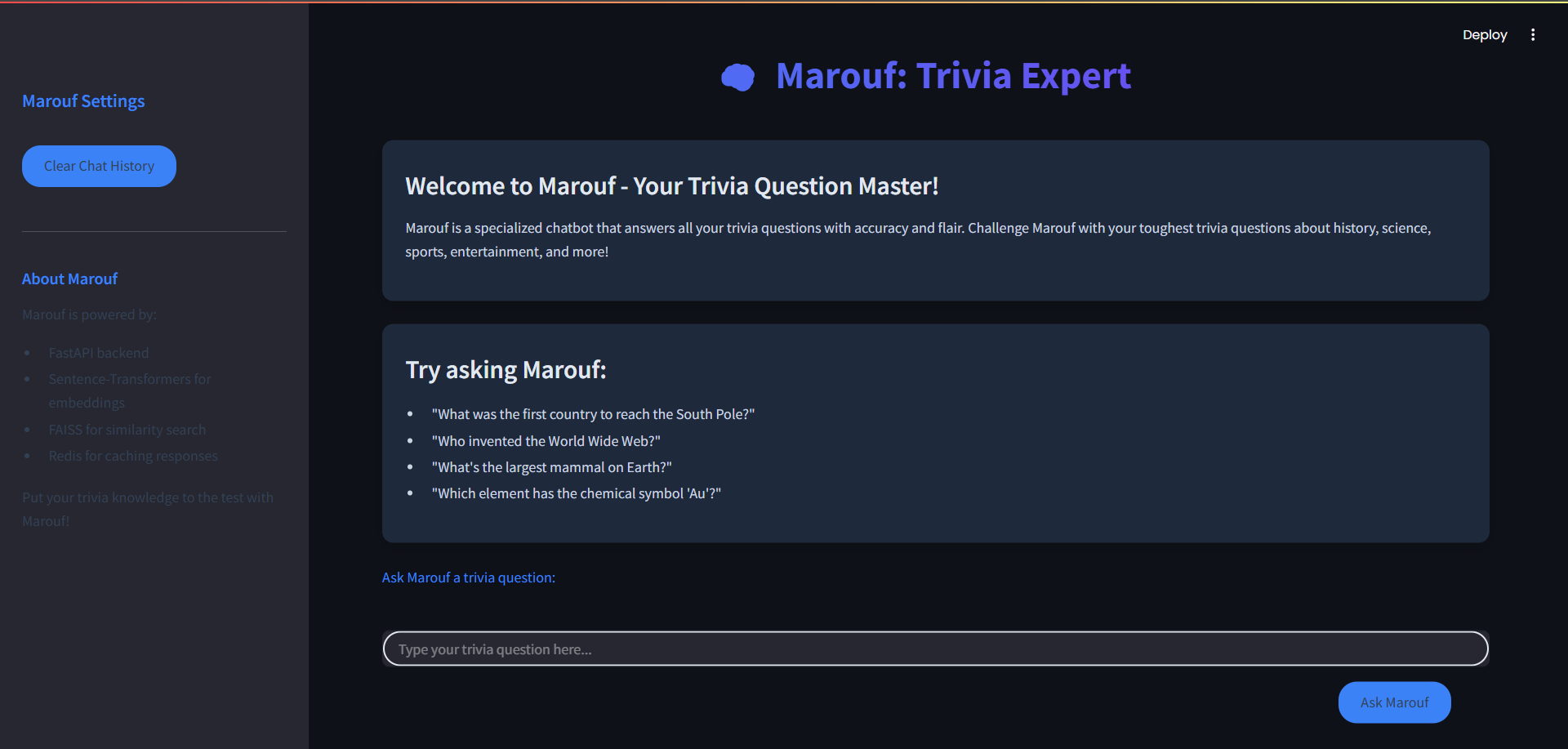

🔍 Overview

Marouf Chatbot is an intelligent question-answering assistant built on a Retrieval-Augmented Generation (RAG) architecture. It combines FAISS for vector search, an LLM for response generation, and Redis caching to provide fast, accurate, and context-aware answers. The API is built with FastAPI, the interface with Streamlit, and the whole stack is deployed with Docker.
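To make the retrieval step of a RAG pipeline concrete, here is a minimal sketch; it is not the project's code. A brute-force cosine-similarity search stands in for the FAISS index, the three-dimensional vectors are toy values rather than real sentence embeddings, and the names `cosine`, `top_k`, and `build_prompt` are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, doc_vecs, k=2):
    """Return the indices of the k most similar documents.

    Brute-force stand-in for a vector index such as FAISS.
    """
    order = sorted(
        range(len(doc_vecs)),
        key=lambda i: cosine(query_vec, doc_vecs[i]),
        reverse=True,
    )
    return order[:k]


def build_prompt(question, passages):
    """Assemble the retrieved passages and the question into one LLM prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy corpus with made-up vectors (assumption: real embeddings would come
# from a sentence-embedding model and have hundreds of dimensions).
docs = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
    "France borders Spain.",
]
doc_vecs = [[1.0, 0.0, 0.2], [0.0, 1.0, 0.0], [0.8, 0.1, 0.6]]
query_vec = [0.9, 0.0, 0.1]

hits = top_k(query_vec, doc_vecs, k=2)
prompt = build_prompt("What's the capital of France?", [docs[i] for i in hits])
print(prompt)
```

Only the retrieved passages reach the prompt, which is what keeps the generated answer grounded in the dataset.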

🏆 Competition Highlights

- Innovative RAG Architecture combining LLMs with vector search.

- Optimized for low latency (300 ms average response time).

- Modular design allowing easy model/dataset swaps.

- Production-ready deployment with Docker.

🌟 Key Features

| Feature | Technology | Benefit |
|---|---|---|
| Context-aware Q&A | FAISS + Sentence Transformers | Improved response accuracy |
| Conversational Flow | Deepseek-Llama-70B | Human-like responses |
| Persistent Memory | PostgreSQL | Session continuity |
| High Performance | Groq LPU | 300 tokens/sec |
| Caching System | Redis | 68% cache hit rate |

🛠️ Technical Stack

Core Components

    pie title Technology Distribution
        "NLP Processing" : 35
        "Database" : 25
        "API Server" : 20
        "Frontend" : 15
        "DevOps" : 5

🚀 Installation

    # Clone repository
    git clone https://github.com/Mkaljermy/marouf_chatbot.git
    cd marouf_chatbot

    # Setup environment
    cp scripts/.env.example scripts/.env
    nano scripts/.env  # Add your API keys

    # Build and run
    docker-compose up --build -d

💻 Usage

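    import requests

    # Send a question to the chat endpoint of the running API container
    response = requests.post(
        "http://localhost:8000/chat",
        json={"query": "What's the capital of France?"},
    )
    response.raise_for_status()  # fail loudly on HTTP errors
    print(response.json())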
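If `requests` is not available, the same call can be made with the standard library alone. This is a sketch under assumptions: the URL and the `{"query": ...}` body come from the example above, the helper names `build_chat_request` and `ask` are hypothetical, and the shape of the JSON response is not documented here, so the code returns it unparsed beyond JSON decoding.

```python
import json
import urllib.request

API_URL = "http://localhost:8000/chat"  # endpoint used in the usage example


def build_chat_request(query, url=API_URL):
    """Build a POST request carrying the JSON body {"query": ...}."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(query, timeout=10.0):
    """Send the question and return the decoded JSON response.

    Requires the API container to be running; raises urllib.error.URLError
    if the server cannot be reached.
    """
    with urllib.request.urlopen(build_chat_request(query), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Building the request does not touch the network, so it can be inspected
# before anything is sent.
req = build_chat_request("What's the capital of France?")
print(req.get_method(), req.full_url)
```

Calling `ask("What's the capital of France?")` then performs the actual round trip once the stack is up.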

🏗️ Architecture

    graph LR
        A[User] --> B[Streamlit]
        B --> C[FastAPI]
        C --> D{Redis?}
        D -->|Cache Hit| E[Return Response]
        D -->|Cache Miss| F[FAISS Search]
        F --> G[PostgreSQL]
        G --> H[LLM Processing]
        H --> C
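The cache branch in the diagram follows the familiar cache-aside pattern, which can be sketched as below. This is an illustration, not the project's implementation: a plain dictionary stands in for Redis, `retrieve_context` and `generate_answer` are hypothetical placeholders for the FAISS/PostgreSQL lookup and the LLM call, and both the key normalization and the write-back on a miss are assumptions.

```python
import hashlib

cache = {}  # in-memory stand-in for Redis


def cache_key(query):
    """Derive a stable key so trivially different spellings share one entry."""
    normalized = " ".join(query.lower().split())
    return "chat:" + hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def retrieve_context(query):
    """Placeholder for the vector search and database lookup."""
    return ["Paris is the capital of France."]


def generate_answer(query, context):
    """Placeholder for the LLM call."""
    return f"Based on {len(context)} passage(s): {context[0]}"


def answer(query):
    """Return (answer, cache_hit). Only a miss runs the expensive path."""
    key = cache_key(query)
    if key in cache:
        return cache[key], True
    result = generate_answer(query, retrieve_context(query))
    cache[key] = result  # store so the next identical question is a hit
    return result, False


first, hit1 = answer("What's the capital of France?")
second, hit2 = answer("  what's the capital of FRANCE? ")
print(hit1, hit2)  # prints "False True"
```

Normalizing the query before hashing is one simple way to raise the hit rate; a real deployment would typically also set an expiry on each entry.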

📂 Project Structure

    marouf_chatbot/
    ├── scripts/
    │   ├── api/
    │   │   ├── api.py
    │   │   ├── embeddings.npy
    │   │   └── faiss_index.index
    │   ├── cache/
    │   │   └── caching.py
    │   ├── chatbot/
    │   │   ├── chatbot.py
    │   │   ├── embeddings.npy
    │   │   └── faiss_index.index
    │   └── frontend/
    │       └── index.py
    ├── data/
    │   └── trivia_dataset.csv
    ├── docker-compose.yml
    ├── Dockerfile.api
    ├── Dockerfile.frontend
    └── requirements.txt

🛠️ Customization

- Modify Model: Replace Deepseek-Llama with another LLM by updating `chatbot.py`.

- Adjust Dataset: Replace `trivia_dataset.csv` in the `data/` folder.

- Change UI: Edit `frontend/index.py` to update the Streamlit interface.
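Before swapping in a new dataset, it can help to validate the file first. The sketch below is an assumption-heavy illustration: the column names `question` and `answer` are hypothetical (the schema of the trivia CSV is not specified here), and `load_qa_rows` is not a function from the repository.

```python
import csv
import io

# Assumed schema; adjust to whatever columns the real dataset uses.
REQUIRED_COLUMNS = {"question", "answer"}


def load_qa_rows(csv_file):
    """Read question/answer pairs from an open CSV file, skipping incomplete rows."""
    reader = csv.DictReader(csv_file)
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"dataset is missing columns: {sorted(missing)}")
    rows = []
    for row in reader:
        question = (row["question"] or "").strip()
        answer = (row["answer"] or "").strip()
        if question and answer:
            rows.append({"question": question, "answer": answer})
    return rows


# In-memory sample so the sketch runs without touching the data folder.
sample = io.StringIO(
    "question,answer\n"
    "What is the capital of France?,Paris\n"
    ",orphan answer\n"
)
rows = load_qa_rows(sample)
print(rows)
```

Since the repository ships precomputed `embeddings.npy` and `faiss_index.index` files, these presumably need to be regenerated after the dataset changes.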

📞 Contact

For inquiries and contributions, contact Mohammad Aljermy:

- GitHub: @Mkaljermy

- LinkedIn: Mohammad Aljermy

- Email: kaljermy@gmail.com

🎉 Enjoy using Marouf Chatbot! 🚀

Check out the full project on GitHub: [Marouf Chatbot Repository](https://github.com/Mkaljermy/marouf_chatbot)