Local AI: Secure, Private LLM Interaction

Local AI is a web application that lets users interact with Large Language Models (LLMs) while keeping full control over their data and privacy. With data breaches and privacy concerns on the rise, Local AI offers a secure alternative to cloud-based LLM services.

The Growing Need for Privacy in AI

The widespread adoption of AI has led to concerns about the handling of sensitive user data. Traditional cloud-based LLM services often store user queries and data, raising questions about data security and confidentiality. Users need a solution that allows them to leverage the power of LLMs without compromising their privacy.

Local AI: A Privacy-Focused Approach

Local AI addresses these concerns by processing all user queries locally. This means that your data never leaves your device, ensuring complete confidentiality. Local AI provides a user-friendly interface for interacting with LLMs, combining the power of AI with the security of local processing.
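Whether data stays on the device depends on where the model endpoint lives. Here is a hedged sketch that assumes the app can be pointed at a model server URL (a hypothetical setting). The helper `is_local_endpoint` is also hypothetical. It checks that the URL's host is a loopback address, meaning this machine, before any query is sent:

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is a loopback address (this machine)."""
    host = urlparse(url).hostname  # brackets around IPv6 literals are stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        # Accepts both IPv4 ("127.0.0.1") and IPv6 ("::1") literals.
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname could resolve to a remote machine.
        return False


print(is_local_endpoint("http://127.0.0.1:8080/v1/chat"))  # True
print(is_local_endpoint("http://[::1]:8080/"))             # True
print(is_local_endpoint("https://api.example.com/v1"))     # False
```

Any hostname other than `localhost` is treated as non-local, even if it happens to resolve to this machine. This errs on the side of caution.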

Key Features

- Local Processing: All data is processed locally, ensuring privacy.

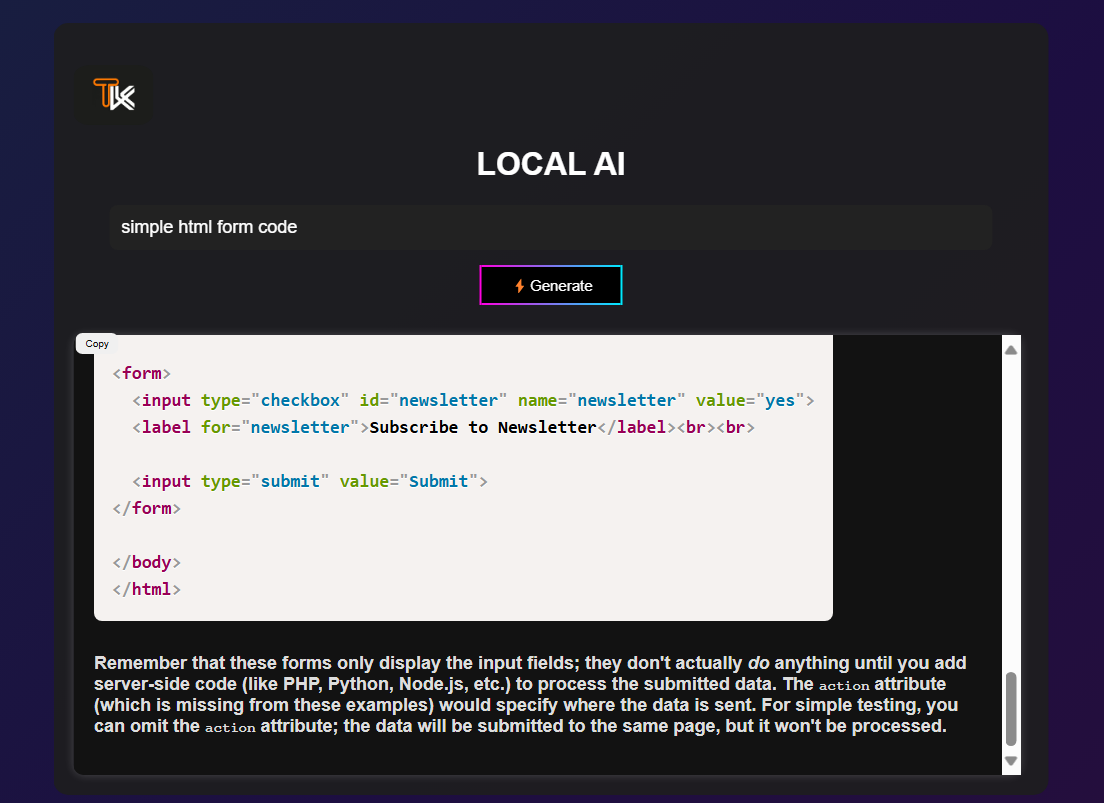

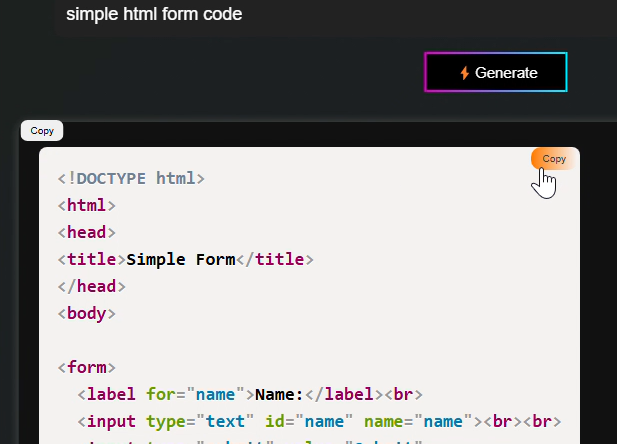

- User-Friendly Interface: Intuitive web interface for easy interaction.

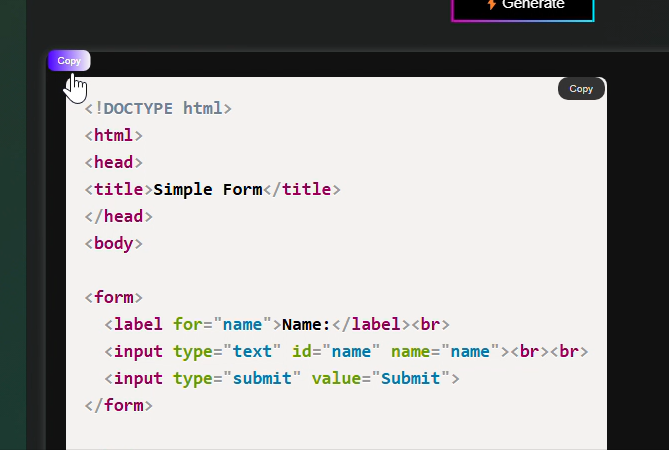

- Code Block Highlighting: Syntax highlighting for improved code readability.

- Copy Functionality: Easily copy responses and code blocks.

- Loading Indicator: Visual feedback during processing.
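Highlighting and per-block copying both require the interface to know where each code block starts and ends in the model's reply. The sketch below assumes replies use Markdown-style fenced blocks, which this README does not specify. It shows one way to do that splitting step in Python. The function name `split_response` is hypothetical.

```python
import re
from typing import List, Tuple

FENCE = "`" * 3  # Markdown code fence, built this way so it does not end this block

# Opening fence with an optional language tag, the code, then the closing fence.
CODE_BLOCK_RE = re.compile(
    re.escape(FENCE) + r"([\w+-]*)\n(.*?)" + re.escape(FENCE), re.DOTALL
)


def split_response(text: str) -> List[Tuple[str, str, str]]:
    """Split an LLM reply into ("text", "", chunk) and ("code", lang, code) parts."""
    parts: List[Tuple[str, str, str]] = []
    pos = 0
    for match in CODE_BLOCK_RE.finditer(text):
        if match.start() > pos:  # prose before this code block
            parts.append(("text", "", text[pos:match.start()]))
        parts.append(("code", match.group(1), match.group(2)))
        pos = match.end()
    if pos < len(text):  # trailing prose after the last block
        parts.append(("text", "", text[pos:]))
    return parts


reply = "Try this:\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\nDone."
for kind, lang, chunk in split_response(reply):
    print(kind, lang or "-", repr(chunk))
```

Each "code" part can then be passed to a syntax highlighter and given its own copy button. The "text" parts render as ordinary prose. An unterminated fence is left inside a "text" part rather than guessed at.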

How Local AI Works

- User Input: The user enters their query in the web interface.

- Local Processing: The application running on your machine handles the query, using your LLM API key to access the model.

- Response Display: The LLM's response is displayed in the browser.

- Copy and History: Users can copy full responses as well as individual code blocks.
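The request flow above can be sketched as a single server-side function. This is a minimal illustration, not the app's actual code. The environment variable name `LLM_API_KEY` and the `call_llm` callback are hypothetical stand-ins for whatever the application really uses.

```python
import os
from typing import Callable

# Hypothetical variable name; the app may read a different one.
API_KEY_VAR = "LLM_API_KEY"


def handle_query(query: str, call_llm: Callable[[str, str], str]) -> str:
    """Validate a query, pass it to the model with the API key, and return the reply.

    call_llm(api_key, prompt) stands in for the app's real model client.
    """
    query = query.strip()
    if not query:
        raise ValueError("Query must not be empty.")
    api_key = os.environ.get(API_KEY_VAR)
    if not api_key:
        raise RuntimeError(f"Set the {API_KEY_VAR} environment variable first.")
    return call_llm(api_key, query)


# Usage with a fake model so the sketch runs without any network access.
def echo_model(api_key: str, prompt: str) -> str:
    return f"You asked: {prompt}"


os.environ.setdefault(API_KEY_VAR, "dummy-key")
print(handle_query("  What is a virtual environment?  ", echo_model))
# prints: You asked: What is a virtual environment?
```

Passing the model call in as a parameter keeps the validation logic testable without a real model or API key.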

Installation

Follow these steps to set up Local AI:

- Clone the repository: git clone https://github.com/Tejas7k/LocalAI.git

- Create a virtual environment (for example, python -m venv venv) and activate it.

- Install dependencies:

pip install -r requirements.txt

- Set your LLM API key as an environment variable.

- Run the application:

python app.py
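Before running the app, it can help to confirm that the setup steps took effect. The check below is a minimal sketch and is not part of the repository. The variable name `LLM_API_KEY` is hypothetical, so substitute whichever variable the app actually expects.

```python
import os
import sys
from typing import List

API_KEY_VAR = "LLM_API_KEY"  # hypothetical name; use the variable the app expects


def preflight_problems() -> List[str]:
    """Return human-readable setup problems (an empty list means ready to run)."""
    problems: List[str] = []
    # Inside a virtual environment, sys.prefix differs from sys.base_prefix.
    if sys.prefix == sys.base_prefix:
        problems.append("Not running inside a virtual environment.")
    if not os.environ.get(API_KEY_VAR):
        problems.append(f"Environment variable {API_KEY_VAR} is not set.")
    return problems


for issue in preflight_problems():
    print("Setup problem:", issue)
```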

Usage

🔹 Enter Your Query

Type your query in the input field.

🔹 Click "Generate"

Click the "Generate" button to process your request.

🔹 View the Response

Generated content will be displayed in the response area.

🔹 Copy the Response

Click the "Copy Response" button to copy the full output.

🔹 Copy Code Blocks

Copy an individual code snippet with the "Copy" button on that block.

Conclusion

Local AI provides a secure and private way to leverage the power of LLMs. By processing data locally, users can maintain complete control over their information. We invite you to try Local AI and experience the benefits of privacy-focused AI.