🚀 Abstract

We're bringing LLM-powered intelligence to your CI/CD pipeline: no RAG, no fine-tuning, just results. Our project feeds source code and tool-generated reports (test results, security scans, etc.) directly to an LLM for smarter, more efficient analysis, security checks, and QA.

Seamlessly integrate, let automation handle repetitive tasks, and focus on what truly matters—building and shipping high-quality software.

👔 Introduction

Modern software engineering follows a structured path—from gathering requirements to deploying the final product. Copilot has reshaped how developers transition from ideas to code, yet a critical gap remains: the untapped potential of automated intelligence in CI/CD pipelines.

Today's CI/CD pipelines handle a vast amount of structured data—source code, test results, security scans, and dependency reports. However, these artifacts, generated by existing tools, are underutilized. They provide raw data but lack deeper insights.

So, what if we could extract meaningful analysis from this structured information?

Our project enhances CI/CD workflows by integrating intelligent automation, transforming existing toolkits into a context-aware assistant. This enables:

✅ More effective security assessments

✅ Proactive insights that support engineering decisions

This isn’t just about automation—it’s about making CI/CD pipelines more adaptive, efficient, and responsive.

👀 Methodology

On one hand, following the ReAct approach, LLMs give more reliable responses with fewer hallucinations when provided with sufficient ground truth data. With reasoning techniques like Chain of Thought (CoT), LLMs can analyze patterns, detect anomalies, and surface actionable insights.

On the other hand, modern software scanning tools already generate structured data, but they lack the ability to interpret, connect, and act on it.

The Challenge:

🔥 Can we extract real intelligence—without RAG, without fine-tuning, just results?

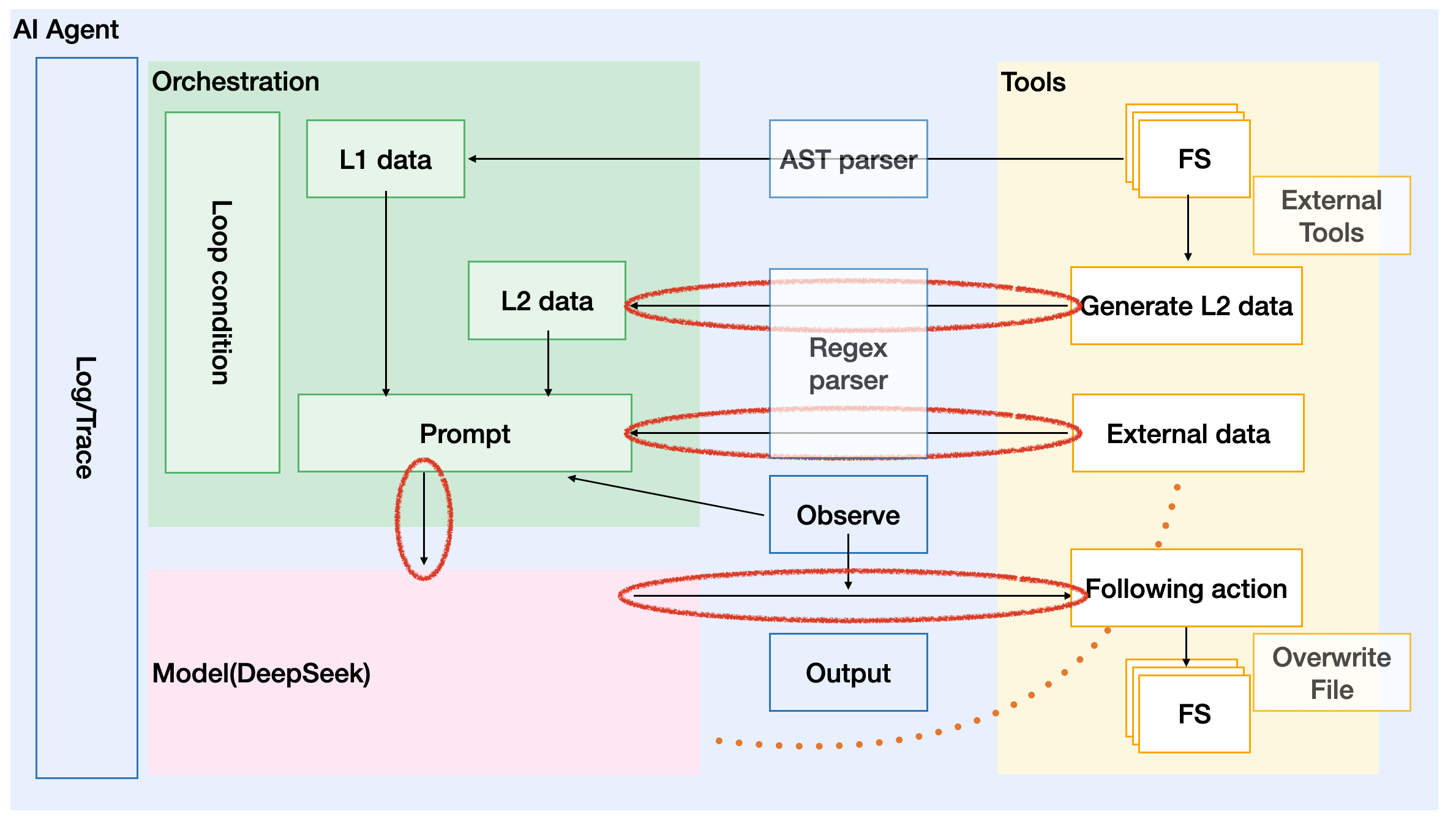

Let's shake hands and make it happen. We give the LLM ground truth (source code or structured reports) together with a clear human intention, and ask it to handle the task. Described in the style of Google's AI agent design, we implement tools that read data from both source code and structured reports, and we use a prompt prefix to represent the human intention.
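The design above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the names `Question`, `read_ground_truth`, and `build_question` are hypothetical, and the sketch assumes the ground truth is plain text files that are simply concatenated after the prompt prefix.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Question:
    """A question for the LLM: human intention first, ground truth after."""
    prompt: str   # human intention, used as the prefix
    content: str  # ground truth gathered by the tools

    def render(self) -> str:
        # The prompt prefix always comes before the ground truth content.
        return f"{self.prompt}\n\n{self.content}"


def read_ground_truth(path: str) -> str:
    """Hypothetical tool: read a source file or structured report as text."""
    return Path(path).read_text(encoding="utf-8")


def build_question(prompt: str, paths: list[str]) -> Question:
    """Combine one prompt with the text of every given file."""
    # Label each file so the LLM can tell the sources apart.
    parts = [f"### {p}\n{read_ground_truth(p)}" for p in paths]
    return Question(prompt=prompt, content="\n\n".join(parts))
```

Calling `build_question("Write docstrings for these functions.", ["src/app.py"]).render()` would produce the full text sent to the LLM; how that text is sent depends on the LLM client and is left out here.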

🔥 Can we upgrade CI/CD pipelines from passive scanners to AI-driven assistants?

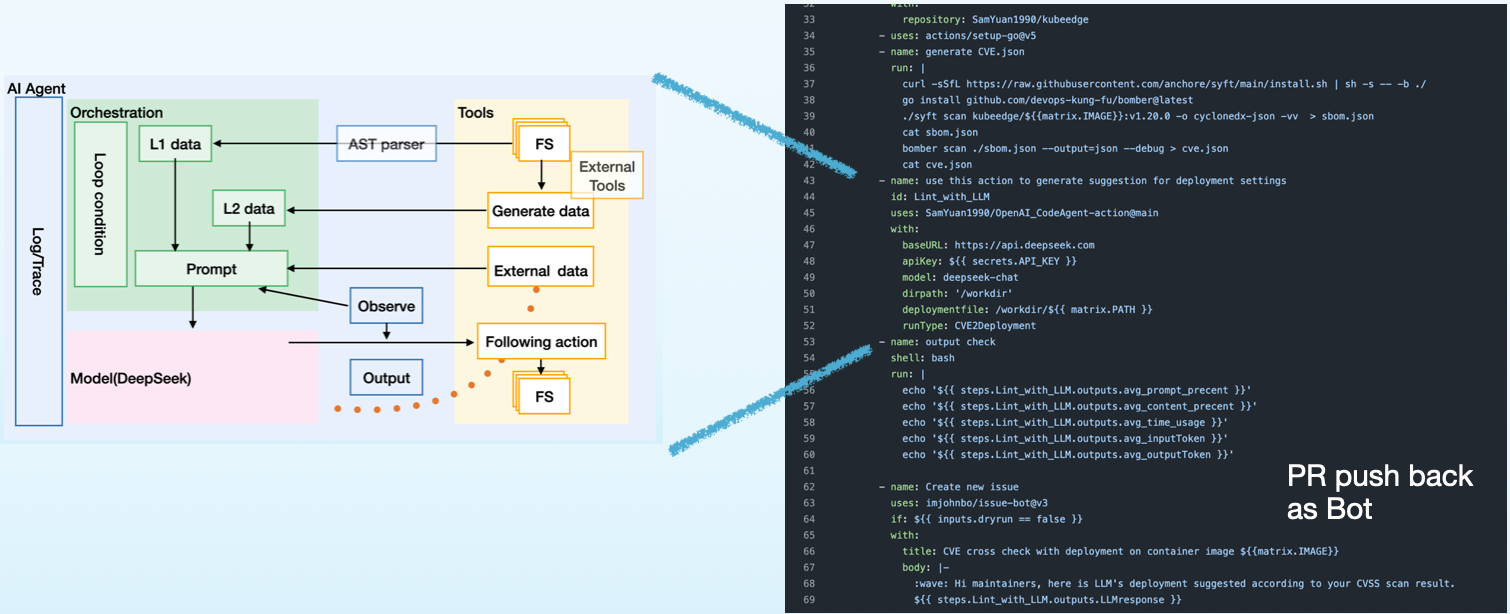

Yep, we package this AI agent as a GitHub Action, so it becomes part of your CI/CD pipeline code!
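How the action reads its configuration is not described here. As a minimal sketch, assuming a Docker- or JavaScript-style action where GitHub exposes each `with:` input as an `INPUT_<NAME>` environment variable, the entry point could look like this. The input names `prompt` and `path` are hypothetical.

```python
import os


def get_input(name: str, default: str = "") -> str:
    """Read an action input from its INPUT_<NAME> environment variable."""
    # GitHub upper-cases the input name and replaces spaces with underscores.
    key = "INPUT_" + name.replace(" ", "_").upper()
    return os.environ.get(key, default).strip()


def load_config() -> dict[str, str]:
    """Collect the (hypothetical) inputs this sketch assumes the action takes."""
    return {
        "prompt": get_input("prompt"),          # human intention
        "path": get_input("path", default="."),  # file or directory to read
    }


if __name__ == "__main__":
    config = load_config()
    print(f"Scanning {config['path']} with prompt: {config['prompt']}")
```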

🔧 Experiments

Because the agent runs as a GitHub Action, our experiments run online and are published for everyone here.

Thanks to the KubeEdge and brpc communities, we ran experiments against their repositories in our maintainers' own forks and collected results.

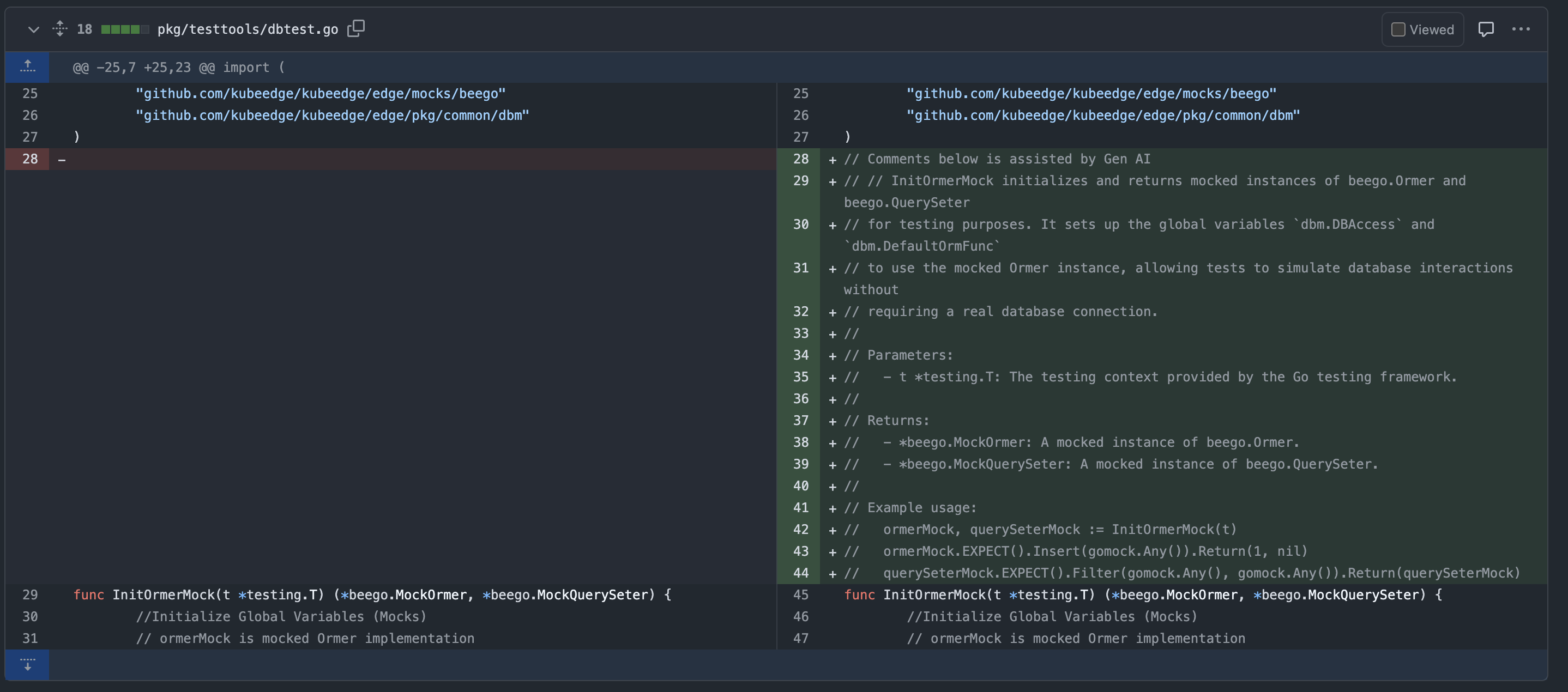

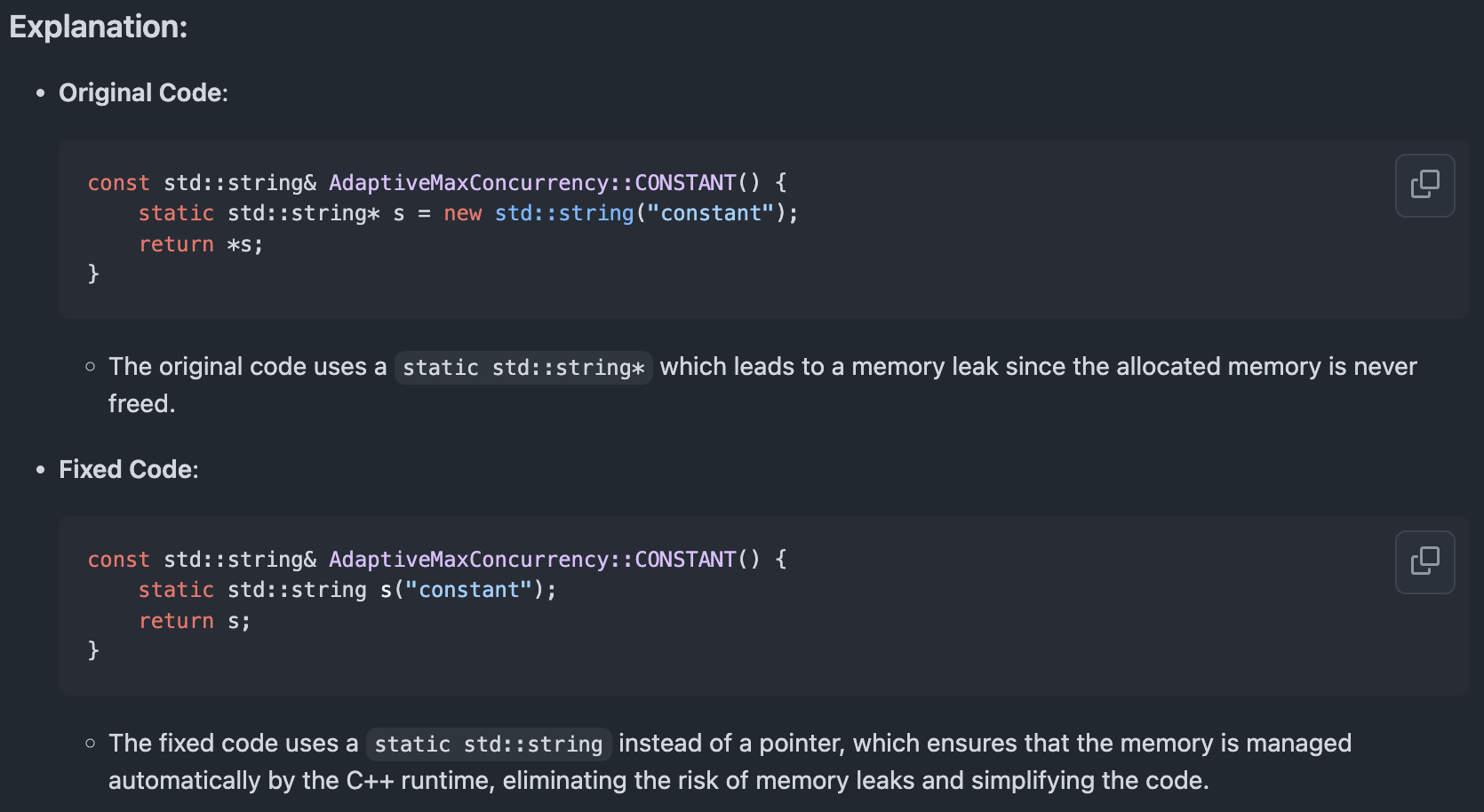

- ✅ We submitted a code enhancement to the brpc community and received "LGTM" feedback from a maintainer.

We invite volunteers to integrate our action into their CI/CD pipelines, give it a try, and share their feedback with us. Your insights will help us refine and improve the project!

📊 Results

Metrics

We define the following metrics to evaluate how effective our jobs are.

- Prompt percent: The share of the question sent to the LLM that is the prompt, i.e. the human intention.

- Content percent: The share of the question sent to the LLM that is content, i.e. a specific function or set of files.

- Output tokens: The number of tokens in the LLM's response.

- LLM response time: The time the LLM takes to respond.
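The metrics above could be computed along these lines. This is a sketch under stated assumptions: it measures the percentages by character count (the unit we used may differ), the function names are hypothetical, and the output token count is assumed to come from the LLM API's own usage data, so it is not computed here.

```python
import time
from typing import Callable


def question_metrics(prompt: str, content: str, question: str) -> dict[str, float]:
    """Prompt and content share of the full question, as percentages."""
    total = len(question)
    if total == 0:
        return {"prompt_percent": 0.0, "content_percent": 0.0}
    # Separators or labels in the question mean the two need not sum to 100.
    return {
        "prompt_percent": round(100 * len(prompt) / total, 1),
        "content_percent": round(100 * len(content) / total, 1),
    }


def timed_call(ask: Callable[[str], str], question: str) -> tuple[str, float]:
    """Call the LLM through `ask` and return (answer, seconds elapsed)."""
    start = time.perf_counter()
    answer = ask(question)
    return answer, time.perf_counter() - start
```

Here `ask` stands in for whatever function sends the question to the LLM and returns its text response.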

Summary table

| Metric\Task | Document generation | Deployment suggestion | Code enhancement |
|---|---|---|---|
| Prompt percent | 16% | 5.6% | 54.2% |
| Content percent | 83% | 93% | 45% |
| Output tokens | 430 | 1207 | 742 |
| LLM response time (seconds) | 26 | 61 | 43 |

PR or GitHub issue

🔒 Because our scan results relate to vulnerabilities, we have hidden all details in the results to prevent exploitation by attackers.

Document generation

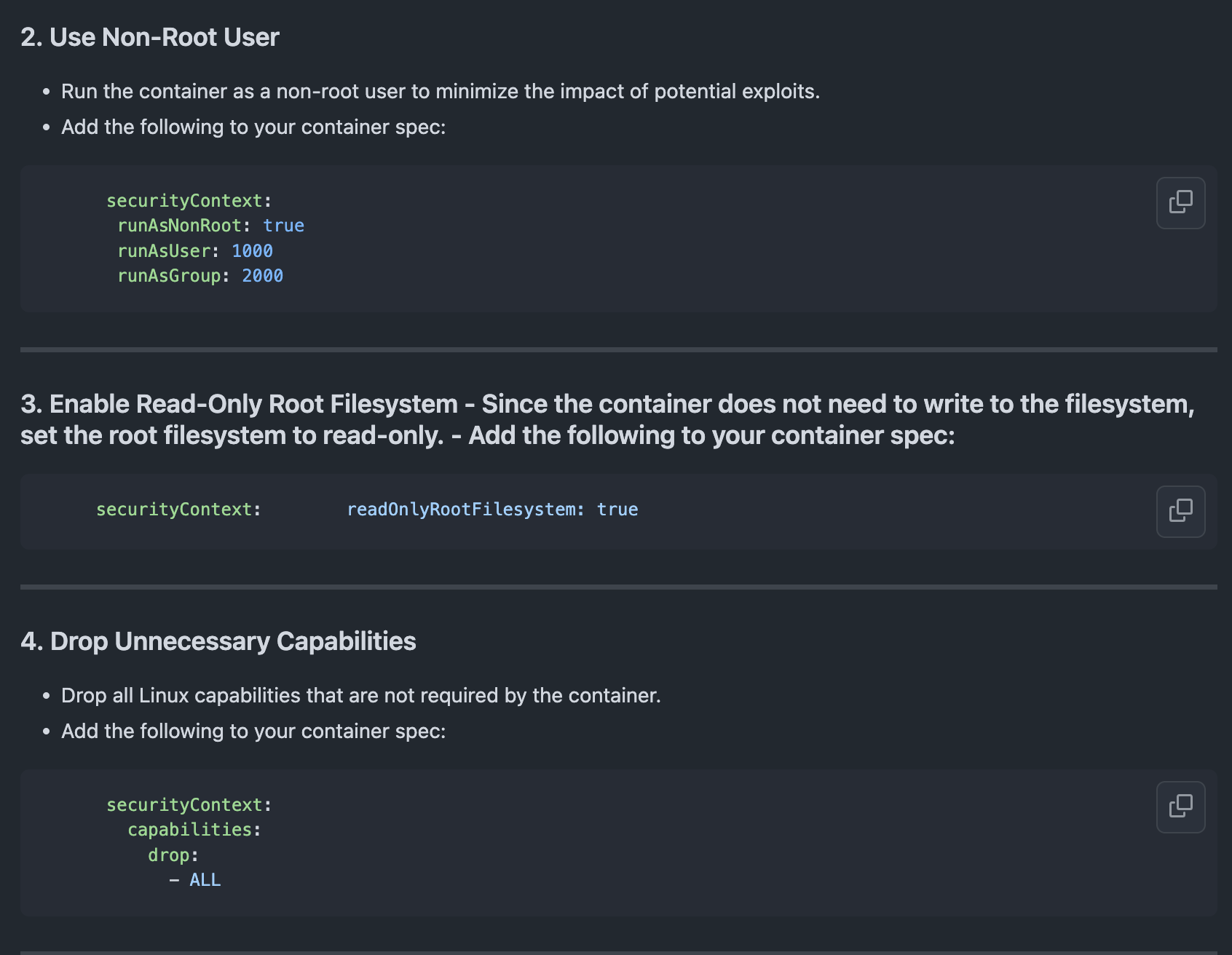

Deployment suggestion

In this part, we only scan "hello world" deployment YAML files, which are used either for integration tests or as quick starts for users. No production deployments are scanned.

Code enhancement

✨ Imagine this

🌴 You're on a sun-soaked beach, sipping a mojito, watching the waves roll in. Carefree. Relaxed. Your code? It's running itself in an intelligent pipeline: scanning CVEs, analyzing risks, and auto-adjusting deployments.

Now, contrast that with this:

💻 You're hunched over your desk, chugging coffee at 2 AM, manually digging through security reports, tweaking YAML files, and writing function docstrings one by one.

The choice is yours. Hack smarter, not harder. Let AI do the grunt work while you enjoy the sunset.