For implementation and demonstration, please refer to the following repository of the project:

https://github.com/shoaibmustafakhan/GeoSynthAI-Vistas

Urban planning is an inherently complex and resource-intensive process, characterized by lengthy timelines and extensive bureaucratic procedures. Typically, a team of urban planners must process vast datasets, engage in iterative planning cycles, and conduct rigorous assessments to develop actionable urban development strategies. This conventional approach is time-consuming and costly, and it frequently causes delays that hinder timely urbanization, particularly in rapidly developing regions. In response to these challenges, we propose a novel AI-driven pipeline designed to streamline the creation and evaluation of satellite imagery for urban planning applications. At its core, the pipeline integrates an inpainting diffusion model within a generative AI framework, enabling the automated generation of high-quality, contextually relevant satellite images and reducing the time required for urban planning from years to seconds. To ensure that the generated images are accurate and useful, they are evaluated adversarially using a GAN-inspired methodology, then upscaled and analyzed with object detection; together, these steps demonstrate the potential and feasibility of integrating our system into the practical urban development process.

The field of urban planning and architectural design is hindered by traditional manual design methods that result in significant inefficiencies. Architects and urban planners often face a series of challenges that slow down the planning process, increase costs, and heighten the likelihood of errors. These challenges not only delay urban development but also contribute to escalating expenses and resource wastage.

One of the primary issues is the high cost of manual design. Every hour that architects and planners spend developing and refining designs comes at a cost. As the scope of a project grows, delivery time and labour costs grow with it, inflating budgets in ways that burden both developers and local authorities.

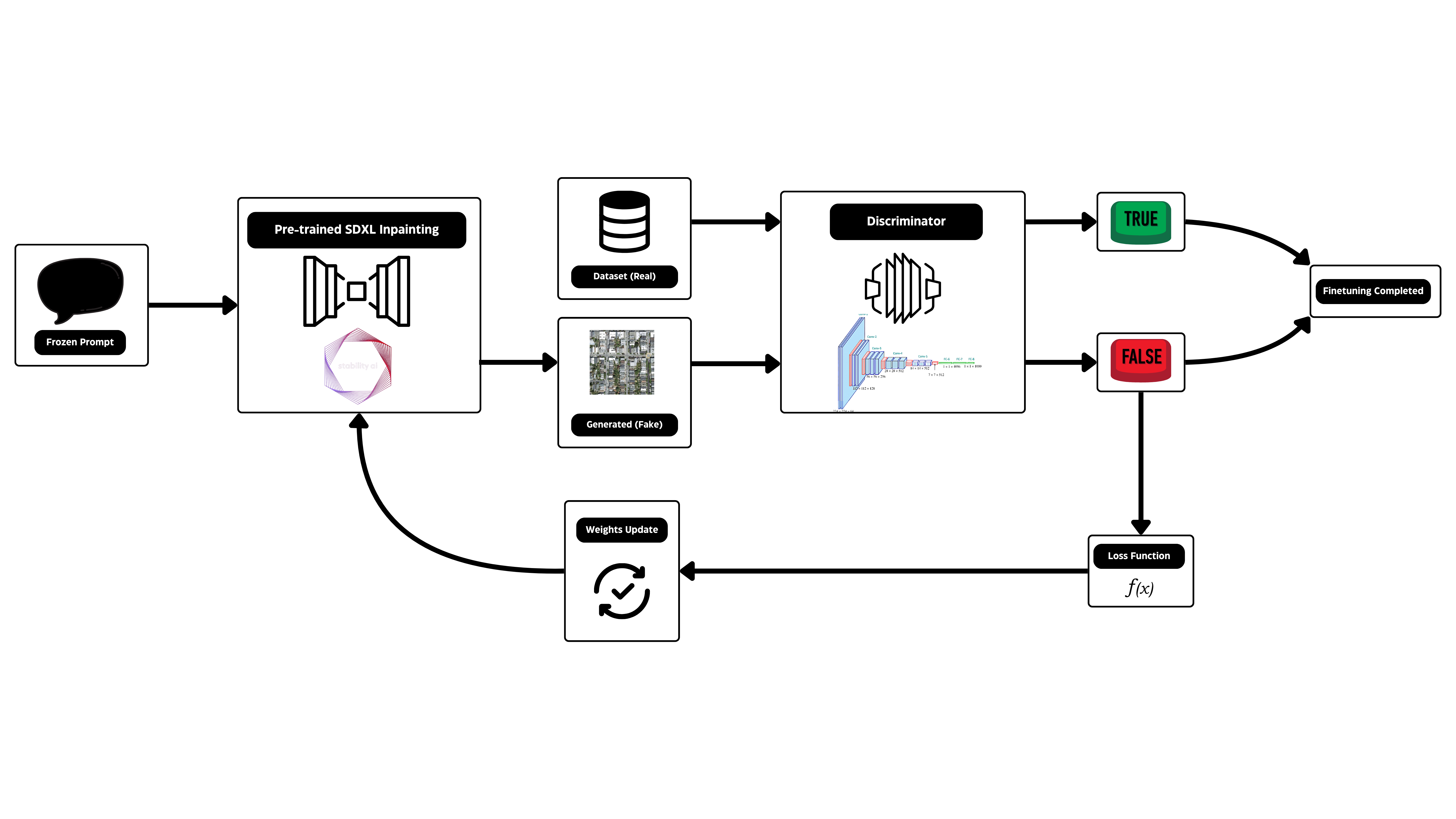

In this paper, we propose a solution to these pressing challenges that incorporates Stable Diffusion, generative adversarial networks (GANs), and deep learning detection to automate and optimize the design process. The approach uses an inpainting Stable Diffusion model to synthesize high-quality city plans in the form of high-resolution, contextually relevant satellite imagery, work that would take years to develop and perfect in the conventional industry. We employ a CNN within a GAN-inspired system as a discriminator to assess the quality and accuracy of the generated designs against defined datasets. Iteratively fine-tuning the model and retaining designs that satisfy the urban planning requirements is intended to substantially improve the physical plausibility of the generated layouts. This integration of deep learning and generative models addresses the drawbacks of traditional manual planning methods, lowering the cost and time needed to plan urban development while improving both precision and scalability.

In recent years, Artificial Intelligence (AI) has been integrated into urban development and smart city creation, providing innovative solutions to a range of urban planning, management, and sustainability issues. Urban design practices that were traditionally manual can now be automated by AI-driven systems, namely Generative Adversarial Networks (GANs) and deep learning models, whose advances enable them to generate synthetic data that simulates urban scenarios. Urban planners and architects can then use this synthesized data to evaluate different design alternatives and urban layouts without enduring the time- and cost-intensive traditional planning process.

By leveraging Convolutional Neural Networks (CNNs), AI models can significantly improve the accuracy of building segmentation, even in complex urban environments. For instance, recent research combining SegNet with a Bi-directional Convolutional LSTM (BConvLSTM) has demonstrated substantial improvements in segmentation accuracy, with an average F1-score exceeding 96%, thereby benefiting applications in urban planning, geospatial database updates, and disaster management.
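For readers less familiar with the metric, the F1-score used in such segmentation studies balances precision and recall over building pixels. The sketch below computes a pixel-wise F1 for a pair of binary masks in NumPy; it is illustrative only, and the cited study's exact averaging protocol (per image, per class, or over the whole dataset) is not specified here.

```python
import numpy as np


def f1_score_binary(pred_mask: np.ndarray, true_mask: np.ndarray) -> float:
    """Pixel-wise F1 score for binary building masks (1 = building, 0 = background)."""
    pred = pred_mask.astype(bool)
    true = true_mask.astype(bool)
    tp = np.logical_and(pred, true).sum()   # building pixels correctly predicted
    fp = np.logical_and(pred, ~true).sum()  # background predicted as building
    fn = np.logical_and(~pred, true).sum()  # building pixels that were missed
    denom = 2 * tp + fp + fn
    # F1 = 2PR / (P + R) = 2TP / (2TP + FP + FN); defined as 1.0 when both masks are empty
    return 1.0 if denom == 0 else float(2 * tp / denom)
```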

The rapid expansion of smart cities has resulted in the generation of vast amounts of data through various sensors and Internet of Things (IoT) devices. Despite the high volume of data generated, much of it remains underutilized due to challenges in data processing and integration. AI models are being leveraged to address these issues, particularly through semi-supervised learning and deep reinforcement learning techniques, which enable the extraction of valuable insights from both labeled and unlabeled data. A proposed three-level learning framework for smart cities integrates this data into a cohesive system, where real-time feedback from users helps AI models make adaptive decisions in dynamic environments. This approach facilitates improvements in key areas such as traffic management, energy systems, and waste management, while simultaneously enhancing the overall quality of life for city residents.

The methodology for this project involved leveraging advanced generative models and optimization techniques to produce and refine high-resolution satellite imagery for urban planning applications. It began with the preprocessing of a custom dataset to ensure uniformity and compatibility with the model's input dimensions. Images were resized to a consistent resolution of 256x256, enabling efficient processing and seamless alignment with the Stable Diffusion XL (SDXL) Inpainting model.
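The preprocessing step can be sketched as follows. This is a minimal example assuming Pillow and NumPy, RGB tiles, bicubic resampling, and scaling of pixel values to [-1, 1]; the resampling filter, the normalization range, and the helper name `preprocess_tile` are assumptions rather than details taken from the project repository.

```python
import numpy as np
from PIL import Image

TARGET_SIZE = (256, 256)  # resolution stated in the text

# Pillow >= 9.1 exposes resampling filters under Image.Resampling; older versions on Image itself
_RESAMPLING = getattr(Image, "Resampling", Image)


def preprocess_tile(img: Image.Image) -> np.ndarray:
    """Resize a satellite tile to 256x256 RGB and scale its pixels to [-1, 1]."""
    rgb = img.convert("RGB")  # drop alpha/palette modes so every tile has 3 channels
    resized = rgb.resize(TARGET_SIZE, _RESAMPLING.BICUBIC)
    arr = np.asarray(resized, dtype=np.float32) / 255.0  # H x W x C in [0, 1]
    # [-1, 1] is a range commonly fed to Stable Diffusion VAEs (assumption for this sketch)
    return arr * 2.0 - 1.0
```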

Fine-tuning the SDXL Inpainting model was a key part of the process. A batch size of 32 images was chosen to balance computational efficiency against the capacity of the NVIDIA A10G GPU used in the experimental setup. Training employed the Adam optimizer, which adapts the step size of each parameter using running estimates of the first and second moments of its gradient. A learning rate of 1e-4 was used, with the beta parameters set to (0.9, 0.999) to ensure stable convergence during training.
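To make the optimizer settings concrete, the following NumPy sketch implements the standard Adam update with the stated learning rate and beta values. The epsilon of 1e-8 is the common default and an assumption here; the class is illustrative and not the project's training code.

```python
import numpy as np


class Adam:
    """Minimal Adam optimizer using the hyperparameters given in the text."""

    def __init__(self, lr=1e-4, betas=(0.9, 0.999), eps=1e-8):
        self.lr, (self.b1, self.b2), self.eps = lr, betas, eps
        self.m = None  # first-moment (mean) estimate of the gradient
        self.v = None  # second-moment (uncentered variance) estimate
        self.t = 0     # step counter used for bias correction

    def step(self, params: np.ndarray, grads: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * grads
        self.v = self.b2 * self.v + (1 - self.b2) * grads**2
        m_hat = self.m / (1 - self.b1**self.t)  # correct the bias from zero initialization
        v_hat = self.v / (1 - self.b2**self.t)
        # per-parameter step: lr scaled by the ratio of the moment estimates
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)
```

In PyTorch, the equivalent configuration would be `torch.optim.Adam(unet.parameters(), lr=1e-4, betas=(0.9, 0.999))`, where `unet` is a placeholder for whichever module is being fine-tuned. On the first step each parameter moves by roughly the learning rate, opposite to its gradient, regardless of the gradient's magnitude; this is the per-parameter adaptivity described above.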

An innovative GAN-inspired framework was implemented to iteratively refine the SDXL Inpainting model for accurate and context-sensitive image generation. Unlike traditional GANs, the discriminator’s weights were kept static throughout the process, providing a fixed benchmark for evaluating the generator’s outputs. A CNN, extensively trained on the dataset, served as the discriminator, classifying generated images and acting as an evaluation metric for optimizing the SDXL Inpainting model’s weights. This approach allowed iterative improvement without the need for dynamic training of the discriminator, enhancing the stability and reliability of the system.
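The idea of a discriminator with frozen weights can be illustrated with a small NumPy sketch. Here a fixed logistic scorer over precomputed image features stands in for the trained CNN (a deliberate simplification), its weights are made read-only so that no update can alter them, and the generator is scored with the non-saturating GAN loss. The feature representation, the class and function names, and the choice of loss are assumptions; the text does not state which objective was used to update the SDXL Inpainting weights.

```python
import numpy as np


class FrozenDiscriminator:
    """Stand-in for the pretrained CNN: a fixed logistic scorer whose weights never change."""

    def __init__(self, weights, bias: float = 0.0):
        self.w = np.array(weights, dtype=np.float64)
        self.w.setflags(write=False)  # enforce static weights: in-place updates raise ValueError
        self.b = float(bias)

    def prob_valid(self, features: np.ndarray) -> np.ndarray:
        """Probability that each generated image (one feature row per image) is a valid layout."""
        logits = features @ self.w + self.b
        return 1.0 / (1.0 + np.exp(-logits))


def generator_loss(disc: FrozenDiscriminator, features: np.ndarray) -> float:
    """Non-saturating generator loss, -mean(log D(G(z))), computed against the fixed D."""
    p = np.clip(disc.prob_valid(features), 1e-7, 1.0)
    return float(-np.mean(np.log(p)))


def discriminator_accuracy(disc: FrozenDiscriminator, features: np.ndarray) -> float:
    """Fraction of generated images that the frozen classifier accepts (p >= 0.5)."""
    return float(np.mean(disc.prob_valid(features) >= 0.5))
```

Because the scorer never moves, a lower generator loss or a higher acceptance rate can be compared directly across fine-tuning iterations, which is the stability benefit the paragraph describes.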

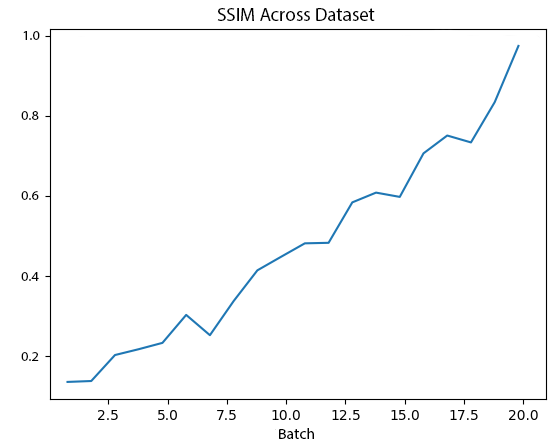

The fine-tuning process spanned five epochs, each representing one complete pass through the training data. At the end of each epoch, the outputs were evaluated using two key metrics: CNN classification accuracy and the Structural Similarity Index Measure (SSIM). SSIM was particularly important because it quantifies the structural integrity of the generated images relative to the input data; its threshold was set at 85%. This threshold preserved the spatial and structural characteristics essential for urban planning applications while guarding against overfitting.
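For reference, SSIM compares luminance, contrast, and structure between two images. The sketch below computes a simplified single-window SSIM over whole grayscale images using the usual constants K1 = 0.01 and K2 = 0.03; standard implementations instead average the statistic over local sliding windows, so values will differ from library output. The function name and the assumption of grayscale inputs in the [0, 1] range are illustrative choices.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """Single-window SSIM between two same-shaped grayscale images.

    Standard SSIM averages this statistic over local (often Gaussian) windows;
    one global window keeps the sketch short.
    """
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizing constants with K1 = 0.01, K2 = 0.03
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(num / den)
```

For the windowed variant, scikit-image provides `skimage.metrics.structural_similarity`.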

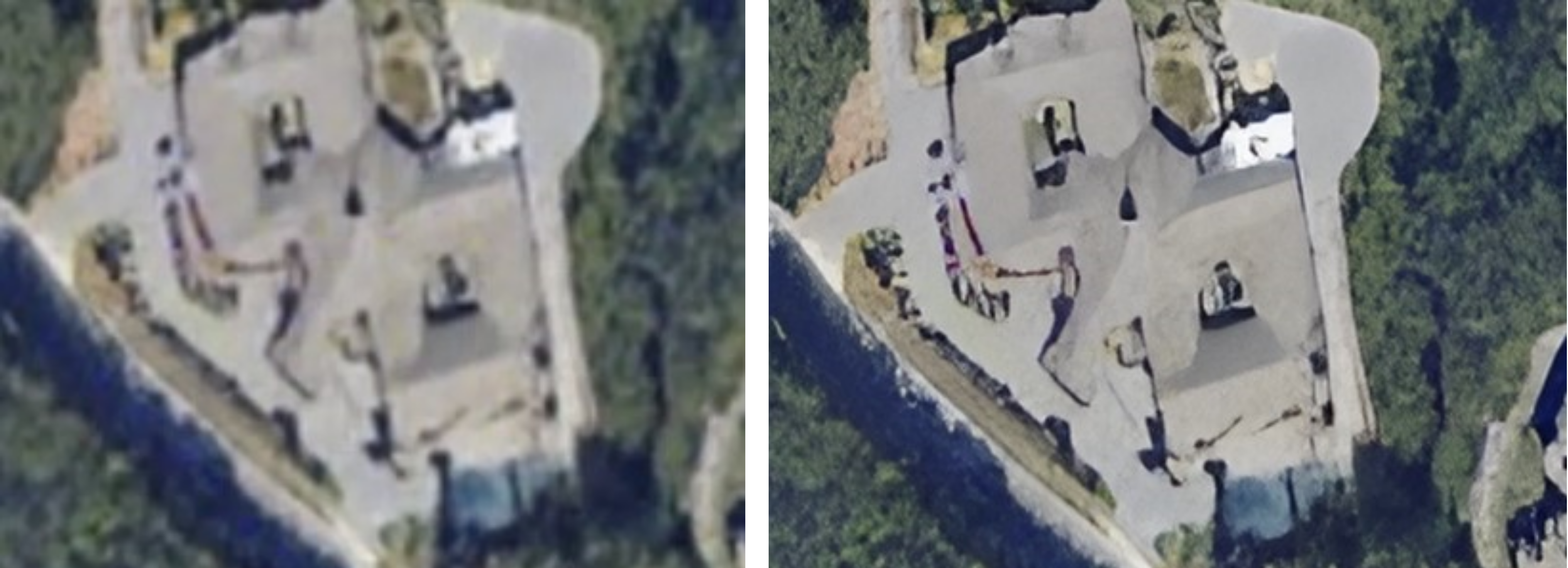

To further refine the outputs, image enhancement was integrated as a crucial post-processing step. The GFP-GAN model, originally designed for facial restoration, was repurposed to enhance the unique characteristics of satellite imagery. This phase focused on improving structural coherence and fine-grained details, such as road topology, building edges, and vegetation boundaries. The model extracted multi-resolution spatial features and selectively applied enhancements to areas requiring improvement. This adaptive process ensured critical regions were sharpened without overprocessing coherent areas, resulting in outputs suitable for urban planning analysis and decision-making.
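GFP-GAN's restoration network is not reproduced here. As a hypothetical, classical stand-in for the idea of enhancing only the regions that need it, the sketch below applies unsharp masking solely to pixels with a strong intensity gradient (for example, road and roof edges) and leaves smooth areas untouched. The 3x3 box blur, the quantile-based edge mask, the function names, and the parameter defaults are all assumptions chosen for illustration.

```python
import numpy as np


def box_blur3(img: np.ndarray) -> np.ndarray:
    """3x3 mean filter with edge padding for a 2-D float image."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def selective_sharpen(img: np.ndarray, amount: float = 1.0, edge_quantile: float = 0.8) -> np.ndarray:
    """Unsharp-mask only high-gradient pixels; img is grayscale with values in [0, 1]."""
    detail = img - box_blur3(img)  # high-frequency component
    gy, gx = np.gradient(img)
    magnitude = np.hypot(gx, gy)
    if magnitude.max() == 0:  # perfectly flat tile: nothing to sharpen
        return img.copy()
    mask = magnitude >= np.quantile(magnitude, edge_quantile)  # strongest edges only
    out = img + amount * detail * mask  # pixels outside the mask are left unchanged
    return np.clip(out, 0.0, 1.0)
```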


Overfitting was a critical concern during the fine-tuning process. Although training loss was available as a metric, it was insufficient for assessing the model's performance because the loss values changed only minimally. This was hypothesized to result from the SDXL Inpainting model's extensive pretraining.

Therefore, to keep the model weights from overfitting to the dataset and producing illogical outputs, which is what training over a large number of epochs would cause, a further novelty was introduced: the use of the structural similarity index measure (SSIM) as an overfitting check.

Treating the provided datasets as the ideal standard of development, SSIM was measured between the model's outputs and the corresponding dataset images. The SSIM threshold was set to 80%, and values above 80% were taken as a sign that the model had overfit. Similarity to the dataset was therefore judged by an external measure of visual similarity rather than by the model's own training signal. This method also made generation more robust, allowing the model not only to produce infrastructure consistent with the dataset but also to retain meaningful variation across it. Weights from epochs with SSIM above 80% were discarded to prevent the model from overfitting.
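The checkpoint rule described above can be written as a small selection function. This is a sketch under stated assumptions: per-epoch metrics are assumed to be already computed, the SSIM ceiling is passed in as a parameter, and choosing the highest CNN accuracy among the surviving epochs is an assumed tie-in to the discriminator metric rather than a documented step. The names `EpochResult` and `select_checkpoint` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EpochResult:
    epoch: int
    cnn_accuracy: float  # fraction of outputs accepted by the frozen CNN
    ssim: float          # mean SSIM between outputs and reference dataset images


def select_checkpoint(results: List[EpochResult], ssim_ceiling: float) -> Optional[EpochResult]:
    """Discard epochs whose SSIM exceeds the ceiling (treated as overfitting),
    then keep the remaining epoch with the highest CNN accuracy."""
    kept = [r for r in results if r.ssim <= ssim_ceiling]
    if not kept:
        return None  # every epoch looked overfit: keep no fine-tuned weights
    return max(kept, key=lambda r: r.cnn_accuracy)
```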

Beyond image generation and enhancement, the pipeline incorporated a practical analysis step to detect rooftops in the enhanced satellite images. This functionality was achieved through the integration of a deep learning model trained with a curated dataset of labeled rooftops. Roboflow’s platform was used for data preprocessing, augmentation, and labeling. The model achieved 90% accuracy, enabling precise detection of rooftops across diverse urban configurations. It also provided configurable confidence thresholds, allowing urban planners to adjust detection sensitivity to suit specific scenarios.
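Adjusting detection sensitivity amounts to filtering predictions by confidence before counting them. The sketch below assumes each prediction is a dictionary holding a box centre (`x`, `y`), `width`, `height`, and `confidence`, similar to Roboflow's JSON output; the exact field names and the helper `filter_rooftops` are assumptions. The returned corner boxes can be drawn to highlight rooftops, and their number gives the rooftop count.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def filter_rooftops(predictions: List[Dict], min_confidence: float) -> List[Box]:
    """Keep detections whose confidence meets the threshold and convert them
    from centre/size format to corner boxes for drawing highlights."""
    boxes: List[Box] = []
    for p in predictions:
        if p["confidence"] < min_confidence:
            continue  # below the user-chosen sensitivity: not counted
        half_w, half_h = p["width"] / 2, p["height"] / 2
        boxes.append((p["x"] - half_w, p["y"] - half_h, p["x"] + half_w, p["y"] + half_h))
    return boxes
```

Raising `min_confidence` trades recall for precision: fewer false rooftops are counted, at the cost of missing some faint or occluded ones.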

The final output of the pipeline included enhanced satellite imagery with highlighted rooftops and an estimated count of rooftops within the region. This data provided actionable insights for population estimation, infrastructure planning, and zoning analysis, directly addressing the needs of urban developers. By combining image generation, enhancement, and analysis, the pipeline delivered outputs that were not only visually accurate but also functionally relevant for urban planning professionals. This structured and iterative methodology ensured robustness, adaptability, and precision in addressing the complex requirements of urban development scenarios.

Despite the promising results achieved by the proposed pipeline, a few limitations remain due to resource and dataset constraints. First, only the U-Net component of the SDXL Inpainting model was fine-tuned during the experiments. This was primarily due to computational resource constraints, as full-model fine-tuning requires significantly more resources.

Consequently, the outputs are unstable: some inputs yield viable, high-quality outputs, while others fail to produce meaningful results, occasionally resulting in blank or incoherent images.

Another limitation lies in the accuracy of the rooftop detection model. Due to constraints in dataset annotation and the availability of labeled data, the rooftop detection model could only achieve a maximum accuracy of 90%. This restricts the system's ability to provide perfectly reliable detection results for all scenarios, particularly in areas with complex building structures or insufficiently annotated datasets.

Future work will address these limitations and further enhance the system's capabilities. A key direction is the incorporation of multiple text encodings during fine-tuning, allowing the model to generate a broader range of outputs rather than being limited to the category "satellite imagery residential houses." This will extend the model's ability to generate outputs for wider and more diverse inference data and user inputs.

Further fine-tuning and the integration of larger, more diverse datasets will be explored to ensure quality output over a wider range of image inputs. This should also improve the stability and consistency of the generated results and make the model less prone to generating blank or incoherent outputs.

The rooftop detection module will also be transitioned from Roboflow's service to a custom implementation, allowing the model to use better annotations and a broader range of rooftop types, with the aim of achieving higher detection accuracy. A bespoke detection model will offer greater flexibility and precision, increasing the system's robustness and reliability for real-world urban planning applications.

The proposed pipeline consists of GFP-GAN for image enhancement, a U-Net-based SDXL latent diffusion model for core image generation, a rooftop detection module, and a sharpening module, presenting a significant solution for generating high-quality, semantically consistent satellite imagery for urban planning applications. The system achieves fidelity and functionality in its outputs by leveraging latent diffusion with CLIP-based text conditioning, and it incorporates GFP-GAN for post-processing. The rooftop detection module further extends the system's practical utility, reducing work that previously took years to seconds and substantially lowering the cost of generation and design.

This research showcases several strengths of the proposed methodology, including operating in latent space to keep computational cost low and incorporating GAN-inspired fine-tuning mechanisms to improve stability and quality. It also demonstrates the pipeline's modularity, adaptability, and scalability, making it suitable for applications that require high-resolution, context-aware image generation. Quantitative evaluations indicate that, under certain constraints, the system can produce reliable results for a variety of urban development scenarios.

The proposed methodology offers a major improvement in the use of generative models for practical applications. By combining modularity, computational efficiency, and real-world relevance, the pipeline forms an excellent platform for further research and development in text-to-image generation, urban planning, and beyond.

1. Xu, H., Omitaomu, F., Sabri, S., Li, X., Song, Y.: Leveraging Generative AI for Smart City Digital Twins: A Survey on the Autonomous Generation of Data, Scenarios, 3D City Models, and Urban Designs. arXiv

We would like to express our gratitude to Nikola Gligorovski for his work on the foundational SDXL inpainting fine-tuning methodology, which formed the basis of this system's early development \cite{b14}. We also thank Dr. Akhtar Jamil for his valuable mentorship and guidance throughout the project. Finally, we acknowledge the contributions and collaboration of our team members, whose efforts were integral to the success of this work.