Text-to-SQL Query Generation

This project fine-tunes the LLaMA-3.2-3B model to generate SQL queries from natural language input. Fine-tuning uses QLoRA (Quantized Low-Rank Adaptation), which trains small low-rank adapter matrices on top of a quantized base model, so only a small fraction of the parameters is updated and memory use stays low. The resulting model lets users query databases without SQL expertise.

📌 Repository Links

- GitHub: Text-to-SQL_Query-LLM

- Hugging Face Models:

  - QLoRA Model: sai-santhosh/text-2-sql-Llama-3.2-3B

  - GGUF Model: sai-santhosh/text-2-sql-gguf

📝 Overview

This project fine-tunes LLaMA-3.2-3B to convert natural language questions into SQL queries, helping users query complex databases with ease. The model receives the relevant CREATE TABLE schemas as context, so it can refer to the actual table and column names when generating a query.

🔹 Model Input Format

The model requires two inputs:

- Question: The natural language query, e.g., "List all customers with orders over $500."

- Context: Table schema(s), provided as CREATE TABLE statements, to help the model understand the database structure.
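To make the two inputs concrete, here is a minimal sketch of how a question and one or more schemas could be combined into a single prompt string. The `build_prompt` helper and the `### Context / ### Question / ### SQL` template are assumptions for illustration only; the template actually used by this project lives in its own pipeline code and may differ.

```python
def build_prompt(question: str, schemas: list[str]) -> str:
    """Combine table schemas and a question into one prompt string.

    The section markers below are a hypothetical template, not the
    project's real one.
    """
    # One CREATE TABLE statement per line keeps multi-table contexts readable.
    context = "\n".join(schema.strip() for schema in schemas)
    return (
        f"### Context:\n{context}\n\n"
        f"### Question:\n{question.strip()}\n\n"
        "### SQL:\n"
    )


prompt = build_prompt(
    "List all customers with orders over $500.",
    [
        "CREATE TABLE customers (customer_id INT, name TEXT);",
        "CREATE TABLE orders (order_id INT, customer_id INT, amount INT);",
    ],
)
print(prompt)
```

The table and column names in the example call are also made up; any schema expressed as CREATE TABLE statements would be passed the same way.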

🔹 Use Cases

- Conversational AI: Enables chatbots to answer database-related queries.

- Educational Tools: Helps users learn and practice SQL with real-world examples.

- Business Intelligence: Simplifies querying large databases for insights.

⚙️ Model Details

- Base Model: LLaMA-3.2-3B

- Fine-tuning Method: QLoRA (Quantized Low-Rank Adaptation)

- Task: Text-to-SQL query generation

- Framework: Hugging Face Transformers

- Inference Support: LM Studio, Ollama, and other GGUF-compatible tools (using the GGUF model).
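To give a feel for why low-rank adaptation is cheap, the sketch below counts trainable parameters for a single square weight matrix. LoRA replaces the update to a `d_out x d_in` matrix with two factors of shapes `d_out x r` and `r x d_in`, so it trains `r * (d_out + d_in)` values instead of `d_out * d_in`. The hidden size of 3072 and the rank of 16 are assumptions chosen for illustration; the rank and target modules actually used in this project are not stated here.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two LoRA factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


d = 3072   # assumed hidden size, for illustration only
rank = 16  # hypothetical LoRA rank

full = d * d                              # parameters in the full weight matrix
lora = lora_trainable_params(d, d, rank)  # parameters actually trained

print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 9,437,184  lora: 98,304  ratio: 1.04%
```

QLoRA additionally keeps the frozen base weights in 4-bit precision, which reduces the memory needed to hold the model while the adapters are trained.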

📦 Installation & Setup

To install the necessary dependencies:

pip install -q -U transformers bitsandbytes accelerate
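After installing, a quick check like the following can confirm that the packages are importable before a multi-gigabyte model download starts. This is an optional sketch; the `missing_packages` helper is a name introduced here and is not part of the repository.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the subset of top-level package names that cannot be imported."""
    return [name for name in names if find_spec(name) is None]


required = ["transformers", "bitsandbytes", "accelerate"]
missing = missing_packages(required)

if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All dependencies found.")
```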

🚀 Usage

1️⃣ Python API Usage

⚠️ Ensure GPU availability for optimal performance

Clone the repository:

git clone https://github.com/SaiSanthosh1508/Text-to-SQL_Query-LLM
cd Text-to-SQL_Query-LLM

Load the model and generate SQL queries:

from transformers import AutoModelForCausalLM, AutoTokenizer
from text_sql_pipeline import get_sql_query

# Load Model
model = AutoModelForCausalLM.from_pretrained("sai-santhosh/text-2-sql-Llama-3.2-3B", load_in_4bit=True)
tokenizer = AutoTokenizer.from_pretrained("sai-santhosh/text-2-sql-Llama-3.2-3B")

# Example Query
question = "List all employees in the 'Sales' department hired after 2020."
context = "CREATE TABLE employees (id INT, name TEXT, department TEXT, hire_date DATE);"

get_sql_query(model, tokenizer, question, context)
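Because the context is itself valid DDL, a generated query can be sanity-checked without a real database: create the tables in an in-memory SQLite database and ask SQLite to compile the query. The sketch below uses only the standard library. The `is_valid_sql` helper is a name introduced here, and the candidate queries are hand-written for illustration, not actual model output. SQLite's dialect may also differ from the target database, so a pass here is a hint rather than a guarantee.

```python
import sqlite3


def is_valid_sql(sql: str, context: str) -> bool:
    """Return True if `sql` compiles against the tables defined in `context`."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(context)     # create the (empty) tables from the schema
        conn.execute(f"EXPLAIN {sql}")  # compile only; fails on unknown tables or columns
        return True
    except sqlite3.Error:
        return False
    finally:
        conn.close()


context = "CREATE TABLE employees (id INT, name TEXT, department TEXT, hire_date DATE);"

# Hand-written candidate queries, standing in for model output.
good = "SELECT name FROM employees WHERE department = 'Sales' AND hire_date > '2020-12-31'"
bad = "SELECT salary FROM employees"  # `salary` is not in the schema

print(is_valid_sql(good, context))
print(is_valid_sql(bad, context))
```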

For multiple tables:

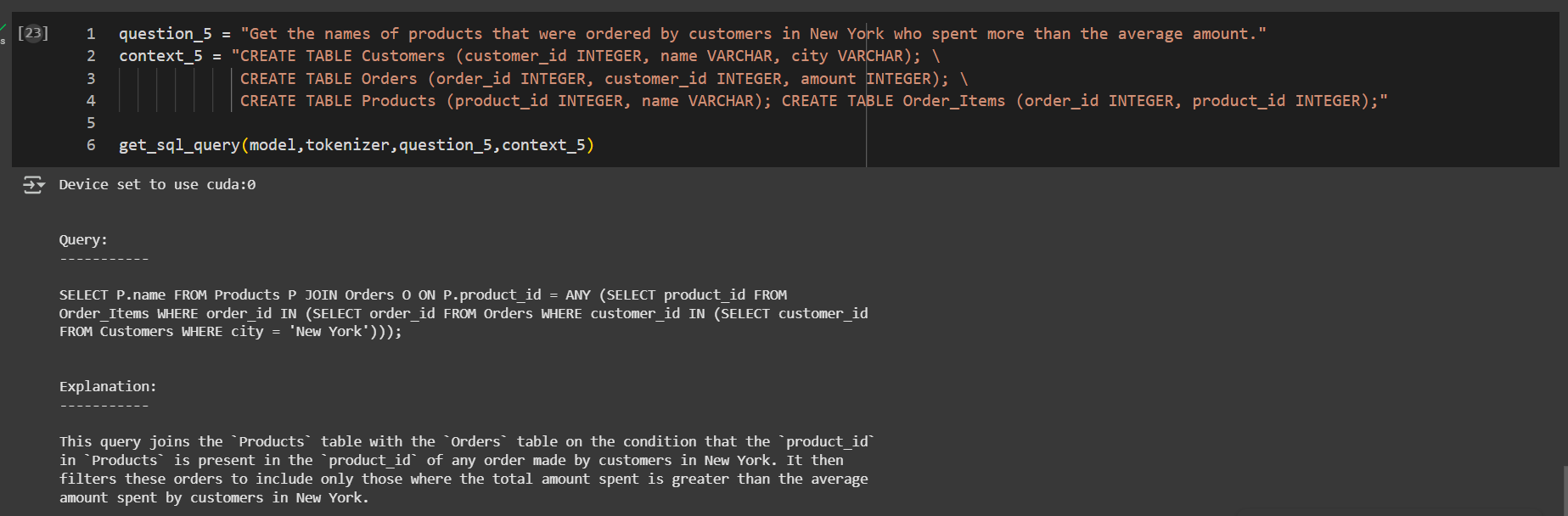

question_5 = "Get the names of products that were ordered by customers in New York who spent more than the average amount."
context_5 = (
    "CREATE TABLE Customers (customer_id INTEGER, name VARCHAR, city VARCHAR); "
    "CREATE TABLE Orders (order_id INTEGER, customer_id INTEGER, amount INTEGER); "
    "CREATE TABLE Products (product_id INTEGER, name VARCHAR); "
    "CREATE TABLE Order_Items (order_id INTEGER, product_id INTEGER);"
)

get_sql_query(model, tokenizer, question_5, context_5)

Output

2️⃣ Command-Line Interface (CLI) Usage

Run the model using the command line:

python generate.py -q "Find the zip code where the mean visibility is lower than 10." \
  -c "CREATE TABLE weather (zip_code VARCHAR, mean_visibility_miles INTEGER);"

python generate.py -q "Find all cities with temperatures above 90°F." \
  -c "CREATE TABLE weather (zip_code VARCHAR, city VARCHAR, temperature INTEGER);" \
  -c "CREATE TABLE population (city VARCHAR, population INTEGER);"
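The second command passes `-c` twice, once per table. As a rough illustration of how a repeatable flag like this can be handled, the sketch below uses `argparse` with `action="append"`. It is not the repository's `generate.py`; the long option names `--question` and `--context` and the way the schemas are joined are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one question and one or more schema arguments."""
    parser = argparse.ArgumentParser(
        description="Generate SQL from a question and table schemas."
    )
    parser.add_argument("-q", "--question", required=True,
                        help="natural language question")
    # action="append" collects every -c occurrence into a list.
    parser.add_argument("-c", "--context", action="append", required=True,
                        help="CREATE TABLE statement; repeat for multiple tables")
    return parser


# Parse an explicit argument list so the example runs without a shell.
args = build_parser().parse_args([
    "-q", "Find all cities with temperatures above 90°F.",
    "-c", "CREATE TABLE weather (zip_code VARCHAR, city VARCHAR, temperature INTEGER);",
    "-c", "CREATE TABLE population (city VARCHAR, population INTEGER);",
])

context = " ".join(args.context)  # single context string covering both tables
print(args.question)
print(context)
```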

🤗 HuggingFace Spaces Model Inference