1. Abstract

The idea of a robot that autonomously follows its user has been explored extensively by researchers and industry. The objective of our capstone project is to replicate this behavior using the research and resources provided by Bellevue College. We intend to build a robot that can carry up to 50 lb to assist people with disabilities. This report introduces the core learning model that enables our robot to recognize and identify the user it is meant to follow. Using the YOLO (You Only Look Once) model, our system will detect objects in its environment and single out the target user among them. Because YOLO is designed for real-time object detection, it is well suited to this application.

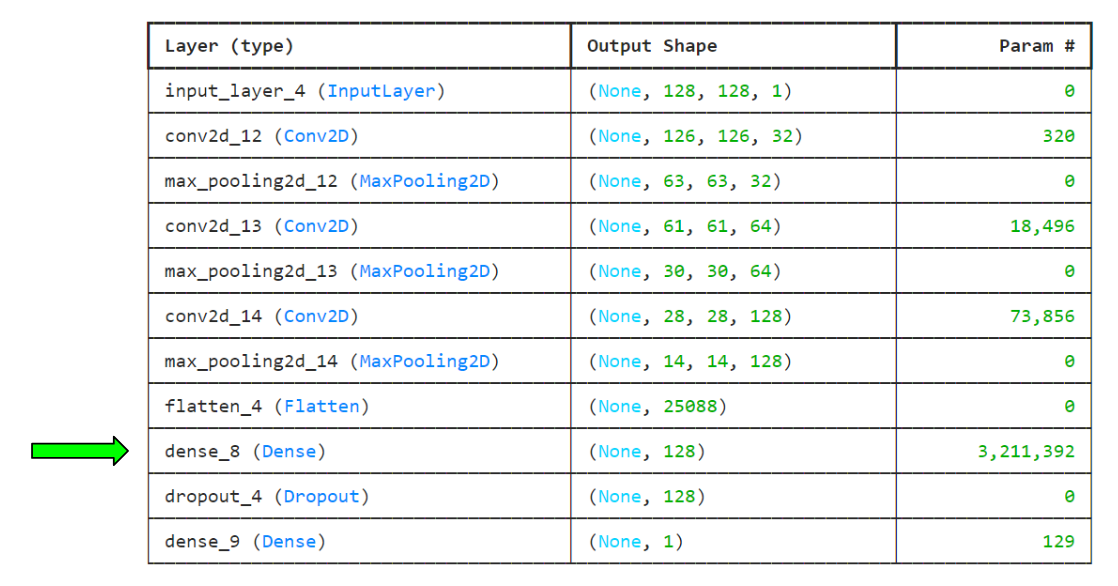

Part of our research involves evaluating simple models alongside basic convolutional neural networks (CNNs), the architecture that YOLO builds on, for the task of identifying a specific individual.

In our capstone project, we will use a Raspberry Pi to run this computationally intensive model, paired with a camera that provides computer vision input. By integrating extensive image processing into the model, we aim to develop a robust system that can consistently identify and follow a designated user. Our first goal is a functional, effective model that allows the robot to track a user autonomously and precisely. Our second goal is to allow the robot to move autonomously from one location to another without human control.

2. Introduction

Building on existing work in person-following robots, our capstone project aims to replicate these capabilities in an autonomous following robot with substantial practical potential. Significant challenges remain, however, such as refining the following mechanics so the robot accurately distinguishes between individuals, and improving its ability to identify and react to continuously moving targets while avoiding obstacles.

These challenges drive our investigation of, and experimentation with, the YOLO (You Only Look Once) model. We are currently in the preliminary stages of integrating computer vision into the project. In the coming weeks, we aim to deploy a functional model on the Raspberry Pi so that we can test and refine the robot's following capabilities.

Through this project, we aspire to address these challenges and contribute to the field of autonomous robotics, ultimately achieving a reliable and effective system that can consistently follow a user.

3. Methodology

Our approach to developing an autonomous following robot involves a step-by-step increase in functionality:

3.1. Establish Communication

To receive live updates from our robotic device, FollowBot, we will build a web application and a mobile app that keep track of all the information the device gathers, which we hope to use in our ML models. For fast communication between users and the robot, we use server-to-client communication: a central server sits between the user applications and FollowBot and passes data between them.
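A minimal sketch of what a message format for this server-mediated setup could look like, assuming JSON messages. The `Message` fields, the `telemetry`/`command` kinds, and the `route` helper are hypothetical illustrations, not the protocol FollowBot actually uses.

```python
import json
import time
from dataclasses import asdict, dataclass, field

# Hypothetical message kinds: robot -> users ("telemetry") and users -> robot ("command").
VALID_KINDS = {"telemetry", "command"}


@dataclass
class Message:
    """Hypothetical envelope the central server relays between FollowBot and user apps."""
    kind: str       # one of VALID_KINDS
    sender: str     # e.g. "followbot-1" or "web-app"
    payload: dict   # kind-specific data, e.g. {"battery_v": 11.8} or {"action": "stop"}
    timestamp: float = field(default_factory=time.time)


def encode(msg: Message) -> str:
    """Serialize a message to a JSON string for transmission."""
    if msg.kind not in VALID_KINDS:
        raise ValueError(f"unknown message kind: {msg.kind!r}")
    return json.dumps(asdict(msg))


def decode(raw: str) -> Message:
    """Parse a JSON string back into a Message, rejecting unknown kinds."""
    msg = Message(**json.loads(raw))
    if msg.kind not in VALID_KINDS:
        raise ValueError(f"unknown message kind: {msg.kind!r}")
    return msg


def route(msg: Message, robot_id: str, user_ids: list) -> list:
    """Decide which connected clients the server forwards a message to."""
    if msg.kind == "telemetry":
        return list(user_ids)   # robot data fans out to every user client
    return [robot_id]           # user commands go only to the robot
```

Keeping the routing decision on the server means FollowBot only needs a single connection, regardless of how many user apps are watching it.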

3.2. User Proximity

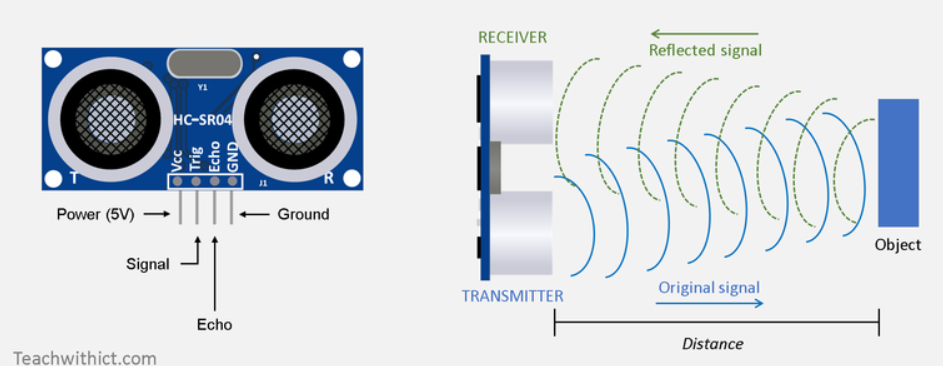

To keep the device close to the user, it must measure distance accurately. We initially experimented with ultrasonic sensors to measure the distance to objects in the surrounding environment. We also used the received signal strength indicator (RSSI) reported by a Wi-Fi module to try to estimate the distance between the user and the robot.
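The arithmetic behind an ultrasonic range reading is a time-of-flight calculation: the echo pulse covers the round trip, so the one-way distance is half the pulse length times the speed of sound. The sketch below assumes an HC-SR04-style sensor whose echo width is read in microseconds (as Arduino's `pulseIn()` returns it) and a usable range of roughly 2 cm to 4 m; both are assumptions about FollowBot's hardware.

```python
from typing import Optional

MIN_RANGE_M = 0.02  # assumed sensor limits (typical of HC-SR04-class modules)
MAX_RANGE_M = 4.0


def speed_of_sound_m_s(temp_c: float) -> float:
    """Linear approximation of the speed of sound in dry air at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance_m(pulse_us: float, temp_c: float = 20.0) -> Optional[float]:
    """Convert an echo pulse width (microseconds) to a one-way distance in metres.

    Returns None when the result falls outside the sensor's assumed range.
    """
    round_trip_m = (pulse_us / 1_000_000) * speed_of_sound_m_s(temp_c)
    distance = round_trip_m / 2  # the pulse travels to the object and back
    if not MIN_RANGE_M <= distance <= MAX_RANGE_M:
        return None
    return distance
```

For example, a 10,000 µs echo at 20 °C corresponds to about 1.72 m.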

However, RSSI is an unreliable measure of distance because signal strength fluctuates with multipath reflections, obstructions, and antenna orientation, so we plan to stop relying solely on Wi-Fi. Running a machine learning model on a Raspberry Pi 4 with ROS2, alongside the existing architecture on our Arduino board, should improve object detection and compensate for these inaccuracies.
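The limitation is easy to see with the log-distance path-loss model commonly used to turn RSSI into distance, d = 10^((P_1m - RSSI) / (10 n)). The reference power at 1 m (-40 dBm) and path-loss exponent (n = 2.5) below are hypothetical values that would need calibration; the point is how strongly a few dB of fluctuation moves the estimate.

```python
def rssi_to_distance_m(rssi_dbm: float,
                       rssi_at_1m_dbm: float = -40.0,
                       path_loss_exponent: float = 2.5) -> float:
    """Log-distance path-loss model: estimated distance in metres from an RSSI reading.

    rssi_at_1m_dbm and path_loss_exponent are hypothetical calibration values.
    """
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))


if __name__ == "__main__":
    # A +/-4 dB swing around -60 dBm, with the user standing still.
    for rssi in (-56, -60, -64):
        print(f"{rssi} dBm -> {rssi_to_distance_m(rssi):.2f} m")
```

With these assumed parameters, readings of -56, -60, and -64 dBm map to roughly 4.4 m, 6.3 m, and 9.1 m, so an 8 dB spread more than doubles the distance estimate.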

3.3. Machine Learning Implementation

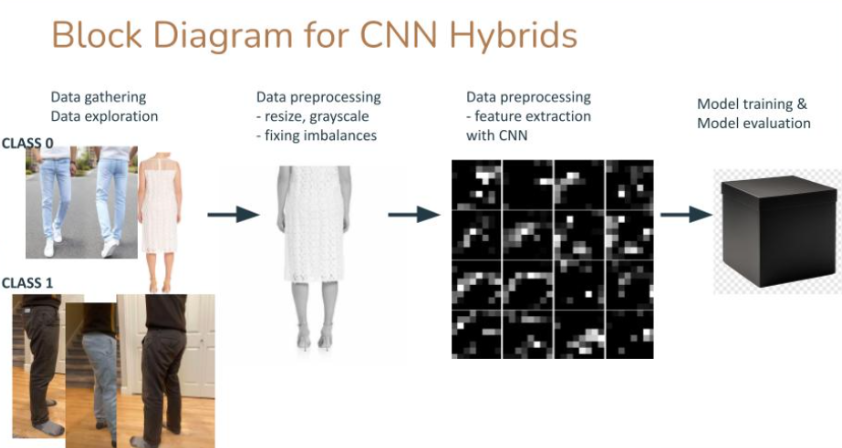

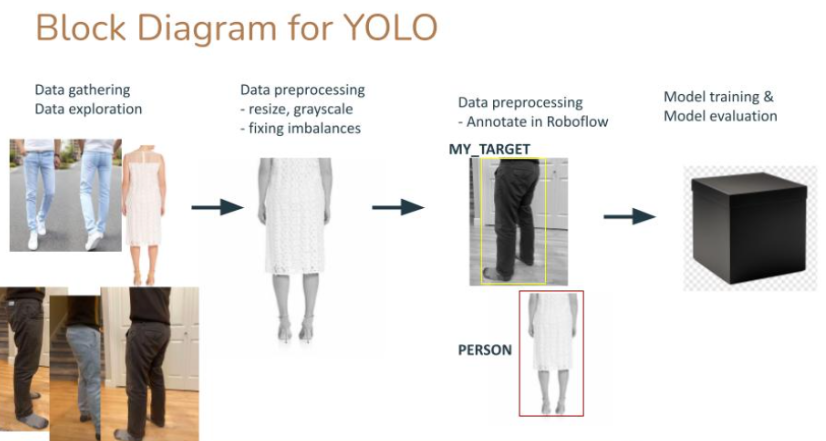

Preprocessing

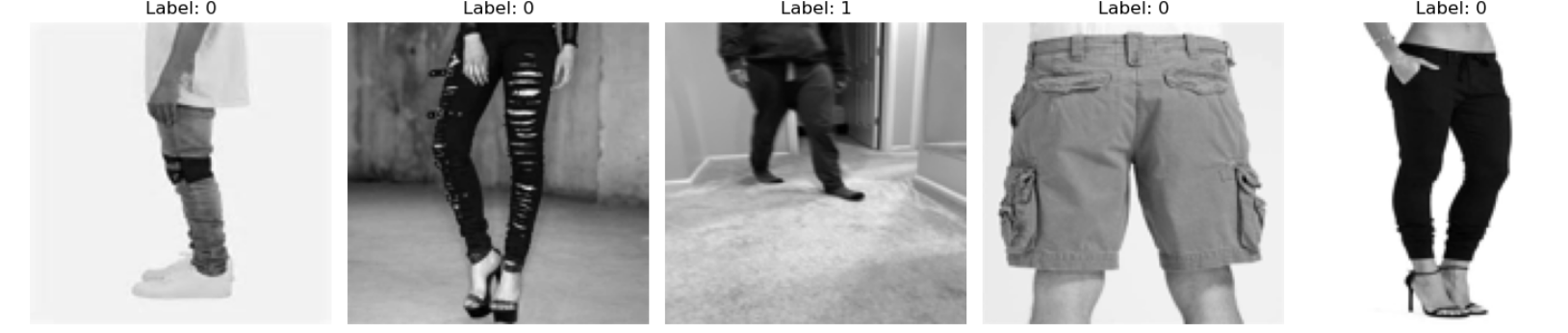

Our initial dataset was obtained from Kaggle. We added photos of one of our team members to serve as the target user.

We begin by preprocessing the dataset. Because we plan to deploy on a Raspberry Pi, images are scaled down to 128x128 and converted to grayscale to reduce computational cost. The dataset is then augmented to mitigate class imbalance.
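The preprocessing steps, plus a simple way to rebalance classes, can be sketched in plain Python. The sketch assumes images arrive as rows of (R, G, B) tuples, uses the ITU-R BT.601 luma weights for grayscale and nearest-neighbour sampling for the resize, and uses random oversampling as a stand-in for the balancing step. In practice a library such as OpenCV or Pillow would do the image work, and the duplicated minority samples would go through the augmentations described below.

```python
import random

TARGET_SIZE = 128  # images are downscaled to 128x128


def to_grayscale(rgb_rows):
    """Convert rows of (R, G, B) tuples to rows of 0-255 luma values (BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_rows]


def resize_nearest(gray_rows, size=TARGET_SIZE):
    """Nearest-neighbour resize of a grayscale image to size x size."""
    h, w = len(gray_rows), len(gray_rows[0])
    return [[gray_rows[y * h // size][x * w // size] for x in range(size)]
            for y in range(size)]


def oversample(samples, labels, seed=0):
    """Randomly duplicate samples of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_x, out_y = [], []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for sample in items + extra:
            out_x.append(sample)
            out_y.append(label)
    return out_x, out_y
```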

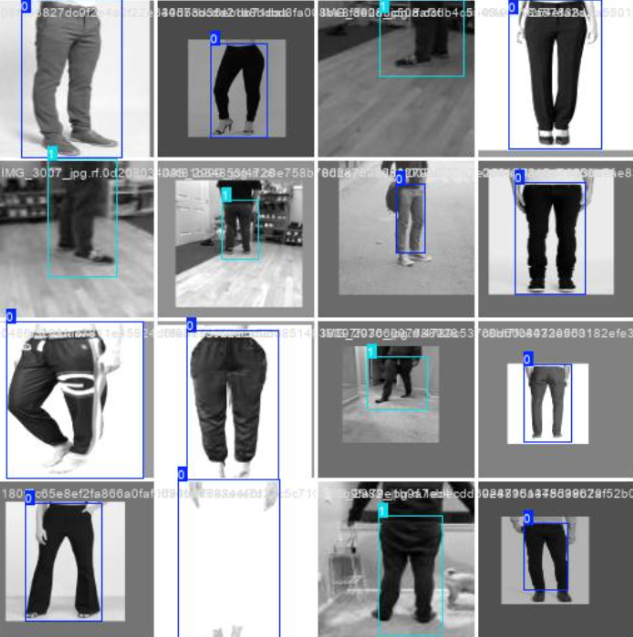

Images in the YOLO dataset must be annotated with bounding boxes. Image brightness is varied between -15% and +15% to mimic different lighting conditions, and random cropping is introduced to help the model identify the user when they are partially obscured.
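The annotation and augmentation steps can be sketched as follows. The label line uses the normalized `class x_center y_center width height` text format of Darknet and Ultralytics YOLO; treating brightness as a multiplicative factor, the crop scale range, and the helper names are our assumptions for illustration. A random crop must also shift and clip the bounding box, or the label no longer matches the image.

```python
import random
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # pixel coordinates (x0, y0, x1, y1)


def to_yolo_label(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel box to a YOLO label line with coordinates normalized to [0, 1]."""
    x0, y0, x1, y1 = box
    xc = (x0 + x1) / 2 / img_w
    yc = (y0 + y1) / 2 / img_h
    w = (x1 - x0) / img_w
    h = (y1 - y0) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def jitter_brightness(gray_rows, rng: random.Random, max_change: float = 0.15):
    """Scale grayscale intensities by a random factor in [1 - max_change, 1 + max_change]."""
    factor = rng.uniform(1 - max_change, 1 + max_change)
    return [[min(255, max(0, round(p * factor))) for p in row] for row in gray_rows]


def random_crop_window(img_w: int, img_h: int, rng: random.Random,
                       min_scale: float = 0.7) -> Box:
    """Pick a random crop covering min_scale to 100% of each image dimension."""
    cw = int(img_w * rng.uniform(min_scale, 1.0))
    ch = int(img_h * rng.uniform(min_scale, 1.0))
    cx0 = rng.randint(0, img_w - cw)
    cy0 = rng.randint(0, img_h - ch)
    return (cx0, cy0, cx0 + cw, cy0 + ch)


def crop_box(box: Box, crop: Box) -> Optional[Box]:
    """Clip a box to the crop window and express it in the cropped image's coordinates.

    Returns None if the object lies entirely outside the crop (its label is dropped).
    """
    x0, y0, x1, y1 = box
    cx0, cy0, cx1, cy1 = crop
    nx0, ny0 = max(x0, cx0), max(y0, cy0)
    nx1, ny1 = min(x1, cx1), min(y1, cy1)
    if nx1 <= nx0 or ny1 <= ny0:
        return None
    return (nx0 - cx0, ny0 - cy0, nx1 - cx0, ny1 - cy0)
```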

Features

- Preprocessed image
- Dense-layer output of the CNN, used as input features for the CNN hybrids
- Image with bounding boxes, used for YOLO

Block Diagrams

3.4. Autonomous Navigation

After we finalize the learning model and improve its accuracy, we plan to shift our focus to mapping the environment so the device can move from point A to point B.

Sensor Integration

- Encoders on Wheels: Install encoders to accurately measure the distance traveled and maintain precise motion control.

- IMUs (Inertial Measurement Units): Utilize IMUs to track orientation and movement, ensuring the robot remains stable and follows the intended path.

- Ultrasonic Sensors and LiDAR: Equip the robot with ultrasonic sensors and LiDAR for obstacle detection and distance measurement.
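Wheel encoders feed a dead-reckoning (odometry) estimate of the robot's pose. The sketch below assumes a differential-drive base with two independently driven wheels, which is an assumption about FollowBot's drivetrain; the tick count per revolution, wheel radius, and wheel base are hypothetical values to be replaced with measured ones. Odometry accumulates drift over time, which is why it is usually combined with IMU and range data.

```python
import math
from dataclasses import dataclass

# Hypothetical hardware constants; replace with FollowBot's measured values.
TICKS_PER_REV = 360
WHEEL_RADIUS_M = 0.05
WHEEL_BASE_M = 0.30  # distance between the left and right wheels


@dataclass
class Pose:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians, kept in [-pi, pi)


def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Dead-reckoning update from encoder ticks counted since the previous call."""
    metres_per_tick = 2 * math.pi * WHEEL_RADIUS_M / TICKS_PER_REV
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_center = (d_left + d_right) / 2
    d_theta = (d_right - d_left) / WHEEL_BASE_M
    # Midpoint integration: move along the average heading over this step.
    mid_heading = pose.theta + d_theta / 2
    new_theta = (pose.theta + d_theta + math.pi) % (2 * math.pi) - math.pi
    return Pose(pose.x + d_center * math.cos(mid_heading),
                pose.y + d_center * math.sin(mid_heading),
                new_theta)
```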

Data Processing and Fusion

- Collect and integrate real-time data from the encoders, IMUs, ultrasonic sensors, and LiDAR.

- Use sensor fusion techniques to improve the accuracy of the robot's perception of its environment.
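One of the simplest sensor-fusion techniques is a scalar Kalman filter, which weights each measurement by how noisy it is. The sketch below fuses ultrasonic and LiDAR readings of the distance to a single obstacle; the noise levels (about 3 cm and 1 cm standard deviation), the process noise, and the sample readings are assumed values. A full system would more likely run a multi-state filter, for example an extended Kalman filter over the robot's pose.

```python
class ScalarKalman:
    """1-D Kalman filter for a slowly changing quantity (here: distance to an obstacle)."""

    def __init__(self, x0: float, p0: float, process_var: float):
        self.x = x0            # current estimate
        self.p = p0            # variance of the estimate
        self.q = process_var   # random-walk drift allowed between steps

    def predict(self) -> None:
        """Time update: the estimate is unchanged but becomes less certain."""
        self.p += self.q

    def update(self, z: float, meas_var: float) -> float:
        """Measurement update with reading z whose noise variance is meas_var."""
        k = self.p / (self.p + meas_var)                   # Kalman gain
        self.x += k * (z - self.x)
        self.p = self.p * meas_var / (self.p + meas_var)   # equals (1 - k) * p
        return self.x


# Assumed sensor noise variances (standard deviations of 3 cm and 1 cm).
ULTRASONIC_VAR = 0.03 ** 2
LIDAR_VAR = 0.01 ** 2

if __name__ == "__main__":
    kf = ScalarKalman(x0=0.0, p0=1e6, process_var=1e-4)  # very uncertain start
    # Made-up (ultrasonic, LiDAR) readings in metres, for illustration only.
    for us, lidar in [(1.52, 1.49), (1.47, 1.50), (1.55, 1.51)]:
        kf.predict()
        kf.update(us, ULTRASONIC_VAR)
        kf.update(lidar, LIDAR_VAR)
        print(f"fused distance: {kf.x:.3f} m (variance {kf.p:.2e})")
```

Because the LiDAR variance is smaller, its readings pull the estimate harder, and the fused variance ends up below that of either sensor alone.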

Path Planning

- Implement Dijkstra's algorithm to calculate the lowest-cost route from the starting point to the destination while avoiding detected obstacles.

- Continuously update the planned path as new obstacles are detected in real-time.
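A minimal sketch of Dijkstra's algorithm on a 2-D occupancy grid (0 = free, 1 = obstacle), assuming the map has already been discretized into cells. Moves are 8-connected with diagonal cost √2, and diagonal moves that would clip an obstacle's corner are disallowed; both are design choices for the sketch. Replanning when a new obstacle appears amounts to marking its cell as occupied and running the search again from the robot's current cell.

```python
import heapq
import math

# (row step, column step, cost): 4 straight moves and 4 diagonal moves.
MOVES = [(-1, 0, 1.0), (1, 0, 1.0), (0, -1, 1.0), (0, 1, 1.0),
         (-1, -1, math.sqrt(2)), (-1, 1, math.sqrt(2)),
         (1, -1, math.sqrt(2)), (1, 1, math.sqrt(2))]


def dijkstra_grid(grid, start, goal):
    """Return the lowest-cost list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry; a shorter route was already found
        r, c = cell
        for dr, dc, cost in MOVES:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            if dr and dc and (grid[r][nc] or grid[nr][c]):
                continue  # no cutting across an obstacle's corner
            nd = d + cost
            if nd < dist.get((nr, nc), math.inf):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = cell
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```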

Object Detection and Tracking

- Integrate the YOLO (You Only Look Once) machine learning model to enable real-time object detection and user identification.

- Use YOLO to dynamically recognize and avoid obstacles while following the designated user.
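Once YOLO returns detections, the robot still has to decide which box belongs to the user it is following. A simple sketch, assuming detections arrive as `(class_name, confidence, (x0, y0, x1, y1))` tuples and that the model was trained with a hypothetical `target_user` class: keep confident target detections, prefer the one closest to where the user was in the previous frame, and fall back to the most confident box when there is no history. This nearest-centroid rule is a basic tracking heuristic, not a full multi-object tracker.

```python
import math
from typing import List, Optional, Tuple

Detection = Tuple[str, float, Tuple[float, float, float, float]]  # (class, confidence, box)


def box_center(box):
    """Centre (x, y) of an (x0, y0, x1, y1) box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2, (y0 + y1) / 2)


def select_target(detections: List[Detection],
                  target_class: str = "target_user",
                  min_conf: float = 0.5,
                  prev_center: Optional[Tuple[float, float]] = None) -> Optional[Detection]:
    """Pick the detection to follow, or None if the user is not confidently visible."""
    candidates = [d for d in detections if d[0] == target_class and d[1] >= min_conf]
    if not candidates:
        return None
    if prev_center is None:
        # No history yet: trust the most confident detection.
        return max(candidates, key=lambda d: d[1])
    # Otherwise prefer the box whose centre moved least since the last frame.
    return min(candidates, key=lambda d: math.dist(box_center(d[2]), prev_center))
```

All other detections remain available to the obstacle-avoidance logic.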

Motion Control

- Develop motion control algorithms to manage the robot's movement along the planned path.

- Utilize feedback from encoders and other sensors to ensure smooth and precise movements.
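A common choice for this kind of feedback loop is a PID controller. The sketch below is one possible arrangement, assuming a differential-drive base: one PID loop holds a desired following distance, a second steers to keep the user's bounding box centred in the camera frame, and the two outputs are mixed into left and right wheel commands in [-1, 1]. The gains, the 1.5 m following distance, and the mixing scheme are hypothetical; outputs are clamped, but integral windup is not handled.

```python
class PID:
    """Discrete PID controller with output clamping."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def step(self, error: float, dt: float) -> float:
        """Advance one control period of length dt seconds and return the clamped output."""
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, out))


FOLLOW_DISTANCE_M = 1.5  # hypothetical gap to keep between robot and user

distance_pid = PID(kp=0.8, ki=0.05, kd=0.1)
heading_pid = PID(kp=1.2, ki=0.0, kd=0.05)


def wheel_commands(distance_m, box_center_x, frame_width, dt):
    """Return (left, right) wheel commands in [-1, 1]."""
    forward = distance_pid.step(distance_m - FOLLOW_DISTANCE_M, dt)  # too far -> drive forward
    offset = (box_center_x - frame_width / 2) / (frame_width / 2)     # -1 (left edge) to 1 (right edge)
    turn = heading_pid.step(offset, dt)                               # user on the right -> turn right
    left = max(-1.0, min(1.0, forward + turn))
    right = max(-1.0, min(1.0, forward - turn))
    return left, right
```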

System Integration and Testing

- Integrate all subsystems (sensor data fusion, path planning, object detection, and motion control) into a cohesive navigation system.

- Conduct extensive testing in various environments to validate the robot's autonomous navigation capabilities.

- Collect and analyze performance data to make necessary adjustments and improvements.

By following these steps, we aim to develop a robust and reliable autonomous navigation system capable of precise and efficient movement in various environments.

We are currently working on steps 2 and 3 of our methodology.

4. Results

-

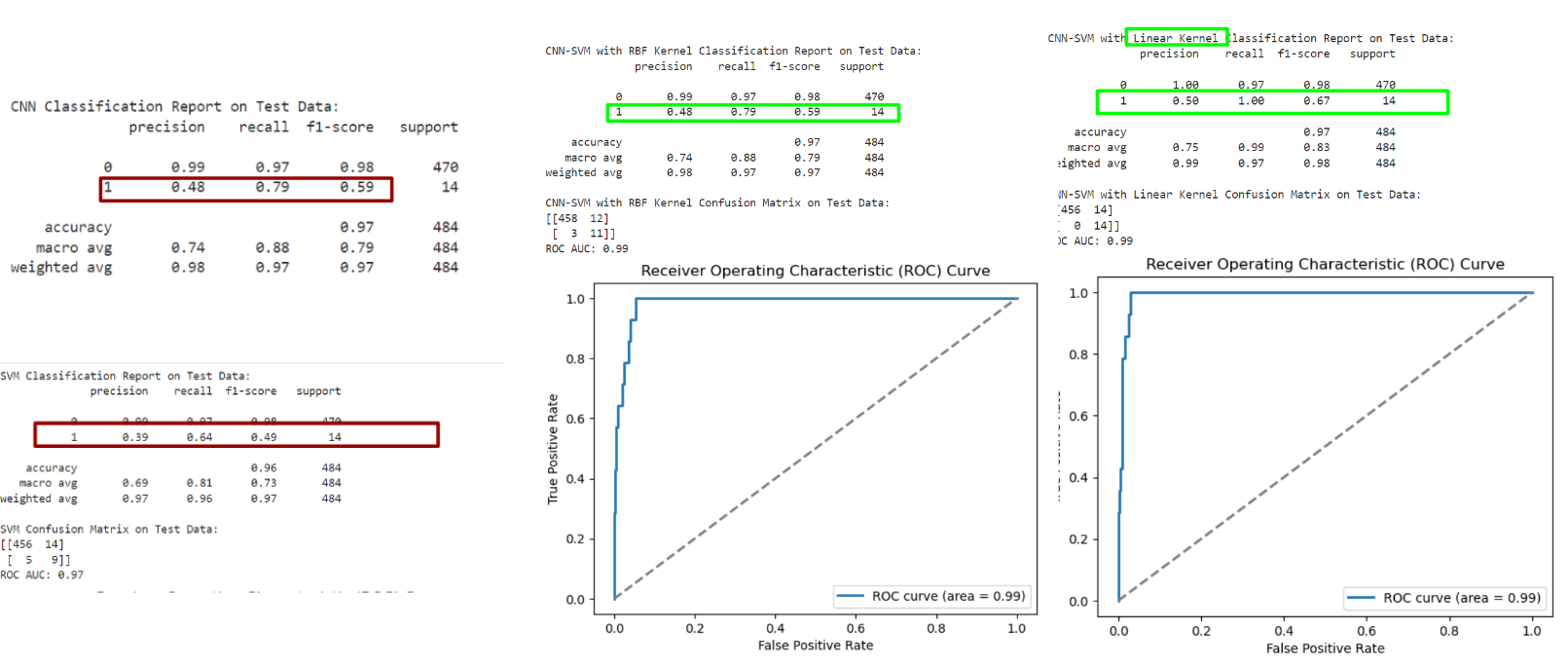

Tested several other machine learning models, including basic models, basic CNN and CNN-hybrids. Of which, we discovered weaknesses in precision, recall, and overfitting the training data:

-

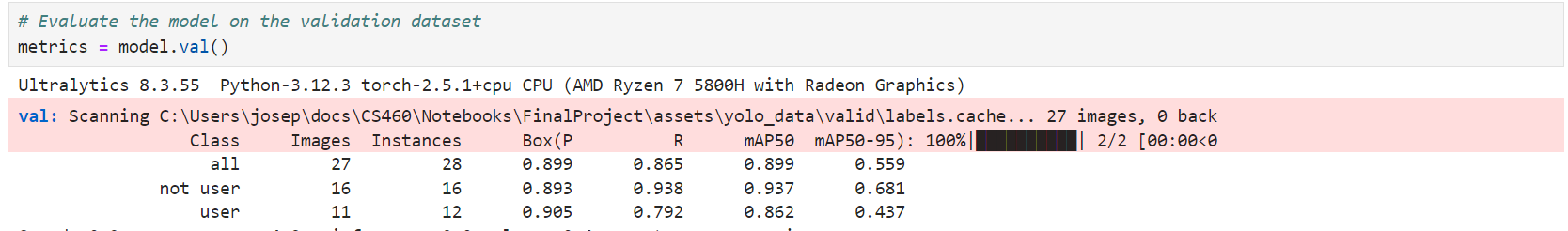

Created a YOLO model with mean average precision with a 50% IoU threshold (mAP50) of ~80%, alongside improved precision and recall:

-

Established a communication protocol between FollowBot and the web server to separate user interaction and data processing.
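mAP50 rests on a matching rule: a predicted box counts as a true positive only if its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. The sketch below shows that rule and the resulting precision and recall for a single image at a single confidence threshold, using greedy most-confident-first matching similar to common detection benchmarks. Full mAP50 additionally sweeps the confidence threshold, turns the precision-recall curve into an average precision per class, and averages over classes.

```python
def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thresh=0.5):
    """Precision and recall for one image.

    preds: list of (confidence, box); gts: list of ground-truth boxes.
    Predictions are matched greedily, most confident first, to the unmatched
    ground truth with the highest IoU at or above iou_thresh.
    """
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_thresh
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # predictions with no matching ground truth
    fn = len(gts) - tp    # ground-truth boxes the model missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```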

5. Conclusion

To date, our capstone project has achieved bidirectional data communication between the user and FollowBot, and we can issue commands to FollowBot. We have also laid the groundwork for integrating the machine learning models that will enhance the project.

We believe the model can still be improved by reducing its bias toward the majority class and its overfitting to the training data, so that it generalizes better to real-world inputs. Once we establish an initial following mechanism, we can deploy the model and test its compatibility with our existing architecture.

6. Future

We plan to have a functioning robot that uses the YOLO model for computer-vision-based object detection and avoidance, along with the ability to navigate autonomously from one location to another.

Going forward, we intend to improve our ML model in these areas:

- Resampling techniques: Research more sophisticated resampling techniques and re-evaluate the model using the original metrics

- Additional Metrics: Introduce metrics such as training time, computational load, and memory load

- Accessibility: Include photos of wheelchairs so that the model better serves our intended market

- Edge Cases: Introduce photos of ordinary objects and images of the target user in different clothes and settings to keep the model robust

- Deployment and Implementation: Save the improved model to a Raspberry Pi 4 with a camera module and keep refining it in a spirit of continuous improvement

For the capstone, we plan to develop a web app, accessible from mobile and desktop, for viewing statistics and controlling FollowBot (this is currently implemented locally). We will then create a mobile app with the same features, including voice and touch-screen controls, that also shows users their immediate usage data, such as power consumption and the weight being carried. All of the data we record will be fed back into the machine learning model.