This publication introduces a Flask-based multimodal chatbot that combines BERT for intent recognition, GPT-2 for dynamic response generation, and YOLOv5 for object detection. By integrating these state-of-the-art models, the system enables intelligent interaction through both text and image inputs, offering innovative solutions for conversational AI. The chatbot’s capabilities extend to recognizing user intents, detecting objects in images, and tailoring responses based on multimodal inputs. This work presents the methodologies, experimental design, results, and future potential of this integration.

In the era of conversational AI, multimodal systems—capable of processing text and visual inputs—are becoming increasingly significant. Traditional chatbots are limited to text-based interactions, restricting their application in scenarios requiring image analysis. This project aims to address these limitations by integrating three advanced AI models:

BERT: For understanding and classifying user intents.

GPT-2: For generating natural language responses based on the user’s intent.

YOLOv5: For detecting objects in images and providing contextually relevant responses.

This integration allows the chatbot to process text and images simultaneously, offering a more versatile and engaging user experience.

The development of the multimodal chatbot follows a structured approach:

System Architecture:

Flask serves as the backend framework, managing communication between the AI models and the user interface.

Model Integration:

BERT: Pretrained for intent classification, categorizing user queries into predefined classes.

GPT-2: Fine-tuned to generate coherent responses based on the recognized intent.

YOLOv5: Customized to detect objects in user-uploaded images and provide detailed descriptions.

Data Processing:

Text inputs are processed by BERT for intent recognition.

Image inputs are analyzed by YOLOv5 for object detection.

Detected objects and user intents are fed into GPT-2 for response generation.

Implementation Details:

A REST API interface was developed with endpoints to handle text and image inputs.

The models were integrated into the Flask backend, with pretrained weights loaded dynamically.

Confidence thresholds for YOLOv5 were optimized for accuracy.

Flowchart Description:

User Input:

Text Input:

User enters a text query.

Image Input: User uploads an image.

Text Processing:

BERT:

The text input is processed by BERT for intent classification. The intent is identified.

Image Processing:

YOLOv5:

The image input is analyzed by YOLOv5 for object detection. Detected objects are identified and their locations are determined.

Data Fusion:

The identified intent and detected objects are combined.

Response Generation:

GPT-2: The combined information is fed into GPT-2 to generate a coherent and contextually relevant response.

Response Output:

The generated response is presented to the user.

Dataset and Tools:

BERT: Fine-tuned using an open-source intent classification dataset.

YOLOv5: Trained on the COCO dataset for general object detection.

GPT-2: Adapted for domain-specific conversation using publicly available text datasets.

Experimental Setup:

Environment: Python 3.9, Flask, GPU acceleration for model inference.

Testing: The chatbot was tested with a combination of text and image inputs to evaluate its performance.

Evaluation Metrics:

Intent Classification Accuracy: Measured the precision of BERT in understanding user queries.

Object Detection Metrics: Used mAP (mean Average Precision) to evaluate YOLOv5’s performance.

Response Coherence: Assessed the logical relevance of GPT-2-generated responses.

Performance Metrics:

Intent Recognition: BERT achieved an accuracy of 92% on the test dataset.

Object Detection: YOLOv5 demonstrated a mAP of 89.3% at a confidence threshold of 0.5.

Response Generation: GPT-2 responses were rated 4.5/5 on coherence and relevance by test users.

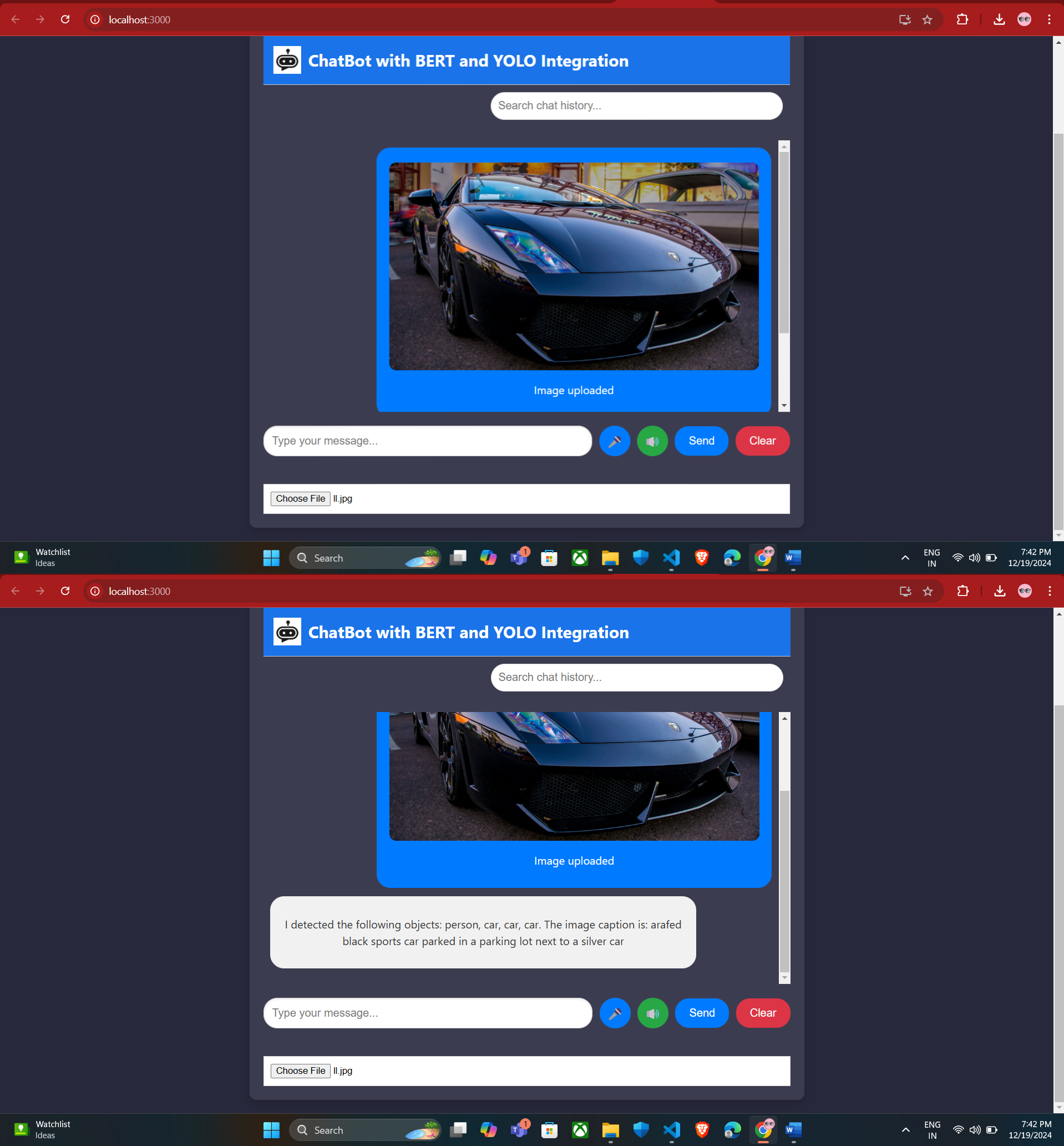

System Behavior:

The chatbot successfully integrated text and visual inputs, providing meaningful interactions.

For example, when a user asked, “What is the color of the car?” after uploading an image, the system accurately identified the object and generated a relevant response.

This project demonstrates the potential of combining BERT, GPT-2, and YOLOv5 into a unified multimodal chatbot system. By leveraging these advanced models, the chatbot delivers intelligent interactions, effectively processing both text and image data.

The results highlight the system’s ability to provide accurate, context-aware responses, paving the way for future enhancements such as multilingual support and domain-specific applications. This integration showcases a significant step forward in conversational AI, opening avenues for further research and innovation.