Introduction

In recent years, Brazil has faced critical challenges related to deforestation and forest fires, mainly in the Amazon Forest, one of the largest biomes in the world. The destruction caused by illegal fires not only threatens biodiversity, but also contributes significantly to greenhouse gas emissions, worsening climate change. Historically, the increase in fires occurs due to unsustainable agricultural practices, illegal logging and the disorderly expansion of agricultural frontiers. According to data from INPE (National Institute for Space Research), the number of fires in the Amazon has reached alarming levels in recent years.

In this context, there is a need for technologies that can monitor and quickly identify fire outbreaks, contributing to more effective control actions and minimizing environmental and socioeconomic damage.

The Project

This project uses computer vision and the powerful YOLO (You Only Look Once) architecture to develop a system capable of detecting signs of fire from a certain distance. The proposal aims to demonstrate how data and artificial intelligence can play a crucial role in environmental monitoring and prevention of natural disasters.

Objective

The main objective is to create an AI-based solution that facilitates the rapid identification of forest fires, contributing to environmental preservation and supporting public policies and conservation initiatives.

# Importing the necessary libraries: #Computer vision from ultralytics import YOLO import torchvision.transforms as transforms import torchvision.transforms.functional as F import albumentations as A #Image processing: from PIL import Image, ImageDraw import cv2 # Data Manipulation import pandas as pd from torch.utils.data import Dataset, DataLoader from collections import Counter # Data Visualization import matplotlib.pyplot as plt import seaborn as sns #File Handling import os import shutil from dotenv import load_dotenv #Numerical Operations and Statistics import numpy as np import random #DeepLearning import torch # Load variables from .env file load_dotenv()

To explore and process the D-fire dataset, a custom class was created that facilitates data loading and management, in addition to preparing images and labels for training the YOLOv8 model. This dataset will be able to:

-

Organizes images and labels, automatically associating them.

-

Translates YOLO coordinates to absolute bounding boxes, ensuring integrity and consistency.

-

Applies synchronized transformations to images and bounding boxes, increasing the robustness of the model.

class CustomDataset(Dataset): def __init__(self, root_dir, dataset_type='train', transform=None): self.root_dir = root_dir self.transform = transform # Define image and label directories if dataset_type == 'train': image_dir = os.path.join(root_dir, 'train/images') label_dir = os.path.join(root_dir, 'train/labels') elif dataset_type == 'test': image_dir = os.path.join(root_dir, 'test/images') label_dir = os.path.join(root_dir, 'test/labels') elif dataset_type =='train_new': image_dir = os.path.join(root_dir, 'images') label_dir = os.path.join(root_dir, 'labels') elif dataset_type =='test_new': image_dir = os.path.join(root_dir, 'images') label_dir = os.path.join(root_dir, 'labels') else: raise ValueError("dataset_type deve ser 'train' ou 'test'.") # Associate images with labels self.image_to_label_map = { os.path.join(image_dir, f): os.path.join(label_dir, f.replace('.jpg', '.txt')) for f in os.listdir(image_dir) if f.endswith('.jpg') } # Get only images that have labels self.image_paths = list(self.image_to_label_map.keys()) def __len__(self): return len(self.image_paths) def __getitem__(self, idx): img_path = self.image_paths[idx] label_path = self.image_to_label_map[img_path] # Upload image image = Image.open(img_path).convert('RGB') image = np.array(image) # Convert to numpy array, needed for Albumentations # Read image dimensions img_height, img_width = image.shape[:2] # Read the bounding boxes of the YOLO .txt file boxes = [] labels = [] with open(label_path, 'r') as f: for line in f: class_id, x_center, y_center, width, height = map(float, line.strip().split()) # Correct the bounding box coordinate to ensure valid values xmin = (x_center - width / 2) * img_width ymin = (y_center - height / 2) * img_height xmax = (x_center + width / 2) * img_width ymax = (y_center + height / 2) * img_height # Ensure that xmin < xmax and ymin < ymax if xmax <= xmin or ymax <= ymin: continue # Ignore invalid boxes # Ensure coordinates are within image boundaries xmin = max(0, xmin) ymin = max(0, ymin) xmax = min(img_width, xmax) ymax = min(img_height, ymax) boxes.append([xmin, ymin, xmax, ymax]) labels.append(int(class_id)) # Class as integer # If there are no valid boxes, set an empty box if len(boxes) == 0: boxes = torch.zeros((0, 4), dtype=torch.float32) # Empty tensor to indicate absence of boxes labels = torch.zeros((0,), dtype=torch.int64) else: boxes = torch.tensor(boxes, dtype=torch.float32) labels = torch.tensor(labels, dtype=torch.int64) # Apply Albumentations transformations if self.transform: transformed = self.transform(image=image, bboxes=boxes.tolist(), labels=labels.tolist()) image = transformed['image'] boxes = torch.tensor(transformed['bboxes'], dtype=torch.float32) labels = torch.tensor(transformed['labels'], dtype=torch.int64) # Target configuration target = { 'boxes': boxes, 'labels': labels, 'image_id': torch.tensor([idx]), 'area': (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1]) if boxes.numel() > 0 else torch.tensor([]), 'iscrowd': torch.zeros((len(boxes),), dtype=torch.int64) } return image, target

With the CustomDataset class implemented, two additional functions were created to streamline working with the dataset: one for visualizing images with bounding boxes and another for grouping samples into batches.

# Function to view image with bounding boxes def plot_image_with_boxes(image, target): image = transforms.functional.to_pil_image(image) draw = ImageDraw.Draw(image) for box in target['boxes']: draw.rectangle(((box[0], box[1]), (box[2], box[3])), outline="red", width=2) plt.imshow(image) plt.axis('off') plt.show() # Function to group samples def collate_fn(batch): images = [] targets = [] for b in batch: images.append(b[0]) targets.append(b[1]) return images, targets

# Instantiating the Training and Test datasets train_dataset = CustomDataset(root_dir=os.getenv("DATASET_PATH"), dataset_type='train') test_dataset = CustomDataset(root_dir=os.getenv("DATASET_PATH"), dataset_type='test')

Exploratory Data Analysis (EDA)

In this section, an Exploratory Data Analysis (EDA) will be carried out with the aim of understanding the main characteristics of the dataset used in this project. EDA is a key step in gaining insights and guiding decisions about data preprocessing and modeling. During the analysis, the following aspects will be investigated:

-

Total Number of Images in Each Dataset: Verification of the number of images available in the training, validation and test sets.

-

Number of Labeled and Unlabeled Images: Identification of the proportion of images that have annotations (bounding boxes) compared to those that do not.

-

Distribution between Classes: Analysis of the frequency of each class present in the dataset to understand how the data is organized.

-

Dataset Imbalance Check: Assessment of the existence of imbalances in the classes, which can influence the model's performance.

-

Analysis of Bounding Box Dimensions: Investigation of the dimensions of bounding boxes, such as height and width, to understand variations and identify possible anomalies.

Esse tipo de análise permitirá identificar problemas como desbalanceamento, imagens sem rótulos e inconsistências nas anotações, que podem impactar o treinamento e a performance do modelo.

To check the number of images available in the datasets, the function count_images_per_dataset was created, which receives the training and test datasets as parameters. This function uses the __len__ method of the custom class CustomDataset, which returns the total number of images in each dataset. The purpose of this check is to ensure that the number of images matches expectations and that there are no inconsistencies or data loading issues. Image counting is an essential step in the exploratory analysis process, as it helps plan model training and validation, considering the appropriate size of each set.

def count_images_per_dataset(train_dataset, test_dataset): print(f"Total images in the training set: {len(train_dataset)}") print(f"Total images in the test set: {len(test_dataset)}") count_images_per_dataset(train_dataset, test_dataset)

Total images in the training set: 17340

Total images in the test set: 3876

After executing the function, it was identified that:

The numbers indicate that the training set has the largest proportion of data, which is ideal for ensuring that the model learns robustly. The test set, although smaller, seems adequate to evaluate the generalization of the model.

This initial count also provides a basis for analyzing potential imbalances or issues in the data that could impact model performance during training and validation.

For the next stage of the exploratory analysis, the number of images with and without bounding box descriptions will be evaluated in both training and testing data sets. For this, the function count_images_with_labels was developed, which goes through all the images in the dataset and checks whether each one has at least one associated bounding box. This analysis allows you to understand the quality of the annotated data, identify possible problems in the labeling process and determine the quantity of images directly usable for training and validating the model. A high percentage of images without labels may indicate:

-

The need to clean or delete these images.

-

The possibility of performing additional annotations to improve model performance.

def count_images_with_labels(dataset): total_images = len(dataset) images_with_labels = 0 images_without_labels = 0 for idx in range(total_images): _, target = dataset[idx] if len(target['boxes']) > 0: images_with_labels += 1 else: images_without_labels += 1 print(f"Images with labels: {images_with_labels}") print(f"Images without labels: {images_without_labels}") print(f"Percentage without labels: {images_without_labels / total_images * 100:.2f}%") # Para treino print("Training Set:") count_images_with_labels(train_dataset) # Para teste print("\nTest Set:") count_images_with_labels(test_dataset)

Training Set:

Images with labels: 9507

Images without labels: 7833

Percentage without labels: 45.17%

Test Set:

Images with labels: 2067

Images without labels: 1809

Percentage without labels: 46.67%

The numbers resulting from the execution of the function show that approximately 45% of the images in the training set and 46% in the test set do not have bounding boxes associated. This may occur due to:

- Absence of objects in the scene (scenarios where no fire or sign of fire was detected).

- Problems with annotation during dataset construction.

This allows you to define future actions to train the model:

Images without labels:

- Decide whether they will be removed or kept in the pipeline, depending on the model approach.

- Images without labels can be useful for training the model to recognize "neutral" images (without fires), contributing to a lower false positive rate.

Images with labels:

- Investigate the quality of existing annotations and ensure that the bounding boxes are correct and aligned with the expected objects

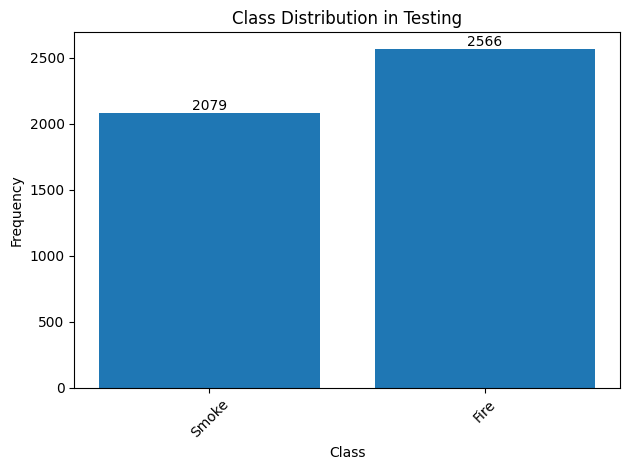

Class Distribution Analysis:

Class distribution analysis is an essential step to understand the proportion of different types of objects annotated in the dataset. This investigation allows us to identify possible imbalances between classes, which can impact model performance, especially in tasks such as fire detection, where unbalanced classes can lead to a bias in the prediction.

The objective of this analysis is to calculate the frequency of each class present on the labels and visualize it through a bar graph, facilitating the analysis and identification of potential unbalance problems.

# Function to count class frequencies def plot_class_distribution(dataset, dataset_type): class_counts = Counter() for _, target in dataset: class_counts.update(target['labels'].tolist()) # Assuming you have a class mapping (if not, replace with the list of classes) class_names = { 0: 'Smoke', # Mapping example 1: 'Fire', # Add more classes as needed } # Updating keys with class names class_labels = [class_names.get(label, str(label)) for label in class_counts.keys()] # Plotting the graph fig, ax = plt.subplots() bars = ax.bar(class_labels, class_counts.values()) # Adding the values to the top of each column for bar in bars: height = bar.get_height() ax.text(bar.get_x() + bar.get_width() / 2, height, str(int(height)), ha='center', va='bottom') # Adjustments to the graph plt.xlabel('Class') plt.ylabel('Frequency') plt.title(f'Class Distribution in {dataset_type.capitalize()}') plt.xticks(rotation=45) # To ensure that labels do not overlap plt.tight_layout() # Adjust layout to avoid overlapping # Displaying the graph plt.show() # Count and color for practice set plot_class_distribution(train_dataset, "Training") # Count and plot for the test set plot_class_distribution(test_dataset, "Testing")

The graphs above clearly show the frequency of each class in both data sets. Including exact values above the bars provides greater clarity for interpretation

Impact of Imbalance:

Although the imbalance is not extreme, it may be necessary to use compensation techniques during training, such as:

- Adjustment of loss function weights to balance the influence of each class.

- Increase of the minority class (

Smoke) with data augmentation techniques. - Application of undersampling or oversampling.

Unbalance Proportionality Check

After identifying a class imbalance in the dataset, the next step will be to check whether this imbalance is proportionally distributed between the training and testing sets. This analysis is important to ensure that the model will be trained and evaluated under consistent conditions, avoiding possible biases during validation.

Methodology

-

Calculation of Proportions: For each class in the training and testing datasets, the proportion was calculated in relation to the total samples in the set. This metric provides a clearer view of the representativeness of each class, regardless of the absolute size of the sets.

-

Comparison of Proportions: The proportions of each class between the two sets were compared. The absolute difference between the proportions was calculated and compared to a defined tolerance value, in this case, 5% (0.05).

-

Imbalance Classification: If the difference between the proportions is less than or equal to the tolerance for all classes, the imbalance between the two sets will be considered similar.

Otherwise, it will be reported that the imbalance is different.

def calculate_class_distribution(dataset): # Count class frequencies class_counts = Counter() for _, target in dataset: class_counts.update(target['labels'].tolist()) # Calculate class proportions total_samples = sum(class_counts.values()) class_proportions = {cls: count / total_samples for cls, count in class_counts.items()} return class_proportions def check_class_imbalance(train_dataset, test_dataset, tolerance=0.05): # Calculate class distributions for training and testing train_proportions = calculate_class_distribution(train_dataset) test_proportions = calculate_class_distribution(test_dataset) # Show header print(f"{'Class':<10} {'Proportion in Training':<20} {'Proportion in Test':<20} {'Difference':<20}") print("-" * 70) imbalance_equal = True for cls in train_proportions: test_prop = test_proportions.get(cls, 0) # If the class is not in the test set train_prop = train_proportions[cls] # Calculate the difference diff = abs(train_prop - test_prop) # Print numeric values print(f"{cls:<10} {train_prop:.4f} {test_prop:.4f} {diff:.4f}") # Check if the difference in proportions is greater than the tolerance if diff > tolerance: imbalance_equal = False # Resultado final if imbalance_equal: print("\nThe class imbalance is similar between training and testing.") else: print("\nThe class imbalance is different between training and testing.") # Call the function to check the imbalance check_class_imbalance(train_dataset, test_dataset)

The following table presents the proportions of classes in the training and testing sets, as well as the differences between them:

| Class | Proportion in Training | Proportion in Test | Difference |

|---|---|---|---|

| 0 (Smoke) | 0.4500 | 0.4476 | 0.0025 |

| 1 (Fire) | 0.5500 | 0.5524 | 0.0025 |

The maximum difference between the proportions of each class was 0.0025, which is significantly lower than the established tolerance of 0.05 (5%).

Analysis of Bounding Boxes - Distribution of box sizes

To better understand the behavior of the bounding boxes in the training dataset, we performed a statistical analysis of the widths and heights of the bounding boxes. This analysis aims to identify patterns in box sizes and understand the variability in object dimensions.

The average size and standard deviation for the widths and heights of the boxes were calculated. The calculation was done for each box, extracting the dimensions of each object from the coordinates provided on the labels.

widths = [] heights = [] for _, target in train_dataset: if len(target['boxes']) > 0: widths.extend((target['boxes'][:, 2] - target['boxes'][:, 0]).tolist()) heights.extend((target['boxes'][:, 3] - target['boxes'][:, 1]).tolist()) print(f"Medium width: {np.mean(widths):.2f}, Standard deviation: {np.std(widths):.2f}") print(f"Average height: {np.mean(heights):.2f}, Standard deviation: {np.std(heights):.2f}")

Medium width: 204.10, Standard deviation: 246.33

Average height: 124.81, Standard deviation: 130.17

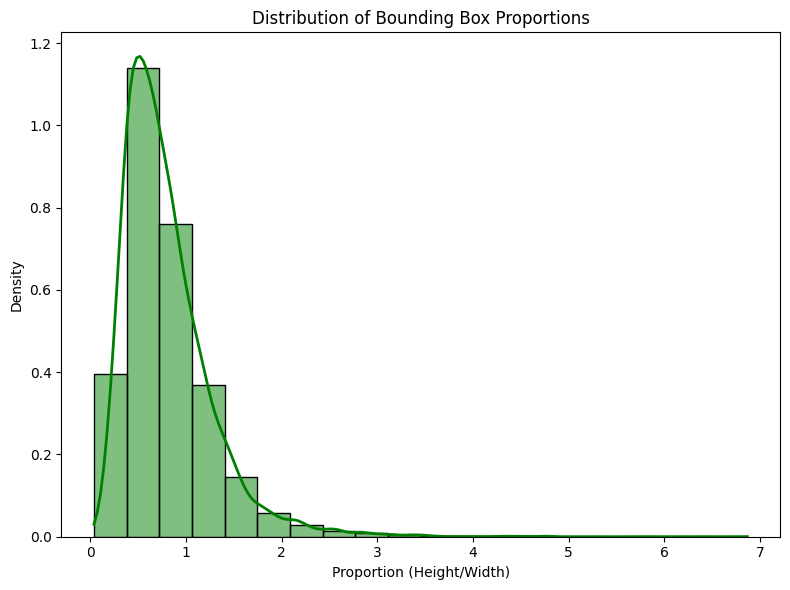

These values indicate that, on average, the bounding boxes in the training dataset are considerably wider than they are tall, with significant variation between boxes (particularly in relation to widths). The high standard deviation suggests that there is a large diversity in the size of the detected objects. To facilitate the understanding of the distributions of the dimensions of the bounding boxes, a histogram will be generated that shows the frequency of the proportions between height and width of the bounding boxes in the dataset. These proportions were calculated as the ratio between the height and width of each box, as shown in the following graph.

def plot_bounding_box_proportions(heights, widths): # Calculate proportion (Height/Width) proportions = [h / w for h, w in zip(heights, widths) if w > 0] # Generate a numeric table with statistics proportions_df = pd.DataFrame(proportions, columns=['Proportion (Height/Width)']) # Descriptive statistics stats = proportions_df.describe().transpose() # Display the table with statistics print("Table with Proportion Statistics:") display(stats) # Chart settings plt.figure(figsize=(8, 6)) # Plot the histogram of proportions with the density curve sns.histplot(proportions, kde=True, bins=20, color='green', stat='density', line_kws={'color': 'red', 'lw': 2}) # Adjustments to the graph plt.xlabel('Proportion (Height/Width)') plt.ylabel('Density') plt.title('Distribution of Bounding Box Proportions') plt.tight_layout() # Adjust to avoid overlap # Display the graph plt.show() plot_bounding_box_proportions(heights, widths)

Table with Proportion Statistics:

| Statistic | Count | Mean | Std Dev | Min | 25% | 50% | 75% | Max |

|---|---|---|---|---|---|---|---|---|

| Proportion (Height/Width) | 21469.0 | 0.800365 | 0.474677 | 0.038199 | 0.477401 | 0.696629 | 1.0 | 6.866667 |

In addition to visually observing the distributions, descriptive statistics of the proportions were also calculated to provide a more detailed view of the data. The table above displays the main metrics of height-to-width ratios. These results indicate that most boxes have aspect ratios close to 1, which suggests that height and width are relatively similar for most objects. The mean of 0.800 and standard deviation of 0.475 reinforce this idea, as most proportions are concentrated around values close to 1.

However, the presence of a maximum value of 6.867 reveals that there are some boxes that are much taller than they are wide, which may indicate objects with vertical orientations or atypical characteristics in relation to the rest. The distribution of proportions in the histogram shows a tendency for more compact boxes to predominate (proportions closer to 1), but also reveals a tail to the right, which represents cases in which the height of the box is significantly greater than its width.

Implications for the Model:

This information is crucial when configuring your object detection model, as it can help you define anchors and choose training strategies. The presence of objects with very high proportions may indicate the need for greater diversity in anchors, so that the model is able to better deal with objects in different orientations, a situation that should be evaluated during model training.

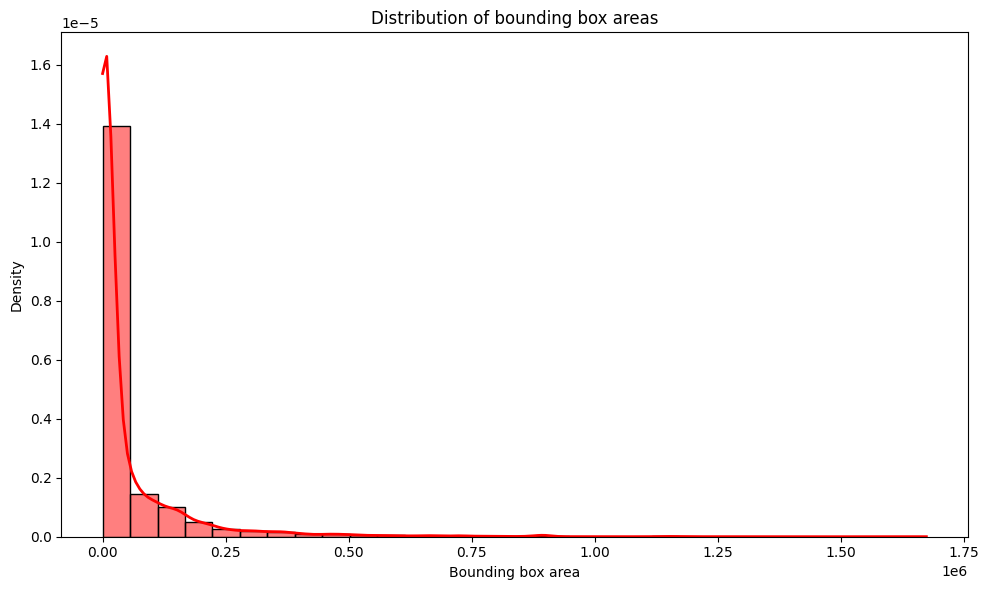

Analysis of Bounding Box Areas

The analysis of the bounding box areas is essential to understand the size distribution of the objects detected in the dataset. The area of a bounding box is calculated by multiplying its width by its height, and can provide important information about the scale and variation of the objects present. To visualize the distribution of box areas, a histogram with 30 bins was generated, along with a density curve, to observe how the areas are distributed within the data set. The graph generated below shows the distribution of the bounding box areas.

# Areas being a list of areas of the bounding boxes, as shown in the previous code areas = [area.item() for _, target in train_dataset for area in target['area']] # Chart configuration plt.figure(figsize=(10, 6)) # Plotting the histogram with 30 bins and normalizing the frequencies sns.histplot(areas, bins=30, kde=True, color='red', stat='density', line_kws={'color': 'red', 'lw': 2}) # Adjusting the labels and title plt.xlabel('Bounding box area') plt.ylabel('Density') plt.title('Distribution of bounding box areas') # Displaying the graph plt.tight_layout() plt.show()

By observing the distribution graph of the bounding box areas with the histogram and the density curve, we can draw the following conclusions:

-

Asymmetrical Distribution: The distribution of bounding box areas presents a significant asymmetry, with a large concentration of very small areas close to zero. The density is highest at low values, which indicates that most of the bounding boxes in the dataset are very small.

-

Long Tail: The tail of the distribution extends to very large areas, which suggests the presence of some bounding boxes that are significantly larger in relation to most others. This may represent objects that occupy large parts of the image or that are on a larger scale compared to more common objects.

-

Data Concentration: Most areas are concentrated around very small values, probably representing smaller objects or objects detectable in smaller regions of the image. This may be relevant to the type of objects your detection model is being trained to identify.

Implications for the Model

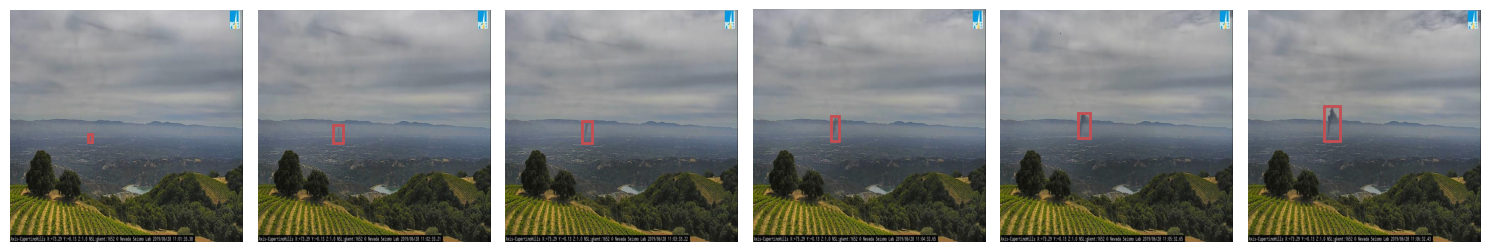

Area analysis is also useful for configuring the object detection model. When there is a large variation in the size of the boxes, as observed, it is important to adjust the model anchors to deal with objects of different scales. This may require the use of multiple anchor scales to improve the model's accuracy in detecting both small and large objects. To explain this idea, let's take a sample example from the training dataset:

def plot_multiple_images(dataset, num_images=5): """Function to display multiple images side by side""" fig, axes = plt.subplots(1, num_images, figsize=(15, 5)) # Create a line with 'num_images' images for i in range(num_images): image, target = dataset[i] ax = axes[i] ax.imshow(image) for box in target['boxes']: x_min, y_min, x_max, y_max = box ax.add_patch(plt.Rectangle((x_min, y_min), x_max - x_min, y_max - y_min, linewidth=2, edgecolor='r', facecolor='none')) ax.axis('off') # Remove axes plt.tight_layout() # Adjust the layout so that images do not overlap plt.show() # Display 6 images side by side plot_multiple_images(train_dataset, num_images=6)

In the figure above, a sample of multiple frames from a video is shown. It is observed that, as the images progress, the dimensions of the bounding boxes change to make the detections. As smoke identification is part of the nature of our data, these large variations in box dimensions occur due to the need to capture this dynamic phenomenon accurately.

In summary, the analysis of the distribution of the bounding box areas highlights that the model needs to be robust enough to deal with objects of varying sizes. Furthermore, variation in areas can directly influence how the model performs detections. To mitigate these challenges and improve performance, it may be necessary to adopt strategies such as adjusting anchors, which should reflect the specific characteristics of the data, and applying data augmentation techniques to increase sample diversity and balance the distribution of sample sizes. boxes.

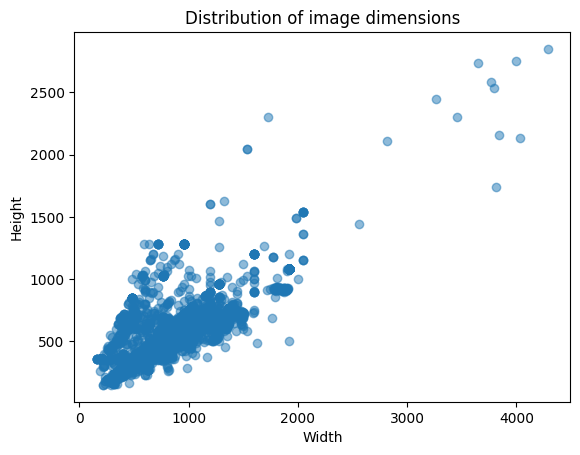

Analysis of Image Sizes and Resolutions

To understand the diversity of image dimensions in the dataset, an analysis of the heights and widths of all images was performed. Evaluating dimensions is crucial to identify possible inconsistencies or patterns that could impact model training.

heights, widths = [], [] for img_path in train_dataset.image_paths: with Image.open(img_path) as img: heights.append(img.height) widths.append(img.width) print(f"Average height: {np.mean(heights):.2f}, Medium width: {np.mean(widths):.2f}") print(f"Minimum height: {np.min(heights)}, Minimum width: {np.min(widths)}")

Average height: 583.28, Medium width: 982.89

Minimum height: 150, Minimum width: 162

When analyzing the results above, a significant variation in image resolutions is observed, especially when considering minimum values. This variation may be associated with different capture conditions, such as the distance from the camera, the quality of the devices used or the varied scenarios present in the videos. These factors reflect the diversity in the forms of capture, highlighting the heterogeneity of the data available in the set.

plt.scatter(widths, heights, alpha=0.5) plt.xlabel('Width') plt.ylabel('Height') plt.title('Distribution of image dimensions') plt.show()

- Data Dispersion Analysis

High Image Concentration:

- Most images present specific specifications in a width range between 0 and 1200 pixels and height between 0 and 600 pixels. This suggests that the dataset is predominantly composed of moderate resolution images, many of them with balanced width and height proportions.

- This concentration may be an indication that the images were standardized or follow a specific format, such as rectangular or square proportions more common in the data set.

Constant Width Image Group (~1200 pixels):

- Observe a point of greater density around 1200 pixels wide, with variable height. This may indicate that many images have been adjusted or resized to this specific width while maintaining specific height proportions.

- Outliers Analysis

Images with a width greater than 1200 pixels:

- Some images are wider than 1200 pixels, being dispersed and isolated. This may indicate examples of high-resolution or panoramic (width greater than height) images capturing large or built-up scenes.

- These outliers can be a result of collecting data from different sources or devices, which develop into variation in dimensions.

Images with High Height and Restricted Width (Over 800 pixels in height):

- Some images are taller than 800 pixels and have a smaller width (between 200 and 1000 pixels). This can represent vertical images, such as photos of tall objects (buildings, trees) or images that highlight details in perspective

- General Trends in Image Dimensions:

Relationship Between Width and Height:

-

In general, there seems to be an increasing trend in height as width increases. In other words, wider images tend to be taller. This is typical of images in horizontal rectangular format (landscape), where the width tends to be greater than the height.

-

This relationship can be useful for tuning data preprocessing and model training, as you can consider proportional resizing based on this trend.

Actions for Model Training:

Image Standardization:

-

To ensure the consistency of the model, it may be interesting to pre-process the images so that they all have fixed dimensions, or at least a similar resolution range. This helps ensure that images do not introduce distortions during training.

-

Considering that most images are between 0-1200 pixels wide and 0-600 pixels tall, resizing will be necessary to ensure a standard. For example, resizing to 800x600 or 1200x800 pixels.

-

Data Augmentation Techniques:

-

As image resolution varies, data augmentation techniques such as cropping, rotation or scaling can be used to generate more varied examples and reduce overfitting.

Dataset Transformation and Preparation Strategy

- Based on the characteristics identified in the exploratory analysis, a custom transformation pipeline will be developed to optimize model training. This pipeline will have the following main objectives:

- Increase and diversify the samples available in the dataset:

- Apply data augmentation techniques to expand the variability of the dataset, making the model more robust to different conditions (such as lighting, orientation and noise) but in this case a restriction will be applied that only images that have notations of bbox will be replicated, the others will only be normalized.

- Normalize image dimensions:

- Standardize dimensions to 640x640 pixels (dimension selected based on image distribution analysis), ensuring compatibility with the YOLO model and optimizing memory usage during training.

Given that we are using the YOLO model via the Ultralytics library, we know that directly changing the framework's internal dataset and dataloader functions can be complex and prone to errors. To overcome this limitation, we decided to use a custom dataset and custom dataloader, which will include specific transformations before training. This approach allows us greater control over the applied transformations and facilitates integration with the model.

def create_dataloader_training(root_dir): """ Function to create the DataLoader for the training dataset with transformations. Args: root_dir (str): Path to the folder containing the training data. batch_size (int): Batch size for the DataLoader. num_workers (int): Number of workers for the DataLoader. Returns: train_dataset (Dataset): training dataset. """ # Transformations for the training dataset with bounding boxes adjustment transform_train = A.Compose([ A.Resize(640, 640), A.RandomBrightnessContrast(brightness_limit=0.2, contrast_limit=0.2, p=0.5), A.HorizontalFlip(p=0.5), A.RandomRotate90(p=0.5), A.HueSaturationValue(hue_shift_limit=20, sat_shift_limit=30, val_shift_limit=20, p=0.5), A.ToGray(p=0.3), A.GaussianBlur(blur_limit=(5, 9), sigma_limit=(0.1, 5), p=0.3), A.ISONoise(color_shift=(0.01, 0.05), intensity=(0.1, 0.5), p=0.5), ], bbox_params=A.BboxParams(format='pascal_voc', label_fields=['labels'])) # Creating the training dataset train_dataset = CustomDataset(root_dir=root_dir, dataset_type='train', transform=transform_train) return train_dataset

def create_dataloader_test(root_dir): """ Function to create the DataLoader for the test dataset with transformations. Args: root_dir (str): Path to the folder containing the test data. batch_size (int): Batch size for the DataLoader. num_workers (int): Number of workers for the DataLoader. Returns: test_dataset (Dataset): test dataset. """ # Transformation for the test dataset transform_test = A.Compose([A.Resize(640, 640)], bbox_params=A.BboxParams(format='pascal_voc', label_fields=['labels'])) # Creating the test dataset test_dataset = CustomDataset(root_dir=root_dir, dataset_type='test', transform=transform_test) return test_dataset

To save the transformations carried out by our dataloader, the save_transformed_dataset function will be created with the purpose of saving the transformed images and their labels in YOLO format in an organized directory structure meeting the following requirements:

-

Facilitate Training and Integration with the Framework: The

Ultralyticslibrary requires that datasets be in YOLO format, with images stored in a specific folder and labels in another. This function automates the conversion and storage process, ensuring compatibility with the framework. -

Preservation of Applied Transformations: During training, dynamically applied transformations can make data validation and analysis difficult. By saving the transformed images and their labels, you can visually inspect the changes made and better understand the impact of the transformations.

-

Increased Efficiency: Applying transformations "on-the-fly" during training can be computationally expensive, especially for large datasets. Saving the transformed images and labels allows the model to directly read the ready data, reducing training time.

# Function to save transformed images and labels cumulatively def save_transformed_dataset(dataset, output_dir, dataset_type=None): """ Saves transformed images and their labels in a new folder. For the training set, it only saves the images with bounding boxes. For the test set, it saves all images. """ # Create output directories for images and labels images_dir = os.path.join(output_dir, 'images') labels_dir = os.path.join(output_dir, 'labels') # Clear and recreate output directories if they do not exist os.makedirs(images_dir, exist_ok=True) os.makedirs(labels_dir, exist_ok=True) # Maintain file counter to ensure unique names start_idx = len(os.listdir(images_dir)) # To ensure that the index starts from the next available number for idx in range(len(dataset)): # Get image and labels from the dataset image, target = dataset[idx] # For the training dataset, only save if there are bounding boxes if dataset_type == 'train': if len(target['boxes']) > 0: # Only saves if there are bounding boxes # Save transformed image image_np = image # It is already a NumPy array image_path = os.path.join(images_dir, f"{start_idx + idx:05d}.jpg") cv2.imwrite(image_path, cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR)) # Save transformed labels in YOLO format label_path = os.path.join(labels_dir, f"{start_idx + idx:05d}.txt") with open(label_path, 'w') as f: for box, label in zip(target['boxes'], target['labels']): xmin, ymin, xmax, ymax = box x_center = (xmin + xmax) / 2.0 / image_np.shape[1] y_center = (ymin + ymax) / 2.0 / image_np.shape[0] width = (xmax - xmin) / image_np.shape[1] height = (ymax - ymin) / image_np.shape[0] f.write(f"{label} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}\n") elif dataset_type == 'test': # For the test dataset, save all images regardless of bounding boxes image_np = image # It is already a NumPy array image_path = os.path.join(images_dir, f"{start_idx + idx:05d}.jpg") cv2.imwrite(image_path, cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR)) # Save transformed labels in YOLO format (even if there are no boxes) label_path = os.path.join(labels_dir, f"{start_idx + idx:05d}.txt") with open(label_path, 'w') as f: if len(target['boxes']) > 0: for box, label in zip(target['boxes'], target['labels']): xmin, ymin, xmax, ymax = box x_center = (xmin + xmax) / 2.0 / image_np.shape[1] y_center = (ymin + ymax) / 2.0 / image_np.shape[0] width = (xmax - xmin) / image_np.shape[1] height = (ymax - ymin) / image_np.shape[0] f.write(f"{label} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}\n") else: # For images without bounding boxes in the test set, we can save an empty file or omit f.write("") print(f"Transformed images and labels saved in: {output_dir}")

# Using the functions: for element in range(0, 3): root_dir =os.getenv("DATASET_PATH") train_dataset = create_dataloader_training(root_dir) save_transformed_dataset(train_dataset, output_dir=os.getenv("NEW_DATASET_TRAIN_PATH"), dataset_type='train')

Transformed images and labels saved in: C:\Users\eduar\Documents\florest_fire_env\D-Fire\train_transformed

Transformed images and labels saved in: C:\Users\eduar\Documents\florest_fire_env\D-Fire\train_transformed

Transformed images and labels saved in: C:\Users\eduar\Documents\florest_fire_env\D-Fire\train_transformed

# Using this function: root_dir = os.getenv("DATASET_PATH") test_dataset = create_dataloader_test(root_dir) save_transformed_dataset(test_dataset, output_dir=os.getenv("NEW_DATASET_TEST_PATH"),dataset_type='test')

Transformed images and labels saved in: C:\Users\eduar\Documents\florest_fire_env\D-Fire\test_transformed

To finish preparing the new dataset, we will use the copy_files function to move the files from the original folder to the new folder structure that will be consumed by the model during training. This function ensures that data is organized and ready to be used, facilitating the workflow and avoiding possible file location errors.

def copy_files(source_folder, destination_folder): """ Copies files from a source folder to a destination folder. Args: source_folder (str): Path of the source folder. destination_folder (str): Path of destination folder. """ # Checks if the destination folder exists, otherwise creates it if not os.path.exists(destination_folder): os.makedirs(destination_folder) # List all files in the source folder for file in os.listdir(source_folder): source_path = os.path.join(source_folder, file) # Check if it is a file (not a folder) if os.path.isfile(source_folder): destination_path = os.path.join(source_folder, file) # Copy the file shutil.copy2(source_path, destination_path) print(f"File {file} copied to {source_folder}")

# Instantiating the copy functions: folder_source_images= os.getenv("DATASET_PATH") folder_destination_images = os.getenv("DESTINATION_IMAGES_FOLDER") copy_files(folder_source_images, folder_destination_images) source_folder_labels = os.getenv("SOURCE_FOLDER_LABELS") destination_folder_labels = os.getenv("DESTINATION_FOLDER_LABELS") copy_files(source_folder_labels , destination_folder_labels)

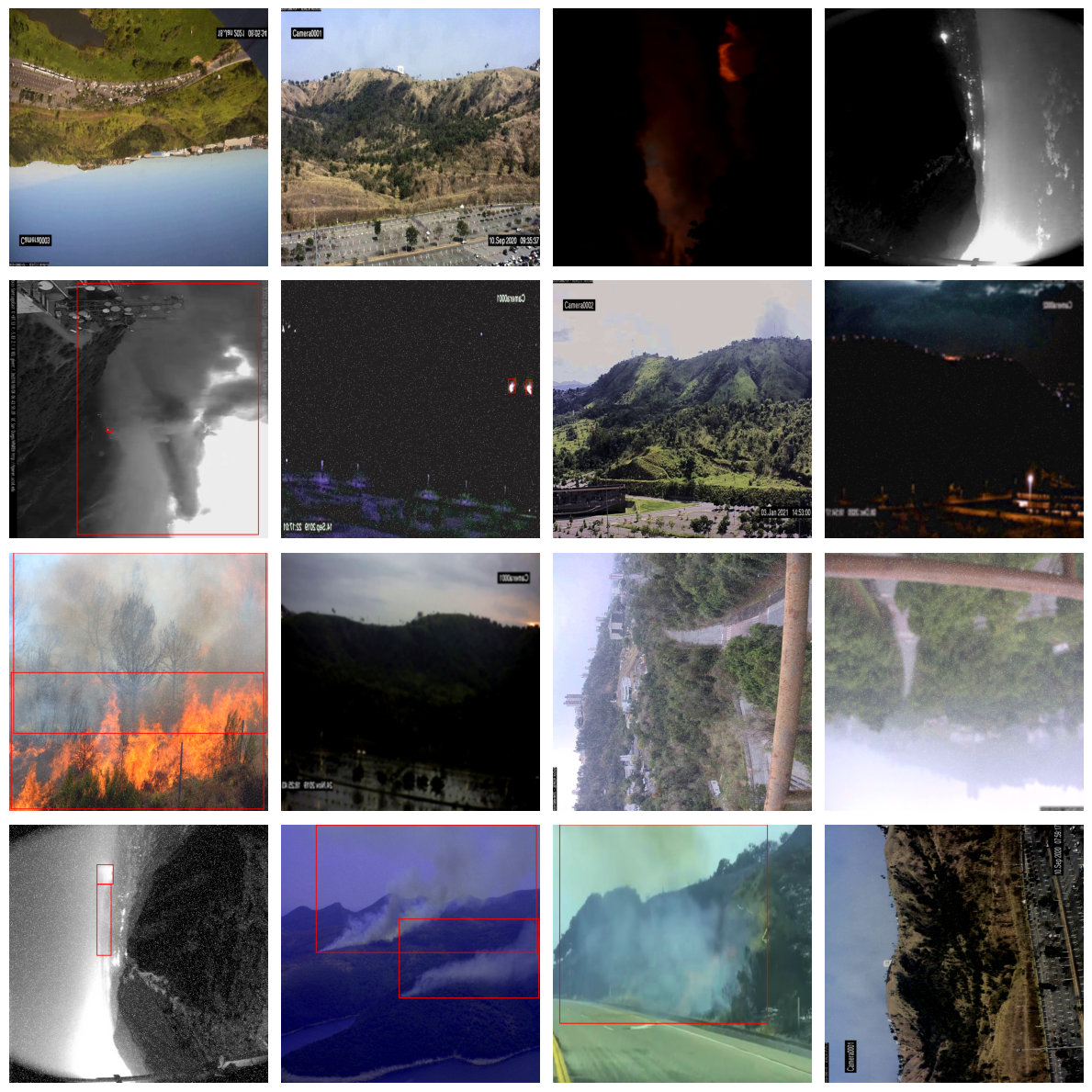

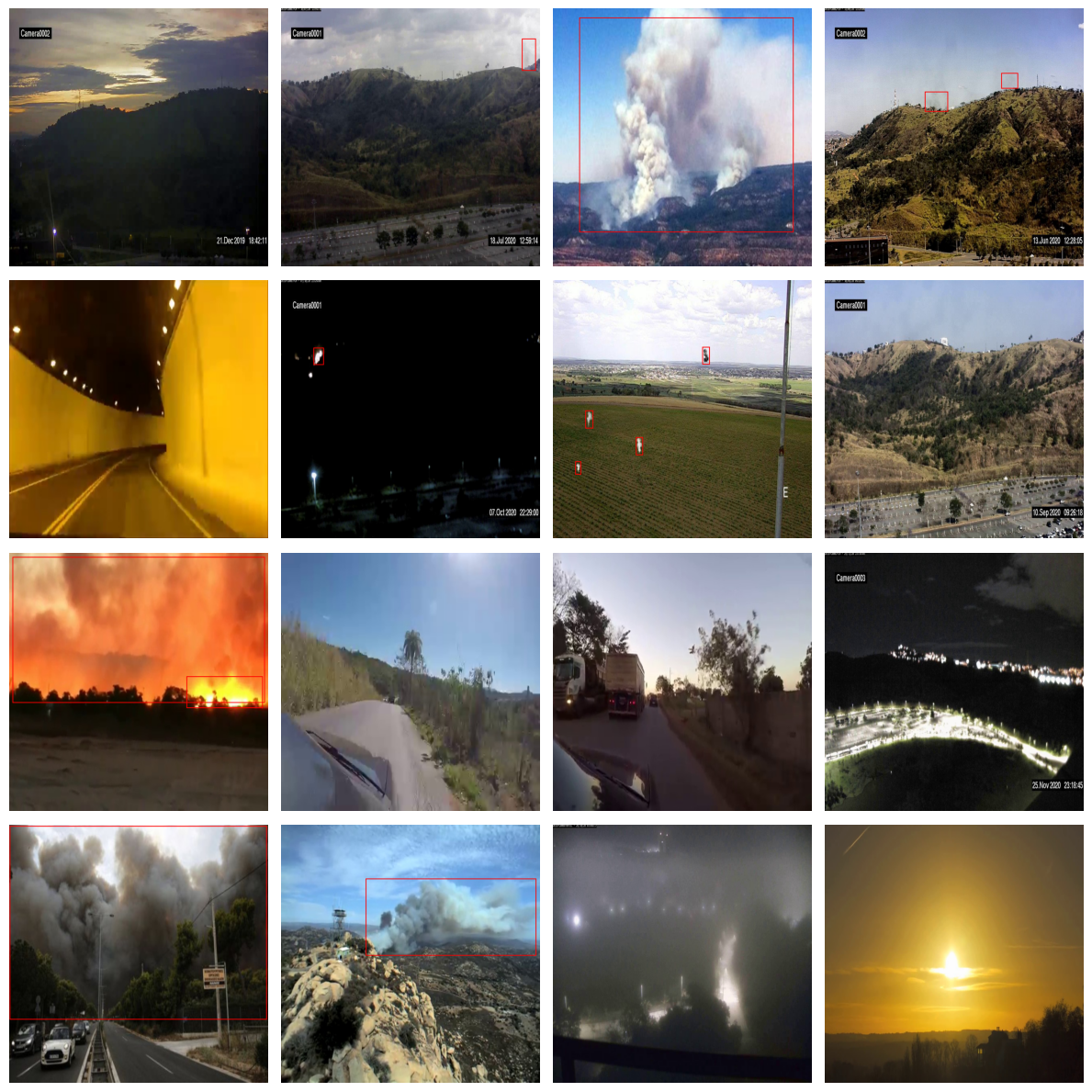

Finally, to ensure that the transformations were correctly applied to the dataset, we will extract a sample of the transformed images for visualization. This step is essential to validate whether the modifications, such as resizing, color adjustments and application of bounding boxes, were carried out as expected. To do this, we will use the show_transformed_images function, which displays the transformed images together with their drawn bounding boxes.

# Function to display multiple images after transformations with bounding boxes def show_transformed_images(dataset, num_images=16): # Initialize the figure with 4x4 subplots (or other desired arrangement) fig, axes = plt.subplots(4, 4, figsize=(12, 12)) # 4x4 to display 16 images axes = axes.flatten() # Flattens the axis array for easier access for i in range(num_images): # Select a random index idx = random.randint(0, len(dataset) - 1) # Get the image and target image, target = dataset[idx] # Convert the tensor to a PIXEL image for display image = F.to_pil_image(image) # Draw the bounding boxes on the image draw = ImageDraw.Draw(image) for box in target['boxes']: draw.rectangle(((box[0], box[1]), (box[2], box[3])), outline="red", width=2) # Display the image in the corresponding subplot axes[i].imshow(image) axes[i].axis('off') # Remove axes for clean viewing plt.tight_layout() # Adjust the layout so as not to overlap the images plt.show()

# For the training dataset train_new_dataset = CustomDataset(root_dir=os.getenv("NEW_DATASET_TRAIN_PATH"), dataset_type='train_new') train_new_loader = DataLoader(train_new_dataset, batch_size=16, shuffle=True, num_workers=0, collate_fn=collate_fn) test_new_dataset = CustomDataset(root_dir=os.getenv("NEW_DATASET_TEST_PATH"), dataset_type='test_new') test_new_loader = DataLoader(test_new_dataset, batch_size=16, shuffle=False, num_workers=0, collate_fn=collate_fn)

# Call the function to show 16 images show_transformed_images(train_dataset)

# Call the function to show 16 images show_transformed_images(test_dataset)

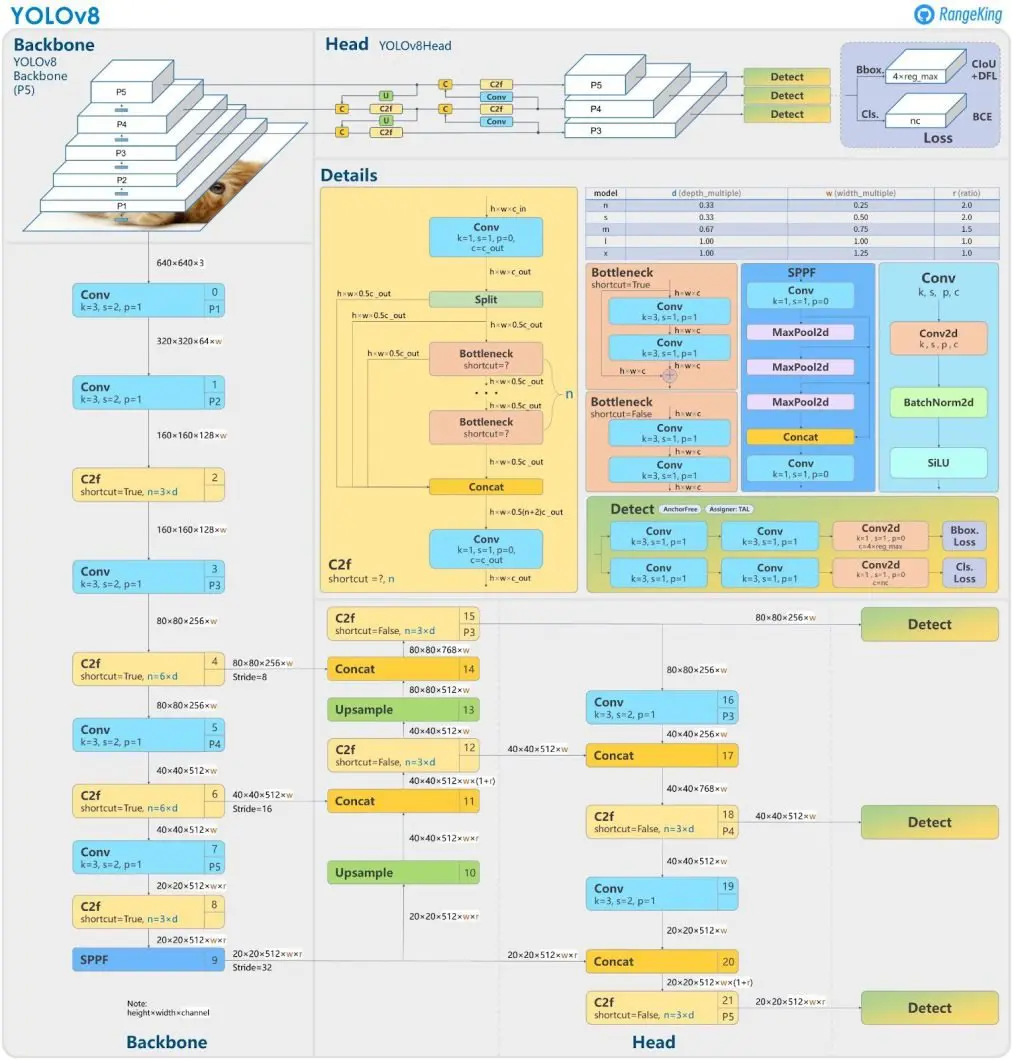

Model

The model chosen for this task was YOLOv8, using the Ultralytics framework. For this project, we chose to use the YOLOv8 Small model, which offers an ideal balance between performance and computational efficiency, being suitable for tasks that require speed and good accuracy, even on devices with limited resources.

Key features of YOLOv8 Small include:

-

Optimized layers and architecture: It is based on a lightweight and efficient structure, with fewer parameters compared to larger models, but maintaining the ability to identify objects with good accuracy.

-

Backbone: Uses a deep convolutional network for feature extraction, tuned to detect objects of different scales and sizes.

-

Neck: Includes components such as PANet (Path Aggregation Network), which improve the fusion of features extracted at different layers of the network, allowing better detection of small objects.

-

Head: Responsible for predicting bounding boxes, classes and confidence scores, implementing anchor or free anchor techniques, depending on the configuration.

To better illustrate how this architecture works, see the following image:

According to the model documentation and article, the main characteristics of this architecture are:

Mosaic Data Augmentation

- Similar to YOLOv4, YOLOv8 employs mosaic data augmentation, which combines four images into one during training. This technique provides the model with richer contextual information, improving its ability to generalize. However, YOLOv8 stops applying mosaic augmentation during the final ten training epochs to enhance performance on the validation set, ensuring the model fine-tunes to real-world data distribution.

Anchor-Free Detection

-

YOLOv8 transitions to anchor-free detection, a significant shift that enhances generalization and simplifies the training process.

-

Limitations of anchor-based detection: Predefined anchor boxes can hinder learning, especially for custom datasets with unique object scales.

-

Anchor-free advantages: The model directly predicts the center points of objects, reducing the number of bounding box predictions and accelerating processes like Non-Maximum Suppression (NMS), which eliminates overlapping bounding boxes.

C2f Module

-

The backbone architecture of YOLOv8 incorporates the C2f module instead of the C3 module used in earlier versions.

-

Key difference: In the C2f module, outputs from all bottleneck modules are concatenated, unlike the C3 module, which uses only the final bottleneck output.

-

Impact: This improves gradient flow and accelerates training by enhancing the network’s ability to learn intricate patterns efficiently.

-

Bottleneck modules: These modules use residual blocks to reduce computational costs in deep learning models without sacrificing performance.

Decoupled Head

-

YOLOv8 introduces a decoupled head that separates classification and regression tasks, as illustrated in the architecture diagram. Why decouple? Performing these tasks independently improves accuracy by allowing the model to optimize classification and bounding box regression separately.

-

Result: This design choice leads to better overall performance in object detection tasks.

Modified Loss Function

-

To handle potential misalignment caused by separating classification and regression, YOLOv8 incorporates a task alignment score. This score helps the model differentiate between positive and negative samples.

-

Task alignment score: Combines the classification score with the Intersection over Union (IoU) score, quantifying how well a predicted bounding box aligns with the ground truth.

Loss computation:

-

Classification loss: Calculated using Binary Cross-Entropy (BCE), which measures the accuracy of predicted labels.

-

Regression loss: Combines Complete IoU (CIoU) and Distributional Focal Loss (DFL).

-

CIoU: Considers center point, aspect ratio, and overlap to improve bounding box predictions.

-

DFL: Focuses on boundary optimization, prioritizing hard-to-classify false negatives.

These enhancements make YOLOv8 a robust and efficient model, offering superior performance in detecting and classifying objects across diverse datasets.

Model Training

In this step, we will train the model and evaluate its performance metrics. We will use the transfer learning technique, in which the first layers of the network will be frozen. This approach aims to preserve the model's ability to identify basic features, such as edges, textures, and shapes, that are common to different types of images. Subsequently, we will train only the final layers of the network, allowing the model to adapt and specialize in the specific characteristics of the dataset used in this task.

# Load a model model = YOLO(model='yolov8s.yaml') # Load custom model PROJECT = 'florest_fire_env' # Project name NAME = 'fire_model' # Run name model.train(data='data.yaml', task='detect', epochs=200, verbose=True, batch=20, imgsz=640, patience=20, save=True, device=0, workers=8, project=PROJECT, name=NAME, cos_lr=True, lr0=0.0001, lrf=0.00001, warmup_epochs=3, warmup_bias_lr=0.000001, optimizer='Adam', seed=42)

New https://pypi.org/project/ultralytics/8.3.38 available

Update with pip install -U ultralytics

Ultralytics 8.3.35 Python-3.11.0 torch-2.5.1+cu124 CUDA

Training Configuration

engine\trainer:

- Task: detect

- Mode: train

- Model: yolov8s.yaml

- Data: data.yaml

- Epochs: 200

- Batch size: 20

- Image size: 640

- Optimizer: Adam

- Patience: 20

- Device: CUDA

- Project: florest_fire_env

- Name: fire_model4

- Learning Rate (lr0): 0.0001

- Lr final (lrf): 1e-05

- Momentum: 0.937

- Weight Decay: 0.0005

- Pretrained: True

- Seed: 42

Model Summary

0 -1 1 928 ultralytics.nn.modules.conv.Conv [3, 32, 3, 2]

1 -1 1 18560 ultralytics.nn.modules.conv.Conv [32, 64, 3, 2]

2 -1 1 29056 ultralytics.nn.modules.block.C2f [64, 64, 1, True]

3 -1 1 73984 ultralytics.nn.modules.conv.Conv [64, 128, 3, 2]

4 -1 2 197632 ultralytics.nn.modules.block.C2f [128, 128, 2, True]

5 -1 1 295424 ultralytics.nn.modules.conv.Conv [128, 256, 3, 2]

6 -1 2 788480 ultralytics.nn.modules.block.C2f [256, 256, 2, True]

7 -1 1 1180672 ultralytics.nn.modules.conv.Conv [256, 512, 3, 2]

8 -1 1 1838080 ultralytics.nn.modules.block.C2f [512, 512, 1, True]

9 -1 1 656896 ultralytics.nn.modules.block.SPPF [512, 512, 5]

10 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

11 [-1, 6] 1 0 ultralytics.nn.modules.conv.Concat [1]

12 -1 1 591360 ultralytics.nn.modules.block.C2f [768, 256, 1]

13 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

14 [-1, 4] 1 0 ultralytics.nn.modules.conv.Concat [1]

15 -1 1 148224 ultralytics.nn.modules.block.C2f [384, 128, 1]

16 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

17 [-1, 12] 1 0 ultralytics.nn.modules.conv.Concat [1]

18 -1 1 493056 ultralytics.nn.modules.block.C2f [384, 256, 1]

19 -1 1 590336 ultralytics.nn.modules.conv.Conv [256, 256, 3, 2]

20 [-1, 9] 1 0 ultralytics.nn.modules.conv.Concat [1]

21 -1 1 1969152 ultralytics.nn.modules.block.C2f [768, 512, 1]

22 [15, 18, 21] 1 2116822 ultralytics.nn.modules.head.Detect [2, [128, 256, 512]

Freezing layer 'model.22.dfl.conv.weight'

AMP: running Automatic Mixed Precision (AMP) checks...

AMP: checks passed

train: Scanning C:\Users\eduar\Documents\florest_fire_env\D-Fire\train_transformed\labels.cache... 40114 images, 7833 backgrounds, 0 corrupt: 100%|██████████| 40114/40114 [00

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01, num_output_channels=3, method='weighted_average'), CLAHE(p=0.01, clip_limit=(1.0, 4.0), tile_grid_size=(8, 8))

val: Scanning C:\Users\eduar\Documents\florest_fire_env\D-Fire\test_transformed\labels.cache... 3876 images, 1809 backgrounds, 0 corrupt: 100%|██████████| 3876/3876 [00<?, ?it/s]

Plotting labels to florest_fire_env\fire_model4\labels.jpg...

optimizer: Adam(lr=0.0001, momentum=0.937) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.00046875), 63 bias(decay=0.0)

Image sizes 640 train, 640 val

Using 8 dataloader workers

Logging results to florest_fire_env\fire_model4

Starting training for 200 epochs...

Training Summary

- Epochs Completed: 130

- Training Duration: 15.151 hours

- Optimizer Stripped Files:

florest_fire_env\fire_model4\weights\last.pt(22.5MB)florest_fire_env\fire_model4\weights\best.pt(22.5MB)

Validation of Best Model

- Weights Validated:

florest_fire_env\fire_model4\weights\best.pt - Warning: Validating an untrained model YAML will result in 0 mAP.

- Environment:

- Ultralytics: 8.3.35

- Python: 3.11.0

- Torch: 2.5.1+cu124

- CUDA: NVIDIA GeForce RTX 4060 Ti (8188MiB)

YOLOv8s Model Summary (Fused)

- Layers: 168

- Parameters: 11,126,358

- Gradients: 0

- GFLOPs: 28.4

Validation Results

| Class | Images | Instances | Box(P) | R | mAP50 | mAP50-95 |

|---|---|---|---|---|---|---|

| all | 3876 | 4645 | 0.756 | 0.71 | 0.761 | 0.439 |

| smoke | 1873 | 2079 | 0.784 | 0.792 | 0.821 | 0.508 |

| fire | 996 | 2566 | 0.729 | 0.627 | 0.702 | 0.370 |

Performance Metrics in the YOLOv8 Model:

The YOLOv8 model delivered the following performance results based on key metrics:

Box Precision (P):

- Overall Value: 0.758

The accuracy of the bounding boxes (Box Precision) indicates that the model performs well in correctly predicting the locations of objects approximately 76% of the time. Although this value is considered good (above 0.7), there is still room for optimization.

Recall (R):

- Overall Value: 0.71

The overall recall of 71% means that the model is identifying the majority of objects present in the images, but is still missing around 29% of the objects (false negatives). In particular, the recall for the fire class was 0.628, indicating a greater difficulty in detecting fires compared to smoke.

mAP50 (Mean Average Precision @ IoU 0.5):

- General Value: 0.762

This value reflects good accuracy for bounding boxes that have an Intersection over Union (IoU) of 50% or more with the true boxes. This demonstrates that the model is reliable for scenarios with moderate overlap.

mAP50-95 (Mean Average Precision @ IoU [0.5

.95]):- Overall Value: 0.439

This value measures performance over a range of IoU (from 0.5 to 0.95), which provides a more comprehensive view of the model's ability to detect objects at different levels of accuracy. A value of 0.439 is reasonable, but indicates that the model still faces difficulties in scenarios with greater precision requirements.

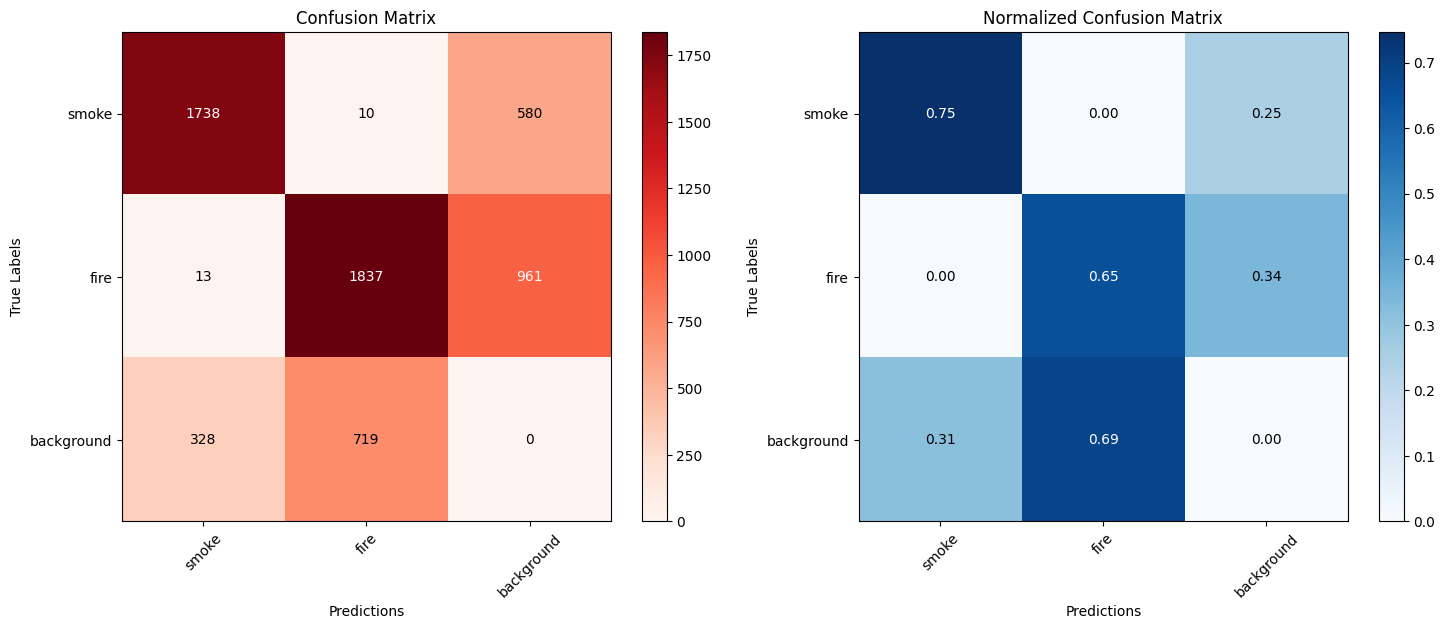

Evaluate the model's classification performance by class, generating the confusion matrix to visualize the model's successes and errors in each class.

# Load the model model = YOLO(r'C:\Users\eduar\Documents\florest_fire_env\florest_fire_env\fire_model4\weights\best.pt') # Use the trained weights file # Perform validation with the default dataset (defined in the YAML used in training) metrics = model.val() # Perform validation with a new set of data metrics = model.val(data='data.yaml') conf_matrix = metrics.confusion_matrix # Get the confusion matrix conf_matrix_values = conf_matrix.matrix # It is already a NumPy array # Normalizing the confusion matrix (dividing each row by the sum of the rows) conf_matrix_normalized = conf_matrix_values.astype('float') / conf_matrix_values.sum(axis=1)[:, np.newaxis] # Class names class_names = ["smoke", "fire", "background"] # Plotting matrices side by side fig, axes = plt.subplots(1, 2, figsize=(15, 6)) # Plotting the original confusion matrix cax1 = axes[0].imshow(conf_matrix_values, interpolation='nearest', cmap=plt.cm.Reds) axes[0].set_title('Confusion Matrix') axes[0].set_xlabel('Predictions') axes[0].set_ylabel('True Labels') axes[0].set_xticks(np.arange(len(class_names))) axes[0].set_yticks(np.arange(len(class_names))) axes[0].set_xticklabels(class_names, rotation=45) axes[0].set_yticklabels(class_names) # Adding values to the cells of the original matrix thresh = conf_matrix_values.max() / 2.0 for i, j in np.ndindex(conf_matrix_values.shape): axes[0].text(j, i, format(conf_matrix_values[i, j], '.0f'), ha="center", va="center", color="white" if conf_matrix_values[i, j] > thresh else "black") # Add the color bar to the original matrix fig.colorbar(cax1, ax=axes[0]) # Plotting the normalized confusion matrix cax2 = axes[1].imshow(conf_matrix_normalized, interpolation='nearest', cmap=plt.cm.Blues) axes[1].set_title('Normalized Confusion Matrix') axes[1].set_xlabel('Predictions') axes[1].set_ylabel('True Labels') axes[1].set_xticks(np.arange(len(class_names))) axes[1].set_yticks(np.arange(len(class_names))) axes[1].set_xticklabels(class_names, rotation=45) axes[1].set_yticklabels(class_names) # Adding values to cells of the normalized matrix thresh_norm = conf_matrix_normalized.max() / 2.0 for i, j in np.ndindex(conf_matrix_normalized.shape): axes[1].text(j, i, format(conf_matrix_normalized[i, j], '.2f'), ha="center", va="center", color="white" if conf_matrix_normalized[i, j] > thresh_norm else "black") # Add the color bar to the normalized matrix fig.colorbar(cax2, ax=axes[1]) # Display the graph plt.tight_layout() plt.show()

The graphs above show the confusion matrix (left) and normalized confusion matrix (right) for a classification model. Let's analyze the results and discuss the main points:

- General Analysis of the Confusion Matrix:

-

Class

smoke:- Most examples of this class were correctly classified (1,738 correct predictions).

- Only 10 examples were incorrectly classified as

fire. - There were a significant number of confusions with the

backgroundclass (580 incorrect predictions).

-

Class

fire:- The model performed well in this class, with 1,837 correct classifications.

- Few mistakes were made, such as 13 confusions with

smoke. - However, 961 examples were confused with "background".

-

Class

background:

Relatively poor performance in this class, with only 719 correct classifications. There were 328 confusions with smoke and 719 confusions with fire. Indicating that the model signals signs of fire where there are none.

- Normalized Matrix Insights:

- Normalization reveals the proportional performance of the model by class:

smokeclass: High hit rate (75%), but still with 25% of confusions withbackground.fireclass: Moderate hits (65%), but a significant number of errors withbackground(34%).backgroundclass: Most problematic result: only 69% correct, with a high rate of confusion withsmoke(31%).

- Conclusion:

Based on these results:

-

The current performance of the model may be insufficient for practical use in fire monitoring, where it is crucial:

-

Minimize false negatives in

smokeandfire. -

Reduce background false positives to avoid unnecessary alarms.

-

If the goal is a prototype or early stage of development, the model is a good starting point, but still needs improvements.

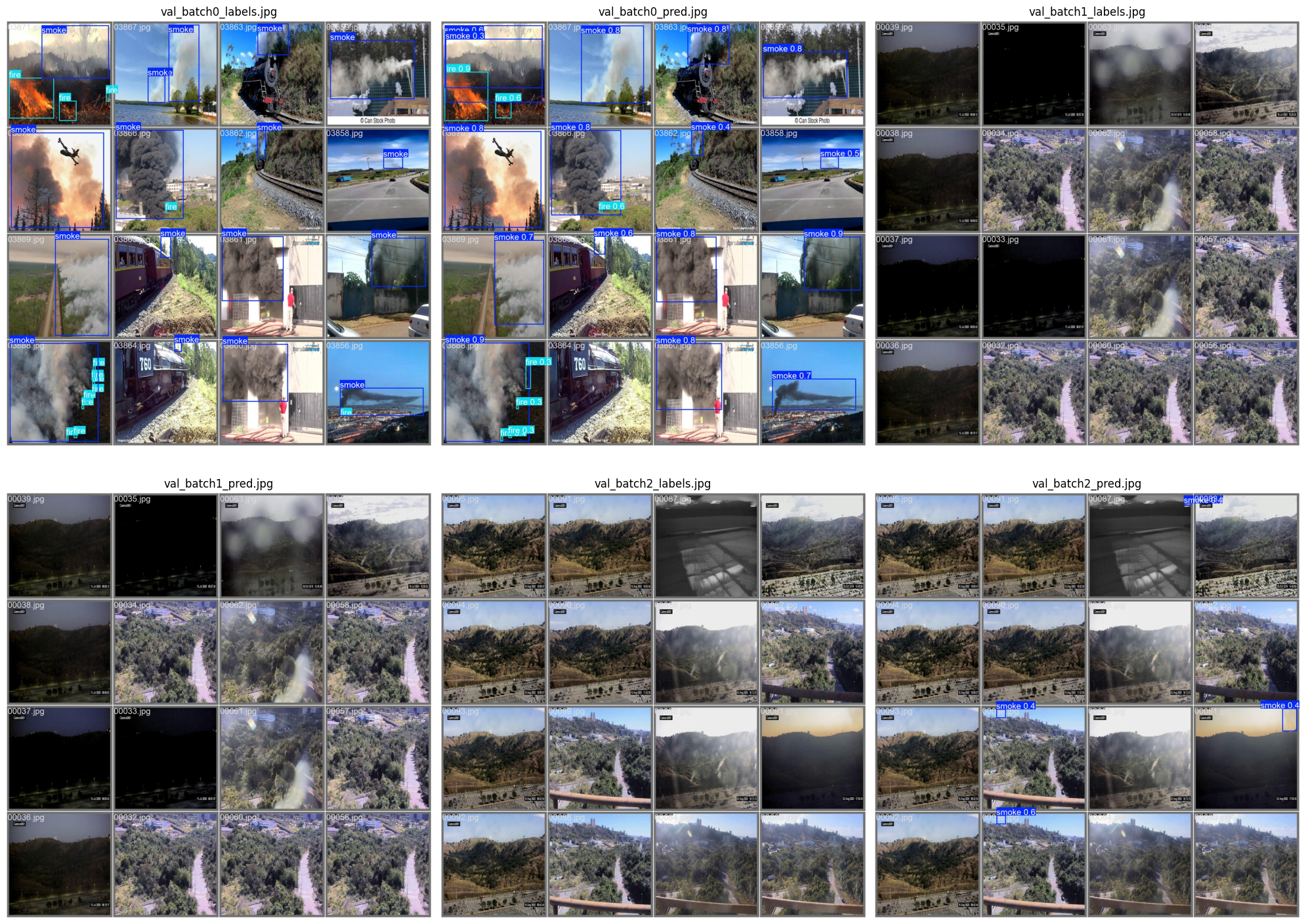

Showing some model prediction images during the validation phase:

# Function to display images side by side def plot_images_side_by_side(image_paths): # Upload images images = [Image.open(img_path) for img_path in image_paths] # Create a figure with 2 rows and 3 columns (for each pair of images) fig, axes = plt.subplots(2, 3, figsize=(20, 15)) # Increase size as needed # Adjust so that axis is a list of axes (flatten) axes = axes.flatten() # Plot each pair of images for i, (ax, img, path) in enumerate(zip(axes, images, image_paths)): ax.imshow(img) ax.axis('off') # Disable axes for a cleaner view ax.set_title(path.split("\\")[-1]) # Set title to image name plt.tight_layout() plt.show() # Image paths image_paths = [ r"C:\Users\eduar\Documents\florest_fire_env\val_batch0_labels.jpg", r"C:\Users\eduar\Documents\florest_fire_env\val_batch0_pred.jpg", r"C:\Users\eduar\Documents\florest_fire_env\val_batch1_labels.jpg", r"C:\Users\eduar\Documents\florest_fire_env\val_batch1_pred.jpg", r"C:\Users\eduar\Documents\florest_fire_env\val_batch2_labels.jpg", r"C:\Users\eduar\Documents\florest_fire_env\val_batch2_pred.jpg" ] # Call the function to display the images side by side plot_images_side_by_side(image_paths)

# Load the dataset data_results = pd.read_csv(r"C:\Users\eduar\Documents\florest_fire_env\results.csv") # Function to generate the graph with subplots def plot_training_metrics(data_results): # Setting the number of subplots (4x2) to have more space for individual variables num_rows = 4 num_cols = 2 fig, axes = plt.subplots(num_rows, num_cols, figsize=(12, 8)) # Ensure that axes is a 4x2 matrix axes = axes.flatten() # Plotting 'train/box_loss' and 'val/box_loss' on the same graph ax = axes[0] ax.plot(data_results['epoch'], data_results['train/box_loss'], label='train/box_loss') ax.plot(data_results['epoch'], data_results['val/box_loss'], label='val/box_loss') ax.set_xlabel('Epochs') ax.set_ylabel('Loss') ax.set_title('Box Loss vs Epochs') ax.legend() # Plotting 'train/dfl_loss' and 'val/dfl_loss' on the same graph ax = axes[1] ax.plot(data_results['epoch'], data_results['train/dfl_loss'], label='train/dfl_loss') ax.plot(data_results['epoch'], data_results['val/dfl_loss'], label='val/dfl_loss') ax.set_xlabel('Epochs') ax.set_ylabel('Loss') ax.set_title('DFL Loss vs Epochs') ax.legend() # Plotting 'train/cls_loss' and 'val/cls_loss' on the same graph ax = axes[2] ax.plot(data_results['epoch'], data_results['train/cls_loss'], label='train/cls_loss') ax.plot(data_results['epoch'], data_results['val/cls_loss'], label='val/cls_loss') ax.set_xlabel('Epochs') ax.set_ylabel('Loss') ax.set_title('Classification Loss vs Epochs') ax.legend() # List of other columns to plot individually columns_individual = [ 'metrics/precision(B)', 'metrics/recall(B)', 'metrics/mAP50(B)', 'metrics/mAP50-95(B)' ] # Plotting the remaining variables (individually) for i, col in enumerate(columns_individual): ax = axes[i + 3] # Starting from the 4th graph ax.plot(data_results['epoch'], data_results[col], label=col) ax.set_xlabel('Epochs') ax.set_ylabel(col) ax.set_title(f'{col} vs Epochs') ax.legend() # Adjust the layout so that the graphics do not overlap plt.tight_layout() plt.show()

# Call the function to generate the graphs plot_training_metrics(data_results)

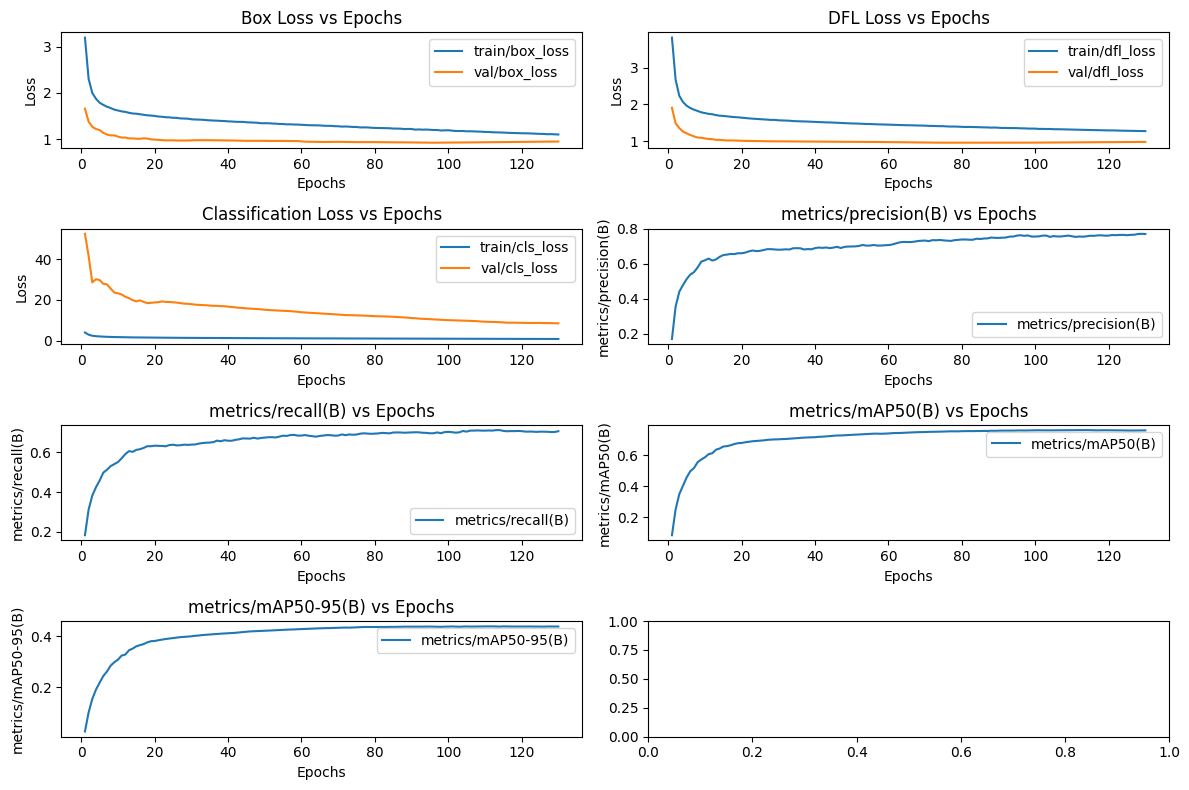

Based on the graph presented, we can discuss the performance of the model considering the different metrics and losses presented:

- Box Loss vs Epochs

-

Description: Measures the error related to the predictions of the bounding boxes.

-

Note: For both the training and validation sets, the error decreases consistently, stabilizing after about 100 epochs. The difference between the training and validation curves is small, indicating good fit and low risk of overfitting.

- DFL Loss vs Epochs

-

Description: Refers to "Distribution Focal Loss", which evaluates the accuracy of the distribution of bounding box predictions.

-

Note: Similar to Box Loss, the training and validation curve decreases consistently and stabilizes, suggesting that the model demonstrated efficient learning.

- Classification Loss vs Epochs

-

Description: Measures the error in classifying categories (background, smoke, fire).

-

Note: The training curve is very low from the beginning, while validation starts high and gradually decreases. This initial discrepancy may indicate differences in the validation set or a possible adjustment of the model throughout training. The stabilized behavior after 100 epochs is a positive point.

- Precision (B) vs Epochs

-

Description: Measures the proportion of correct predictions in relation to the total number of predictions made by the model.

-

Note: Accuracy increases significantly in the first 20 epochs and stabilizes at a value close to 0.75 (75% accuracy).

-

Consideration: May be sufficient for applications where false positives are tolerable. However, in critical systems, this value can be improved by tweaking the data or hyperparameters.

- Recall (B) vs Epochs

- Description: Measures the proportion of correct predictions in relation to the total number of actual occurrences in the data set.

- Note: The recall stabilizes around 0.60 (60%), which can be worrying in cases where detection is a priority.

- Consideration: Low recall means false negatives, that is, the model is failing to identify cases of smoke or fire. It is a critical point that can be adjusted by: Adding more examples of

smokeandfire. Balance the weight of classes in training.

- mAP50(B) vs Epochs

- Description: Mean Average Precision (IoU ≥ 50%), a metric to evaluate the average precision considering different classes.

- Note: The metric stabilizes around 0.65 (65%), indicating reasonable performance for detection.

- Consideration: Although the value is not bad, it may be insufficient in highly demanding applications such as fire monitoring.

- mAP50-95(B) vs Epochs

- Description: Average accuracy at different IoU thresholds (50% to 95%).

- Note: Stabilizes around 0.50 (50%), a relatively low value for a robust detection model.

- Consideration: Indicates that the model may not be generalizing well to different sizes or positions of the bounding boxes.

General Points:

Overfitting:

- Training and validation losses are consistent, suggesting low overfitting.

- However, the difference in validation metrics (recall, mAP) shows room for improvement.

Overall Performance:

- Precision is good (75%), but relatively low recall (60%) is concerning for critical applications.

Suggested actions:

-

Improve smoke and fire detection with:

-

Balancing the dataset (e.g. increasing examples of minority classes). Adjustments to the detection threshold to prioritize recall (if false positives are acceptable).

-

Future iterations: Evaluate model error cases (false positives and negatives) to identify recurring patterns and adjust the pipeline.

Predictions

Finally, some YouTube videos will be used to make predictions with the model and illustrate its performance in a detection task.

# Load the trained model model = YOLO(r'C:\Users\eduar\Documents\florest_fire_env\florest_fire_env\fire_model4\weights\best.pt') # Make prediction on a video results = model.predict( source=r"C:\Users\eduar\Documents\florest_fire_env\Videos\Anatomy of a burnover_ RoundMtn Paskenta camera enveloped in flames....mp4", # Substitua pelo caminho do vídeo save=True, save_txt=True, project="runs/videos", # Nome do projeto name="fire_predictions_5", conf=0.48, iou=0.5, device=0 )

Predictions Results:

Video link showcasing the prediction results posted on LinkedIn

References

-

Borges de Venâncio, P. V. A., Lisboa, A. C., & Barbosa, A. V. (2022). An automatic fire detection system based on deep convolutional neural networks for low-power, resource-constrained devices. Neural Computing and Applications.

-

YOLOv8 Documentation: Overview of YOLOv8 architecture and functionalities.

Available at: https://yolov8.org/yolov8-architecture/