LangGraph is a library for building stateful, multi-actor applications with LLMs, which makes it well suited to both single-agent and multi-agent workflows. Compared with many other LLM frameworks, it offers three key advantages: support for cycles, enhanced controllability, and built-in persistence. By allowing workflows to contain cycles, a critical component of most agentic architectures, LangGraph moves beyond the limitations of DAG-based solutions (frameworks that structure workflows as Directed Acyclic Graphs, where tasks flow in one direction without cycles, often used for data processing and pipeline management). As a low-level framework, it provides granular control over both the flow and the state of an application, which helps make agent systems more reliable. Furthermore, its built-in persistence supports advanced features such as human-in-the-loop interactions and memory retention.

First, ensure you have all the necessary libraries installed in your environment. Use the following commands:

```python
# OpenAI client with pinned versions
!pip install openai==1.55.3 httpx==0.27.2 --force-reinstall --quiet

# LangGraph and supporting libraries
!pip install -q langgraph langsmith langchain_community
```

You need an OpenAI API key to access their services. Use the following code to securely set it as an environment variable:

```python
import getpass
import os


def _set_env(var: str):
    # Prompt for the value only if the variable is not already set
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")


_set_env("OPENAI_API_KEY")
```

LangGraph has built-in state management: a graph is defined over a state schema, and every node reads from and writes to that shared state. Below we create a simple State object for the chatbot.

```python
from typing import Annotated
from typing_extensions import TypedDict
from langgraph.graph import StateGraph, START, END


class State(TypedDict):
    messages: Annotated[list, 'messages']         # List of conversation messages
    response: Annotated[str, 'response']          # Chatbot's response
    temperature: Annotated[float, 'temperature']  # Sampling temperature
    top_p: Annotated[float, 'top_p']              # Top-p sampling
    model_name: Annotated[str, 'model_name']      # Model name for inference
```

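Declaring the state as a TypedDict documents which keys flow through the graph and lets editors and static type checkers flag mismatches. It does not validate values at runtime, and the string inside each Annotated is only a descriptive label.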
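To make the role of the annotations concrete, here is a minimal sketch that uses only the standard library to inspect a cut-down copy of the schema. It assumes Python 3.9 or later (for `typing.Annotated`), and `MiniState` is a hypothetical stand-in rather than part of the pipeline. It shows that the string label is stored as plain metadata and that a `TypedDict` is an ordinary dict at runtime.

```python
from typing import Annotated, TypedDict, get_type_hints


class MiniState(TypedDict):
    # Hypothetical two-field version of the State class
    messages: Annotated[list, "messages"]
    temperature: Annotated[float, "temperature"]


# include_extras=True keeps the Annotated metadata instead of stripping it
hints = get_type_hints(MiniState, include_extras=True)
labels = {name: hint.__metadata__ for name, hint in hints.items()}
print(labels)

# A TypedDict is a plain dict at runtime: a wrong value type is not rejected
state: MiniState = {"messages": [], "temperature": "hot"}
print(type(state).__name__)
```

If runtime validation matters, it has to come from somewhere else, for example explicit checks inside a node.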

Next, define a simple function that sends the API request. When the graph runs, the current State is passed to this function, which reads every parameter it needs from it.

```python
from openai import OpenAI

client = OpenAI()  # Reads OPENAI_API_KEY from the environment


def bot(state: dict):
    response = client.chat.completions.create(
        model=state["model_name"],         # Model name (e.g., GPT-4)
        messages=state["messages"],        # Messages in the chat history
        temperature=state["temperature"],  # Temperature for sampling
        top_p=state["top_p"]               # Top-p value for sampling
    )
    return {"response": response.choices[0].message.content}  # Extract the response
```

This function interacts with OpenAI's chat API and returns the chatbot's response.
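Because a node is an ordinary function from state to a partial state update, it can be exercised without touching the network. The sketch below repeats the `bot` logic but receives the client as a parameter. `FakeCompletions`, `fake_client`, and `make_bot` are hypothetical test helpers that mimic only the attributes `bot` touches; they are not part of the OpenAI SDK.

```python
from types import SimpleNamespace


class FakeCompletions:
    """Hypothetical stand-in for client.chat.completions; makes no network calls."""

    def create(self, model, messages, temperature, top_p):
        # Echo the last message so the result is easy to check
        reply = f"[{model}] echo: {messages[-1]['content']}"
        message = SimpleNamespace(content=reply)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


fake_client = SimpleNamespace(chat=SimpleNamespace(completions=FakeCompletions()))


def make_bot(client):
    """Build a node function bound to the given client (hypothetical helper)."""
    def bot(state: dict):
        response = client.chat.completions.create(
            model=state["model_name"],
            messages=state["messages"],
            temperature=state["temperature"],
            top_p=state["top_p"],
        )
        # Return only the key this node updates
        return {"response": response.choices[0].message.content}
    return bot


update = make_bot(fake_client)({
    "model_name": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "hi"}],
    "temperature": 0.7,
    "top_p": 1.0,
})
print(update)
```

Note that the node returns only the `response` key; the other state keys are left as they were.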

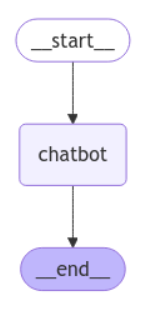

Next, initialize the graph with the State class as its schema, then add a node and the edges that connect it to the start and end of the graph. Conditional edges, which choose the next node at runtime, can be added as well.

```python
graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", bot)      # Add a chatbot node
graph_builder.add_edge(START, "chatbot")    # Connect START to chatbot node
graph_builder.add_edge("chatbot", END)      # Connect chatbot node to END
graph = graph_builder.compile()             # Compile the graph
```

The compiled graph follows a single path: from START to the chatbot node, and from there to END.

Below is a function to start the pipeline by traversing the graph nodes.

```python
def start(user_input: str, system_message: str, temperature=0.7, top_p=1.0, model_name="gpt-4o-mini"):
    state_dict = {}  # Initialize state dictionary
    messages = [
        {"role": "system", "content": system_message},  # System message
        {"role": "user", "content": user_input}         # User input
    ]
    state_dict["messages"] = messages
    state_dict["temperature"] = temperature
    state_dict["top_p"] = top_p
    state_dict["model_name"] = model_name

    # Traverse the graph and display responses
    for event in graph.stream(state_dict):
        for values in event.values():
            print(values['response'])


start("What is LLM", "You are a helpful chatbot")
```

This function initializes the state, streams it through the graph, and prints the chatbot's response. A sample response to the call above:

LLM stands for "Large Language Model." It refers to a type of artificial intelligence model that is trained on vast amounts of text data to understand, generate, and manipulate human language. These models utilize deep learning techniques, particularly neural networks, to learn patterns, context, grammar, and even some level of reasoning from the training data. Examples of large language models include OpenAI's GPT (Generative Pre-trained Transformer) series, Google's BERT (Bidirectional Encoder Representations from Transformers), and others. LLMs can perform a variety of tasks such as text generation, translation, summarization, question-answering, and more, making them useful in applications like chatbots, content creation, and information retrieval

LangGraph also makes it easy to visualize the pipeline:

```python
from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))
```

This snippet renders the workflow graph as a PNG image and displays it inline in the notebook.

LangGraph enables the integration of diverse agents and experts into a cohesive workflow, facilitating the creation of complex, adaptable systems. Each agent is designed to specialize in particular tasks such as natural language understanding, text generation, or data retrieval, working collaboratively within the same workflow. In contrast, experts contribute specialized knowledge or domain-specific capabilities, such as addressing technical queries or providing context-aware insights.

As data flows through the workflow, routing logic defined in the graph (for example, conditional edges) directs it to the most relevant agent or expert, so that each part of the process is handled by the most suitable entity. General tasks are managed by agents, while more specialized tasks are tackled by experts. The result is a scalable and maintainable system that can evolve and adapt to varied inputs and needs, drawing on the strengths of both agents and experts.

LangGraph is a versatile framework that simplifies the creation of dynamic, adaptive workflows by integrating diverse agents, experts, and tools. With features like branching, persistence, human-in-the-loop, streaming, and memory, it enables robust and intelligent systems. Its visualization and seamless integration with tools like LangChain make it a powerful choice for building scalable and efficient applications.