ABSTRACT

With each academic cycle, university enrollment continues to rise, increasing the demand for efficient communication between students and staff. Class advisers, faculty members responsible for overseeing student cohorts, must balance growing administrative responsibilities with their primary roles as lecturers. As these challenges intensify, the need for a streamlined system to facilitate academic and administrative interactions becomes increasingly evident.

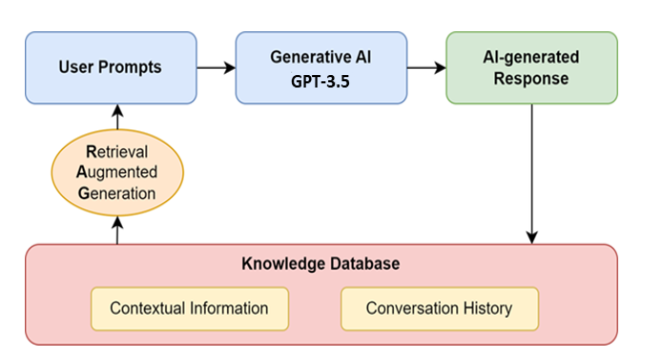

This publication presents EE-Buddy, an AI-powered campus assistant designed to streamline academic and administrative interactions. Leveraging GPT-3.5 Turbo, LangChain, Pinecone, and Chainlit, EE-Buddy integrates Retrieval-Augmented Generation (RAG) to provide real-time, university-specific responses. By addressing student inquiries and reducing adviser workload, EE-Buddy enhances information accessibility, ensuring seamless communication and efficient workflow management within the university.

1. Introduction

Class advisers, who guide students through their academic journey, often struggle to meet the increasing demand because of limited availability, administrative workload, and the sheer number of students under their supervision. As a result, students frequently experience delays in receiving guidance, leading to frustration and inefficient academic decision-making.

To tackle these challenges, EE-Buddy introduces an AI-driven, RAG-based chatbot that delivers context-aware, institution-specific assistance. By combining a pre-trained language model with a structured knowledge base, EE-Buddy provides accurate, real-time responses and shortens the wait for guidance that students face under the traditional advisory process.

2. Background of the Study

In higher education, effective student guidance is crucial for academic success. Traditionally, universities assign class advisers to provide mentorship, monitor academic progress, and assist students in navigating institutional processes. However, the conventional advisory system is increasingly strained by rising student populations and the administrative workload placed on faculty members.

Over the past 15 years, class sizes in most major Nigerian universities have roughly tripled, rising from an average of 60–90 students per class to 200–260 students today. At this scale, one-on-one student-adviser interactions become impractical, leaving students with limited access to academic and administrative guidance. Many students resort to search engines for answers, but the responses they receive are often generic, outdated, or misaligned with university policies.

The primary problem is the availability and accessibility of advisers. Since class advisers also serve as lecturers and administrative staff, their ability to provide on-demand support is severely limited. The increasing workload and growing student population necessitate a scalable, AI-powered solution that can bridge this gap without compromising accuracy or personalization.

This study proposes EE-Buddy, an AI chatbot designed to:

- Leverage RAG to retrieve real-time, university-specific information and generate precise responses.

- Integrate Pinecone, a vector database, to efficiently store and retrieve structured academic data.

- Utilize Chainlit to create a seamless, user-friendly web interface for intuitive interactions.

- Enhance student engagement by providing 24/7 accessibility and automating routine queries.

By combining state-of-the-art AI with a structured knowledge base, EE-Buddy transforms the traditional advisory process into a scalable, efficient, and intelligent system. It ensures that students receive accurate, context-aware guidance instantly, reducing the burden on class advisers while improving the overall university experience.

3. Literature Review of AI Chatbot Architectures

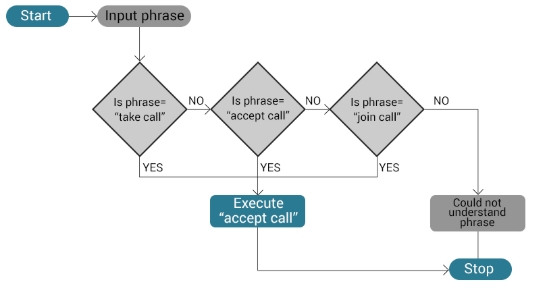

3.1 Rule-Based Systems

Rule-based chatbot systems rely on predefined responses and structured decision trees to interact with users (Shawar & Atwell, 2007). These systems use a set of if-then rules to process inputs and generate appropriate outputs. One of the earliest examples of such a system is ELIZA, developed in 1966 by Joseph Weizenbaum. ELIZA worked by matching user input against patterns in predefined scripts; its best-known script simulated a Rogerian psychotherapist (Weizenbaum, 1966).

Figure 1. Rule-based chatbot systems

These early chatbot architectures, while innovative, had significant limitations. Because rule-based systems depend entirely on handcrafted rules, they struggle with complex or unexpected user queries (Colby, 1975). Their responses also lack contextual understanding, often leading to repetitive or incoherent interactions.

Despite these drawbacks, rule-based chatbots are still in use today, particularly in customer service applications where interactions follow predictable patterns (Jain et al., 2018). Modern implementations often integrate rule-based logic with machine learning components to improve adaptability and user engagement.
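To make the if-then structure concrete, the following is a minimal ELIZA-style sketch in Python. The rules, patterns, and the `respond` function are hypothetical illustrations, not code from ELIZA or from EE-Buddy: each rule pairs a regular expression with a response template, and the first matching rule wins.

```python
import re

# Hypothetical rule table: (pattern, response template) pairs checked in order.
# This mimics the if-then structure of rule-based bots such as ELIZA.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. How can I help you today?"),
]

FALLBACK = "Please tell me more."


def respond(user_input: str) -> str:
    """Return the response of the first rule whose pattern matches the input."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Fill the template with the captured text (unused groups are ignored).
            return template.format(*match.groups())
    # No rule matched: a rule-based system can only fall back to a canned reply.
    return FALLBACK


if __name__ == "__main__":
    print(respond("I need help with course registration."))
    print(respond("What is the meaning of life?"))
```

Any input outside the rule table falls through to the canned fallback, which is the brittleness with unexpected queries described above.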

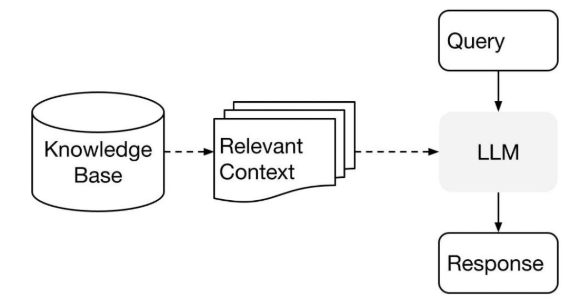

3.2 Retrieval-Based Models

Retrieval-based chatbots maintain a predefined set of responses and select the most appropriate reply for the user's input. To rank candidate responses, they use methods ranging from TF-IDF similarity and word embeddings to deep learning models (Yan et al., 2016). Unlike rule-based systems, retrieval-based models do not rely solely on handcrafted rules; they draw on databases of past interactions to improve response relevance.

Figure 2. Retrieval-based chatbot systems

One major advantage of retrieval-based chatbots is that they can return contextually appropriate responses without training a model to generate text. However, they are limited by the scope of their response databases: if an inquiry does not match any stored response, the chatbot may fail to provide a satisfactory answer (Lowe et al., 2015).

These chatbots are widely used in customer support systems, virtual assistants, and automated response applications where responses need to be reliable and controlled (Henderson et al., 2017).
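A minimal sketch of retrieval-based response selection follows, using plain TF-IDF weighting and cosine similarity written with the Python standard library. The stored questions, canned replies, function names, and the `min_score` threshold are hypothetical; production systems typically replace TF-IDF with learned embeddings or neural rankers.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical response database: each stored question maps to a canned reply.
RESPONSES = {
    "how do i register my courses": "Canned reply about course registration.",
    "how do i request my transcript": "Canned reply about transcript requests.",
    "where can i check my results": "Canned reply about result checking.",
}


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_idf(docs: list[list[str]]) -> dict[str, float]:
    """Smoothed inverse document frequency: rarer terms receive larger weights."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf(tokens: list[str], idf: dict[str, float]) -> dict[str, float]:
    """Term frequency times IDF; unseen terms get weight 0 and cannot affect ranking."""
    return {t: c * idf.get(t, 0.0) for t, c in Counter(tokens).items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def best_response(query: str, min_score: float = 0.1) -> Optional[str]:
    """Rank stored questions by TF-IDF cosine similarity and return the best reply."""
    questions = list(RESPONSES)
    docs = [tokenize(q) for q in questions]
    idf = build_idf(docs)
    q_vec = tfidf(tokenize(query), idf)
    score, best = max((cosine(q_vec, tfidf(d, idf)), q) for d, q in zip(docs, questions))
    # No stored question is similar enough: the coverage limit of retrieval-based bots.
    return RESPONSES[best] if score >= min_score else None
```

Returning `None` below the threshold makes the database-coverage limitation explicit instead of forcing a poor match.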

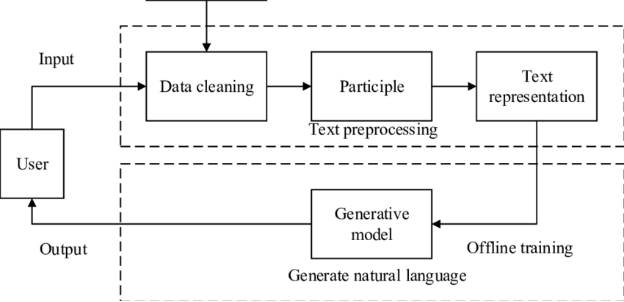

3.3 Generative Models

Generative chatbot models use machine learning techniques, particularly deep learning, to generate responses dynamically rather than selecting from a fixed set. These models are typically built using recurrent neural networks (RNNs), transformers, or large-scale pre-trained language models such as GPT (Radford et al., 2019).

Figure 3. Generative chatbot models

Unlike rule-based and retrieval-based models, generative chatbots can produce more natural and diverse conversations. They learn language patterns from large datasets and can generate human-like responses without predefined scripts (Vaswani et al., 2017). However, generative models also pose challenges, such as producing irrelevant or nonsensical replies, ethical concerns, and computational intensity (Roller et al., 2021).

Recent advances in transformer-based language models, from encoders such as BERT to large generative models such as GPT-3 (Brown et al., 2020) and later models such as GPT-4, have significantly improved the language understanding, fluency, and coherence of chatbot responses, making them suitable for a wide range of applications, including healthcare, education, and entertainment.

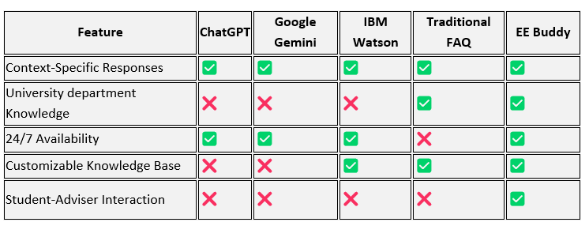

Figure 4. Feature comparison between EE Buddy and other information sources

3.4 Comparison with Existing Chatbots

Chatbot architectures have evolved significantly, from rule-based systems to more advanced retrieval-based and generative models. Rule-based chatbots provide structured interactions but lack flexibility, while retrieval-based models offer more relevant responses by leveraging historical data. Generative models, powered by deep learning, enable dynamic and context-aware conversations, but they bring computational and ethical challenges, as well as difficulty handling ambiguous user inputs, security risks, data privacy concerns, and the need for robust error management. EE-Buddy takes a hybrid approach that combines these architectures to maximize efficiency and user experience.

Figure 5. Hybrid AI model using RAG
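The retrieve-then-generate loop behind this hybrid design can be outlined in a few lines. This is a sketch under stated assumptions, not EE-Buddy's code: `retrieve` is a toy word-overlap ranker standing in for a vector search, the knowledge-base passages are illustrative placeholders, and `generate` is any callable that maps a prompt to text (in the deployed system, that role is played by GPT-3.5 Turbo).

```python
import re
from typing import Callable

# Illustrative placeholder passages, not actual FUTO policy text.
KNOWLEDGE_BASE = [
    "Course registration opens at the start of each semester.",
    "Transcripts are requested from the university registry.",
    "The department notice board lists examination timetables.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Toy retriever standing in for vector search: rank passages by shared words."""
    q = words(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]


def answer(query: str, generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieval-augmented generation: ground the prompt in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in retrieve(query, k))
    prompt = (
        "Answer the student's question using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )
    return generate(prompt)
```

Passing `generate` in as a parameter keeps the retrieval step testable without network access; swapping in a real model call leaves the rest of the pipeline unchanged.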

4. Methodology

4.1 System Operation and Chatbot Integration

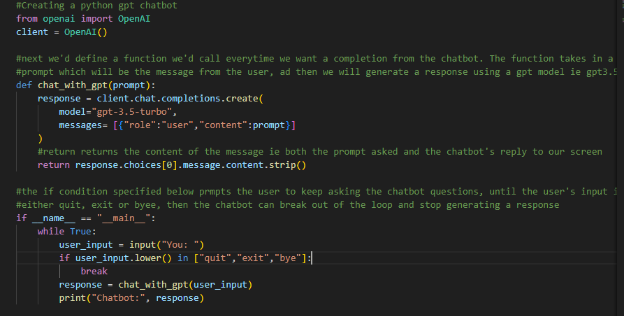

The chatbot is built on OpenAI’s GPT-3.5 Turbo, a chat-optimized language model that OpenAI has fine-tuned for instruction following and improved alignment with user intent. Building on this tuning, the chatbot delivers contextual and precise responses, making it a reliable virtual assistant.

Figure 6. GPT-3.5 setup process

4.2 GPT-3.5 Turbo Model Integration

The development process began by obtaining an OpenAI API key, granting access to GPT-3.5 Turbo’s powerful capabilities. This API allows the chatbot to process natural language queries, generate human-like responses, and adapt to user interactions dynamically. The chatbot was specifically designed to serve as a virtual assistant for the Electrical and Electronic Engineering Department of the Federal University of Technology Owerri (FUTO), Nigeria. To ensure reliability, extensive testing was conducted to verify that the chatbot’s personality and responses aligned with the department’s needs.
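For illustration, the following sketch builds the JSON body of a request to OpenAI's Chat Completions endpoint using only the Python standard library. The system-prompt wording, the `temperature` value, and the helper names are assumptions, not EE-Buddy's actual configuration; the request is only constructed, not sent, so the sketch runs without an API key.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

# Hypothetical system prompt that fixes the assistant's persona and scope.
SYSTEM_PROMPT = (
    "You are EE-Buddy, a helpful assistant for students of the Electrical and "
    "Electronic Engineering Department at FUTO. If you are unsure, say so and "
    "refer the student to their class adviser."
)


def build_payload(user_message: str, temperature: float = 0.2) -> dict:
    """Chat Completions request body: a system message followed by the user turn."""
    return {
        "model": "gpt-3.5-turbo",
        "temperature": temperature,  # assumed low value to favour consistent replies
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_message},
        ],
    }


def build_request(user_message: str) -> urllib.request.Request:
    """Prepare (but do not send) the HTTP request; the key is read from the environment."""
    api_key = os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(user_message)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` would return JSON whose reply text sits at `choices[0]["message"]["content"]`. The system message is where a persona such as the one tested for the department would be pinned down.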

4.3 Enhancing Functionality with LangChain Framework

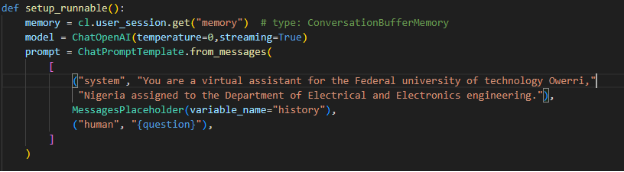

Figure 7. LangChain integration process

Although GPT-3.5 Turbo is highly capable, on its own it is limited to the knowledge captured during its training. To extend the chatbot beyond these built-in capabilities, the LangChain framework was integrated. LangChain lets developers chain calls to one or more language models together with other components such as prompts, memory, and retrievers, connects the model to external data, and provides "agentic" features that allow real-time web searches and interaction with external knowledge bases.

4.3.1 Key Features of LangChain in the Chatbot

- Advanced Prompt Engineering – Enables structured prompt templates for context-aware, optimized responses, improving clarity and consistency.

- Task Chaining & Multi-Step Reasoning – Allows the chatbot to execute multiple interdependent tasks, such as analyzing data before generating a response.

- Memory Retention – Supports conversation history, ensuring responses remain coherent and contextually aware over long interactions.

- External Data Access – Enhances accuracy by integrating real-time information from databases, web searches, and academic sources.

- Efficient Data Processing – Facilitates large-scale data retrieval, transformation, and analysis through automated data loaders and vector databases.
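Two of these features, prompt templates and conversation memory, can be illustrated without LangChain itself. The sketch below is a plain-Python analogue under stated assumptions: `string.Template` plays the role of a prompt template, and a bounded `deque` keeps only the last few exchanges, similar in spirit to a windowed conversation buffer. The class, template text, and field names are hypothetical and are not LangChain's API.

```python
from collections import deque
from string import Template

# Hypothetical prompt template with slots for retrieved context, history, and the question.
PROMPT = Template(
    "You are EE-Buddy.\n"
    "Context:\n$context\n"
    "Conversation so far:\n$history\n"
    "Student: $question\n"
    "EE-Buddy:"
)


class WindowMemory:
    """Keep only the most recent `k` exchanges so prompts stay within the context window."""

    def __init__(self, k: int = 3) -> None:
        self.turns: deque[tuple[str, str]] = deque(maxlen=k)

    def save(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))  # the oldest exchange is dropped automatically

    def render(self) -> str:
        return "\n".join(f"Student: {q}\nEE-Buddy: {a}" for q, a in self.turns)


def build_prompt(memory: WindowMemory, context: str, question: str) -> str:
    """Fill the template with retrieved context, recent history, and the new question."""
    return PROMPT.substitute(context=context, history=memory.render(), question=question)
```

Bounding the history trades recall of early turns for a predictable prompt size, which is one reason long multi-turn conversations remain difficult.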

By combining OpenAI’s advanced language modeling with LangChain’s multi-functional capabilities, this chatbot offers an intelligent, interactive, and dynamic assistant tailored to academic and research needs. Future enhancements may include integration with additional AI-driven tools, voice interaction capabilities, and adaptive learning features to further improve response accuracy and user experience.

4.3.2 Vector Stores and Data Indexing

A key limitation of large language models (LLMs) is that they cannot, by themselves, consult documents that were not part of their training data, such as a department's handbook. To address this, LangChain integrates with vector stores (also known as vector databases), which store and manage vectorized data efficiently. Vector databases optimize storage, enable fast retrieval through similarity search, and support real-time querying for applications such as recommendation systems and NLP tasks.

Key characteristics of vector stores include:

- Efficient Storage – Optimized for high-dimensional vector data.

- Scalability – Capable of handling large datasets across distributed systems.

- Vector Indexing – Supports nearest neighbor searches using cosine similarity or Euclidean distance.

- Real-Time Querying – Enables fast retrieval for applications such as personalized search.

- Support for Sparse and Dense Vectors – Handles diverse data representations efficiently.

By integrating vector databases, the chatbot can access and retrieve relevant external knowledge, improving its ability to provide personalized and context-aware responses.
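The nearest-neighbour search that a vector store performs can be shown with a brute-force sketch. This is an illustration under assumptions: it scores every stored vector exactly, whereas dedicated vector databases typically use approximate nearest-neighbour indexes to stay fast at scale, and the three-dimensional vectors and IDs are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2,
          metric: str = "cosine") -> list[tuple[str, float]]:
    """Exact (brute-force) k-nearest-neighbour search over every stored vector."""
    if metric == "cosine":
        scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
        scored.sort(key=lambda item: item[1], reverse=True)  # higher similarity first
    elif metric == "euclidean":
        scored = [(doc_id, math.dist(query, vec)) for doc_id, vec in index.items()]
        scored.sort(key=lambda item: item[1])  # smaller distance first
    else:
        raise ValueError(f"unknown metric: {metric}")
    return scored[:k]


# Hypothetical index of made-up 3-dimensional embeddings keyed by chunk ID.
INDEX = {
    "registration-guide": [0.9, 0.1, 0.0],
    "transcript-policy": [0.1, 0.9, 0.1],
    "exam-timetable": [0.0, 0.2, 0.9],
}
```

Note the opposite sort orders: cosine is a similarity (larger is closer), while Euclidean is a distance (smaller is closer).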

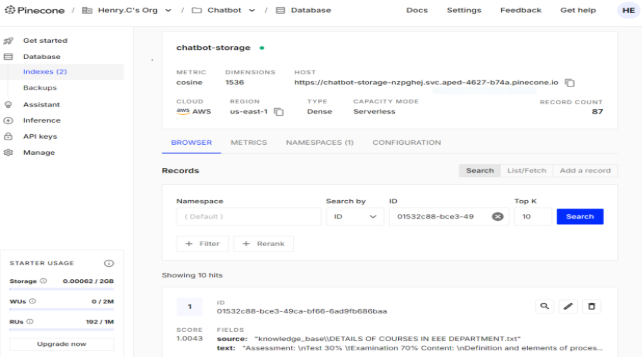

4.4 Pinecone for Vector Storage

Pinecone, a managed, cloud-hosted vector database, was implemented as the primary vector store for the chatbot, enabling efficient storage and retrieval of the knowledge base PDFs. The integration involved several key steps:

- Document Loading – Converting PDF content into structured documents.

- Chunking – Splitting large text files into manageable sections.

- Embedding – Transforming text chunks into high-dimensional vector representations.

- Vector Storage – Storing and indexing these embeddings in Pinecone for fast retrieval.

Figure 8. Pinecone vector database dashboard

By using a vector database such as Pinecone, the chatbot efficiently manages unstructured data such as research papers and technical documents, significantly improving its ability to provide detailed, knowledge-driven responses. Alternatives to Pinecone include ChromaDB, FAISS (a similarity-search library rather than a full database), and Weaviate, each with its own strengths in vector data management.
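The four ingestion steps listed above can be sketched end to end with the standard library. Several assumptions apply: PDF loading is skipped (the input is already plain text), `embed` is a toy hashed bag-of-words function standing in for a real embedding model, and the in-memory `index` dictionary stands in for a Pinecone index. Each stored record mirrors the general shape of a Pinecone record: an ID, a vector of values, and metadata such as the source text.

```python
import hashlib
import math
import re

DIM = 64  # toy embedding size; real embedding models produce far larger vectors


def chunk(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalise."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 gives the same bucket on every run (unlike Python's salted hash()).
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def ingest(doc_id: str, text: str, index: dict) -> int:
    """Chunk, embed, and upsert {id, values, metadata} records; return the chunk count."""
    pieces = chunk(text)
    for n, piece in enumerate(pieces):
        record_id = f"{doc_id}-{n}"
        # Writing to an existing ID overwrites it, i.e. upsert semantics.
        index[record_id] = {
            "id": record_id,
            "values": embed(piece),
            "metadata": {"source": doc_id, "text": piece},
        }
    return len(pieces)
```

The overlap between consecutive chunks keeps a sentence that straddles a boundary retrievable from at least one chunk; chunk size and overlap are tuning choices, not values taken from the project.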

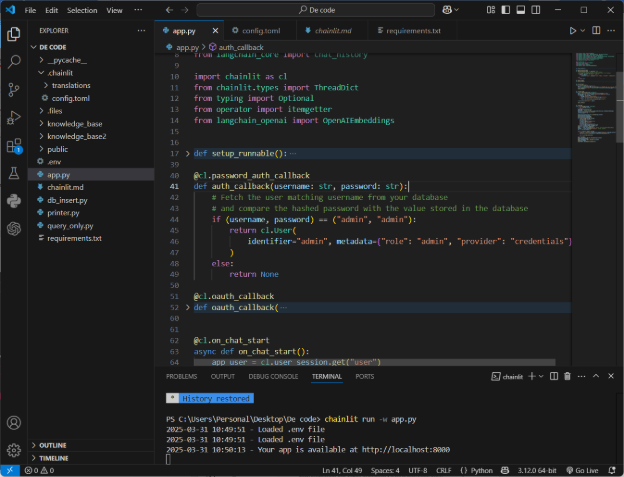

4.5 Chainlit Integration

To improve user interaction, Chainlit, an open-source Python framework for building conversational AI user interfaces, was integrated into the chatbot. This integration enables communication between users and the chatbot through a web-based interface. Chainlit was installed and configured within the existing LangChain-based infrastructure, ensuring smooth interoperability and a better user experience.

Figure 9. Chainlit integration process

4.6 System Requirements

The EE-Buddy Chatbot is designed to function as a digital assistant for students in the Electrical and Electronic Engineering (EEE) Department at FUTO. It provides students with academic guidance, administrative assistance, and personalized responses.

4.6.1 Functional Requirements

The chatbot is designed to perform the following core functions:

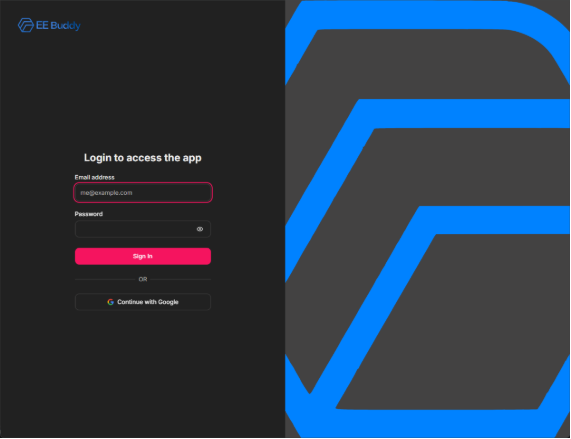

- User Registration & Login
  - Students can create an account using their email addresses.
  - The system maintains a secure authentication process to prevent unauthorized access.
  - Once logged in, students can access personalized recommendations and previous chat history.
  - The chatbot supports session persistence, so users do not have to log in repeatedly during active use.

Figure 10. EE Buddy’s login screen

- Personalized Solutions
  - The chatbot provides tailored responses based on individual user queries and past interactions.
  - It answers common academic and administrative questions, including:
    - Course registration guidance
    - Transcript requests
    - Result checking procedures
    - CGPA calculations
    - Internship and job opportunities related to the department
  - It can redirect students to relevant faculty members or official university resources when necessary.

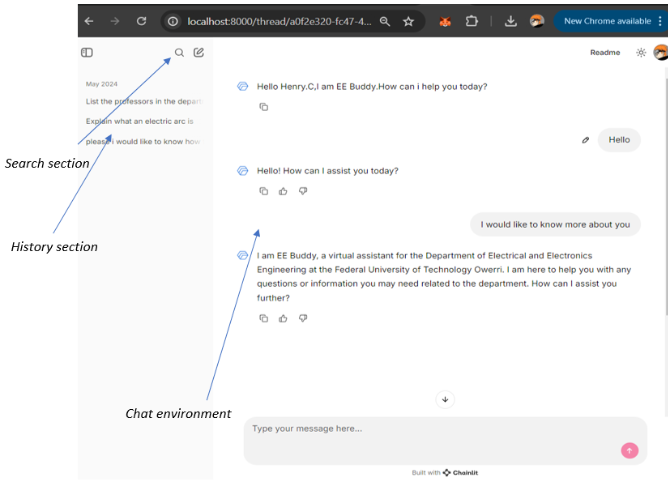

- History Recollection
  - The chatbot stores previous conversations, allowing students to review past interactions.
  - This feature enhances usability by saving students from repeatedly asking the same questions.
  - It enables context-aware responses, allowing more fluid and natural conversations.

Figure 11. EE Buddy’s chat environment

- Departmental Announcements & Updates
  - The chatbot can be updated to broadcast important notices such as:
    - Exam schedules
    - Course registration deadlines
    - School events and workshops
  - This feature ensures students stay informed without needing to visit multiple platforms.

- AI-Powered Assistance
  - The chatbot can provide academic guidance, such as:
    - Recommending study materials
    - Explaining complex engineering concepts
    - Directing students to relevant textbooks or past questions
  - It uses natural language processing (NLP) to understand and respond appropriately to user queries.
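Conversation-history persistence, as described under History Recollection, can be sketched with Python's built-in `sqlite3` module. The table layout, column names, and helper functions are hypothetical, not EE-Buddy's actual schema; an in-memory database is used so the sketch runs as-is.

```python
import sqlite3

# Hypothetical schema: one row per message, tagged with the student's email and role.
SCHEMA = """
CREATE TABLE IF NOT EXISTS messages (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    user_email TEXT NOT NULL,
    role TEXT NOT NULL CHECK (role IN ('user', 'assistant')),
    content TEXT NOT NULL
)
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_message(conn: sqlite3.Connection, user_email: str, role: str, content: str) -> None:
    # Parameterised query: user text is never spliced into the SQL string.
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT INTO messages (user_email, role, content) VALUES (?, ?, ?)",
            (user_email, role, content),
        )


def load_history(conn: sqlite3.Connection, user_email: str, limit: int = 20) -> list[tuple[str, str]]:
    """Return a user's most recent messages in chronological order."""
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE user_email = ? ORDER BY id DESC LIMIT ?",
        (user_email, limit),
    ).fetchall()
    return list(reversed(rows))
```

Scoping every query by `user_email` is what keeps one student's history out of another student's session.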

4.6.2 Non-Functional Requirements

Although not directly related to the chatbot’s core functions, these requirements ensure efficiency, security, and user experience:

- Security & Data Privacy
  - The chatbot implements data encryption to protect sensitive student information.
  - Multi-factor authentication (MFA) can be added for enhanced security.
  - Regular security audits and vulnerability assessments are performed.

- Usability & Accessibility
  - The interface is designed to be simple, intuitive, and user-friendly.
  - The chatbot supports text-based inputs, with potential integration for voice-based queries in future iterations.
  - It is accessible to students with varying levels of technical expertise.

- Compatibility & Cross-Platform Support
  - The chatbot is designed to work seamlessly across multiple devices (PCs, smartphones, tablets).
  - It is optimized for all major web browsers (Chrome, Firefox, Edge, Safari).
  - A mobile-friendly version ensures accessibility on smaller screens.

- Performance & Scalability
  - The chatbot is designed to handle multiple concurrent users without noticeable performance degradation.
  - The system is designed to scale horizontally, allowing the chatbot to serve an increasing number of students as needed.

- Authentication & Authorization
  - Strong password policies and secure hashing techniques are implemented.
  - Different user roles can be added in the future (e.g., students, faculty, admin users).
  - The system ensures that only authorized users can access personalized data.
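One standard way to meet the secure-hashing requirement is a salted, deliberately slow key-derivation function. The sketch below uses PBKDF2-HMAC-SHA256 from Python's `hashlib`; the iteration count and the length-only password policy are illustrative assumptions rather than EE-Buddy's actual settings, and bcrypt, scrypt, or Argon2 are common alternatives.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune to the deployment hardware
MIN_LENGTH = 10       # hypothetical minimum-length password policy


def hash_password(password: str) -> str:
    """Return 'salt$hash' (hex) for storage; the plain password is never stored."""
    if len(password) < MIN_LENGTH:
        raise ValueError(f"password must be at least {MIN_LENGTH} characters")
    salt = os.urandom(16)  # a unique random salt per user defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), ITERATIONS
    )
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(candidate.hex(), digest_hex)
```

Because each call draws a fresh salt, hashing the same password twice yields different stored strings; verification works by reusing the salt saved alongside the hash.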

4.7 Knowledge Base Integration

To ensure the chatbot provides accurate and contextually relevant responses, a systematic process was followed:

1. Data Gathering
   - Information was sourced from:
     - The official university website
     - Departmental handbooks and guides
     - Oral interviews with faculty members
     - Academic regulations and course outlines

2. Data Analysis
   - The collected information was sorted into logical categories based on the most common student queries.
   - Redundant or outdated information was removed or updated.

3. Data Sourcing & Preprocessing
   - Data was cleaned and formatted for machine readability.
   - Unstructured information was converted into structured documents.

4. Data Organization & Storage in Pinecone
   - The collected text was processed into small, searchable text chunks using text splitters.
   - Each chunk was embedded as a high-dimensional vector using a pre-trained embedding model.
   - These vectors were stored in Pinecone, allowing efficient semantic search and retrieval.

5. Vector Embedding & Knowledge Base Indexing
   - The knowledge base was vectorized: each chunk of text was converted into a numerical vector that can be compared with the vector of a student's query by similarity search.
   - These vectors were indexed in Pinecone so that the most relevant chunks could be retrieved for each query, improving response accuracy.

6. Testing & Continuous Improvement
   - The chatbot was tested using real student queries.
   - Responses were evaluated based on:
     - Accuracy – Was the answer correct?
     - Relevance – Was the response useful?
     - Coherence – Did the chatbot provide a clear and logical answer?
   - Iterative improvements were made based on student feedback to enhance chatbot performance.
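The cleaning and de-duplication work in Steps 2 and 3 can be sketched as a small preprocessing function. This is a hypothetical example rather than the project's actual pipeline: it normalises Unicode and whitespace, skips empty fragments, and drops exact duplicates (ignoring case) by hashing the normalised text. That catches the same paragraph copied from both a handbook and the website, but not paraphrased or outdated entries, which still need human review.

```python
import hashlib
import re
import unicodedata


def normalise(text: str) -> str:
    """Unify Unicode forms, collapse runs of whitespace, and trim the ends."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_and_deduplicate(passages: list[str]) -> list[str]:
    """Return normalised, non-empty passages with case-insensitive exact duplicates removed."""
    seen: set[str] = set()
    kept: list[str] = []
    for passage in passages:
        clean = normalise(passage)
        if not clean:
            continue  # skip empty fragments, e.g. blank pages from PDF extraction
        key = hashlib.sha256(clean.casefold().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            kept.append(clean)  # keep the first occurrence, in original order
    return kept
```

Running this before chunking keeps duplicate passages from occupying several of the top retrieval slots for the same query.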

5. Results

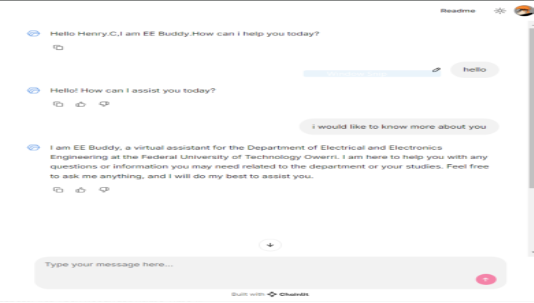

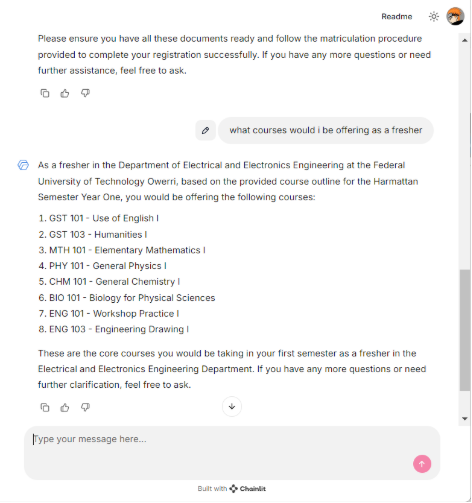

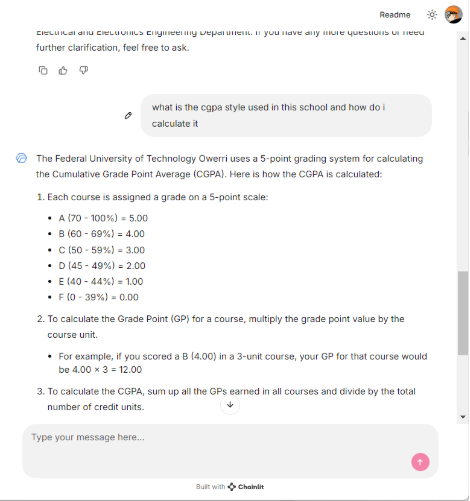

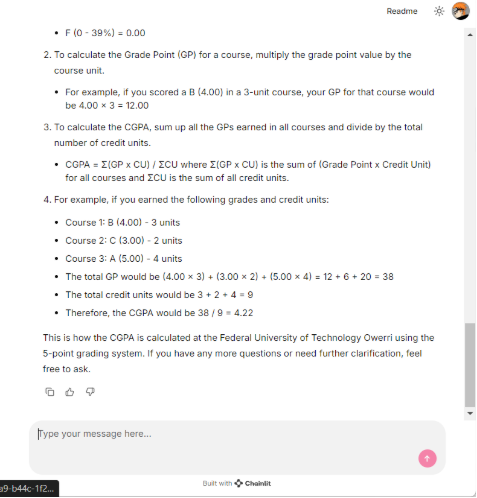

Figures 12 to 16 show sample results from queries made after the development of EE-Buddy was completed. The chatbot was evaluated on its effectiveness in assisting students by answering queries related to the Department of Electrical and Electronic Engineering (EEE), the Federal University of Technology, Owerri (FUTO), and general student-related inquiries.

Figure 12. Results from queries

Figure 13. Results from queries

Figure 14. Results from queries

Figure 15. Results from queries

Figure 16. Results from queries

By drawing on its knowledge base, official FUTO-related websites, and search engines, EE-Buddy delivers relevant and accurate responses. As the figures above show, its performance was very satisfactory: answers were explanatory yet concise enough to convey the correct information without ambiguity, and the chatbot retained context across the conversation, delivering adequate information in a firm but friendly manner.

6. Conclusion

The development of the EE-Buddy Chatbot marks a significant step toward enhancing academic support and administrative efficiency using AI. By leveraging advanced technologies and integrating multiple frameworks, the chatbot provided real-time, personalized responses to students' inquiries regarding academic procedures, course-related questions, and administrative processes. The integration of vector databases ensured that responses were not only contextually relevant but also efficiently retrievable, overcoming the limitations of traditional keyword-based search systems.

This project demonstrates the potential of conversational AI in higher education. As educational institutions continue to embrace digital transformation, AI-powered assistants like EE-Buddy can significantly reduce administrative bottlenecks, improve information accessibility, and provide students with instant, reliable academic guidance.

6.1 Constraints, Boundaries and Limitations

Developing EE-Buddy came with several challenges, some of which can be improved in the future, while others stem from current technological limitations. The major limitations encountered include:

- Integration Challenges

EE-Buddy faced difficulties integrating with the existing university portals and student databases. Without seamless integration, students must still manually check different platforms for information they require.

- Context Retention in Multi-Turn Conversations

Despite leveraging Retrieval-Augmented Generation (RAG) for improved accuracy, EE-Buddy struggles with complex, multi-turn conversations requiring deep context awareness and memory retention.

- Reliance on Structured Knowledge Bases

The chatbot’s responses depend on structured university-specific documents. If this information is outdated, incomplete, or inconsistent, EE-Buddy may provide incorrect or irrelevant responses. Additionally, frequent policy updates require continuous maintenance.

- Performance & Scalability Issues

The round-trip time from query to response averaged around 12 seconds. As the number of concurrent users increases, server load and response times may degrade. Optimization of the vector database and frontend is necessary to improve efficiency.

- Security & Data Privacy Risks

Ensuring the secure handling of student data is a critical challenge. Without robust encryption, authentication, and access control mechanisms, EE-Buddy could become vulnerable to data breaches or cyberattacks.
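An average round-trip time such as the one reported above can be measured with a small timing harness. The sketch is hypothetical: `ask` stands for whatever function sends a query to the chatbot and blocks until the full reply arrives, and the harness times repeated calls with `time.perf_counter`, reporting the median and maximum alongside the mean because a few slow replies can skew an average.

```python
import statistics
import time
from typing import Callable


def measure_latency(ask: Callable[[str], str], queries: list[str]) -> dict[str, float]:
    """Time each query's full round trip and summarise the results in seconds."""
    if not queries:
        raise ValueError("need at least one query to time")
    timings = []
    for query in queries:
        start = time.perf_counter()
        ask(query)  # blocks until the complete response has been returned
        timings.append(time.perf_counter() - start)
    return {
        "mean": statistics.mean(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }
```

Running the same harness from several threads at once would approximate the concurrent-user load under which response times are expected to degrade.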

6.2 Future Directions

Moving forward, future iterations of the chatbot could incorporate voice-based interactions, a more robust agentic processing algorithm, expanded knowledge domains, and integration with additional university systems for a more comprehensive and interactive experience. The success of this project underscores the importance of AI and machine learning in revolutionizing student support systems, paving the way for smarter, more efficient academic environments.

7. References

- Brown, T. et al. (2020). "Language Models are Few-Shot Learners." Advances in Neural Information Processing Systems (NeurIPS), 33, 1877-1901.

- Colby, K. M. (1975). Artificial Paranoia: A Computer Simulation of Paranoid Processes. Pergamon Press.

- Henderson, M., Thomson, B., & Young, S. (2017). "Deep Learning for Conversational AI." IEEE Signal Processing Magazine, 34(5), 45-54.

- Jain, M., Kumar, P., & Patel, D. (2018). "Evaluating Chatbot Effectiveness for Customer Support." International Journal of Computer Applications, 180(20), 15-20.

- Lowe, R. et al. (2015). "The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems." arXiv preprint arXiv.08909.

- Radford, A. et al. (2019). "Language Models are Unsupervised Multitask Learners." OpenAI Technical Report.

- Roller, S. et al. (2021). "Recipes for Building an Open-Domain Chatbot." arXiv preprint arXiv.13637.

- Shawar, B. A., & Atwell, E. (2007). "Chatbots: Are They Ready for Prime Time?" International Journal of Computer Science Issues, 4(2), 29-38.

- Vaswani, A. et al. (2017). "Attention is All You Need." Advances in Neural Information Processing Systems (NeurIPS), 30, 5998-6008.

- Weizenbaum, J. (1966). "ELIZA—A Computer Program for the Study of Natural Language Communication Between Man and Machine." Communications of the ACM, 9(1), 36-45.

- Yan, R. et al. (2016). "Learning to Respond with Deep Neural Networks for Retrieval-Based Dialog Systems." Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, 55-64.

- Chainlit documentation.

- OpenAI documentation.

- LangChain documentation.

- LangChain Pinecone integration documentation.