In Forex trading, the ability to dynamically adjust key parameters such as take profit and stop loss is crucial for maximizing returns and minimizing risks in highly volatile markets. Traditional algorithmic trading systems often rely on static or manually tuned parameters, which can struggle to adapt to rapidly changing market conditions. This paper presents an innovative approach using Reinforcement Learning (RL) to optimize take profit and stop loss levels in real-time, employing a cooperative multi-agent scenario.

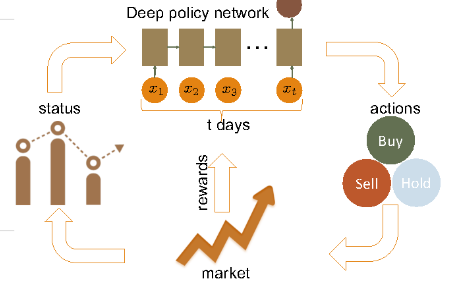

Reinforcement Learning (RL) offers a promising solution by enabling trading systems to adjust and optimize their parameters through continuous interaction with the market. In this framework, multiple agents collaborate, each learning a different aspect of the trading environment. Together they balance risk and reward, updating the take profit and stop loss values as market data evolves. This cooperative multi-agent approach lets the system adapt to complex market patterns, improving decision-making and overall trading performance.

The proposed method demonstrates the potential of RL for dynamic parameter optimization in Forex trading, offering a more flexible and responsive alternative to traditional trading strategies. Through extensive backtesting, we show that this approach outperforms static models, providing greater adaptability and profitability in various market conditions.

Take profit (TP) and stop loss (SL) values are set according to market conditions. Two scenarios are considered: how TP captures more alpha, and how SL is optimized to minimize risk.

In this approach, we deploy two cooperative agents, each with a distinct yet complementary role aimed at achieving the overall objective of maximizing profits in Forex trading. The two agents are structured as follows:

Take Profit Agent: This agent focuses on determining the optimal target profit level. Its goal is to maximize returns by setting an appropriate exit point for profitable trades.

Stop Loss Agent: This agent is responsible for minimizing risk by adjusting the stop loss level. Its role is to protect against significant losses by deciding the threshold at which a trade should be exited to prevent further losses.

Both agents work toward the same goal of optimizing profits through dynamic parameter adjustment in real-time. The system adapts to evolving market conditions by adjusting the take profit and stop loss values, utilizing reinforcement learning to continuously improve decision-making based on past performance.
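To make the cooperative setup concrete, the following is a minimal sketch of how a take profit and a stop loss level chosen by the two agents could close a long position, and how both agents could receive the same reward. The names `LongPosition`, `check_exit`, and `shared_reward` are hypothetical, the reward in pips is an assumption, and the rule that the stop loss wins when both levels fall inside one bar is a conservative convention that is not taken from the source.

```python
from dataclasses import dataclass
from typing import Optional

PIP = 0.01  # one pip for JPY-quoted pairs such as USD/JPY


@dataclass
class LongPosition:
    entry_price: float
    take_profit_pips: float  # chosen by the Take Profit agent
    stop_loss_pips: float    # chosen by the Stop Loss agent


def check_exit(pos: LongPosition, high: float, low: float) -> Optional[float]:
    """Return the exit price if this bar hits TP or SL, else None.

    Assumption: if both levels are inside the same bar, the stop loss
    is treated as hit first.
    """
    tp_price = pos.entry_price + pos.take_profit_pips * PIP
    sl_price = pos.entry_price - pos.stop_loss_pips * PIP
    if low <= sl_price:
        return sl_price
    if high >= tp_price:
        return tp_price
    return None


def shared_reward(pos: LongPosition, exit_price: float) -> float:
    """Realized profit in pips; both agents receive this same value."""
    return (exit_price - pos.entry_price) / PIP
```

Because both agents are scored on the same realized profit, neither can improve its own reward at the expense of the other, which is one simple way to express the shared objective described above.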

Action Space:

The action space for each agent consists of 12 discrete actions, representing various levels of adjustment for take profit and stop loss parameters. These actions allow the agents to fine-tune their respective decisions throughout the trading process.
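The text does not state what each of the 12 actions means, so the sketch below assumes one plausible encoding: evenly spaced pip distances. `PIP_STEP`, `action_to_pips`, and `greedy_action` are hypothetical names introduced for illustration only.

```python
from typing import Sequence

N_ACTIONS = 12   # number of discrete actions, from the text
PIP_STEP = 5.0   # assumption: spacing between adjacent levels, in pips


def action_to_pips(action: int, step: float = PIP_STEP) -> float:
    """Map an action index 0..11 to a distance of 5, 10, ..., 60 pips."""
    if not 0 <= action < N_ACTIONS:
        raise ValueError(f"action must be in [0, {N_ACTIONS})")
    return (action + 1) * step


def greedy_action(q_values: Sequence[float]) -> int:
    """Pick the action with the highest estimated Q-value."""
    if len(q_values) != N_ACTIONS:
        raise ValueError(f"expected {N_ACTIONS} Q-values")
    return max(range(N_ACTIONS), key=q_values.__getitem__)
```

Each agent would apply the same mapping to its own output, so the Take Profit agent and the Stop Loss agent each select one of 12 distances from the entry price.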

Observation Space:

Five groups of information are passed to the agent before it takes an action:

1. State_information: current trading position, number of shares, and balance

2. OHLCV

3. Date, weekday, and time

4. Multiple technical indicators

5. Other market participants
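As an illustration of how these five groups might be combined, the sketch below concatenates them into one flat feature vector. The function name `build_observation`, the position encoding, the choice of calendar fields, and the `participants` argument are all assumptions; the source does not specify how any group is encoded or normalized.

```python
from datetime import datetime
from typing import List, Sequence


def build_observation(
    position: int,                  # assumed encoding: -1 short, 0 flat, 1 long
    units: float,                   # number of shares/units held
    balance: float,
    ohlcv: Sequence[float],         # open, high, low, close, volume
    timestamp: datetime,
    indicators: Sequence[float],    # any technical indicator values
    participants: Sequence[float],  # placeholder for "other market participant" features
) -> List[float]:
    """Concatenate the five observation groups into one flat vector."""
    if len(ohlcv) != 5:
        raise ValueError("ohlcv must hold open, high, low, close, volume")
    state = [float(position), float(units), float(balance)]
    time_features = [
        float(timestamp.month),
        float(timestamp.day),
        float(timestamp.weekday()),  # Monday = 0
        float(timestamp.hour),
        float(timestamp.minute),
    ]
    return (
        state
        + [float(x) for x in ohlcv]
        + time_features
        + [float(x) for x in indicators]
        + [float(x) for x in participants]
    )
```

In practice the raw values would likely need scaling before being fed to a network, since balance, price, and volume differ by orders of magnitude.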

Implementation:

Python-based: The entire system is implemented in Python, making use of well-established libraries and frameworks tailored for algorithmic trading.

QSTrader Boilerplate Architecture: The implementation builds upon the QSTrader architecture, which serves as a robust foundation for backtesting and live trading strategies in the Forex market.

Oanda.py Library: We utilize the Oanda.py library for integration with the Oanda trading platform, enabling real-time data retrieval and order execution in the USD/JPY Forex pair.

TA-Lib Library: TA-Lib is used to implement various technical analysis indicators that assist the agents in making informed decisions based on historical market data and trends.

Backtrader Framework: Some features from the Backtrader framework are incorporated to enhance the flexibility of our trading strategy, allowing for better backtesting and strategy validation.

Target Currency Pair:

The system specifically targets the USD/JPY currency pair, using this pair to train the agents and validate their performance in different market conditions.

Validation and Comparison:

To ensure robustness, the performance of our methodology is validated against popular trading platforms like MetaTrader 4 (MT4)/MetaTrader 5 (MT5) and TradingView. The results obtained from our system are compared with those platforms to assess the accuracy and effectiveness of the dynamic parameter optimization.

This cooperative multi-agent RL system offers a flexible and adaptive approach to optimizing key parameters in Forex trading, providing enhanced performance and profit potential when compared to static models.

The primary objective of this experiment is to develop and validate a cooperative multi-agent reinforcement learning (RL) framework for optimizing dynamic trading parameters (Take Profit and Stop Loss) in Forex trading. The agents aim to maximize profit while minimizing loss, focusing on the USD/JPY currency pair using historical trading data. We evaluate the model's performance through backtesting on a separate time period.

Our implementation uses the following hyperparameters:

Learning Rate: 0.0001

Gamma (Discount Factor): 0.99

Tau (Soft Update Rate): 0.01

Update Target Network: Every 2000 episodes

Update Double DQN: Every 6 episodes

Features: OHLCV, indicator data, prev_actions
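For readers unfamiliar with these settings, the sketch below shows the standard definitions of the Double DQN target (which uses gamma) and the soft target update (which uses tau), written on plain lists so it runs without a deep learning framework. It is not the authors' implementation, and the source does not say how the soft update rate relates to the 2000-episode target update schedule. The function names are hypothetical.

```python
from typing import List, Sequence

GAMMA = 0.99  # discount factor, from the text
TAU = 0.01    # soft update rate, from the text


def double_dqn_target(
    reward: float,
    next_q_online: Sequence[float],  # online network Q-values for the next state
    next_q_target: Sequence[float],  # target network Q-values for the next state
    done: bool,
    gamma: float = GAMMA,
) -> float:
    """Double DQN: the online net selects the action, the target net scores it."""
    if done:
        return reward
    best = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best]


def soft_update(
    target_params: Sequence[float],
    online_params: Sequence[float],
    tau: float = TAU,
) -> List[float]:
    """Move each target parameter a fraction tau toward the online parameter."""
    return [tau * o + (1.0 - tau) * t for t, o in zip(target_params, online_params)]
```

Separating action selection from action evaluation is what distinguishes Double DQN from plain DQN, and it is intended to reduce overestimation of Q-values.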

We trained the model on the first three months of data from 2020, 2021, and 2022. After the training phase, we ran a backtest on the following three months (April to June), where the model demonstrated competitive profitability.
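The split described here can be expressed as a small date filter. The sketch below assumes that the April to June backtest window applies to each of the three training years, which the text does not state explicitly; `split_label` is a hypothetical helper.

```python
from datetime import date

TRAIN_YEARS = (2020, 2021, 2022)  # from the text


def split_label(d: date) -> str:
    """Label a date as 'train', 'backtest', or 'other'.

    Assumption: the April-June backtest window is applied to every
    training year, not only to the last one.
    """
    if d.year in TRAIN_YEARS:
        if 1 <= d.month <= 3:
            return "train"
        if 4 <= d.month <= 6:
            return "backtest"
    return "other"
```

Keeping the backtest months strictly after the training months within each year avoids leaking future prices into training.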

For further evaluation and benchmarking, we also ran the model on the same time periods used during training, and the performance remained stable and consistent with expectations.

Additionally, we tested the model on older data from 2018 and 2019 to assess its robustness and ability to generalize to different market conditions. The model continued to perform well, showing promising results even with data from previous years, confirming its adaptability to various market scenarios.

In conclusion, this study used Take Profit (TP) strategies to maximize alpha and Stop Loss (SL) measures to minimize risk. Integrating various indicator strategies on the buy side further improved the model's performance. By training on the first three months of 2020, 2021, and 2022 and backtesting on the subsequent months, we achieved competitive profits and stable results. The model also proved robust when evaluated on historical data from 2018 and 2019, yielding favorable outcomes. Overall, this research underscores the potential of reinforcement learning for optimizing trading strategies in the Forex market, and the importance of well-defined risk management and decision-making frameworks. Future work includes an LSTM encoder architecture with attention, additional features, tuning of the lookback period, and more targeted reward design.