Abstract

BrainBot is an AI learning assistant that lets users interact with documents, images, and webpages in natural language. It uses Natural Language Processing (NLP) and Retrieval Augmented Generation (RAG) to provide an interactive learning experience. Development raised several challenges, ranging from integrating large language models to ensuring a seamless user experience. This case study gives an overview of the project, walks through its implementation in detail, and discusses the challenges faced, the solutions adopted, and the key lessons learned.

Introduction

BrainBot was conceived as an AI-powered solution for interactive learning. It is built with LangChain, FastAPI, and Streamlit, and integrates large language models such as GPT-4 (OpenAI API) and Llama3-8b-8192 (Groq API) so that users can chat with their documents, images, and webpages. The application is containerized with Docker for easy deployment and scalability.

The project aims to utilize the power of advanced technologies such as Natural Language Processing (NLP) and Retrieval Augmented Generation (RAG), and the development process involved integrating various components, including large language models, frontend and backend systems, and deployment infrastructure.

Retrieval Augmented Generation (RAG)

Retrieval augmented generation (RAG) is a widely used technique for LLM-based applications because it lets users interact with a domain-specific knowledge source through the capabilities of an LLM while maintaining the conversational context. The response is grounded in the custom data source rather than relying only on the LLM's pre-trained knowledge.

There are three main components of a RAG-based application:

- Data Ingestion

- Data Retrieval

- Synthesis
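To make the three components concrete, here is a minimal, dependency-free sketch. It is only an illustration: word overlap stands in for embedding similarity, the final step builds a prompt instead of calling an LLM, and the function names `ingest`, `retrieve`, and `synthesize` are hypothetical rather than taken from BrainBot.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def ingest(document):
    """Data ingestion: split a document into sentence-sized chunks."""
    return [s.strip() for s in document.split(".") if s.strip()]

def retrieve(chunks, question, k=1):
    """Data retrieval: rank chunks by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(tokenize(c) & q_words), reverse=True)
    return ranked[:k]

def synthesize(context_chunks, question):
    """Synthesis: build the prompt that an LLM would receive."""
    context = "\n\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

document = (
    "FAISS stores embedding vectors. "
    "Streamlit renders the chat interface. "
    "Docker packages the application."
)
question = "Which tool stores the vectors?"

chunks = ingest(document)
top = retrieve(chunks, question)
prompt = synthesize(top, question)
print(prompt)
```

In a real RAG application the overlap score is replaced by a vector-store similarity search and the prompt is sent to the LLM.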

Let's see how we implement these components in our application using LangChain, Streamlit, FastAPI, LLMs (GPT-4 | Groq API) and FAISS as a vector store.

Application Workflow

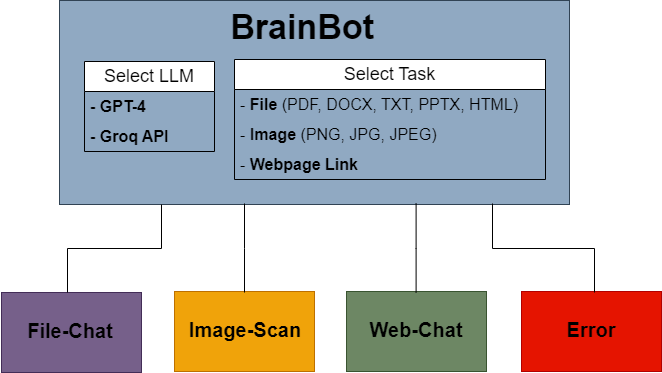

High Level Diagram

Here's a high level diagram of BrainBot showing its main components.

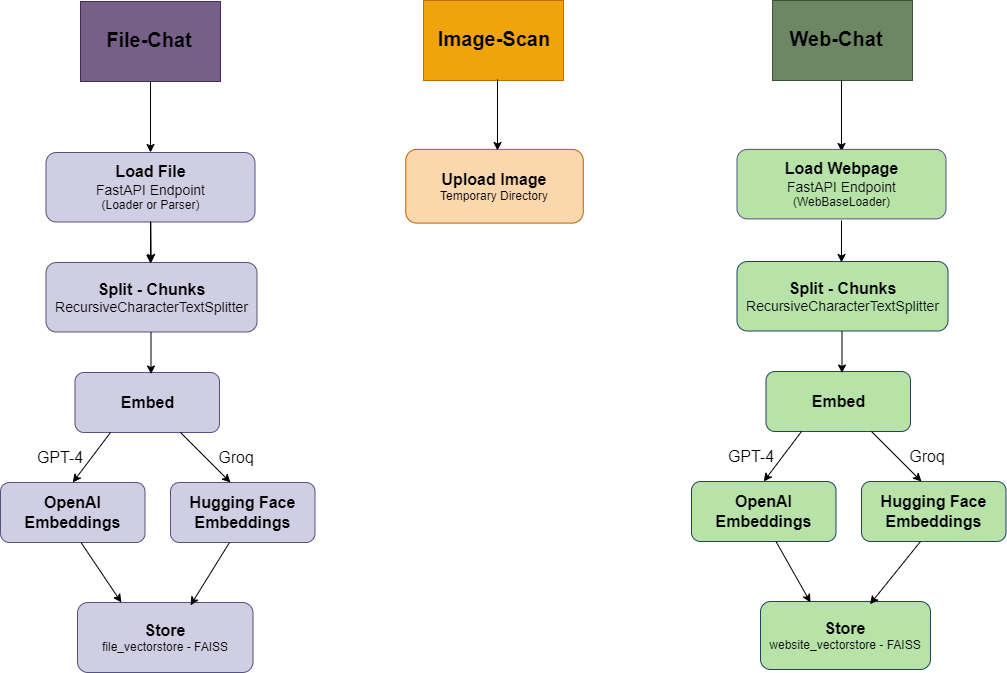

Data Ingestion

Data ingestion is the first step in building a RAG-based application. The quality of the responses depends on how well the data has been ingested into the system. The diagram below shows the data ingestion pipelines of the various BrainBot components.
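A central part of ingestion is splitting text into overlapping chunks, so that a sentence cut at a chunk boundary still appears whole in a neighbouring chunk. The sketch below is a simplified fixed-window splitter, not the separator-aware splitter from LangChain that the backend uses; the function name `split_with_overlap` is hypothetical, while the default sizes mirror the `chunk_size=1000` and `chunk_overlap=200` values used in the backend.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks

# Small sizes make the overlap visible: each chunk repeats the last 4 characters
# of the previous one.
chunks = split_with_overlap("abcdefghijklmnopqrstuvwxyz", chunk_size=10, chunk_overlap=4)
print(chunks)
```

A larger overlap preserves more context across boundaries at the cost of storing and embedding more text.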

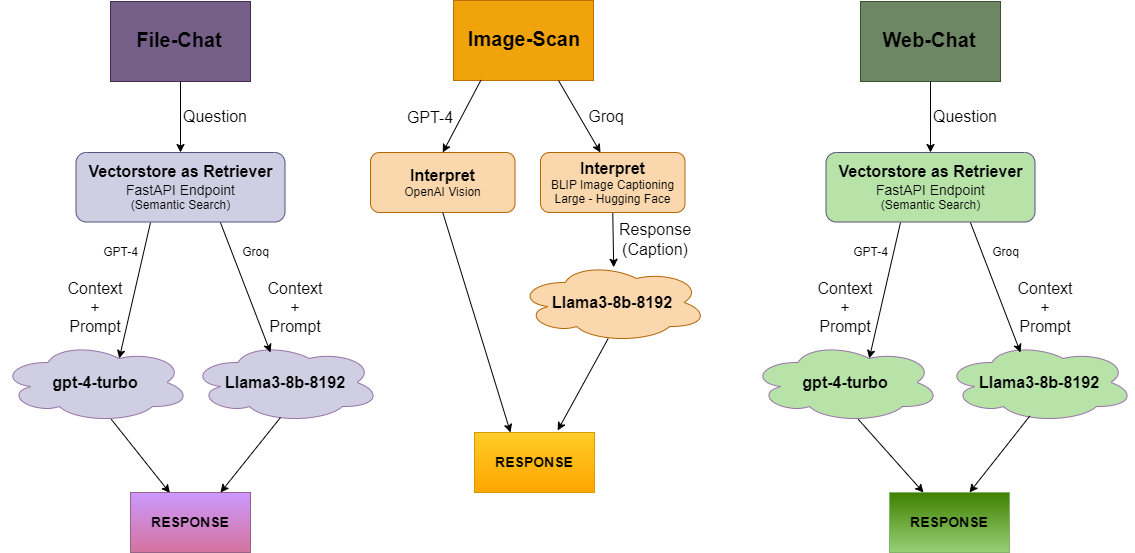

Data Retrieval & Synthesis

Here are the diagrams showing the data retrieval and synthesis workflow of BrainBot components.
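Retrieval boils down to ranking stored chunk vectors by their similarity to the query vector and keeping the top k. The following sketch illustrates the idea in plain Python with cosine similarity and hand-written three-dimensional vectors. These are assumptions for illustration only: in the application the vectors come from an embedding model, the search is delegated to FAISS, and the distance metric actually used depends on how the vector store is configured. The default `k=5` mirrors the retriever setting in the backend.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vector, indexed_chunks, k=5):
    """Return the k chunk texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Toy index of (text, vector) pairs; the vectors are made up for the example.
indexed_chunks = [
    ("chunk about pricing", [0.9, 0.1, 0.0]),
    ("chunk about deployment", [0.1, 0.9, 0.2]),
    ("chunk about licensing", [0.7, 0.2, 0.1]),
]
results = top_k([1.0, 0.0, 0.0], indexed_chunks, k=2)
print(results)
```

The retrieved chunks are then joined and placed into the prompt as context for the LLM.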

Implementation using FastAPI, LLMs, LangChain, Streamlit, and Docker

Installing necessary libraries

Install the following libraries from the requirements.txt file.

- bs4

- docx2txt

- faiss-cpu

- fastapi

- langchain

- langchain-community

- langchain-core

- langchain-groq

- langchain-openai

- Pillow

- pydantic

- pypdf

- python-dotenv

- python-pptx

- requests

- sentence-transformers

- streamlit

- validators

- uvicorn

Hugging Face - Docker Configuration

- README.md

This is the default configuration file for Docker on the Hugging Face platform.

```yaml
title: BrainBot
emoji: 🤖
colorFrom: pink
colorTo: yellow
sdk: docker
app_port: 8501
pinned: false
license: apache-2.0
```

- Dockerfile

This is the Docker configuration file containing all application related configurations.

```dockerfile
# Comments are provided throughout this file to help you get started.
# If you need more help, visit the Dockerfile reference guide at
# https://docs.docker.com/go/dockerfile-reference/

# Want to help us make this template better? Share your feedback here: https://forms.gle/ybq9Krt8jtBL3iCk7

ARG PYTHON_VERSION=3.11.9
FROM python:${PYTHON_VERSION}-slim as base

# Prevents Python from writing pyc files.
ENV PYTHONDONTWRITEBYTECODE=1

# Keeps Python from buffering stdout and stderr to avoid situations where
# the application crashes without emitting any logs due to buffering.
ENV PYTHONUNBUFFERED=1

WORKDIR /app

# Create a non-privileged user that the app will run under.
# See https://docs.docker.com/go/dockerfile-user-best-practices/
ARG UID=10001
RUN adduser \
    --disabled-password \
    --gecos "" \
    --home "/nonexistent" \
    --shell "/sbin/nologin" \
    --no-create-home \
    --uid "${UID}" \
    appuser

# Download dependencies as a separate step to take advantage of Docker's caching.
# Leverage a cache mount to /root/.cache/pip to speed up subsequent builds.
# Leverage a bind mount to requirements.txt to avoid having to copy them into
# this layer.
RUN --mount=type=cache,target=/root/.cache/pip \
    --mount=type=bind,source=requirements.txt,target=requirements.txt \
    python -m pip install -r requirements.txt

# Create a directory named 'data' and assign its ownership to appuser
RUN mkdir -p /data
RUN chown appuser /data

# Create a directory named 'images' and assign its ownership to appuser
RUN mkdir -p /images
RUN chown appuser /images

# Switch to the non-privileged user to run the application.
USER appuser

# Set the TRANSFORMERS_CACHE environment variable
ENV TRANSFORMERS_CACHE=/tmp/.cache/huggingface

# Create the cache folder with appropriate permissions
RUN mkdir -p $TRANSFORMERS_CACHE && chmod -R 777 $TRANSFORMERS_CACHE

# Copy the source code into the container.
COPY . .

# Expose the port that the application listens on.
EXPOSE 7860
EXPOSE 8501

# Run the application.
CMD ["bash", "-c", "uvicorn main:app --host 0.0.0.0 --port 7860 & streamlit run BrainBot.py --server.port 8501 --server.enableXsrfProtection false"]
```

CSS

- styles.css

This file contains application-wide CSS styles.

```css
img {
    border-radius: 10px;
}

.stApp {
    background: linear-gradient(to bottom, rgba(247,251,252,1) 0%,rgba(217,237,242,1) 40%,rgba(173,217,228,1) 100%); /* W3C, IE10+, FF16+, Chrome26+, Opera12+, Safari7+ */
}

ul li:nth-child(2) {
    display: none;
}
```

Helper Functions

- utils.py

This file contains a few helper functions used by different modules. Sharing them enables code reuse and reduces redundancy.

```python
import re
import os

## HELPER FUNCTIONS
## ------------------------------------------------------------------------------------------
# Function to format response received from a FastAPI endpoint
def format_response(response_text):
    # Replace \n with newline character in markdown
    response_text = re.sub(r'\\n', '\n', response_text)

    # Check for bullet points and replace with markdown syntax
    response_text = re.sub(r'^\s*-\s+(.*)$', r'* \1', response_text, flags=re.MULTILINE)

    # Check for numbered lists and replace with markdown syntax
    response_text = re.sub(r'^\s*\d+\.\s+(.*)$', r'1. \1', response_text, flags=re.MULTILINE)

    # Check for headings and replace with markdown syntax
    response_text = re.sub(r'^\s*(#+)\s+(.*)$', r'\1 \2', response_text, flags=re.MULTILINE)

    return response_text

# Function to unlink all images when the application closes
def unlink_images(folder_path):
    # List all files in the folder
    image_files = os.listdir(folder_path)

    # Iterate over image files and unlink them
    for image_file in image_files:
        try:
            os.unlink(os.path.join(folder_path, image_file))
            print(f"Deleted: {image_file}")
        except Exception as e:
            print(f"Error deleting {image_file}: {e}")
```
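To see what `format_response` does to a typical backend reply, the short example below repeats the same four substitutions and runs them on a sample string. The sample text is made up for illustration; it contains literal `\n` sequences (a backslash followed by `n`), as can happen when a string returned by an endpoint is read through `response.text`.

```python
import re

def format_response(response_text):
    # Same substitutions as the helper in utils.py
    response_text = re.sub(r'\\n', '\n', response_text)
    response_text = re.sub(r'^\s*-\s+(.*)$', r'* \1', response_text, flags=re.MULTILINE)
    response_text = re.sub(r'^\s*\d+\.\s+(.*)$', r'1. \1', response_text, flags=re.MULTILINE)
    response_text = re.sub(r'^\s*(#+)\s+(.*)$', r'\1 \2', response_text, flags=re.MULTILINE)
    return response_text

# The doubled backslashes produce a literal backslash followed by "n" in the string.
raw = "Steps:\\n- load the file\\n- split it\\n2. embed the chunks"
formatted = format_response(raw)
print(formatted)
```

Every numbered item is rewritten to start with `1.`, which is fine for display because Markdown renderers number ordered lists automatically.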

Building the Frontend

- BrainBot.py

This is the entry point to the frontend of the application. It lets the user select an LLM (GPT-4 or the Groq API) and choose one of three tasks:

- Chat with a file or document - PDF, DOCX, TXT, PPTX, or HTML

- Scan and interpret an image

- Chat with a Webpage

```python
import streamlit as st
import requests
import tempfile
import validators
import os

# Custom CSS
with open('styles.css') as f:
    css = f.read()

st.markdown(f'<style>{css}</style>', unsafe_allow_html=True)

## FUNCTIONS
## -------------------------------------------------------------------------------------------
# Function to save the uploaded file as a temporary file and return its path.
def save_uploaded_file(uploaded_file):
    file_content = uploaded_file.read()  # Load the document

    # Create a temporary file
    with tempfile.NamedTemporaryFile(delete=False) as temp_file:
        temp_file.write(file_content)  # Write the uploaded file content to the temporary file
        temp_file_path = temp_file.name  # Get the path of the temporary file
    return temp_file_path

# Function to save the uploaded image as a temporary file and return its path.
def save_uploaded_image(uploaded_image):
    # Create a temporary .png file that is kept on disk after it is closed
    with tempfile.NamedTemporaryFile(suffix=".png", delete=False) as temp_file:
        # Write the uploaded image content to the temporary file
        temp_file.write(uploaded_image.read())
        temp_file_path = temp_file.name
    return temp_file_path

## LOGO and TITLE
## -------------------------------------------------------------------------------------------
# Show the logo and title side by side
col1, col2 = st.columns([1, 4])
with col1:
    st.image("brainbot.png", use_column_width=True,)
with col2:
    st.title("Hi, I am BrainBot - Your AI Learning Assistant!")

# Main content
st.header("Upload any 📄 file, 🖼️ image, or 🔗 webpage link and ask me anything from it!")
st.subheader("Supported file formats: PDF, DOCX, TXT, PPTX, HTML")
st.subheader("Supported image formats: PNG, JPG, JPEG")

col3, col4 = st.columns([2, 3])
with col3:
    ## LLM OPTIONS
    # Select the LLM to use (either GPT-4 or GROQ)
    llm = st.radio(
        "Choose the LLM", ["GPT-4", "GROQ"],
        index=1
    )

    st.session_state["llm"] = llm

## CHAT OPTIONS - FILE, IMAGE, WEBSITE
## -------------------------------------------------------------------------------------------
# User Inputs
uploaded_file = None
uploaded_image = None
website_link = None
question = None

if llm == "GPT-4" and "api_key_flag" not in st.session_state:
    st.warning("Please enter your OpenAI API key.")
    # Get OpenAI API Key from user
    openai_api_key = st.sidebar.text_input("Enter your OpenAI API Key", type="password")
    # Send POST request to a FastAPI endpoint to set the OpenAI API key as an environment
    # variable
    with st.spinner("Activating OpenAI API..."):
        try:
            FASTAPI_URL = "http://localhost:7860/set_api_key"
            data = {"api_key": openai_api_key}
            if openai_api_key:
                response = requests.post(FASTAPI_URL, json=data)
                st.sidebar.success(response.text)
                st.session_state['api_key_flag'] = True
                # st.rerun() replaces the deprecated st.experimental_rerun()
                st.rerun()
        except Exception as e:
            st.switch_page("pages/error.py")

with col4:
    if llm == "GROQ" or "api_key_flag" in st.session_state:
        # Select to upload file, image, or link to chat with them
        upload_option = st.radio(
            "Select an option", ["📄 Upload File", "🖼️ Upload Image", "🔗 Upload Link"]
        )
        # Select an option to show the appropriate file_uploader
        if upload_option == "📄 Upload File":
            uploaded_file = st.file_uploader("Choose a file",
                                             type=["txt", "pdf", "docx", "pptx", "html"])
        elif upload_option == "🖼️ Upload Image":
            uploaded_image = st.file_uploader("Choose an image", type=["png", "jpg", "jpeg"])
        elif upload_option == "🔗 Upload Link":
            website_link = st.text_input("Enter a website URL")

## CHAT HISTORY
## -------------------------------------------------------------------------------------------
# Initialize an empty list to store chat messages with files
if 'file_chat_history' not in st.session_state:
    st.session_state['file_chat_history'] = []
# Initialize an empty list to store image interpretations
if 'image_chat_history' not in st.session_state:
    st.session_state['image_chat_history'] = []
# Initialize an empty list to store chat messages with websites
if 'web_chat_history' not in st.session_state:
    st.session_state['web_chat_history'] = []

## FILE
## -------------------------------------------------------------------------------------------
# Load the uploaded file, then save it into a vector store, and enable the input field to ask
# a question
st.session_state['uploaded_file'] = False
if uploaded_file is not None:
    with st.spinner("Loading file..."):
        # Save the uploaded file to a temporary path
        temp_file_path = save_uploaded_file(uploaded_file)

        try:
            # Send POST request to a FastAPI endpoint to load the file into a vectorstore
            data = {"file_path": temp_file_path, "file_type": uploaded_file.type}
            FASTAPI_URL = f"http://localhost:7860/load_file/{llm}"
            response = requests.post(FASTAPI_URL, json=data)
            st.success(response.text)
            st.session_state['current_file'] = uploaded_file.name
            st.session_state['uploaded_file'] = True
            st.switch_page("pages/File-chat.py")
        except Exception as e:
            st.switch_page("pages/error.py")

## IMAGE
## -------------------------------------------------------------------------------------------
# Load the uploaded image if user uploads an image, then interpret the image
st.session_state['uploaded_image'] = False
if uploaded_image is not None:
    try:
        # Save uploaded image to a temporary file
        temp_img_path = save_uploaded_image(uploaded_image)
    except Exception as e:
        st.switch_page("pages/error.py")

    st.session_state['temp_img_path'] = temp_img_path
    st.session_state['current_image'] = uploaded_image.name
    st.session_state['uploaded_image'] = True
    st.switch_page("pages/Image-scan.py")

## WEBSITE LINK
## -------------------------------------------------------------------------------------------
# Load the website content, then save it into a vector store, and enable the input field to
# ask a question
st.session_state['uploaded_link'] = False
if website_link is not None:
    if website_link:
        # Ensure that the user has entered a correct URL
        if validators.url(website_link):
            try:
                # Send POST request to a FastAPI endpoint to scrape the webpage and load its text
                # into a vector store
                FASTAPI_URL = f"http://localhost:7860/load_link/{llm}"
                data = {"website_link": website_link}
                with st.spinner("Loading website..."):
                    response = requests.post(FASTAPI_URL, json=data)
                    st.success(response.text)
                    st.session_state['current_website'] = website_link
                    st.session_state['uploaded_link'] = True
                    st.switch_page("pages/Web-chat.py")
            except Exception as e:
                st.switch_page("pages/error.py")
        else:
            st.error("Invalid URL. Please enter a valid URL.")
```
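The frontend passes uploads to the backend by file path rather than by content, which works because both processes run in the same container and share a filesystem. The sketch below isolates that hand-off pattern using only the standard library. The helper name `save_bytes_to_temp` is hypothetical, and the cleanup step mirrors what the backend does with `os.unlink` after loading a file.

```python
import os
import tempfile

def save_bytes_to_temp(content, suffix=""):
    """Write bytes to a temporary file that survives closing and return its path."""
    # delete=False keeps the file on disk after the with-block, so another
    # process can open it by path; the caller is responsible for removing it.
    with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as temp_file:
        temp_file.write(content)
        return temp_file.name

# Producer side (frontend): persist the upload and share only its path.
path = save_bytes_to_temp(b"hello BrainBot", suffix=".txt")

# Consumer side (backend): read the file, then delete it.
with open(path, "rb") as f:
    data = f.read()
os.unlink(path)
```

If the frontend and backend were ever deployed on separate machines, the file content itself would have to be sent in the request instead of a local path.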

- pages/File-chat.py

This is the File chat interface. It allows the user to ask questions about the uploaded file. The conversation with the user is maintained through a conversational retrieval chain.

```python
import streamlit as st
import requests
import utils

# Custom CSS
with open('styles.css') as f:
    css = f.read()

st.markdown(f'<style>{css}</style>', unsafe_allow_html=True)

## LOGO and TITLE
## -------------------------------------------------------------------------------------------
# Show the logo and title side by side
col1, col2 = st.columns([1, 4])
with col1:
    st.image("brainbot.png", width=100)
with col2:
    st.title("File-Chat")

question = None
llm = st.session_state["llm"]

if "current_file" in st.session_state:
    current_file = st.session_state['current_file']
    if st.sidebar.button("Upload New File"):
        st.switch_page("BrainBot.py")
    st.subheader("Your file has been uploaded successfully. You can now chat with it.")
    st.success(current_file)
    question = st.chat_input("Type your question here...")
else:
    st.warning("Upload a file to begin chat with it.")
    if st.button("Upload File"):
        st.switch_page("BrainBot.py")

## CHAT
# Clear the file chat history if user has uploaded a new file
if st.session_state['uploaded_file'] == True:
    st.session_state['file_chat_history'] = []

# Display the file chat history
for message in st.session_state['file_chat_history']:
    with st.chat_message("user"):
        st.write(message["Human"])
    with st.chat_message("ai"):
        st.markdown(utils.format_response(message["AI"]))

## QUESTION - WITH CHAT HISTORY
## -------------------------------------------------------------------------------------------
# Retrieve the answer to the question asked by the user
if question is not None:
    # Display the question entered by the user in chat
    with st.chat_message("user"):
        st.write(question)

    resource = "file"

    try:
        # Send POST request to a FastAPI endpoint to retrieve an answer for the question
        data = {"question": question, "resource": resource}
        FASTAPI_URL = f"http://localhost:7860/answer_with_chat_history/{llm}"

        with st.spinner("Generating response..."):
            response = requests.post(FASTAPI_URL, json=data)
            # Append the response to the chat history
            st.session_state['file_chat_history'].append({"Human": question, "AI": response.text})
            st.session_state['uploaded_file'] = False

        # Display the AI's response to the question in chat
        with st.chat_message("ai"):
            # Format the response
            formatted_response = utils.format_response(response.text)
            st.markdown(formatted_response)
    except Exception as e:
        # Page paths are resolved relative to the main script, so include "pages/"
        st.switch_page("pages/error.py")
```

- pages/Image-scan.py

This is the Image scan interface. It interprets the uploaded image and explains it in detail using either the OpenAI vision model or the BLIP image captioning large model from Hugging Face, depending on the chosen LLM.

```python
import streamlit as st
import requests
import utils

# Custom CSS
with open('styles.css') as f:
    css = f.read()

st.markdown(f'<style>{css}</style>', unsafe_allow_html=True)

## LOGO and TITLE
## -------------------------------------------------------------------------------------------
# Show the logo and title side by side
col1, col2 = st.columns([1, 4])
with col1:
    st.image("brainbot.png", width=100)
with col2:
    st.title("Image-Scan")

llm = st.session_state["llm"]

if "current_image" in st.session_state:
    current_image = st.session_state['current_image']
    if st.sidebar.button("Upload New Image"):
        st.switch_page("BrainBot.py")
    st.subheader("Your image has been uploaded successfully.")
    st.success(current_image)
else:
    st.warning("Upload an image to interpret it.")
    if st.button("Upload Image"):
        st.switch_page("BrainBot.py")

## CHAT
# Clear the image chat history if user has uploaded a new image
if st.session_state['uploaded_image'] == True:
    st.session_state['image_chat_history'] = []

# Display the image chat history
for image in st.session_state['image_chat_history']:
    with st.chat_message("user"):
        st.image(image["path"], caption=current_image)
    with st.chat_message("ai"):
        st.markdown(utils.format_response(image["Description"]))

## IMAGE
# Display the image uploaded by the user
if "temp_img_path" in st.session_state and st.session_state['uploaded_image'] == True:
    temp_img_path = st.session_state['temp_img_path']
    with st.chat_message("human"):
        st.image(temp_img_path, width=300, caption=current_image)

    try:
        # Send POST request to a FastAPI endpoint with temporary image path
        FASTAPI_URL = f"http://localhost:7860/image/{llm}"
        with st.spinner("Interpreting image..."):
            response = requests.post(FASTAPI_URL, json={"image_path": temp_img_path})
            # Append the image and response to the chat history
            st.session_state['image_chat_history'].append({"path": temp_img_path, "Description": response.text})
            st.session_state['uploaded_image'] = False

        # Display the AI's interpretation of the image in chat
        with st.chat_message("assistant"):
            # Format the response
            formatted_response = utils.format_response(response.text)
            st.markdown(formatted_response)
    except Exception as e:
        # Page paths are resolved relative to the main script, so include "pages/"
        st.switch_page("pages/error.py")
```

- pages/Web-chat.py

This is the Web chat interface. It allows the user to ask questions about the content of the submitted webpage link and get the required information.

```python
import streamlit as st
import requests
import utils

# Custom CSS
with open('styles.css') as f:
    css = f.read()

st.markdown(f'<style>{css}</style>', unsafe_allow_html=True)

## LOGO and TITLE
## -------------------------------------------------------------------------------------------
# Show the logo and title side by side
col1, col2 = st.columns([1, 4])
with col1:
    st.image("brainbot.png", width=100)
with col2:
    st.title("Web-Chat")

question = None
llm = st.session_state["llm"]

if "current_website" in st.session_state:
    current_website = st.session_state['current_website']
    if st.sidebar.button("Upload New Webpage Link"):
        st.switch_page("BrainBot.py")
    st.subheader("Your website content has been uploaded successfully. You can now chat with it.")
    st.success(current_website)
    question = st.chat_input("Type your question here...")
else:
    st.warning("Upload a webpage link to begin chat with it.")
    if st.button("Upload Webpage Link"):
        st.switch_page("BrainBot.py")

## CHAT
# Clear the web chat history if user has uploaded a new webpage link
if st.session_state['uploaded_link'] == True:
    st.session_state['web_chat_history'] = []

# Display the web chat history
for message in st.session_state['web_chat_history']:
    with st.chat_message("user"):
        st.write(message["Human"])
    with st.chat_message("ai"):
        st.markdown(utils.format_response(message["AI"]))

## QUESTION - WITH CHAT HISTORY
## -------------------------------------------------------------------------------------------
# Retrieve the answer to the question asked by the user
if question is not None:
    # Display the question entered by the user in chat
    with st.chat_message("user"):
        st.write(question)

    resource = "web"

    try:
        # Send POST request to a FastAPI endpoint to retrieve an answer for the question
        data = {"question": question, "resource": resource}
        FASTAPI_URL = f"http://localhost:7860/answer_with_chat_history/{llm}"

        with st.spinner("Generating response..."):
            response = requests.post(FASTAPI_URL, json=data)
            # Append the response to the chat history
            st.session_state['web_chat_history'].append({"Human": question, "AI": response.text})
            st.session_state['uploaded_link'] = False

        # Display the AI's response to the question in chat
        with st.chat_message("ai"):
            # Format the response
            formatted_response = utils.format_response(response.text)
            st.markdown(formatted_response)
    except Exception as e:
        # Page paths are resolved relative to the main script, so include "pages/"
        st.switch_page("pages/error.py")
```

- pages/error.py

This is the interface to the error page. This page is displayed whenever an error or exception occurs.

```python
import streamlit as st

# Custom CSS
with open('styles.css') as f:
    css = f.read()

st.markdown(f'<style>{css}</style>', unsafe_allow_html=True)

## LOGO and TITLE
## -------------------------------------------------------------------------------------------
# Show the logo and title side by side
col1, col2 = st.columns([1, 4])
with col1:
    st.image("brainbot.png", width=100)
with col2:
    st.title("Error")

st.error("Oops - Something went wrong! Please try again.")
```

Building the Backend

- main.py

This is the main file containing all the backend functionality including FastAPI endpoints and LangChain implementation of RAG for conversation with the user. It implements the following FastAPI endpoints to control the application workflow:

- /set_api_key - It sets the OpenAI API key entered by the user into the system environment variables.

- /load_file - It loads the file using the appropriate loader or parser, splits it into document chunks, and uploads the document embeddings into a vectorstore.

- /image - It interprets the image using either the OpenAI vision model or, for the free option, BLIP-image-captioning-large from Hugging Face followed by Llama3-8b-8192 from Groq.

- /load_link - It loads the website content through scraping, splits it into document chunks, and uploads the document embeddings into a vectorstore.

- /answer_with_chat_history - It answers the question by using the vectorstore as a retriever and passing the retrieved context to the LLM through a conversational retrieval chain that takes the chat history into account.
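The /load_file endpoint described above has to pick a loader based on the MIME type of the upload. One way to express that choice is a dispatch table, which also makes the unsupported-type case explicit. This is only a sketch of the design idea and not how main.py is written: the names `LOADERS`, `load_plain_text`, and `load_by_mime_type` are hypothetical, and only a plain-text loader is registered here.

```python
import os
import tempfile

def load_plain_text(path):
    """Placeholder loader: read the whole file as UTF-8 text."""
    with open(path, "r", encoding="utf-8") as f:
        return f.read()

# Hypothetical registry keyed by MIME type. A full application would also
# register loaders for PDF, DOCX, PPTX, and HTML here.
LOADERS = {
    "text/plain": load_plain_text,
}

def load_by_mime_type(path, mime_type):
    """Look up the loader for a MIME type and fail clearly if none is registered."""
    try:
        loader = LOADERS[mime_type]
    except KeyError:
        raise ValueError(f"Unsupported file type: {mime_type}") from None
    return loader(path)

# Usage: write a small text file, load it through the registry, then clean up.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False, encoding="utf-8") as tmp:
    tmp.write("sample notes")
text = load_by_mime_type(tmp.name, "text/plain")
os.unlink(tmp.name)
print(text)
```

Adding support for a new format then means registering one more function rather than extending a chain of conditions.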

from fastapi import FastAPI, HTTPException from pydantic import BaseModel from contextlib import asynccontextmanager from langchain_community.document_loaders import PyPDFLoader from langchain_community.document_loaders import WebBaseLoader from langchain.text_splitter import RecursiveCharacterTextSplitter from langchain_community.vectorstores import FAISS from langchain_openai import OpenAIEmbeddings from langchain_community.embeddings import HuggingFaceEmbeddings from langchain_openai import ChatOpenAI from langchain_groq import ChatGroq from langchain.chains import create_history_aware_retriever, create_retrieval_chain from langchain.chains.combine_documents import create_stuff_documents_chain from langchain_community.chat_message_histories import ChatMessageHistory from langchain_core.chat_history import BaseChatMessageHistory from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder from langchain_core.runnables.history import RunnableWithMessageHistory from transformers import pipeline from bs4 import BeautifulSoup from dotenv import load_dotenv from PIL import Image import base64 import requests import docx2txt import pptx import os import utils ## APPLICATION LIFESPAN # Load the environment variables using FastAPI lifespan event so that they are available throughout the application @asynccontextmanager async def lifespan(app: FastAPI): # Load the environment variables load_dotenv() #os.environ['OPENAI_API_KEY'] = os.getenv("OPENAI_API_KEY") ## Langsmith tracking os.environ["LANGCHAIN_TRACING_V2"] = "true" # Enable tracing to capture all the monitoring results os.environ["LANGCHAIN_API_KEY"] = os.getenv("LANGCHAIN_API_KEY") ## load the Groq API key os.environ['GROQ_API_KEY'] = os.getenv("GROQ_API_KEY") os.environ['HF_TOKEN'] = os.getenv("HF_TOKEN") os.environ['NGROK_AUTHTOKEN'] = os.getenv("NGROK_AUTHTOKEN") global image_to_text image_to_text = pipeline("image-to-text", model="Salesforce/blip-image-captioning-large") yield # Delete all the 
temporary images utils.unlink_images("/images") ## FASTAPI APP # Initialize the FastAPI app app = FastAPI(lifespan=lifespan, docs_url="/") ## PYDANTIC MODELS # Define an APIKey Pydantic model for the request body class APIKey(BaseModel): api_key: str # Define a FileInfo Pydantic model for the request body class FileInfo(BaseModel): file_path: str file_type: str # Define an Image Pydantic model for the request body class Image(BaseModel): image_path: str # Define a Website Pydantic model for the request body class Website(BaseModel): website_link: str # Define a Question Pydantic model for the request body class Question(BaseModel): question: str resource: str ## FUNCTIONS # Function to combine all documents def format_docs(docs): return "\n\n".join(doc.page_content for doc in docs) # Function to encode the image def encode_image(image_path): with open(image_path, "rb") as image_file: return base64.b64encode(image_file.read()).decode('utf-8') ## FASTAPI ENDPOINTS ## GET - / @app.get("/") async def welcome(): return "Welcome to Brainbot!" ## POST - /set_api_key @app.post("/set_api_key") async def set_api_key(api_key: APIKey): os.environ["OPENAI_API_KEY"] = api_key.api_key return "API key set successfully!" 
## POST - /load_file # Load the file, split it into document chunks, and upload the document embeddings into a vectorstore @app.post("/load_file/{llm}") async def load_file(llm: str, file_info: FileInfo): file_path = file_info.file_path file_type = file_info.file_type # Read the file and split it into document chunks try: # Initialize the text splitter text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200) # Check the file type and load each file according to its type if file_type == "application/pdf": # Read pdf file loader = PyPDFLoader(file_path) docs = loader.load() elif file_type == "application/vnd.openxmlformats-officedocument.wordprocessingml.document": # Read docx file text = docx2txt.process(file_path) docs = text_splitter.create_documents([text]) elif file_type == "text/plain": # Read txt file with open(file_path, 'r') as file: text = file.read() docs = text_splitter.create_documents([text]) elif file_type == "application/vnd.openxmlformats-officedocument.presentationml.presentation": # Read pptx file presentation = pptx.Presentation(file_path) # Initialize an empty list to store slide texts slide_texts = [] # Iterate through slides and extract text for slide in presentation.slides: # Initialize an empty string to store text for each slide slide_text = "" # Iterate through shapes in the slide for shape in slide.shapes: if hasattr(shape, "text"): slide_text += shape.text + "\n" # Add shape text to slide text # Append slide text to the list slide_texts.append(slide_text.strip()) docs = text_splitter.create_documents(slide_texts) elif file_type == "text/html": # Read html file with open(file_path, 'r') as file: soup = BeautifulSoup(file, 'html.parser') text = soup.get_text() docs = text_splitter.create_documents([text]) # Delete the temporary file os.unlink(file_path) # Split the document into chunks documents = text_splitter.split_documents(docs) if llm == "GPT-4": embeddings = OpenAIEmbeddings() elif llm == "GROQ": embeddings = 
HuggingFaceEmbeddings() # Save document embeddings into the FAISS vectorstore global file_vectorstore file_vectorstore = FAISS.from_documents(documents, embeddings) except Exception as e: # Handle errors raise HTTPException(status_code=500, detail=str(e.with_traceback)) return "File uploaded successfully!" ## POST - /image # Interpret the image using the LLM - OpenAI Vision or BLIP-image-captioning-large from Hugging Face @app.post("/image/{llm}") async def interpret_image(llm: str, image: Image): try: # Get the base64 string base64_image = encode_image(image.image_path) if llm == "GPT-4": headers = { "Content-Type": "application/json", "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}" } payload = { "model": "gpt-4-turbo", "messages": [ { "role": "user", "content": [ { "type": "text", "text": "What's in this image?" }, { "type": "image_url", "image_url": { "url": f"data:image/jpeg;base64,{base64_image}" } } ] } ], "max_tokens": 300 } response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload) response = response.json() # Extract description about the image description = response["choices"][0]["message"]["content"] elif llm == "GROQ": # Use image-to-text model from Hugging Face response = image_to_text(image.image_path) # Extract description about the image description = response[0]["generated_text"] chat = ChatGroq(temperature=0, groq_api_key=os.environ["GROQ_API_KEY"], model_name="Llama3-8b-8192") system = "You are an assistant to understand and interpret images." human = "{text}" prompt = ChatPromptTemplate.from_messages([("system", system), ("human", human)]) chain = prompt | chat text = f"Explain the following image description in a small paragraph. {description}" response = chain.invoke({"text": text}) description = str.capitalize(description) + ". 
" + response.content except Exception as e: # Handle errors raise HTTPException(status_code=500, detail=str(e)) return description ## POST - load_link # Load the website content through scraping, split it into document chunks, and upload the document # embeddings into a vectorstore @app.post("/load_link/{llm}") async def website_info(llm: str, link: Website): try: # load, chunk, and index the content of the html page loader = WebBaseLoader(web_paths=(link.website_link,),) global web_documents web_documents = loader.load() # split the document into chunks text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200) documents = text_splitter.split_documents(web_documents) if llm == "GPT-4": embeddings = OpenAIEmbeddings() elif llm == "GROQ": embeddings = HuggingFaceEmbeddings() # Save document embeddings into the FAISS vectorstore global website_vectorstore website_vectorstore = FAISS.from_documents(documents, embeddings) except Exception as e: # Handle errors raise HTTPException(status_code=500, detail=str(e)) return "Website loaded successfully!" 
## POST - /answer_with_chat_history
# Retrieve the answer to the question using LLM and the RAG chain maintaining the chat history

# Chat histories keyed by session ID. The store lives at module level so that the
# history persists across requests instead of being recreated on every call.
store = {}

def get_session_history(session_id: str) -> BaseChatMessageHistory:
    if session_id not in store:
        store[session_id] = ChatMessageHistory()
    return store[session_id]

@app.post("/answer_with_chat_history/{llm}")
async def get_answer_with_chat_history(llm: str, question: Question):
    user_question = question.question
    resource = question.resource
    selected_llm = llm
    try:
        # Initialize the LLM
        if selected_llm == "GPT-4":
            llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)
        elif selected_llm == "GROQ":
            llm = ChatGroq(groq_api_key=os.environ["GROQ_API_KEY"], model_name="Llama3-8b-8192")

        # extract relevant context from the document using the retriever with similarity search
        if resource == "file":
            retriever = file_vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 5})
        elif resource == "web":
            retriever = website_vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 5})

        ### Contextualize question ###
        contextualize_q_system_prompt = """Given a chat history and the latest user question \
which might reference context in the chat history, formulate a standalone question \
which can be understood without the chat history. Do NOT answer the question, \
just reformulate it if needed and otherwise return it as is."""
        contextualize_q_prompt = ChatPromptTemplate.from_messages(
            [
                ("system", contextualize_q_system_prompt),
                MessagesPlaceholder("chat_history"),
                ("human", "{input}"),
            ]
        )
        history_aware_retriever = create_history_aware_retriever(
            llm, retriever, contextualize_q_prompt
        )

        ### Answer question ###
        qa_system_prompt = """You are an assistant for question-answering tasks. \
Use the following pieces of retrieved context to answer the question. \
If you don't know the answer, just say that you don't know. \
Use three sentences maximum and keep the answer concise.\

{context}"""
        qa_prompt = ChatPromptTemplate.from_messages(
            [
                ("system", qa_system_prompt),
                MessagesPlaceholder("chat_history"),
                ("human", "{input}"),
            ]
        )
        question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)
        rag_chain = create_retrieval_chain(history_aware_retriever, question_answer_chain)

        ### Statefully manage chat history ###
        conversational_rag_chain = RunnableWithMessageHistory(
            rag_chain,
            get_session_history,
            input_messages_key="input",
            history_messages_key="chat_history",
            output_messages_key="answer",
        )
        response = conversational_rag_chain.invoke(
            {"input": user_question},
            config={
                "configurable": {"session_id": "abc123"}
            },  # constructs a key "abc123" in `store`.
        )["answer"]
    except Exception as e:
        # Handle errors
        raise HTTPException(status_code=500, detail=str(e))
    return response
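
The retriever above is configured with `search_kwargs={"k": 5}`, so the five chunks closest to the question embedding are passed to the LLM as context. As a rough illustration of what that ranking step does, the toy sketch below scores a few hypothetical 2-D vectors by Euclidean distance and keeps the nearest ones. The `top_k` helper, the chunk names, and the vectors are invented for this example; a real vectorstore such as FAISS works on high-dimensional embeddings and uses an optimized index rather than a linear scan.

```python
import math
from typing import Dict, List, Tuple


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 5) -> List[Tuple[str, float]]:
    """Return the k entries of `index` closest to `query` by Euclidean distance."""
    scored = [(name, math.dist(query, vector)) for name, vector in index.items()]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:k]


# Hypothetical chunk embeddings (real embeddings have hundreds of dimensions).
toy_index = {
    "chunk_a": [0.0, 0.0],
    "chunk_b": [1.0, 0.0],
    "chunk_c": [0.0, 3.0],
    "chunk_d": [5.0, 5.0],
}

nearest = top_k([0.9, 0.1], toy_index, k=2)
print([name for name, _ in nearest])
```

A larger `k` gives the LLM more context to draw on at the cost of a longer prompt, which is why the value is usually kept small.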

Challenges Faced

I faced the following challenges while developing this application:

1. Integration of Large Language Models

Challenge:

Integrating state-of-the-art large language models such as GPT-4 (OpenAI API) and Llama3-8b-8192 (GROQ API) was a significant challenge because of their cost and computational requirements. GPT-4 is a paid API, so it is not a feasible option for every user. Many LLMs on the Hugging Face platform, on the other hand, have intensive hardware requirements (GPUs and memory) in both the development and production environments.

Solution:

I experimented with different approaches to model integration, including pre-trained models from Hugging Face and the OpenAI API, and ultimately adopted a combination of pre-trained models and API use. The OpenAI gpt-4-turbo model is multimodal and can handle both textual and visual content. Its responses are also more organized and comprehensive for text as well as images, so it has been kept as an option for users who want it. For the free tier, the Llama3-8b-8192 model from the GROQ API is used for chatting with textual documents and webpages, and the pre-trained Hugging Face image-to-text model Salesforce/blip-image-captioning-large is used for interpreting images. The one-line caption produced by this model is passed as a prompt to the GROQ API LLM (Llama3-8b-8192) for further elaboration, although this step carries some risk of hallucination.
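
The selection logic described above can be summarized in a short sketch. It only illustrates the routing and is not the application's actual code: the function name `plan_models` and the `content_type` labels are hypothetical, while the model identifiers and the "GPT-4" / "GROQ" choices come from the text and the API routes.

```python
from typing import List

GPT4 = "gpt-4-turbo"                             # paid, multimodal (OpenAI API)
LLAMA3 = "Llama3-8b-8192"                        # free tier, text only (GROQ API)
BLIP = "Salesforce/blip-image-captioning-large"  # image-to-text captioning model


def plan_models(llm_choice: str, content_type: str) -> List[str]:
    """Return the models a request passes through, in order.

    `llm_choice` is "GPT-4" or "GROQ"; `content_type` is "text" or "image"
    (hypothetical labels used only in this sketch).
    """
    if llm_choice not in ("GPT-4", "GROQ"):
        raise ValueError(f"Unknown LLM choice: {llm_choice!r}")
    if content_type not in ("text", "image"):
        raise ValueError(f"Unknown content type: {content_type!r}")

    if llm_choice == "GPT-4":
        # The multimodal model handles text and images directly.
        return [GPT4]
    if content_type == "image":
        # Free tier: caption the image first, then let the text LLM elaborate.
        return [BLIP, LLAMA3]
    return [LLAMA3]


print(plan_models("GROQ", "image"))
```

The two-step image path is where hallucination can creep in, because the text model only ever sees the short caption and never the image itself.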

2. Containerization and Deployment

Challenge:

Containerizing the application with Docker was a challenge because, by default, the application did not have write permission on its directories inside the container and could not create new directories at runtime. Launching the Streamlit frontend was another point of concern: the Docker container launched it smoothly on the local system, but when it was deployed on Hugging Face, the frontend did not launch.

Solution:

The directory permission issue was resolved by adding commands to the Dockerfile that create the required directories and assign their permissions to the current user. The Streamlit frontend launch was fixed by specifying the appropriate port in the README.md configuration file.
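
The fix described above lives in the Dockerfile. As a complementary safeguard, an application can also check at startup that the directories it needs exist and are writable, so that a permissions problem fails fast with a clear message instead of surfacing later during an upload. The sketch below is an illustration under stated assumptions and is not part of BrainBot: the helper `ensure_writable_dirs` and the directory names are hypothetical.

```python
import os
import tempfile
from pathlib import Path
from typing import Iterable, List


def ensure_writable_dirs(paths: Iterable[str]) -> List[Path]:
    """Create each directory if it is missing and confirm it is writable."""
    ready = []
    for raw in paths:
        directory = Path(raw)
        try:
            directory.mkdir(parents=True, exist_ok=True)
            # Creating a throwaway file is a direct test of write access.
            with tempfile.TemporaryFile(dir=directory):
                pass
        except OSError as exc:
            raise RuntimeError(f"Directory {directory} is not writable: {exc}") from exc
        ready.append(directory)
    return ready


if __name__ == "__main__":
    # Demonstrate against a temporary base directory; the sub-directory
    # names are hypothetical stand-ins for the app's working folders.
    with tempfile.TemporaryDirectory() as base:
        for directory in ensure_writable_dirs(
            [os.path.join(base, "uploads"), os.path.join(base, "cache", "images")]
        ):
            print("ready:", directory.name)
```

A check like this does not replace setting ownership in the image; it only makes a misconfigured container easier to diagnose.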

3. User Experience Design

Challenge:

Designing an intuitive and user-friendly interface that seamlessly integrates with the AI functionality posed a challenge, especially considering the diverse range of content types supported by the application.

Solution:

Extensive user research and iterative design processes were employed to refine the user interface and ensure a smooth user experience.

Solutions Adopted

1. Model Selection

To address the challenge of integrating complex large language models, I combined pre-trained models with API-based models. This involved selecting appropriate pre-trained models from Hugging Face and the GROQ API, and offering a further choice of LLM through the OpenAI API.

2. Infrastructure Optimization

Docker containerization was used to package the application and its dependencies, enabling consistent deployment across different environments. Additionally, the application was deployed on Hugging Face's cloud-based infrastructure for scalability and reliability.

3. User Experience Enhancement

Through repeated design iterations and user feedback, the user interface was refined to improve usability and accessibility. Features such as drag-and-drop file uploads, real-time chat interaction, and customizable settings were implemented to enhance the overall user experience.

Key Learnings

Implementing this project has been a great learning experience for me both technically and non-technically.

1. Importance of Collaboration

Collaboration among peers and instructors from diverse backgrounds, including software development, machine learning, and user experience design, was essential for the success of the project. Effective communication facilitated the integration of various components and the resolution of complex challenges.

2. Continuous Improvement

The iterative nature of the development process highlighted the importance of continuous improvement. Regular feedback loops and user testing made it possible to identify areas for enhancement and implement improvements throughout the project lifecycle.

3. Flexibility and Adaptability

The dynamic nature of the project necessitated flexibility and adaptability in response to evolving requirements and challenges. Embracing agile methodologies and maintaining a mindset of continuous learning and adaptation were critical for navigating uncertainties and achieving project goals.

Future Directions

Moving forward, several opportunities exist for further enhancement and expansion of the BrainBot platform:

- Enhanced Large Language Models:

Continuously integrating state-of-the-art large language models and exploring novel techniques for improving conversational AI capabilities.

- Advanced Features:

Introducing advanced features such as multi-modal interaction through speech, personalized learning recommendations, personalized assessments from the uploaded content, and collaborative learning environments.

- Community Engagement:

Engaging with the user community to gather feedback, prioritize feature requests, and foster a collaborative development ecosystem.

Conclusion

In conclusion, the development of BrainBot presented various challenges, ranging from model integration to user experience design. Through collaborative efforts and innovative solutions, these challenges were successfully addressed, leading to the creation of a powerful AI learning assistant. The project highlighted the importance of collaboration, continuous improvement, and adaptability in navigating complex development endeavors. As BrainBot continues to evolve, it holds great potential to revolutionize the way users interact with and learn from digital content.