AI-Powered Podcast Summarization and Intelligent YouTube Recommendations: Enhancing Audio Content Discovery with NLP and Speech Processing

Abstract

In an era dominated by digital media, podcast consumption has surged, necessitating efficient ways to process and extract insights from long-form audio content. This research introduces an AI-powered Podcast Summarization and YouTube Recommendation System that leverages state-of-the-art speech-to-text, natural language processing (NLP), and deep learning models to transcribe, summarize, and recommend relevant podcasts. Our solution integrates OpenAI's Whisper, Google's Long-T5 summarization model, spaCy's Named Entity Recognition (NER), and Deepgram's Text-to-Speech (TTS) API within a Streamlit-based user interface, providing users with a seamless experience for summarizing and exploring podcast content. This paper explores the system's architecture, underlying models, implementation challenges, coding methodologies, adoption of new technologies, and future research opportunities in the field of AI-driven media summarization.

1. Introduction

As digital audio content continues to expand, listeners often struggle to sift through long-form discussions for key takeaways. Traditional manual methods of summarization and podcast discovery are time-consuming and inefficient. This study proposes a fully automated AI-driven pipeline that simplifies transcription, summarization, keyword extraction, and podcast recommendation to enhance content accessibility and discovery.

Key Contributions:

State-of-the-art Speech Recognition: OpenAI's Whisper model for high-accuracy transcription.

Advanced Summarization: Google's Long-T5 Transformer for abstractive summarization.

Keyword Extraction for Context Awareness: spaCy's Named Entity Recognition (NER) for extracting key topics.

Smart Podcast Recommendations: Utilizing YouTube’s API to retrieve similar long-form content.

Text-to-Speech Integration: Employing Deepgram's neural TTS models to convert summaries into audio.

Optimized Codebase: A modular and scalable Python-based implementation.

User-Friendly Web Interface: Built with Streamlit for intuitive interaction.

2. Technology Stack & Implementation

2.1 Core Technologies & Frameworks

🔹 Programming Language: Python

🔹 Speech Processing: OpenAI Whisper ASR

🔹 Summarization Model: Google Long-T5 Transformer

🔹 Named Entity Recognition: spaCy NLP model

🔹 Recommendation System: YouTube API with keyword-based filtering

🔹 Text-to-Speech (TTS): Deepgram API

🔹 Web Framework: Streamlit

🔹 Audio Handling: yt-dlp & pydub for YouTube audio conversion

2.2 System Architecture & Workflow

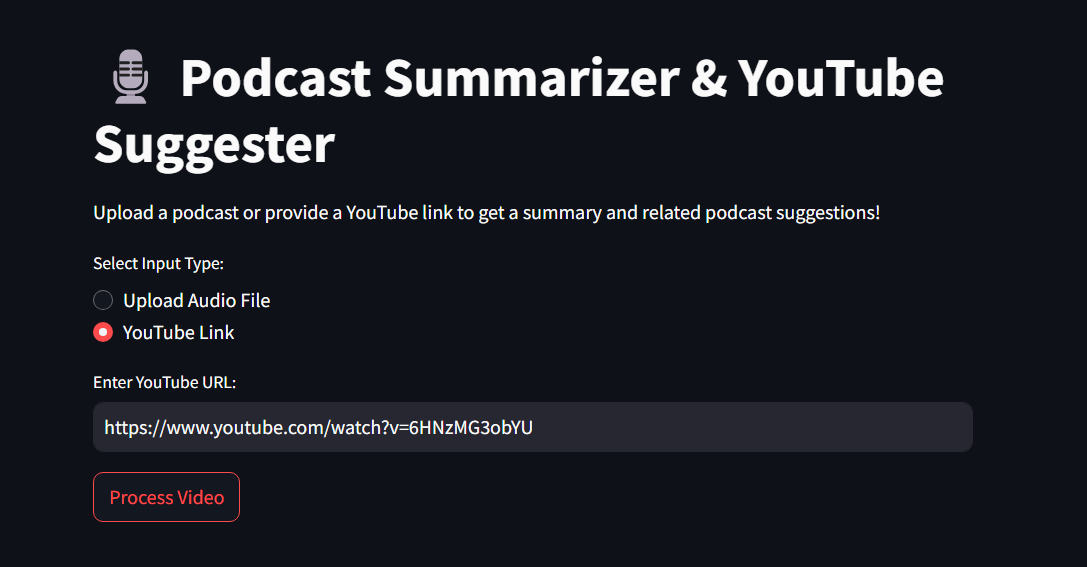

1)User Input: Users upload an MP3 file or provide a YouTube link.

2)Audio Processing & Transcription:

If a YouTube link is provided, the system downloads and converts the audio via yt-dlp.

The Whisper ASR model transcribes the audio into text.

3)Summarization & Keyword Extraction:

The transcript is summarized using Google’s Long-T5 model.

spaCy NER extracts key topics from the transcript.

4)Podcast Recommendation:

Extracted keywords are used to query YouTube’s API for related content.

5)Text-to-Speech Conversion:

Summaries are synthesized into speech using Deepgram API.

6)User Interface:

Displaying transcriptions, summaries, keywords, and recommendations on a Streamlit dashboard.

Code Snippet: Summarization & Keyword Extraction

from transformers import pipeline import spacy def summarize_text(text): summarizer = pipeline("summarization", model="google/long-t5-tglobal-base") summary = summarizer(text, max_length=250, min_length=50, do_sample=False) return summary[0]['summary_text'] def extract_keywords(text): nlp = spacy.load("en_core_web_sm") doc = nlp(text) return list(set(ent.text for ent in doc.ents))

3. Adoption of Advanced AI Models

3.1 Whisper ASR for Speech Recognition

✅Transformer-based architecture ensures highly accurate speech-to-text conversion.

✅Robust against background noise and various accents.

✅Supports multi-language transcription, making it adaptable for global content.

3.2 Google Long-T5 for Abstractive Summarization

✅Optimized for handling long-form text summarization.

✅Uses global attention mechanisms to process extended sequences.

✅Enhances coherence and readability of podcast summaries.

3.3 spaCy NER for Context-Aware Recommendations

✅Extracts relevant named entities to improve recommendation queries.

✅Ensures contextually relevant podcast suggestions.

3.4 Deepgram TTS for Audio Playback

✅Converts summaries into natural-sounding speech.

✅Provides low-latency TTS synthesis for real-time interactions.

3.5 AI-Powered Podcast Discovery

✅Uses YouTube’s API to retrieve related podcasts dynamically.

✅Ensures personalized recommendations based on extracted keywords.

4. Experimental Results & Discussion

Preliminary testing indicates high transcription accuracy, with Whisper outperforming previous ASR models in handling noisy environments and diverse accents. The Long-T5 model effectively condenses lengthy transcripts into concise, information-rich summaries. Keyword-driven podcast recommendations yield relevant and engaging results.

5. Conclusion & Future Research

This study successfully integrates AI-based transcription, summarization, and recommendation systems to automate podcast content discovery. Our approach enhances user accessibility, efficiency, and engagement in consuming long-format audio content.

Future Research Directions:

1)Expanding Multi-Language Support to cater to a global audience.

2)Fine-tuning Summarization Models for better domain-specific performance.

3)Hybrid AI-based Recommendation Systems using collaborative filtering + content-based methods.

4)Deploying the System as a Cloud-Based API for enterprise-level adoption.

6. References

Radford, A. et al. (2022) - Whisper: A Robust Speech Recognition Model, OpenAI.

Raffel, C. et al. (2020) - Exploring the Limits of Transfer Learning with T5, Google Research.

Deepgram (2023) - Deepgram TTS API Documentation.

Honnibal, M. & Montani, I. (2020) - spaCy 2: Natural Language Processing in Python.

Developed By:

Absar Raashid

GitHub Repository: https://github.com/AbsarRaashid3/PodcastSummarizerAndYoutubeSuggester.git