Introduction

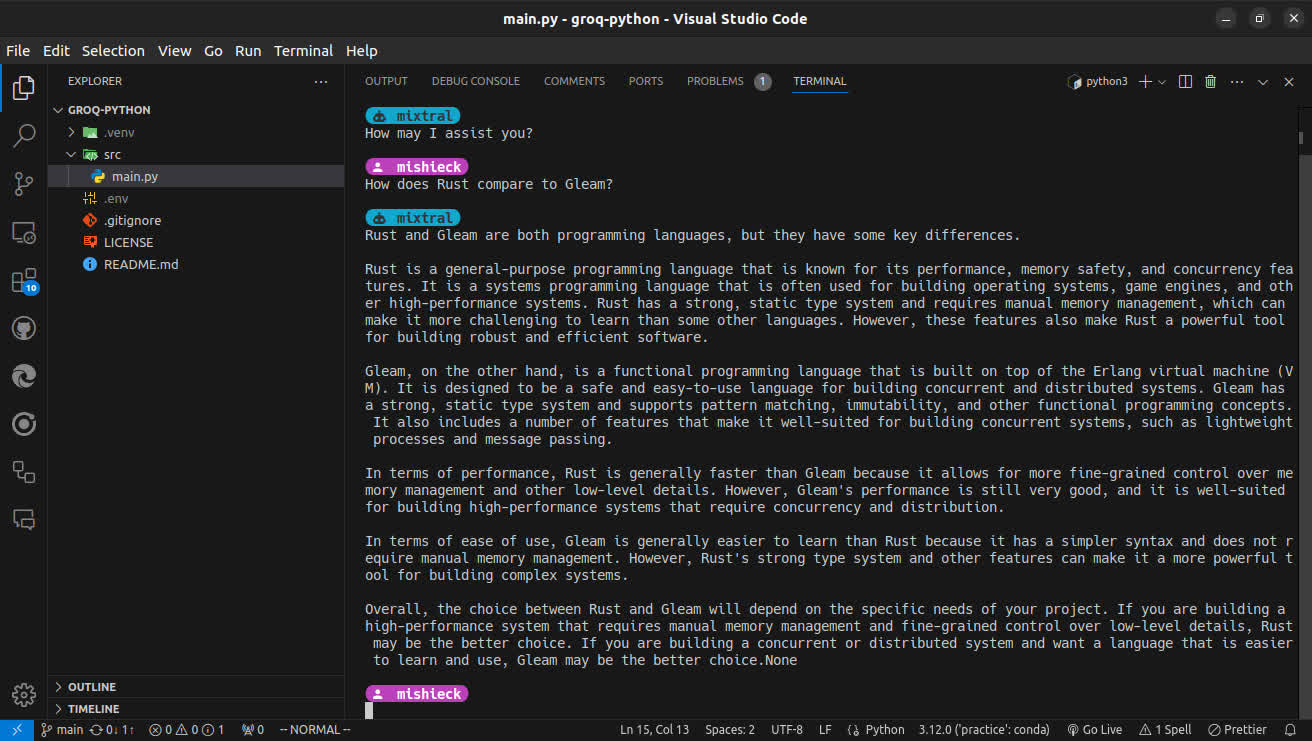

Groq is one of the fastest inference platforms in AI. Command-line interfaces (CLIs) are very fast when all you have to work with is text, but terminal user interfaces are boring by default. Terminal coloring can make a CLI look more interesting. This project combines these factors to create a very good user experience.

Implementation

Python was used to implement the app. The Groq SDK was used to communicate with the Groq inference platform, and Rich was used to color the headers for the AI and the user. Symbols represent the chatbot and the user: the app uses emojis by default and gives the user the option to use a Nerd Font for the symbols instead.

Setting Up the Environment

The following environment variables were used:

- GROQ_API_KEY: the API key used to access Groq services.

- MODEL: the AI model to use for the inference.
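A minimal sketch of how the two variables might be loaded at startup, assuming they are read once into module-level constants (main() below refers to them that way). The read_env helper is hypothetical; it treats a blank value the same as a missing one.

```python
import os
from typing import Optional


def read_env(name: str) -> Optional[str]:
    """Return the variable's value, or None if it is unset or blank (hypothetical helper)."""
    value = os.environ.get(name, '').strip()
    return value or None


# Loaded once at import time; a missing value is reported later by the startup check.
GROQ_API_KEY = read_env('GROQ_API_KEY')
MODEL = read_env('MODEL')
```

The Groq SDK's `Groq()` client also reads GROQ_API_KEY from the environment by default, so the key does not need to be passed to it explicitly.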

Entities

Data classes and type hints were used to create entities. The following sections give an overview of the entities that were involved.

Message

The Message data class was used to create messages used in the chat.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    role: str  # 'system' | 'assistant' | 'user'
    content: str
```
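The chat loop stores each message as a plain dictionary produced by `asdict`, which gives the `{'role': ..., 'content': ...}` shape that chat-completion APIs such as Groq's expect. A small sketch of that conversion (the class is repeated so the snippet runs on its own):

```python
from dataclasses import FrozenInstanceError, asdict, dataclass


@dataclass(frozen=True)
class Message:
    role: str  # 'system' | 'assistant' | 'user'
    content: str


message = Message(role='user', content='What is Groq?')
payload = asdict(message)  # {'role': 'user', 'content': 'What is Groq?'}

# frozen=True makes instances immutable, so stored history can't be edited by accident.
try:
    message.content = 'changed'
except FrozenInstanceError:
    print('Message instances are read-only')
```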

Text Styles

This data class was used for terminal coloring using Rich.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TextStyles:
    foreground: str
    background: str

    @property
    def foreground_and_background(self) -> str:
        # Rich style string, e.g. 'bold white on blue'
        return f'bold {self.foreground} on {self.background}'
```
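Header (next section) looks up `text_styles[self.role]`, so the app presumably keeps one TextStyles per role. A sketch of such a mapping; the colors are placeholders (any Rich color names should work), and TextStyles is repeated so the snippet runs standalone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TextStyles:
    foreground: str
    background: str

    @property
    def foreground_and_background(self) -> str:
        return f'bold {self.foreground} on {self.background}'


# Hypothetical color choices, keyed by message role.
text_styles = {
    'assistant': TextStyles(foreground='white', background='dark_orange'),
    'user': TextStyles(foreground='black', background='cyan'),
}

# Wrapped in Rich markup, a style string colors the text between the tags.
assistant_style = text_styles['assistant'].foreground_and_background
markup = f'[{assistant_style}] AI [/]'  # '[bold white on dark_orange] AI [/]'
```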

Header

The Header data class was used for displaying the header of the AI and user messages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Header:
    using_nerd_font: bool
    role: str  # 'assistant' | 'user'
    text: str

    def __repr__(self):
        # The header's text form is a Rich markup string.
        if self.using_nerd_font:
            return self.format_using_nerd_font()
        else:
            return self.format_without_nerd_font()

    def format_using_nerd_font(self) -> str:
        icon = self.get_icon(nerd_font_icons)
        heading = f'{icon} {self.text}'
        styles = text_styles[self.role]
        # Opening and closing segments styled with the background color only
        opening = Header.set_text_styles(styles.background, '')
        closing = Header.set_text_styles(styles.background, '')
        middle = Header.set_text_styles(styles.foreground_and_background, heading)
        return f'{opening}{middle}{closing}'

    def format_without_nerd_font(self) -> str:
        styles = text_styles[self.role].foreground_and_background
        return Header.set_text_styles(styles, f' @{self.text} ')

    def get_icon(self, icons: Icons) -> str:
        return icons.user if self.role == 'user' else icons.assistant

    @classmethod
    def set_text_styles(cls, styles: str, text: str) -> str:
        # Wrap text in Rich markup: [style]text[/]
        return f'[{styles}]{text}[/]'
```
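Header also relies on an Icons type and a nerd_font_icons instance that are not shown above. A minimal sketch, assuming Icons simply holds one symbol per role. How the emoji symbols are wired into the default header is not shown, so emoji_icons is purely illustrative, and the Nerd Font code points are example picks that should be checked against the Nerd Fonts cheat sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Icons:
    user: str
    assistant: str


# Illustrative emoji set for the default (non-Nerd Font) mode.
emoji_icons = Icons(user='🧑', assistant='🤖')

# Example Nerd Font glyphs (Private Use Area); they only render with a Nerd Font installed.
nerd_font_icons = Icons(user='\uf007', assistant='\U000f06a9')


def get_icon(icons: Icons, role: str) -> str:
    # Same rule as Header.get_icon: any role other than 'user' gets the assistant icon.
    return icons.user if role == 'user' else icons.assistant
```

Rich parses markup in plain strings, so one reasonable way to build the headers mapping used in main() is to store `str(Header(...))` for each role.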

Running the App

The following code was used to run the app:

```python
from dataclasses import asdict


def main():
    # Exit early if either required environment variable is missing
    handle_missing_env_var('GROQ_API_KEY', GROQ_API_KEY)
    handle_missing_env_var('MODEL', MODEL)

    print("Enter 'exit' to quit chat.\n")
    console.print(headers['assistant'])
    print('How may I assist you?')
    print()

    while True:
        user_message = get_user_message()
        if user_message.content == 'exit':
            break
        # The whole history is kept and sent with each new prompt
        messages.append(asdict(user_message))
        print()
        ai_message = get_ai_message()
        messages.append(asdict(ai_message))
        print('\n')
```

The function handle_missing_env_var checks whether an environment variable is set. If it is not, the function informs the user and exits the app. The user's message is read by get_user_message, which prompts the user and returns the response. If the user enters exit, the app closes. AI messages are obtained with get_ai_message, which requests the response as a stream and displays the content as it accumulates.
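The two helpers described above can be sketched as follows. Their behavior matches the description, but the exact messages, the `'> '` prompt, and the exit status are assumptions; Message is repeated so the snippet runs on its own.

```python
import sys
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Message:
    role: str
    content: str


def handle_missing_env_var(name: str, value: Optional[str]) -> None:
    # Tell the user which variable is missing, then exit with a non-zero status.
    if not value:
        print(f'The environment variable {name} is not set.', file=sys.stderr)
        sys.exit(1)


def get_user_message() -> Message:
    # Prompt for one line of input; the prompt string is a placeholder.
    content = input('> ').strip()
    return Message(role='user', content=content)
```

Stripping the input means that a stray space around `exit` still ends the chat loop.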

All the messages from the AI and the user are stored in a list for use in further prompts.
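A sketch of a streaming get_ai_message. Unlike the zero-argument version called in main(), this one takes the client, model and history as parameters, an assumption made so it can run without a network. With the Groq SDK, the client would be `groq.Groq()`, and `client.chat.completions.create(..., stream=True)` yields chunks whose new text is in `chunk.choices[0].delta.content`.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Message:
    role: str
    content: str


def get_ai_message(client: Any, model: str, messages: list[dict]) -> Message:
    """Stream a reply, printing each piece as it arrives, and return the full text."""
    # stream=True makes the call return an iterator of chunks instead of one response.
    stream = client.chat.completions.create(
        model=model,
        messages=messages,  # the whole history, so earlier turns are remembered
        stream=True,
    )
    parts: list[str] = []
    for chunk in stream:
        # New text is in choices[0].delta.content; it can be None (e.g. on the last chunk).
        piece = chunk.choices[0].delta.content
        if piece:
            parts.append(piece)
            print(piece, end='', flush=True)
    return Message(role='assistant', content=''.join(parts))
```

In the chat loop, the returned Message is appended to the history with `asdict`, so the next request carries the full conversation.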

Strengths and Weaknesses

Strengths

The app had the following strengths:

- Fast: the technologies used provided very fast inference and display of messages.

- Robust: from checking environment variables to using streaming, the app minimizes errors. With streaming, content that has already been displayed stays on screen even if a network error occurs or tokens run out while inference is in progress. Non-streaming (single-shot) requests, on the other hand, are all-or-nothing.

- Good-looking UI: the use of terminal coloring and symbols enhanced the look of the app. The headers made it easier to differentiate AI and user messages.

Weaknesses

- Storage: the app does not store messages permanently, so all messages are lost when the app is closed. Saving messages was avoided because the token window for the Groq API is small, and a saved history would quickly exceed it. If the app were started with stored messages that already exceeded the token window, the conversation might not be able to start at all.

- No inference error handling: errors raised during inference are not caught. This may be fixed in the future.

Applications

The app can be used for simple, fast inference. If you want quick answers to questions, you could use your terminal instead of opening your browser, which is much slower.

For generative AI developers, this app can be used for learning how to create AI chat apps with streaming capabilities.

What Could be Worked on

Error Handling

More error handling could be added to reduce surprises. The part of the app that lacks error handling the most is its use of the Groq SDK. Inference errors could be caught and inspected so that the user is informed of what went wrong. Possible errors include network errors, exceeding the token window, and unknown errors.
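A minimal sketch of that idea. With the SDK imported, one could write separate `except groq.APIConnectionError`, `except groq.RateLimitError` and `except groq.APIStatusError` clauses; to stay self-contained, this version only relies on the `status_code` attribute that the SDK's status errors carry. The function names and messages are hypothetical.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar('T')


def describe_inference_error(error: Exception) -> str:
    # Status errors (e.g. groq.APIStatusError and its subclasses) carry the HTTP status code.
    status = getattr(error, 'status_code', None)
    if status == 429:
        return 'Rate limit reached; wait a moment and try again.'
    if status is not None:
        # Presumably includes requests rejected for exceeding the token window.
        return f'The API rejected the request (HTTP {status}).'
    # No status code: a network problem or some other, unexpected error.
    return f'Request failed ({type(error).__name__}: {error}).'


def call_safely(get_reply: Callable[[], T]) -> Optional[T]:
    """Run one inference call; report a failure instead of crashing the chat loop."""
    try:
        return get_reply()
    except Exception as error:
        print(f'\nError: {describe_inference_error(error)}')
        return None
```

In main(), the loop could call `call_safely(get_ai_message)` and, when it returns None, remove the unanswered user message from the history before continuing.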

Message Display

Chat models usually format their responses in Markdown. That Markdown could be rendered and syntax-highlighted, resulting in better-looking messages and a better user experience.
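Rich, already used for the headers, ships a Markdown renderable, and its Live display can redraw it as chunks arrive. A minimal sketch, assuming the reply arrives as an iterable of text pieces (for example the `delta.content` values from the stream); stream_markdown is a hypothetical name.

```python
from typing import Iterable

from rich.console import Console
from rich.live import Live
from rich.markdown import Markdown


def stream_markdown(pieces: Iterable[str], console: Console) -> str:
    """Re-render the accumulated reply as Markdown while it streams in."""
    text = ''
    with Live(Markdown(text), console=console) as live:
        for piece in pieces:
            text += piece
            # Re-parse the whole reply so far; partial Markdown is rendered as-is.
            live.update(Markdown(text))
    return text
```

Fenced code blocks in the reply are syntax-highlighted by Rich (the theme is set with Markdown's `code_theme` argument), and Live's `vertical_overflow` argument controls what happens when a reply is taller than the terminal.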

Conclusion

I had a lot of fun creating this project, and the experience was much better than I expected. The Groq inference platform really delivers on its promises. AI chat apps have gotten much faster these days, but when I started this project they were very slow, so I was amazed at how fast the app could display messages. The app is open source and available on GitHub at mishieck/groq-python-chat.