In recent years, AI assistants have made staggering leaps in capability. Large language models (LLMs) like GPT-4, Claude, and others are now capable of answering complex questions, generating entire reports, writing code, and more.

But there's a catch.

These models are still disconnected from the environments we actually work in. They don't inherently have access to the documents we're editing, the codebases we're debugging, or the internal databases we manage.

Every time we want an AI assistant to really help with our work, we have to manually copy-paste content into the chat—or build a custom integration from scratch.

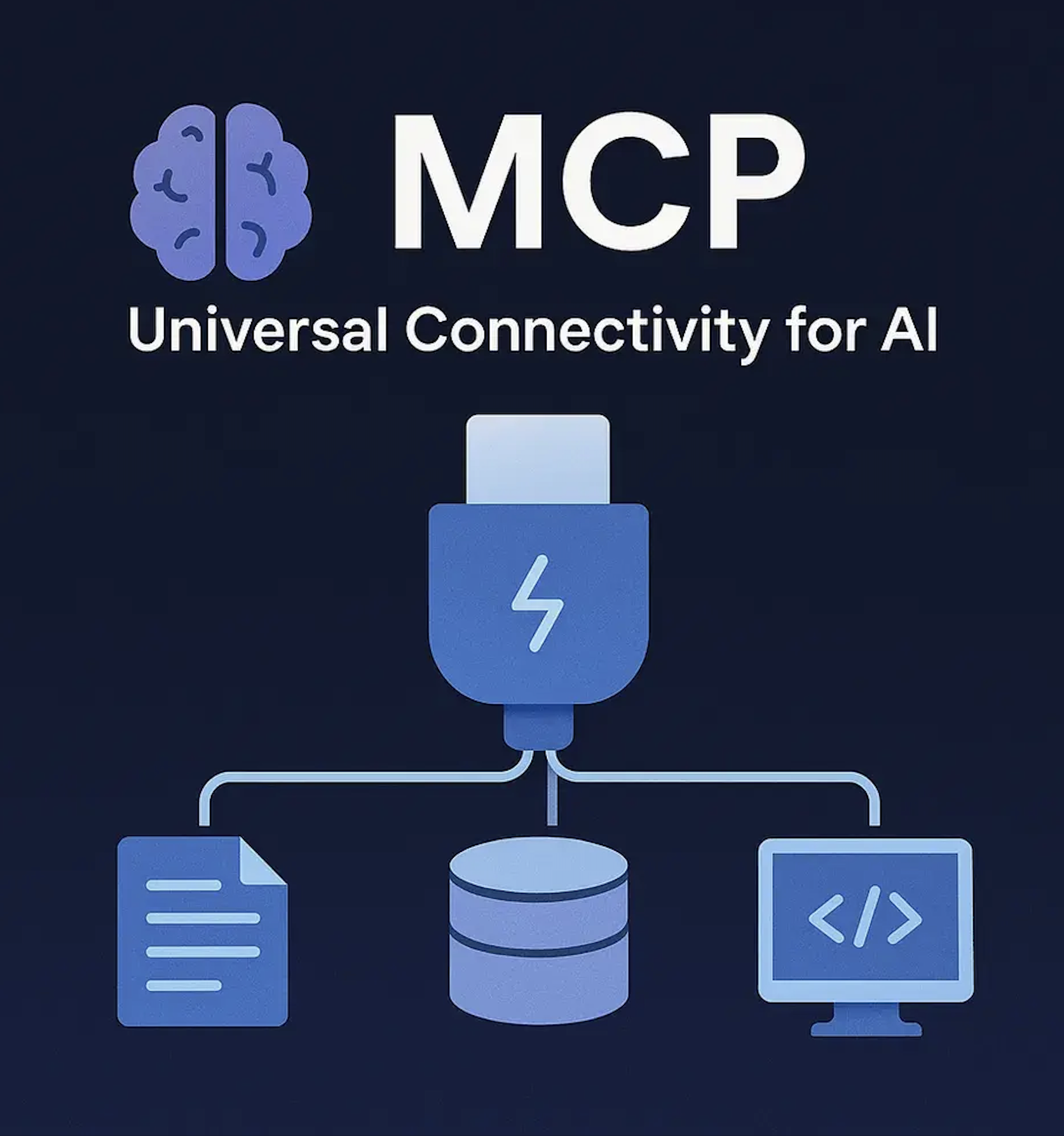

That's where MCP comes in.

The Model Context Protocol (MCP) is a universal, open-source standard for connecting AI assistants to the places where your data lives—your files, tools, and platforms.

Think of it as the USB-C for AI applications.

Just as USB-C provides a standard way for your laptop to connect with everything from keyboards to displays, MCP standardizes how AI assistants connect to data sources, whether they're local files, databases, APIs, or cloud-based enterprise systems like Google Drive or GitHub.

🔄 Client-Server Architecture

At its core, MCP follows a client-server architecture:

MCP Host (Client): The AI application you're using (e.g., Claude Desktop, VS Code with AI support). The host runs an MCP client for each server it connects to.

MCP Server: A small program that knows how to access a specific data source—like your local filesystem, a Postgres database, or GitHub.

Data Sources: Local or cloud-based systems that hold the content you want your AI assistant to understand and work with.

When the MCP client needs information, it sends a request over MCP to an MCP server. The server retrieves or modifies the data in the underlying data source and sends the relevant context back to the AI assistant.
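The round trip described above can be sketched in a few lines. This is only an illustration: the message fields (`type`, `path`, `status`, `content`) are assumptions that match the simplified format used later in this tutorial, not the official wire format, and `NOTES` is a made-up stand-in for a real data source.

```python
from typing import Dict

# Hypothetical in-memory "data source" standing in for files or a database.
NOTES = {"todo.txt": "1. Ship the MCP demo"}


def server_handle(request: Dict) -> Dict:
    """Server side: look up the requested item and wrap it in a response."""
    if request.get("type") != "read_file":
        return {
            "status": "error",
            "error": f"Unsupported request type: {request.get('type')}",
        }
    path = request.get("path")
    if path not in NOTES:
        return {"status": "error", "error": f"Not found: {path}"}
    return {"status": "success", "content": NOTES[path]}


def client_fetch(path: str) -> str:
    """Client side: build a request, send it, and unwrap the returned context."""
    response = server_handle({"type": "read_file", "path": path})
    if response["status"] != "success":
        raise LookupError(response["error"])
    return response["content"]


print(client_fetch("todo.txt"))
```

The client never touches `NOTES` directly. It only knows how to phrase a request and read a response, which is the separation the architecture relies on.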

Here's what the MCP ecosystem looks like today:

🛠 MCP Specification and SDKs

A complete set of documentation and software development kits to help developers build their own MCP clients or servers.

💻 Local MCP Server Support

Built into the Claude Desktop apps, allowing users to instantly connect their local environment to Claude.

🧩 Open-Source Server Repository

Ready-to-use, community-contributed MCP servers for:

Google Drive

Slack

GitHub

Git

Postgres

Puppeteer (for web automation and scraping)

✅ 1. AI Assistants with Real Context

The most advanced model still can't guess what's in your codebase or internal CRM unless you tell it. With MCP, the AI assistant can automatically access relevant files or data—leading to better, more accurate outputs.

✅ 2. No More Custom Connectors

In the past, every data source needed its own custom-built integration. Now, thanks to MCP's standardized protocol, you build once and use everywhere.

✅ 3. Model-Agnostic Flexibility

MCP isn't tied to any one AI model. Whether you use Claude, GPT, or another LLM, you can plug in the same connectors.

✅ 4. Secure by Design

MCP is designed for enterprise environments, supporting fine-grained access control, local-only connections, and privacy-aware integration.

✅ 5. Build Better Agents and Workflows

Want an AI agent to automate a workflow? With MCP, you can connect it to:

i.) Your internal wiki

ii.) Your CRM

iii.) A web browser (via Puppeteer)

iv.) Your SQL database

v.) GitHub PRs

Now it can plan, reason, and act using real context.

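Now that you understand the concept and importance of MCP, let's build a small, simplified implementation that demonstrates the ideas behind the protocol. It runs in a single process and passes plain Python dictionaries as messages rather than using the real wire format. In this tutorial, we'll create: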

i.) An MCP server that provides access to a local filesystem

ii.) An MCP client that connects to this server

iii.) A simulated AI assistant that uses MCP to access files

🏗️ Setting Up the Project

First, let's set up our project structure:

mcp-tutorial/
├── mcp_server.py
├── mcp_client.py
├── ai_assistant.py
└── main.py

Let's create an MCP server that can handle filesystem operations. This will be our data source adapter.

# mcp_server.py
import os
from typing import Dict


class MCPFileSystemServer:
    """
    A simple MCP server that provides access to the local filesystem.
    """

    def handle_request(self, request: Dict) -> Dict:
        """Process an incoming MCP request and return a response."""
        request_type = request.get("type")

        # Dispatch on the request type
        if request_type == "list_files":
            return self._handle_list_files(request)
        elif request_type == "read_file":
            return self._handle_read_file(request)
        elif request_type == "write_file":
            return self._handle_write_file(request)
        else:
            return {
                "status": "error",
                "error": f"Unsupported request type: {request_type}"
            }

    def _handle_list_files(self, request: Dict) -> Dict:
        """List files in the specified directory."""
        directory = request.get("directory", ".")

        try:
            files = os.listdir(directory)
            return {
                "status": "success",
                "files": [
                    {
                        "name": file,
                        "is_directory": os.path.isdir(os.path.join(directory, file)),
                        "path": os.path.join(directory, file)
                    }
                    for file in files
                ]
            }
        except Exception as e:
            return {
                "status": "error",
                "error": str(e)
            }

    def _handle_read_file(self, request: Dict) -> Dict:
        """Read the contents of a file."""
        file_path = request.get("path")

        if not file_path:
            return {
                "status": "error",
                "error": "No file path provided"
            }

        try:
            with open(file_path, "r", encoding="utf-8") as f:
                content = f.read()

            return {
                "status": "success",
                "content": content,
                "metadata": {
                    "size": os.path.getsize(file_path),
                    "modified": os.path.getmtime(file_path)
                }
            }
        except Exception as e:
            return {
                "status": "error",
                "error": str(e)
            }

    def _handle_write_file(self, request: Dict) -> Dict:
        """Write content to a file."""
        file_path = request.get("path")
        content = request.get("content")

        if not file_path:
            return {
                "status": "error",
                "error": "No file path provided"
            }

        if content is None:
            return {
                "status": "error",
                "error": "No content provided"
            }

        try:
            with open(file_path, "w", encoding="utf-8") as f:
                f.write(content)

            return {
                "status": "success",
                "metadata": {
                    "size": os.path.getsize(file_path),
                    "modified": os.path.getmtime(file_path)
                }
            }
        except Exception as e:
            return {
                "status": "error",
                "error": str(e)
            }
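The server above passes whatever path it receives straight to `open()` and `os.listdir()`, so a request could reach any file the process can access. One common way to narrow that is to resolve every requested path against a fixed root and reject anything that lands outside it. The helper below is a minimal sketch of that idea; `resolve_inside_root` is a hypothetical name, not part of MCP or of the tutorial code.

```python
import tempfile
from pathlib import Path


def resolve_inside_root(root: str, requested: str) -> Path:
    """Return the absolute path for `requested`, or raise if it leaves `root`."""
    root_path = Path(root).resolve()
    # Joining with an absolute `requested` discards root_path, so the
    # containment check below also rejects absolute paths outside the root.
    candidate = (root_path / requested).resolve()
    if candidate != root_path and root_path not in candidate.parents:
        raise PermissionError(f"Path escapes the allowed root: {requested}")
    return candidate


# Usage against a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    inside = resolve_inside_root(tmp, "notes/todo.txt")
    print(inside.name)  # todo.txt
    try:
        resolve_inside_root(tmp, "../outside.txt")
    except PermissionError as exc:
        print("rejected:", exc)
```

A server could call a helper like this at the top of each handler and return an error response when it raises.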

Next, let's create a client that can connect to MCP servers and route requests:

# mcp_client.py
from typing import Dict, Any


class MCPClient:
    """
    A simple MCP client that can be used by an AI assistant to access data sources.
    """

    def __init__(self):
        # Maps a server name to the server object that handles its requests
        self.servers = {}

    def register_server(self, name: str, server: Any) -> None:
        """Register an MCP server with this client."""
        self.servers[name] = server

    def send_request(self, server_name: str, request: Dict) -> Dict:
        """Send a request to a specific MCP server."""
        server = self.servers.get(server_name)

        # Compare against None so a registered but falsy object is not treated as missing
        if server is None:
            return {
                "status": "error",
                "error": f"Server '{server_name}' not found"
            }

        return server.handle_request(request)
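Because the client only calls `handle_request`, any object with that method can be registered, which is the "build once, use everywhere" idea in miniature. The sketch below assumes two made-up servers (`EchoServer` and `KeyValueServer`) and a condensed stand-in for the client (`MiniClient`) so that it runs on its own. None of these names come from MCP itself.

```python
from typing import Dict, Protocol


class MCPServer(Protocol):
    """Structural type: any object with this method can act as a server."""

    def handle_request(self, request: Dict) -> Dict: ...


class EchoServer:
    """Hypothetical server that returns the request it was given."""

    def handle_request(self, request: Dict) -> Dict:
        return {"status": "success", "echo": request}


class KeyValueServer:
    """Hypothetical server backed by an in-memory dictionary."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {"greeting": "hello"}

    def handle_request(self, request: Dict) -> Dict:
        if request.get("type") != "get":
            return {"status": "error", "error": "Unsupported request type"}
        key = request.get("key")
        if key not in self.store:
            return {"status": "error", "error": f"Unknown key: {key}"}
        return {"status": "success", "value": self.store[key]}


class MiniClient:
    """Condensed stand-in for the tutorial's MCPClient."""

    def __init__(self) -> None:
        self.servers: Dict[str, MCPServer] = {}

    def register_server(self, name: str, server: MCPServer) -> None:
        self.servers[name] = server

    def send_request(self, server_name: str, request: Dict) -> Dict:
        server = self.servers.get(server_name)
        if server is None:
            return {"status": "error", "error": f"Server '{server_name}' not found"}
        return server.handle_request(request)


client = MiniClient()
client.register_server("echo", EchoServer())
client.register_server("kv", KeyValueServer())

print(client.send_request("kv", {"type": "get", "key": "greeting"}))
print(client.send_request("echo", {"type": "ping"}))
print(client.send_request("missing", {"type": "ping"}))
```

Adding a new data source then means writing one more class with a `handle_request` method. The client and the assistant stay unchanged.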

Now, let's create an AI assistant that uses our MCP client to access the filesystem:

# ai_assistant.py
from typing import Dict

from mcp_client import MCPClient


class AIAssistant:
    """
    A simulated AI assistant that uses MCP to access data.
    """

    def __init__(self, mcp_client: MCPClient):
        self.mcp_client = mcp_client

    def list_directory(self, server_name: str, directory: str = ".") -> Dict:
        """List files in a directory using an MCP server."""
        request = {
            "type": "list_files",
            "directory": directory
        }
        return self.mcp_client.send_request(server_name, request)

    def read_file(self, server_name: str, file_path: str) -> Dict:
        """Read a file using an MCP server."""
        request = {
            "type": "read_file",
            "path": file_path
        }
        return self.mcp_client.send_request(server_name, request)

    def write_file(self, server_name: str, file_path: str, content: str) -> Dict:
        """Write to a file using an MCP server."""
        request = {
            "type": "write_file",
            "path": file_path,
            "content": content
        }
        return self.mcp_client.send_request(server_name, request)

    def analyze_code_file(self, server_name: str, file_path: str) -> Dict:
        """Analyze a code file and provide insights."""
        # First, read the file
        response = self.read_file(server_name, file_path)

        if response["status"] != "success":
            return response

        # Here, we'll just do some basic analysis.
        # splitlines() avoids counting a phantom empty line after a trailing newline.
        content = response["content"]
        lines = content.splitlines()

        analysis = {
            # Total number of lines, including blank lines and comments
            "lines_of_code": len(lines),
            "empty_lines": len([line for line in lines if line.strip() == ""]),
            "comments": len([line for line in lines if line.strip().startswith("#")])
        }

        return {
            "status": "success",
            "file_path": file_path,
            "analysis": analysis
        }
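Because every operation goes through the same request interface, individual calls compose into larger workflows. Here is a minimal sketch, assuming a `send` callable that takes a request dictionary and returns a response in this tutorial's format. `count_comment_lines`, `fake_send`, and `FAKE_FILES` are made-up names so the example runs without touching the disk.

```python
from typing import Callable, Dict

Send = Callable[[Dict], Dict]

# Hypothetical file contents served by the fake transport below.
FAKE_FILES = {
    "proj/a.py": "# one\nx = 1\n# two\n",
    "proj/notes.txt": "# not python\n",
}


def fake_send(request: Dict) -> Dict:
    """Stand-in for a client/server round trip, backed by FAKE_FILES."""
    if request.get("type") == "list_files":
        return {
            "status": "success",
            "files": [
                {"name": path.split("/")[-1], "is_directory": False, "path": path}
                for path in FAKE_FILES
            ],
        }
    if request.get("type") == "read_file" and request.get("path") in FAKE_FILES:
        return {"status": "success", "content": FAKE_FILES[request["path"]]}
    return {"status": "error", "error": "Unsupported or unknown request"}


def count_comment_lines(send: Send, directory: str) -> Dict[str, int]:
    """List a directory, then read each .py file and count its comment lines."""
    listing = send({"type": "list_files", "directory": directory})
    if listing["status"] != "success":
        return {}

    counts: Dict[str, int] = {}
    for entry in listing["files"]:
        if entry["is_directory"] or not entry["name"].endswith(".py"):
            continue
        reply = send({"type": "read_file", "path": entry["path"]})
        if reply["status"] != "success":
            continue  # skip unreadable files rather than failing the whole run
        counts[entry["name"]] = sum(
            1 for line in reply["content"].splitlines()
            if line.strip().startswith("#")
        )
    return counts


print(count_comment_lines(fake_send, "proj"))  # {'a.py': 2}
```

Swapping `fake_send` for a function that forwards to a real client would leave `count_comment_lines` untouched.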

Finally, let's create a main script that uses all these components:

# main.py
from mcp_server import MCPFileSystemServer
from mcp_client import MCPClient
from ai_assistant import AIAssistant
import json


def print_response(response):
    """Pretty-print a response from the AI assistant."""
    print(json.dumps(response, indent=2))


def run_demo():
    # Create an MCP server for filesystem access
    fs_server = MCPFileSystemServer()

    # Create an MCP client
    mcp_client = MCPClient()

    # Register the filesystem server with the client
    mcp_client.register_server("filesystem", fs_server)

    # Create an AI assistant that uses the MCP client
    assistant = AIAssistant(mcp_client)

    print("=== MCP Demo: AI Assistant with Filesystem Access ===")

    # Example 1: List files in the current directory
    print("\n📁 Listing files in the current directory:")
    response = assistant.list_directory("filesystem")
    print_response(response)

    # Example 2: Read a Python file
    print("\n📄 Reading a Python file (main.py):")
    response = assistant.read_file("filesystem", "main.py")
    if response["status"] == "success":
        print(f"File size: {response['metadata']['size']} bytes")
        print("Content preview (first 100 chars):")
        text = response["content"]
        # Truncate long files and mark the cut with an ellipsis
        preview = (text[:100] + "...") if len(text) > 100 else text
        print(preview)
    else:
        print_response(response)

    # Example 3: Analyze a Python file
    print("\n🔍 Analyzing a Python file (main.py):")
    response = assistant.analyze_code_file("filesystem", "main.py")
    print_response(response)

    # Example 4: Write a new file
    print("\n✏️ Writing a new file (hello_mcp.txt):")
    content = "Hello from MCP!\nThis file was created using the Model Context Protocol."
    response = assistant.write_file("filesystem", "hello_mcp.txt", content)
    print_response(response)

    print("\n✅ Demo completed successfully!")
    print("You now have a basic MCP implementation that connects an AI assistant to your filesystem.")


if __name__ == "__main__":
    run_demo()

🚀 Running the Demo

Save all the files in your project directory and run:

python main.py

You should see output similar to this (the exact directory entries, file sizes, line counts, and timestamps will differ on your machine):

=== MCP Demo: AI Assistant with Filesystem Access ===

📁 Listing files in the current directory:
{
  "status": "success",
  "files": [
    {
      "name": "ai_assistant.py",
      "is_directory": false,
      "path": "./ai_assistant.py"
    },
    {
      "name": "mcp_client.py",
      "is_directory": false,
      "path": "./mcp_client.py"
    },
    {
      "name": "mcp_server.py",
      "is_directory": false,
      "path": "./mcp_server.py"
    },
    {
      "name": "main.py",
      "is_directory": false,
      "path": "./main.py"
    }
  ]
}

📄 Reading a Python file (main.py):
File size: 1872 bytes
Content preview (first 100 chars):
from mcp_server import MCPFileSystemServer
from mcp_client import MCPClient
from ai_assistant import AIAssistant
import...

🔍 Analyzing a Python file (main.py):
{
  "status": "success",
  "file_path": "main.py",
  "analysis": {
    "lines_of_code": 54,
    "empty_lines": 8,
    "comments": 5
  }
}

✏️ Writing a new file (hello_mcp.txt):
{
  "status": "success",
  "metadata": {
    "size": 71,
    "modified": 1681228652.7845678
  }
}

✅ Demo completed successfully!
You now have a basic MCP implementation that connects an AI assistant to your filesystem.

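That is the basic idea behind the Model Context Protocol. With roughly two hundred lines of code, we've built a system that lets an AI assistant read and modify the filesystem through one standardized request interface. Keep in mind that this demo is a simplification: the real protocol exchanges JSON-RPC 2.0 messages over a transport such as stdio or HTTP, and a production server should restrict which paths it is allowed to touch.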
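For comparison with the ad-hoc dictionaries used in this tutorial, the published MCP specification frames its messages as JSON-RPC 2.0. The sketch below builds a request of that general shape for a tool call. Treat it as an approximation rather than a reference: the tool name `read_file` and its `path` argument are hypothetical and depend entirely on what a given server exposes, and `make_tool_call` is a made-up helper.

```python
import json
from itertools import count
from typing import Any, Dict

# JSON-RPC requests carry an id so responses can be matched to them.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request asking a server to run one of its tools."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = make_tool_call("read_file", {"path": "main.py"})
print(json.dumps(request, indent=2))
```

The overall pattern is the same as in the demo: a named operation plus its arguments goes out, and a structured result or error comes back.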

As you have seen, MCP truly is the "USB-C of AI-Data Connectivity": it provides a universal interface for AI assistants to connect with the data sources they need to be truly helpful.