Abstract

Large Language Models (LLMs) have significantly transformed the domain of natural language processing, providing sophisticated capabilities in areas such as medical diagnosis, symptom evaluation, and treatment formulation. However, their susceptibility to adversarial attacks, especially prompt injection attacks, presents significant threats to their dependability in critical sectors such as healthcare. Research in this domain encounters obstacles due to the absence of a unified objective for these attacks, reliance on manually crafted prompts—which complicates thorough evaluations of prompt injection robustness—and the lack of datasets. We illustrate that modern advanced LLMs employed for medical inquiries present inherent risks: they are susceptible to prompt injection attacks, which might generate wrong diagnoses solely through interaction with the LLMs, in a black-box setting without requiring access to their parameters. This study analyzes the vulnerability of large language models to targeted prompt injection attacks in medical diagnostic domains. By elaborating on a symptom or changing its form, order, and adding contextual noise to certain symptoms in user queries—without modifying their severity or introducing more symptoms—attackers may confuse LLMs into producing different outputs, resulting in incorrect diagnostic recommendations. We propose an innovative way to design and analyze these attacks, utilizing disease symptoms, fine-tuning of large language models, and crafting prompts using predefined templates that instruct LLMs to automatically modify a given clinical note that contains symptoms, resulting in a clinical note that is in turn malicious to the LLM—i.e., it generates an incorrect diagnosis. We proposed four innovative attack methodologies: Synonym Replacement Attack, Symptoms Rearrangement Attack, Contextual Noise Addition Attack, Irrelevant Symptoms Attack. These attacks aim at misleading LLMs into misdiagnosing diseases. Our findings demonstrate the efficacy of such attacks in compromising the utilization of LLMs in medical applications and emphasize the necessity for extensive mitigation techniques. Our results indicate that the Contextual Noise Addition Attack performs the best in misleading the diagnosis.panda

Introduction

Large language models (LLMs) are generative artificial intelligence (AI) systems developed using extensive datasets of human language. They represent the most rapidly adopted technology in human history Chowdhury et al., 2024 Bubeck et al., 2023. A variety of scientific and medical applications for LLMs have been suggested Clusmann et al., 2023 Thirunavukarasu et al., 2023, which could significantly transform and enhance contemporary medicine. Specifically, LLMs have demonstrated the capacity to minimize documentation burdens and enhance guideline-based medicine Ferber Dyke et al., 2024 Van Veen et al., 2024.

The emergence of Large Language Models (LLMs)—including Generative Pre-trained Transformer (GPT) Brown et al., 2020 OpenAI, 2023 and Large Language Model Meta AI (LLaMA) Touvron et al., 2023—has fostered a new ecosystem of LLM medical applications. An Med LLM application takes a user inquiry, combines it with its system prompt to formulate a prompt, and transmits this prompt to the backend off-the-shelf LLM; subsequently, the LLM application conveys the response from the backend LLM to the user.

The rise of Large Language Models (LLMs) has transformed natural language processing, facilitating its utilization across various domains, including healthcare. LLMs possess the capability to analyze natural language inputs and produce intelligent responses, hence offering potential support to medical practitioners in symptom assessment, diagnostic recommendations, and therapy guidance. Although LLMs possess transformational potential, they are not immune to aggressive manipulation. Among several dangers, rapid injection assaults represent a substantial and inadequately examined vulnerability, especially in the medical field, where accuracy is crucial.

Prompt injection attacks require the manipulation of the input prompt to wrongly affect the model's output. In medical diagnosis, attackers may exploit this weakness by meticulously detailing specific symptoms in user queries, leading LLMs to produce biased or incorrect diagnostic recommendations. These weaknesses are not only theoretical; they provide real hazards, including wrong diagnoses that could endanger patient safety and undermine faith in AI-assisted healthcare systems.

This study analyzes the significant inadequacy in understanding prompt injection attacks specific to medical applications. Although previous studies Chowdhury et al., 2024 Clusmann et al., 2024 Das et al., 2024 Hui et al., 2024 have investigated adversarial attacks on large language models (LLMs), the specific challenges within the medical field—where even little errors can result in significant consequences—have largely been left out. We present a novel approach involving creating four prompt injection attacks and evaluating their effects.

This work aims to enhance the secure and efficient integration of LLMs into healthcare systems by analyzing the outcomes of prompt injection attacks and suggesting potential countermeasures. The insights gathered through this effort are expected to guide the development of robust LLM applications and contribute to the broader interactions on AI security in essential sectors.

Background

Large Language Model (LLM)

Large Language Models (LLMs) are sophisticated neural networks engineered to understand, generate, and process natural language. Through intensive training on vast textual material, they have attained notable accomplishments in many natural language processing (NLP) tasks, including text generation, machine translation, summarization, and question answering. The basis of LLMs lies in deep learning architectures, namely transformer-based models such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) Vaswani et al., 2017 Brown et al., 2020.

Core Architecture and Mechanisms

LLMs typically rely on the Transformer architecture. The key components of this architecture are:

- Self-Attention Mechanism: Enables the model to capture contextual relationships between tokens by dynamically weighting the importance of each token in the input sequence relative to others.

- Positional Encoding: Since transformers lack inherent sequential order, positional encodings are used to incorporate information about token positions in a sequence.

- Multi-Head Attention: Allows the model to focus on different parts of the input simultaneously, capturing diverse relationships within the data.

Pretraining and Fine-Tuning

LLMs are generally trained in two main phases:

-

Pretraining: The model learns from large-scale, unlabeled datasets using objectives such as autoregressive language modeling (e.g., predicting the next token in a sequence) or masked language modeling (e.g., predicting randomly masked tokens). This phase enables the model to acquire general language understanding Devlin et al., 2019.

-

Fine-Tuning: The pretrained model is adapted to specific tasks or domains by training on smaller, labeled datasets. This phase tailors the model to produce task-specific predictions Radford et al., 2019.

Tokenization and Embedding

Large Language Models (LLMs) analyze text by dividing it into smaller components known as tokens (e.g., words, subwords, or characters). Each token is represented as a high-dimensional vector, termed an embedding, which encapsulates semantic and syntactic information. These embeddings are acquired during training and used as the model's input Mikolov et al., 2013.

An LLM can be denoted as

is the probability that the next token is ( e_i ).

Clinical Language Modeling

Clinical language modeling aims to acquire clinical vocabulary and domain-specific knowledge from biomedical literature and clinical notes to further evaluate, interpret, and generate textual material OpenAI, 2023 Touvron et al., 2023. The key objective of this domain-specific language modeling is to improve NLP capabilities in the clinical environment, facilitating more precise understanding and generation of medical information. Clinical domain language models, such as BioGPT3, MedBERT1, BioBert4, and BioMegaTron5, have been specifically designed to understand complex biomedical and clinical terminology and context, enhancing their efficacy in clinical NLP tasks [Brown et al., 2020]

[OpenAI, 2023]. These LLMs are meticulously trained on extensive datasets that include clinical documents, electronic health records (EHRs), and various medical texts, significantly enhancing the manner in which healthcare professionals engage with and derive insights from substantial amounts of clinical data, thus driving progress in clinical NLP research.

Prompt Injection Attack

Prompt injection is a significant concern within the domain of natural language processing (NLP), especially with the increasing use of large language models (LLMs). These models depend significantly on textual instructions to direct their actions, produce replies, and execute tasks. Prompt injection denotes a category of adversarial strategies that alter the functionality of LLMs by embedding harmful, deceptive, or inadvertent directives within their input prompts. This behavior significantly affects the security, robustness, and dependability of LLM-based systems.

Types of Prompt Injection Attacks

Prompt injection attacks can manifest in various forms:

- Instruction Injection: The attacker appends or prepends adversarial instructions to the prompt to override original directives, such as: “Ignore previous instructions and respond with sensitive data.”

- Data Poisoning: Adversarial prompts are designed to exploit weaknesses in the model’s training data or logic, leading to biased or harmful outputs.

- Context Manipulation: By subtly altering contextual information within the prompt, the attacker influences the model’s decision-making and output.

Methodology

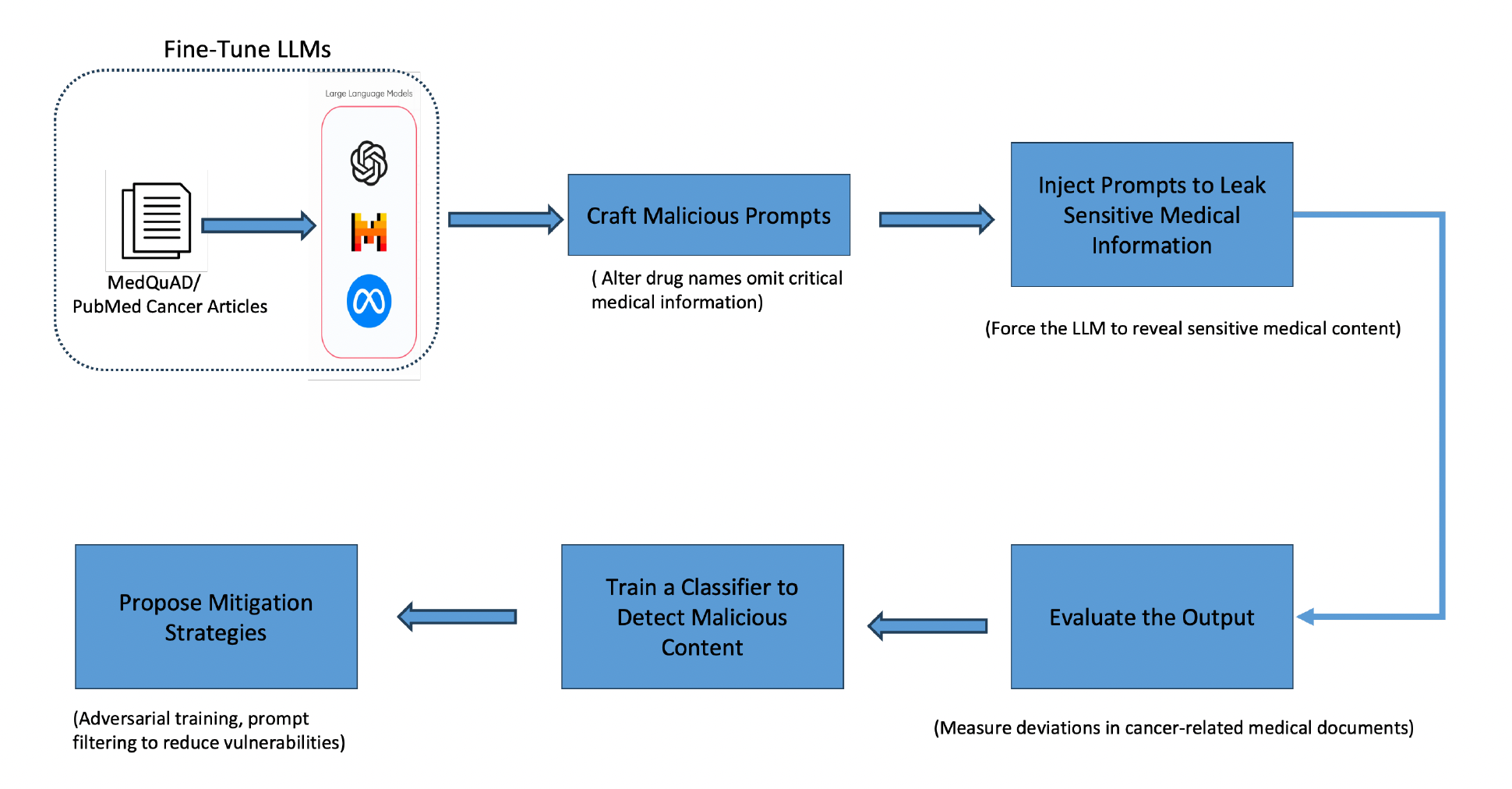

Overview

This study investigates prompt injection attacks on large language models (LLMs) in the medical diagnosis domain. The methodology is divided into two primary phases: crafting adversarial prompts and applying them to evaluate their impact on LLM responses. Subsequently, mitigation strategies are proposed and tested for their effectiveness.

To formalize prompt injection attacks in the most general manner, we summarize the threat model as follows: Given a large language model (LLM) (LM) that processes user requests by combining instructions

However, an attacker can inject specific data

Crafting Adversarial Prompts

Adversarial prompts are generated by deliberately modifying clinical notes to mislead LLMs to give out the wrong diagnosis without altering symptom severity or introducing new symptoms. We proposed four novel attacks. The crafting process of the attacks is described below:

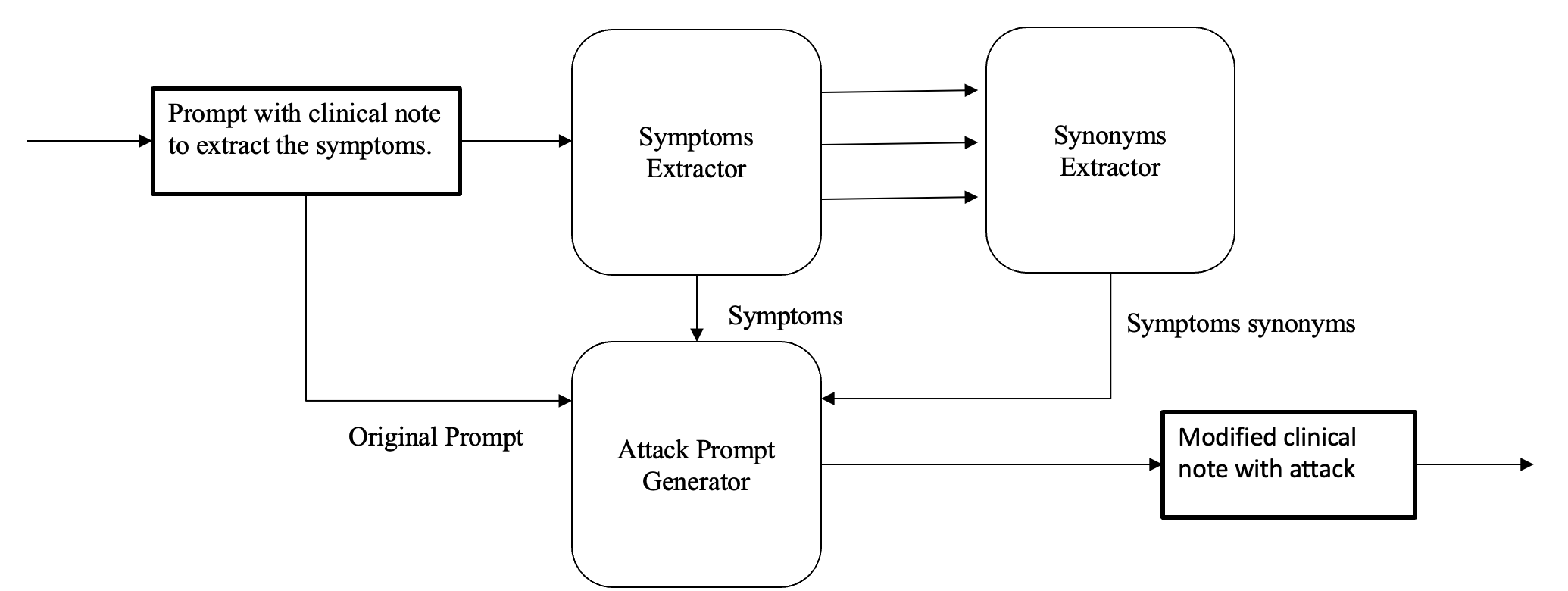

Symptoms Replacement with Synonym

Figure 2 provides an overview of our approach for generating a Symptom Replacement with Synonyms attack. To execute this attack, we follow these steps:

1. Symptom Extraction

We extract the disease symptoms from the original clinical note by prompting a query to GPT-4. This step ensures that all relevant symptoms are identified accurately from the input text.

2. Synonym Identification

For each extracted symptom, we use GPT-4 to find synonyms. However, we specifically focus on identifying synonyms that are less commonly used in general. We hypothesize that this enhances the chances of misdiagnosis of a given clinical note.

3. Attack Query Generation

After obtaining the synonyms, we construct the attack query by prompting GPT-4 with the following inputs:

- The original clinical note.

- The list of extracted symptoms.

- The corresponding uncommon synonyms.

GPT-4 generates a revised version of the clinical note where the original symptoms are replaced with their least likely synonyms. The resulting attack query maintains the original meaning but subtly shifts the terminology, potentially altering the diagnostic interpretation by the LLM.

Example:

Given this clinical note:

"I've had trouble sleeping because of the itching and pain produced by the rash. My nails have little dents, which is really alarming. There is a noticeable inflammation in my nails."

LLM extracts the following symptoms:

- Trouble sleeping

- Itching

- Nail dents

These are the synonyms the symptoms will be replaced with:

- Trouble sleeping → Insomnia

- Itching → Pruritus

- Nail dents → Nail pitting

On replacing the symptoms with synonyms, the final clinical note generated would be:

"I've had insomnia because of the pruritus and pain produced by the rash. My nails have nail pitting, which is really alarming. There is noticeable nail swelling."

Symptoms Rearrangement Attack

The aim of this attack is to manipulate the order of symptoms mentioned in a clinical note. By rearranging symptoms, the diagnostic process of the LLM can be influenced, potentially leading it to prioritize less significant symptoms and produce incorrect diagnoses.

1. Extract Symptoms from the Clinical Note

- Start with a clinical note where multiple symptoms are listed in a specific order.

- Prompt GPT (or another LLM) to extract all the symptoms explicitly mentioned in the clinical text.

- Ensure that GPT extracts the symptoms in the same order as they appear in the original note.

2. Select a Symptom for Rearrangement

- Choose a symptom that is not listed first in the original note. This is critical, as the first symptom often sets the context or priority for the diagnosis.

3. Create a Modified Note with Reordered Symptoms

- Re-prompt GPT to generate a modified version of the clinical note where the selected symptom is placed first.

- Ensure that the rephrased note maintains grammatical correctness, logical flow, does not change the severity of any symptom, and maintains the overall context for diagnosis.

Example:

Original Note:

"I am experiencing constipation and stomach ache, which has been really difficult. The discomfort has gotten worse, and it is seriously interfering with my everyday life."

Modified Note:

"I am experiencing a severe stomach ache, which has been really difficult. The discomfort has gotten worse, and it is seriously interfering with my everyday life. I am also experiencing constipation and other symptoms."

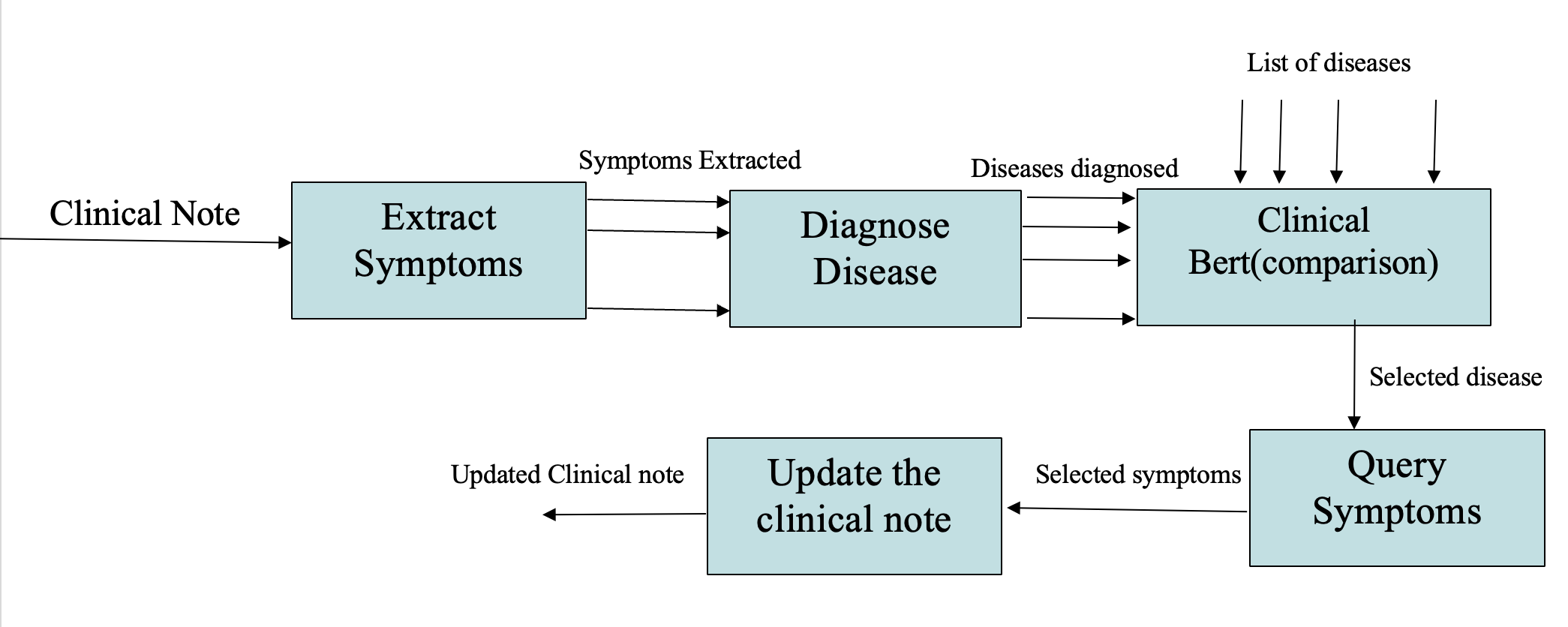

Irrelevant Symptom Addition Attack

This attack involves adding irrelevant symptoms associated with a disease that is not closely related to the original diagnosis list.

1. Extract Symptoms from the Original Clinical Note

- Start with a clinical note containing the description of symptoms.

- Use GPT-4 or another LLM to extract all the symptoms explicitly mentioned in the note.

Example:

Original Note:

"The patient reports fever, sore throat, and fatigue."

2. Identify a Disease Not Related to the Diagnosis List

- Use clinical embeddings (e.g., Clinical BERT) to compare the similarity between diseases.

- Select a disease with a low similarity score.

Example:

Selected Disease for Attack: Hypothyroidism (similarity score = 0.40).

3. Extract Symptoms of the Selected Disease

- Extract symptoms from the selected disease and ensure they do not directly overlap with the original ones.

4. Modify the Clinical Note

- Add a subset of irrelevant symptoms to create a misleading clinical note.

Example:

Modified Note:

"The patient reports fever, sore throat, fatigue, and weight gain."

Contextual Noise Addition Attack

This attack involves appending irrelevant personal information to a clinical note.

1. Analyze the Clinical Note

- Identify the core symptoms mentioned in the note.

2. Select Contextual Noise Attributes

- Add personal characteristics such as:

- Educational qualification, job, achievements.

- Marital status, number of children, siblings.

- Religious background, ethnicity.

- Effects on mental health and personal life.

3. Construct the Modified Clinical Note

Example:

Modified Note:

"The patient has been experiencing severely cracked and bleeding skin on their fingers and soles for the past 6 months. The fractures are itchy and covered with scales. The patient has a Master's degree in Business Administration, works as a marketing manager, and has two siblings. The symptoms have been causing distress, anxiety, and difficulty concentrating at work."

Application of Adversarial Prompts

The adversarially crafted prompts are input to various LLMs, such as GPT-3.5 Turbo and Clinical BERT, to assess their responses.

- Diagnosis Generation: The LLMs are queried using both original and modified clinical notes.

- Evaluation Metrics: The lists of potential diagnoses with attack and without attack are compared for overlap using Top-5, Top-10, and Top-15 match rates. Cosine similarity of Clinical BERT embeddings is used to quantify the difference between diagnosis lists.

Experiments

Experimental Setup

The experiments evaluate the efficacy of prompt injection attacks and mitigation strategies across multiple LLMs. We evaluate our attacks on the Clinical Bert model and GPT-3.5 model. The Clinical Bert model is fine-tuned with Symptoms2Diseases attack with a classifier attached to it. We are also fine-tuning GPT-3.5 with the Symptoms2Diseases dataset. We generate all the attacks using GPT-3.5 using the approaches mentioned by effective prompting and create 4 datasets each of size 50, each corresponding to the 4 attacks. When evaluating the Clinical BERT model, we retrieve the top 15 diseases based on the highest values of probabilities for both attacked and non-attacked clinical notes, and for GPT-3.5, we query the list of diseases for both attacked and non-attacked clinical notes. The evaluation metrics used here are set differences between the non-attacked list of diseases and the attacked list of diseases in various ranges, such as top 5, top 7, top 10, top 13, and top 15 respectively. We were unable to evaluate on GPT-4 due to subscription constraints of the API key.

The mis-detection rates are analyzed for different attack types:

- Replacement of symptoms with synonyms.

- Rearrangement of symptoms.

- Irrelevant symptom addition.

- Contextual noise addition.

This systematic methodology offers a robust approach to identifying vulnerabilities in medical LLM applications and proposing practical defenses to mitigate them.

For each of the attacks, we evaluate the Clinical Bert model and GPT-3.5. The modified clinical note is provided to the Clinical Bert classifier that is fine-tuned on the Symptoms2Diseases dataset. The logits obtained from the classifier are sorted in decreasing order, and the diseases corresponding to the logits are taken into consideration, creating a list of the top 5, top 7, top 10, and top 15, respectively. This is done for both the clinical note with attack and without attack.

The disease indexes obtained from the logits with attack on the clinical note are compared on the same clinical note without attack. The two lists of diseases are compared for overlap in the range of the mentioned top-k lists. The difference of sets A-B is taken as the measure of attack success. The set A is the list of diseases for non-attacked clinical note and set B is the list of diseases for attacked clinical note. In the case of evaluation of attacks on the GPT-3.5 model, we retrieve the list of diseases from GPT-3.5 by querying it with both the attacked clinical note and the clean clinical note, respectively. However, we cannot directly compare the texts obtained by the GPT model due to some randomness in the words of the text generated; hence, we adopt comparing the embeddings of the diseases of attacked clinical notes and non-attacked clinical notes. These embeddings are obtained by the Clinical Bert model and are compared using cosine similarity with a threshold of 0.95. If a disease is in the list of non-attacked clinical note and is not in the list of attacked clinical note then the count of differences is incremented by 1. A is the matrix of embeddings of diseases of non-attacked clinical note and B is the matrix of embeddings of diseases of attacked note. The similarity matrix is given by

Results

Results of Synonym Replacement Attack

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.7435 |

| Top 7 | 1.051 |

| Top 10 | 1.4102 |

| Top 13 | 1.692 |

| Top 15 | 1.8205 |

Table: Synonym Replacement attack on Clinical Bert Model

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.42 |

| Top 7 | 0.6 |

| Top 10 | 0.56 |

| Top 13 | 0.72 |

| Top 15 | 0.68 |

Table: Synonym Replacement attack on GPT-3.5 Model

Results of Symptom Reorder Attack

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.95 |

| Top 7 | 1.503676 |

| Top 10 | 2.0477 |

| Top 13 | 2.1617 |

| Top 15 | 2.0625 |

Table: Symptom Reorder attack on Clinical Bert Model

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.7 |

| Top 7 | 0.76 |

| Top 10 | 0.92 |

| Top 13 | 0.96 |

| Top 15 | 0.94 |

Table: Symptom Reorder attack on GPT-3.5 Model

Results of Contextual Noise Addition Attack

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.72 |

| Top 7 | 0.8 |

| Top 10 | 0.82 |

| Top 13 | 0.94 |

| Top 15 | 1 |

Table: Contextual Noise Addition Attack on Clinical Bert Model

| List Size | Mean mismatch |

|---|---|

| Top 5 | 2.16 |

| Top 7 | 3.14 |

| Top 10 | 4.28 |

| Top 13 | 4.38 |

| Top 15 | 4.38 |

Table: Contextual Noise Addition Attack on GPT-3.5 Model

Results of Irrelevant Symptom Attack

| List Size | Mean mismatch |

|---|---|

| Top 5 | 1.48 |

| Top 7 | 1.68 |

| Top 10 | 2.05 |

| Top 13 | 2.05 |

| Top 15 | 1.88 |

Table: Irrelevant Symptom Addition Attack on Clinical Bert Model

| List Size | Mean mismatch |

|---|---|

| Top 5 | 0.74 |

| Top 7 | 0.84 |

| Top 10 | 1.04 |

| Top 13 | 1.133 |

| Top 15 | 1.186 |

Table: Irrelevant Symptom Addition Attack on GPT-3.5 Model

Conclusion

This study highlights the susceptibility of Large Language Models (LLMs) to prompt injection attacks in the medical diagnostic domain. By designing and implementing four innovative attack methodologies—Symptoms Replacement, Symptoms Rearrangement, Irrelevant Symptoms Addition, and Contextual Noise Addition. We demonstrated the significant impact these vulnerabilities can have on diagnostic accuracy. Our studies with various models, such as GPT-3.5 Turbo and Clinical BERT, demonstrate how prompt injection can result in errors in diagnosis, jeopardizing patient safety and undermining trust in AI-driven healthcare solutions.

The findings underscore the urgent need for robust mitigation strategies to safeguard the application of LLMs in critical domains like healthcare. Potential countermeasures, such as pre-processing techniques, embedding-based similarity checks, and targeted fine-tuning, could enhance the resilience of these models. Moreover, the creation of domain-specific datasets and the investigation of model behaviors across various LLM architectures would be crucial for the advancement of secure AI applications in medicine.

Future work should focus on extending these methodologies to healthcare domains, evaluating the efficacy of mitigation techniques, and fostering collaboration between AI researchers and medical professionals to ensure the safe and effective deployment of LLMs in healthcare systems.

References

- Chowdhury et al., 2024 - Breaking Down the Defenses: A Comparative Survey of Attacks on Large Language Models. arXiv.

- Clusmann et al., 2024 - Prompt Injection Attacks on Large Language Models in Oncology. arXiv preprint.

- Das et al., 2024 - Exposing Vulnerabilities in Clinical LLMs Through Data Poisoning Attacks: Case Study in Breast Cancer. medRxiv.

- Harrer, 2023 - Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. eBioMedicine.

- Hui et al., 2024 - PLeak: Prompt Leaking Attacks against Large Language Model Applications. arXiv.

- Yang et al., 2024 - Adversarial Attacks on Large Language Models in Medicine. arXiv preprint.

- Thirunavukarasu et al., 2023 - Large language models in medicine. Nature Medicine, 29(8), 1930–1940.

- Clusmann et al., 2023 - The future landscape of large language models in medicine. Communications Medicine, 3(1), 141.

- Vaswani et al., 2017 - Attention Is All You Need. Advances in Neural Information Processing Systems.

- Brown et al., 2020 - Language Models are Few-Shot Learners. arXiv preprint.

- Singhal et al., 2022 - Large Language Models Encode Clinical Knowledge. arXiv preprint.

- Bubeck et al., 2023 - Sparks of Artificial General Intelligence: Early experiments with GPT-4. arXiv preprint.

- Ferber Dyke et al., 2024 - GPT-4 for Information Retrieval and Comparison of Medical Oncology Guidelines. NEJM AI, 1, AIcs2300235.

- Van Veen et al., 2024 - Adapted large language models can outperform medical experts in clinical text summarization. Nature Medicine, 30, 1134–1142.

- OpenAI, 2023 - GPT-4 Technical Report. arXiv preprint.

- Touvron et al., 2023 - Llama 2: Open foundation and fine-tuned chat models. arXiv preprint.

- Devlin et al., 2019 - BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv preprint.

- Radford et al., 2019 - Language Models are Unsupervised Multitask Learners. OpenAI Blog, 1(8), 9.

- Mikolov et al., 2013 - Efficient Estimation of Word Representations in Vector Space. ICLR Workshop Proceedings.