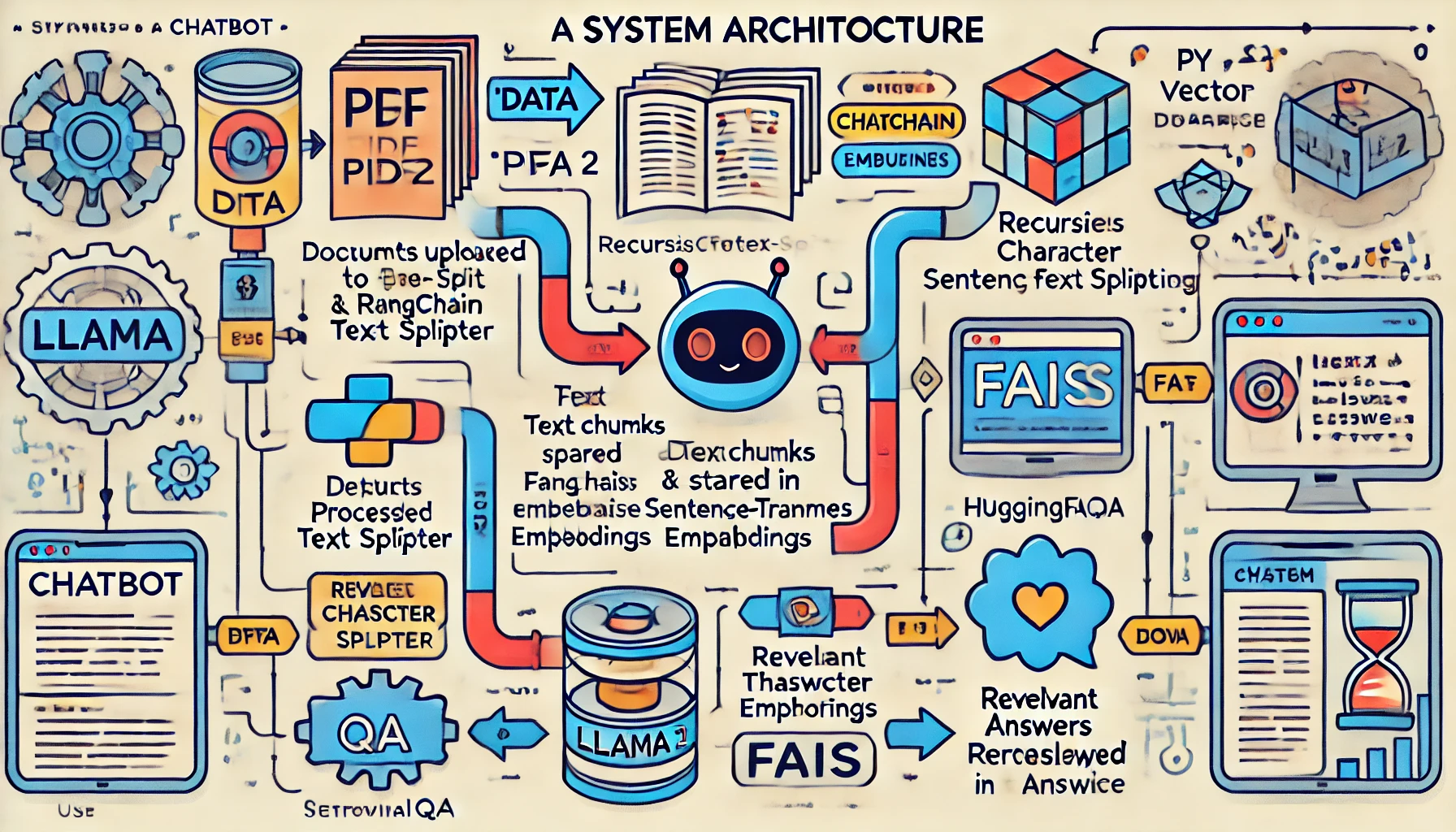

The Personal-Chatbot is a conversational agent that lets users upload PDF documents and query their contents using the Llama 2 model and a FAISS vector store. It combines LangChain for orchestration, Hugging Face embeddings, and Chainlit for the chat interface to process user queries and retrieve contextually relevant answers. Embeddings are generated with sentence-transformers, and responses are produced by running Llama 2 inference locally.

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import PyPDFLoader, DirectoryLoader
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS

DATA_PATH = "Data/"
DB_FAISS_PATH = "vectorstore/db_faiss"


def create_vector_db():
    # Load every PDF in the data folder (one Document per page)
    loader = DirectoryLoader(DATA_PATH, glob='*.pdf', loader_cls=PyPDFLoader)
    documents = loader.load()

    # Split pages into overlapping chunks sized for embedding
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)
    texts = text_splitter.split_documents(documents)

    # Embed the chunks, build the FAISS index, and persist it to disk
    embeddings = HuggingFaceEmbeddings(model_name='sentence-transformers/all-MiniLM-L6-v2')
    db = FAISS.from_documents(texts, embeddings)
    db.save_local(DB_FAISS_PATH)


if __name__ == "__main__":
    create_vector_db()
```
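As a rough illustration of what `chunk_size=500` and `chunk_overlap=50` mean, the sketch below splits text into fixed-size character windows in which each window repeats the last 50 characters of the previous one. It is a simplification written for this explanation, and the helper name `split_with_overlap` is hypothetical: `RecursiveCharacterTextSplitter` additionally prefers to break on paragraph, line, and word boundaries, so its real chunks are often shorter.

```python
def split_with_overlap(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Hypothetical, simplified helper: unlike RecursiveCharacterTextSplitter,
    it ignores paragraph, line, and word boundaries.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Usage on a synthetic 1,200-character string
text = "".join(chr(ord("a") + i % 26) for i in range(1200))
chunks = split_with_overlap(text)
print([len(c) for c in chunks])  # [500, 500, 300]
```

The overlap reduces the chance that a sentence cut at a chunk boundary loses its surrounding context when the chunk is later retrieved on its own.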

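```python
from langchain_community.llms import CTransformers


def load_llm():
    # Load the quantized Llama 2 chat model locally through ctransformers.
    # Generation settings are passed in the `config` dict.
    llm = CTransformers(
        model="llama-2-7b-chat.ggmlv3.q8_0.bin",
        model_type="llama",
        config={"max_new_tokens": 356, "temperature": 0.4},
    )
    return llm
```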
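The `temperature` setting controls how random the generated tokens are. The sketch below shows the standard temperature-scaled softmax the parameter refers to: logits are divided by the temperature before normalizing, so values below 1 concentrate probability on the highest-scoring tokens. It is a generic illustration, not ctransformers' internal sampling code, and the logits are hypothetical.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw logits into sampling probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.4):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these toy logits, lowering the temperature from 1.0 to 0.4 raises the top token's probability from roughly 0.63 to roughly 0.90, which tends to make answers more deterministic.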

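```python
from langchain.chains import RetrievalQA


def retrieval_qa_chain(llm, prompt, db):
    # "stuff" places all retrieved chunks into a single prompt;
    # k=2 retrieves the two most similar chunks for each query.
    return RetrievalQA.from_chain_type(
        llm=llm,
        chain_type='stuff',
        retriever=db.as_retriever(search_kwargs={'k': 2}),
        return_source_documents=True,
        chain_type_kwargs={'prompt': prompt},
    )
```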
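To show what `search_kwargs={'k': 2}` and `chain_type='stuff'` do, the sketch below runs a brute-force nearest-neighbour search with Euclidean (L2) distance, which matches the exact flat index LangChain's FAISS wrapper builds by default, and then "stuffs" the two best chunks into one prompt separated by blank lines. The 2-D vectors, chunk texts, prompt template, and helper names are hypothetical stand-ins: real all-MiniLM-L6-v2 embeddings have 384 dimensions, and the project's actual prompt is not shown here.

```python
import math


def top_k_l2(query: list[float], vectors: list[list[float]], k: int = 2) -> list[int]:
    """Return indices of the k vectors closest to query by Euclidean (L2) distance."""
    distances = [math.dist(query, v) for v in vectors]
    return sorted(range(len(vectors)), key=lambda i: distances[i])[:k]


def stuff_prompt(template: str, chunks: list[str], question: str) -> str:
    """'Stuff' every retrieved chunk into one prompt, separated by blank lines."""
    context = "\n\n".join(chunks)
    return template.format(context=context, question=question)


# Hypothetical toy data: 2-D "embeddings" standing in for 384-D MiniLM vectors.
chunks = ["Llama 2 runs locally.", "FAISS stores vectors.", "Chainlit renders the chat UI."]
vectors = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
query_vec = [0.3, 0.7]

# Hypothetical template; not the project's real prompt.
template = (
    "Use the following context to answer the question.\n"
    "Context: {context}\n"
    "Question: {question}\n"
    "Answer:"
)

hits = top_k_l2(query_vec, vectors, k=2)
print(hits)
print(stuff_prompt(template, [chunks[i] for i in hits], "Where are vectors stored?"))
```

Here the query vector is closest to the second and third chunks, so `hits` is `[1, 2]` and the first chunk is left out of the prompt.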

The chatbot processes PDF documents and answers user queries based on their content. FAISS retrieves the chunks most relevant to each query, Llama 2 generates a human-like response from them, and each answer is returned together with references to its source documents.
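Because the chain is built with `return_source_documents=True`, each response is a dict holding the generated answer under `"result"` and the retrieved chunks under `"source_documents"`. The sketch below formats such a response into an answer-plus-sources message. `Doc` is a minimal stand-in for LangChain's `Document`, `format_answer` is a hypothetical helper, and the file name and page numbers are invented; `PyPDFLoader` records `source` and `page` in each chunk's metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Stand-in for LangChain's Document: page_content plus a metadata dict."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def format_answer(response: dict) -> str:
    """Turn a RetrievalQA-style response into answer text followed by its sources."""
    answer = response["result"].strip()
    sources = response.get("source_documents", [])
    if not sources:
        return answer + "\n\nNo sources found."
    lines = [answer, "", "Sources:"]
    for doc in sources:
        src = doc.metadata.get("source", "unknown")
        page = doc.metadata.get("page", "?")
        lines.append(f"- {src} (page {page})")
    return "\n".join(lines)


# Hypothetical response shaped like RetrievalQA output with return_source_documents=True.
response = {
    "query": "What does FAISS do?",
    "result": " FAISS indexes the document embeddings for similarity search. ",
    "source_documents": [
        Doc("FAISS stores vectors.", {"source": "Data/guide.pdf", "page": 3}),
        Doc("Retrieval uses k=2.", {"source": "Data/guide.pdf", "page": 4}),
    ],
}
print(format_answer(response))
```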

✔ Efficient Information Retrieval – Queries are resolved quickly using FAISS indexing.

✔ Contextually Accurate Responses – Llama 2 generates answers based on retrieved document chunks.

✔ Scalability – The chatbot can index multiple PDFs, and response quality can be improved by tuning retrieval parameters such as chunk size, chunk overlap, and the number of retrieved chunks (`k`).
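These retrieval parameters trade off against each other: smaller chunks tend to give more focused matches but carry less context each, while `k` and `chunk_size` together cap how much text is stuffed into the prompt. The sketch below estimates both quantities under a simplified fixed-window model of overlapping chunks. The helper names, the 100,000-character corpus, and the alternative settings are hypothetical, and the real splitter can produce more chunks because it breaks on natural boundaries.

```python
import math


def estimate_chunks(n_chars: int, chunk_size: int, chunk_overlap: int) -> int:
    """Rough chunk count for fixed-size overlapping windows (a simplification)."""
    if n_chars <= 0:
        return 0
    if n_chars <= chunk_size:
        return 1
    step = chunk_size - chunk_overlap  # new characters contributed by each extra chunk
    return 1 + math.ceil((n_chars - chunk_size) / step)


def max_context_chars(k: int, chunk_size: int) -> int:
    """Upper bound on characters stuffed into the prompt: k chunks of at most chunk_size."""
    return k * chunk_size


# Compare the project's settings with a hypothetical alternative.
for size, overlap, k in [(500, 50, 2), (1000, 100, 4)]:
    n = estimate_chunks(100_000, size, overlap)  # hypothetical 100,000-character corpus
    print(f"chunk_size={size}, overlap={overlap}, k={k}: "
          f"~{n} chunks, <= {max_context_chars(k, size)} context chars")
```

Under this model the project's settings (500/50, `k=2`) give about 223 chunks and at most 1,000 characters of context per query, versus about 111 chunks and up to 4,000 characters for the alternative.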