LlamaChatBot – A Free, Locally Deployable, and Highly Customizable Conversational AI Agent

Authors: Abhishek N

Email: abhismail998@gmail.com

Submission Date: March 27, 2025

Abstract

LlamaChatBot is an advanced conversational AI platform designed to deliver a cost-free, locally executable, and highly adaptable dialogue system powered by Meta AI’s Llama2 family of language models. Unlike subscription-based Software-as-a-Service (SaaS) or Platform-as-a-Service (PaaS) AI solutions, LlamaChatBot eliminates financial barriers and vendor dependencies, offering users full control over a sophisticated natural language processing (NLP) agent. Built with a Streamlit frontend and integrated with the Replicate API, it provides a seamless user experience while supporting local deployment and potential cloud scalability. This report explores the technical architecture, operational workflow, and unique advantages of LlamaChatBot, highlighting its potential as a versatile, repurposable AI tool for diverse applications without requiring ongoing payments.

Introduction

Conversational AI has become a cornerstone of modern technology, yet its accessibility is often restricted by proprietary ecosystems and recurring costs. LlamaChatBot addresses this by using the openly available Llama2 models (7B, 13B, and 70B variants) to provide a free, locally deployable alternative. Developed by Abhishek Nandakumar, LlamaChatBot is engineered for flexibility, enabling users to repurpose it for tasks such as education, customer service, or research with minimal human intervention. Its key strengths (zero-cost operation, local execution, and extensive customization) position it as a strong contender in AI bot competitions and a practical solution for both individual and enterprise use.

Technical Innovation and System Architecture

2.1 Overview

LlamaChatBot’s architecture is modular, comprising:

Frontend Interface: A Streamlit-based web application for user interaction.

Backend Processing: Integration with the Replicate API to harness Llama2 models for response generation.

Because inference runs on Replicate rather than on the user’s machine, the interface stays responsive on standard hardware while the model backend scales independently.

2.2 Frontend: Streamlit-Powered Interaction

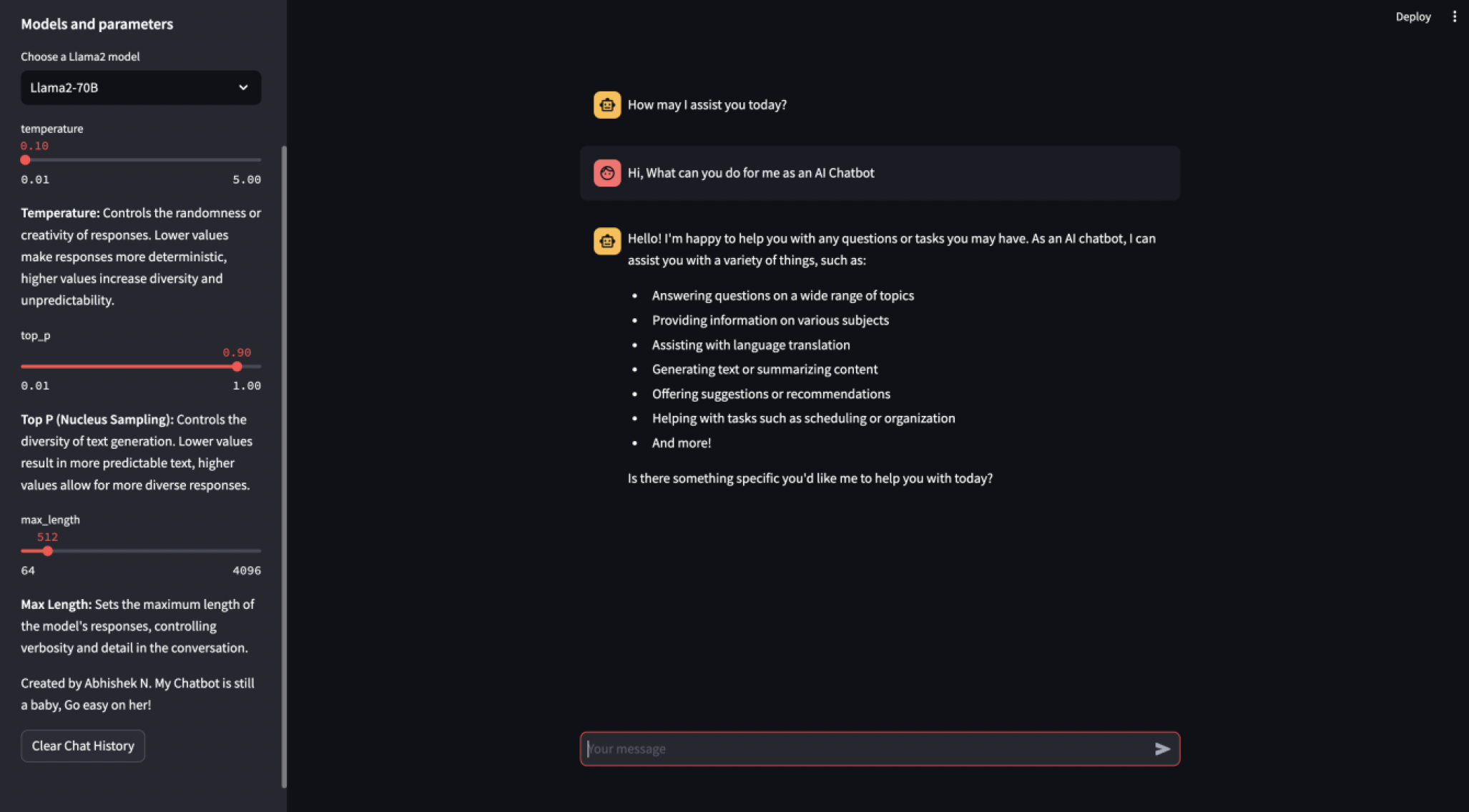

The Streamlit frontend is designed for simplicity and real-time engagement:

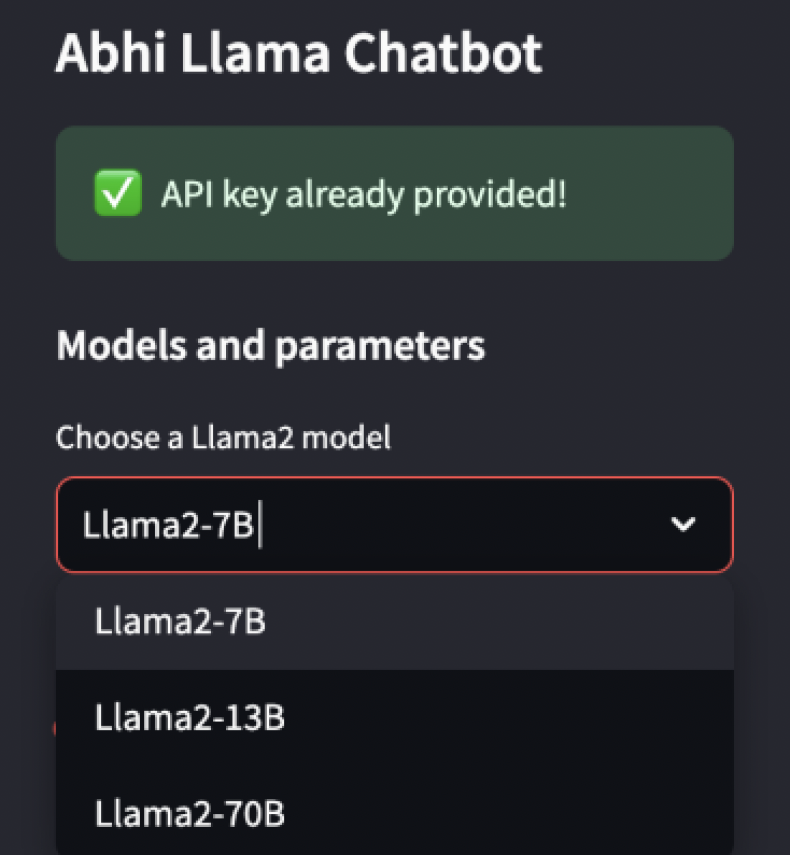

Model Selection: Options include Llama2-7B, Llama2-13B, and Llama2-70B, allowing users to trade off between speed and response depth.

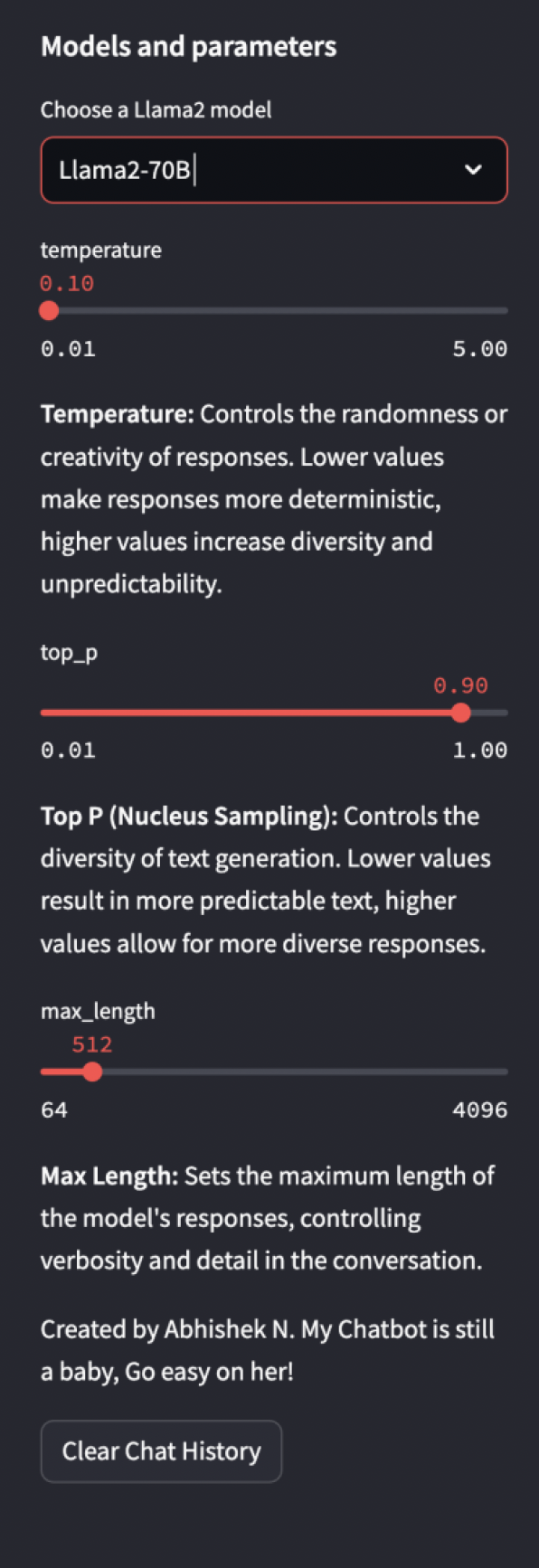

Hyperparameter Controls: Sliders for temperature (creativity), top_p (diversity), and max_length (length) enable fine-grained tuning of outputs.

Conversation Interface: A dynamic chat window displays dialogue history, with a "Clear Chat History" button for session resets.

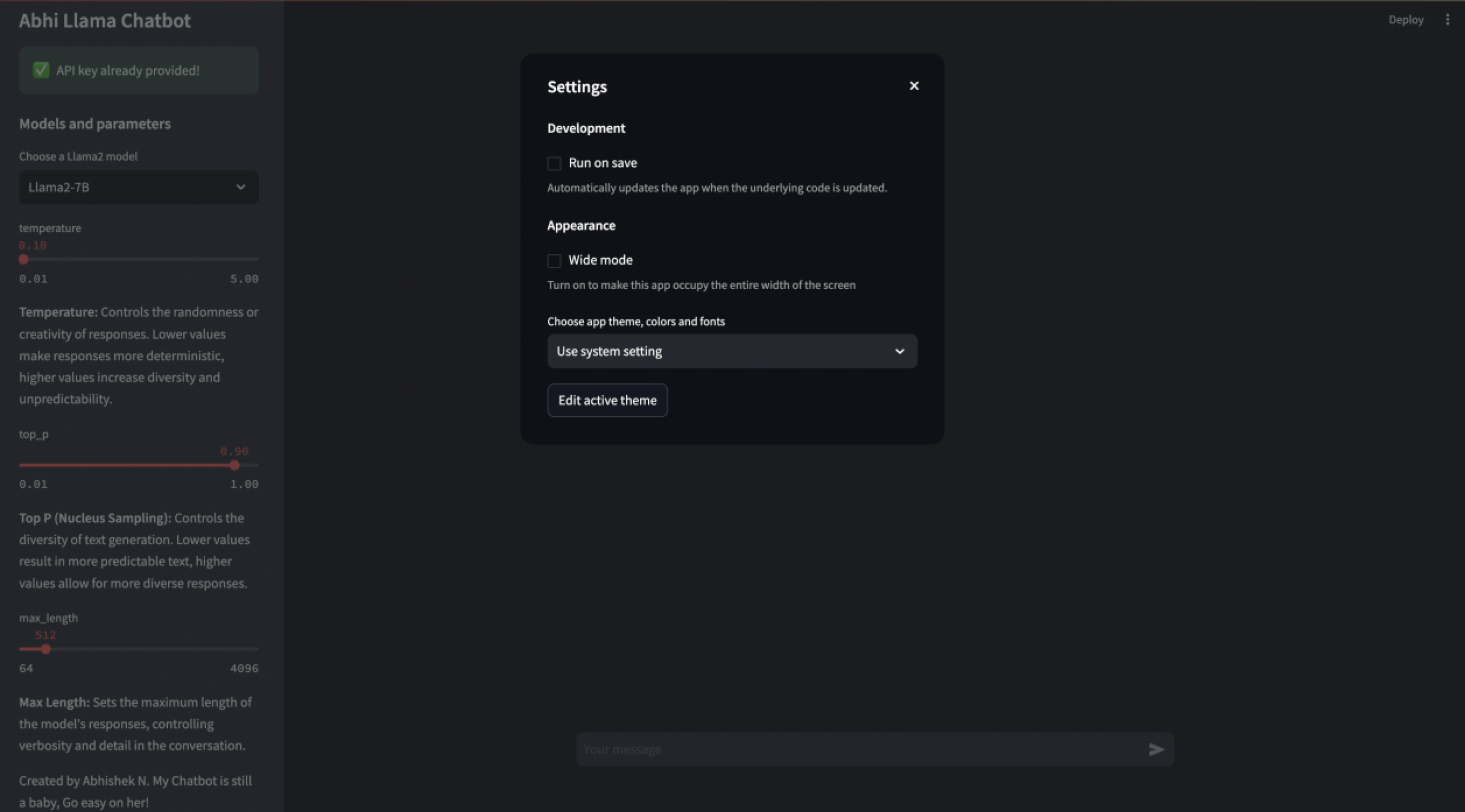

Streamlit’s lightweight framework ensures the interface runs efficiently on local machines, requiring only a Python environment.
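To make the effect of the temperature and top_p sliders concrete, the following minimal sketch applies both to a toy next-token distribution. It is a plain-Python illustration of the general technique, not LlamaChatBot's code or Llama2's actual sampler; the token names, logit values, and function names are invented for the example.

```python
import math

def apply_temperature(logits, temperature):
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    scaled = [value / temperature for value in logits.values()]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for token, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())  # renormalize the surviving tokens
    return {token: p / total for token, p in kept.items()}

# Hypothetical logits for four candidate next tokens.
toy_logits = {"cat": 2.0, "dog": 1.0, "fish": 0.5, "rock": -1.0}

focused = apply_temperature(toy_logits, temperature=0.1)  # nearly deterministic
varied = apply_temperature(toy_logits, temperature=1.0)   # probability spread out
nucleus = top_p_filter(varied, top_p=0.9)                 # drops the unlikely tail
```

At temperature 0.1 almost all probability mass lands on the top token, which is why low values favor coherent, repeatable answers; at 1.0 the alternatives stay in play, and top_p then trims the least likely ones before sampling.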

2.3 Backend: Llama2 via Replicate API

The backend uses the Replicate platform to access Llama2 models, which are among the most capable openly available LLMs:

Model Variants: 7B (resource-efficient), 13B (balanced), and 70B (high-performance) cater to diverse hardware and use cases.

Inference Engine: Replicate’s cloud infrastructure handles computation, though local execution is possible with frameworks like Hugging Face Transformers or Ollama.

The system maps the user’s selection to the corresponding Replicate model identifier (e.g., replicate/llama70b-v2-chat), so each request is routed to the chosen variant.
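One simple way to express this selection step is a lookup table, sketched below. Only the 70B identifier appears in this report; the 7B and 13B entries are placeholders, and the names MODEL_IDENTIFIERS and resolve_model are hypothetical rather than taken from the application's source.

```python
# Only the 70B identifier is given in the report. The other two values are
# placeholders to be replaced with the identifiers actually in use.
MODEL_IDENTIFIERS = {
    "Llama2-7B": "<7b-model-identifier>",
    "Llama2-13B": "<13b-model-identifier>",
    "Llama2-70B": "replicate/llama70b-v2-chat",
}

def resolve_model(selection: str) -> str:
    """Return the identifier for a UI selection, failing loudly on unknown names."""
    try:
        return MODEL_IDENTIFIERS[selection]
    except KeyError:
        raise ValueError(f"Unknown model selection: {selection!r}") from None
```

Keeping the mapping in one place means a new variant can be added without touching the response-generation code.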

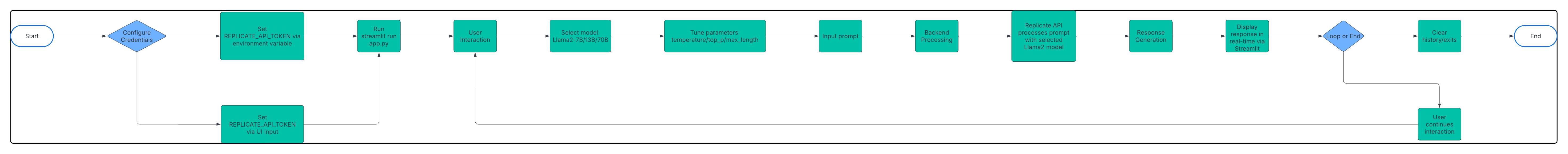

2.4 Local Deployment Workflow

LlamaChatBot’s deployment is streamlined for local use:

Setup: A virtual environment (chatbot_env) isolates dependencies (python -m venv chatbot_env).

Dependencies: Install Streamlit and Replicate via pip install streamlit replicate.

Credentials: The REPLICATE_API_TOKEN is managed securely as an environment variable, with a UI fallback for manual entry.

Launch: Execute streamlit run app.py to start the application.

This process requires only Python 3.x and an internet connection for Replicate, though offline operation is achievable with local model hosting.
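The credential step above (environment variable first, manual entry as a fallback) could look roughly like the sketch below. The function name resolve_api_token and its parameters are assumptions for illustration; in the application, the fallback value would come from a text input in the UI.

```python
import os

def resolve_api_token(ui_value="", environ=None):
    """Prefer REPLICATE_API_TOKEN from the environment; fall back to the value typed in the UI."""
    environ = os.environ if environ is None else environ
    token = environ.get("REPLICATE_API_TOKEN", "").strip() or ui_value.strip()
    if not token:
        raise RuntimeError("No Replicate API token found in the environment or the UI.")
    return token
```

Passing the environment in as a parameter keeps the function easy to test without modifying the real process environment.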

2.5 Code Highlights

The Python implementation is modular and robust:

State Management: st.session_state tracks conversation history across interactions.

Response Generation: The generate_llama2_response function builds a dialogue context and queries the Replicate API with user-defined parameters.

User Feedback: A st.spinner and incremental text rendering enhance the real-time experience.
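The response-generation step can be sketched as follows. The function name generate_llama2_response comes from the description above, but the signature, the build_prompt helper, the system prompt, and the message format (dicts with "role" and "content" keys) are assumptions. The run argument is assumed to behave like replicate.run, returning an iterable of text chunks; a stand-in is used here so the sketch runs without network access.

```python
def build_prompt(messages, system_prompt="You are a helpful assistant."):
    """Flatten the chat history into one prompt string ending with an open assistant turn."""
    lines = [system_prompt]
    for message in messages:
        speaker = "User" if message["role"] == "user" else "Assistant"
        lines.append(f"{speaker}: {message['content']}")
    lines.append("Assistant:")
    return "\n\n".join(lines)

def generate_llama2_response(messages, model_id, run,
                             temperature=0.1, top_p=0.9, max_length=512):
    """Query the model through `run` and join the returned text chunks into one reply."""
    chunks = run(
        model_id,
        input={
            "prompt": build_prompt(messages),
            "temperature": temperature,
            "top_p": top_p,
            "max_length": max_length,
        },
    )
    return "".join(chunks)

# Stand-in for the real API call (hypothetical; the app would pass replicate.run).
def fake_run(model_id, input):
    return iter(["Hello", " there", "!"])

history = [{"role": "user", "content": "Hi"}]
reply = generate_llama2_response(history, "replicate/llama70b-v2-chat", fake_run)
```

In the Streamlit app, history would live in st.session_state so that it survives the script reruns triggered by each interaction.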

Figures: UI interface; model selection; hyperparameter selection and explanation.

Competitive Differentiators

3.1 Zero-Cost Operation

LlamaChatBot avoids the recurring subscription fees of SaaS/PaaS offerings (e.g., OpenAI’s GPT-4, Anthropic’s Claude). Replicate’s free allowance, where available, covers light non-commercial experimentation, and local deployment with Llama2 models incurs no per-request costs. This aligns with the open-source movement, making advanced AI accessible to all.

3.2 Local Execution and Data Privacy

Hosting the model locally keeps prompts and responses on the user’s device, a critical edge over cloud-only platforms; requests sent through the Replicate API, by contrast, leave the machine. With tools like Ollama or llama.cpp, LlamaChatBot can operate offline on hardware as modest as a 16GB VRAM GPU for a quantized 13B model (its 16-bit weights alone occupy about 26GB).

3.3 Extensive Customization

Unlike pre-configured commercial bots, LlamaChatBot offers granular control over response behavior via hyperparameters. Advanced users can also fine-tune Llama2 models on custom datasets, using parameter-efficient techniques like LoRA (Low-Rank Adaptation) or full-parameter training, to build specialized applications.
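A short calculation shows why LoRA is so much cheaper than full-parameter training. LoRA freezes a weight matrix and learns its update as the product of two thin matrices of rank r, so the trainable count per matrix is r times the sum of the two dimensions. The sketch below uses the Llama2-7B hidden size of 4096 and a rank of 8; the rank is an illustrative choice, not a setting from this project.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

hidden = 4096                      # hidden size of Llama2-7B
full = hidden * hidden             # parameters in one square projection matrix
lora = lora_trainable_params(hidden, hidden, rank=8)  # rank 8 is an assumption
fraction = lora / full             # share of that matrix's parameters that are trained
```

For this one matrix, LoRA trains 65,536 parameters instead of 16,777,216, or about 0.4% of the original.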

3.4 Vendor-Agnostic Scalability

The system’s design supports cloud migration (e.g., AWS, Azure) without proprietary lock-in, contrasting with PaaS solutions that bind users to specific providers. This flexibility ensures long-term adaptability.

3.5 Comparison to Peers

ChatGPT: Cloud-only with no local option; full access to its most capable models requires a subscription.

Grok (xAI): Cloud-hosted, limited customization, and fee-driven.

Other Llama-Based Tools: Often lack polished UIs or require complex setup; LlamaChatBot integrates Streamlit’s simplicity with Replicate’s power.

Challenges and Solutions

4.1 API Rate Limits

Replicate’s rate limits were addressed through request caching and optimized API calls, ensuring reliability under moderate usage.
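The report does not describe how the caching was implemented; one plausible approach is to key stored replies on the prompt and generation settings, as in the sketch below. All names here (make_cached_generator, fake_generate) are hypothetical, and the API call is replaced by a stand-in that records how often it is invoked.

```python
def make_cached_generator(generate):
    """Wrap `generate` so identical (prompt, settings) requests reuse the stored reply."""
    cache = {}

    def cached(prompt, model_id, temperature, top_p, max_length):
        key = (prompt, model_id, temperature, top_p, max_length)
        if key not in cache:
            cache[key] = generate(prompt, model_id, temperature, top_p, max_length)
        return cache[key]

    return cached

calls = []

def fake_generate(prompt, model_id, temperature, top_p, max_length):
    calls.append(prompt)  # record each real (uncached) call
    return f"reply to: {prompt}"

cached_generate = make_cached_generator(fake_generate)
first = cached_generate("Hi", "replicate/llama70b-v2-chat", 0.1, 0.9, 512)
second = cached_generate("Hi", "replicate/llama70b-v2-chat", 0.1, 0.9, 512)  # served from cache
```

One trade-off to keep in mind: with a nonzero temperature, caching pins the first sampled reply, so repeated prompts no longer produce varied answers.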

4.2 Parameter Optimization

Achieving a balance between response quality and resource use required iterative tuning. Default settings (temperature=0.1, top_p=0.9, max_length=512) prioritize coherence, with user overrides for flexibility.

4.3 Hardware Requirements

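Larger models demand significant resources: Llama2-70B needs roughly 140GB of memory for its 16-bit weights alone and fits within about 48GB of VRAM only when quantized to around 4 bits per weight. Offering tiered model options accommodates varying hardware capabilities.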
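The memory needed for model weights can be estimated as parameter count times bytes per weight. The sketch below applies that rule of thumb to the three variants using their nominal sizes; it ignores activations and the key-value cache, so real requirements are somewhat higher. The function name is an illustrative choice.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Memory for the weights alone, in GB (10^9 bytes); runtime overhead adds more."""
    return params_billions * bits_per_weight / 8

for name, size in [("Llama2-7B", 7), ("Llama2-13B", 13), ("Llama2-70B", 70)]:
    print(f"{name}: {weight_memory_gb(size, 16):.1f} GB at 16-bit, "
          f"{weight_memory_gb(size, 4):.1f} GB at 4-bit")
```

By this estimate the 70B model takes 140 GB at 16-bit precision and 35 GB at 4-bit, which is why quantization is what brings it within reach of a 48GB GPU.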

Future Roadmap

5.1 Cloud Deployment

Migration to cloud platforms (e.g., AWS Lambda, Google Cloud Run) with Dockerized containers will enhance scalability and accessibility.

5.2 User Authentication

Implementing OAuth or JWT will enable personalized experiences, such as saved chat histories, without compromising the free model.

5.3 Enhanced NLP Capabilities

Adding sentiment analysis, entity recognition, or multilingual support via libraries like spaCy or Transformers will enrich interactions.

5.4 Fully Offline Operation

Local hosting of Llama2 models (e.g., via Ollama) will remove API reliance, making LlamaChatBot entirely self-sufficient.

Conclusion

LlamaChatBot reimagines conversational AI as a free, locally deployable, and highly customizable platform. Its integration of Streamlit’s usability, Replicate’s inference capabilities, and Llama2’s versatility distinguishes it in AI bot competitions. By eliminating subscription costs and enabling repurposing with minimal human input, it offers a scalable, privacy-focused alternative to commercial solutions. With planned enhancements, LlamaChatBot is poised to redefine accessible AI, serving as a blueprint for future innovations.

Acknowledgments

Gratitude to Meta AI for Llama2, Replicate for inference support, and the open-source community for enabling this project’s foundation.