Hand-Gesture Control for Subway Surfers Game

Hand gesture game control

This project implements a hand gesture recognition system using OpenCV and MediaPipe that lets users control the popular game Subway Surfers through hand movements. The system captures real-time video from a webcam, processes hand landmarks to recognize gestures, and translates those gestures into keyboard commands for the game.

Features

Real-time hand gesture detection using MediaPipe.

Smooth integration with the game, allowing for an engaging and interactive gaming experience.

Gesture-based control for Subway Surfers:

- Jump: Up arrow

- Roll: Down arrow

- Move Left: Left arrow

- Move Right: Right arrow

Requirements

- Python 3.x

- OpenCV

- Mediapipe

- PyAutoGUI

- NumPy

- scikit-learn

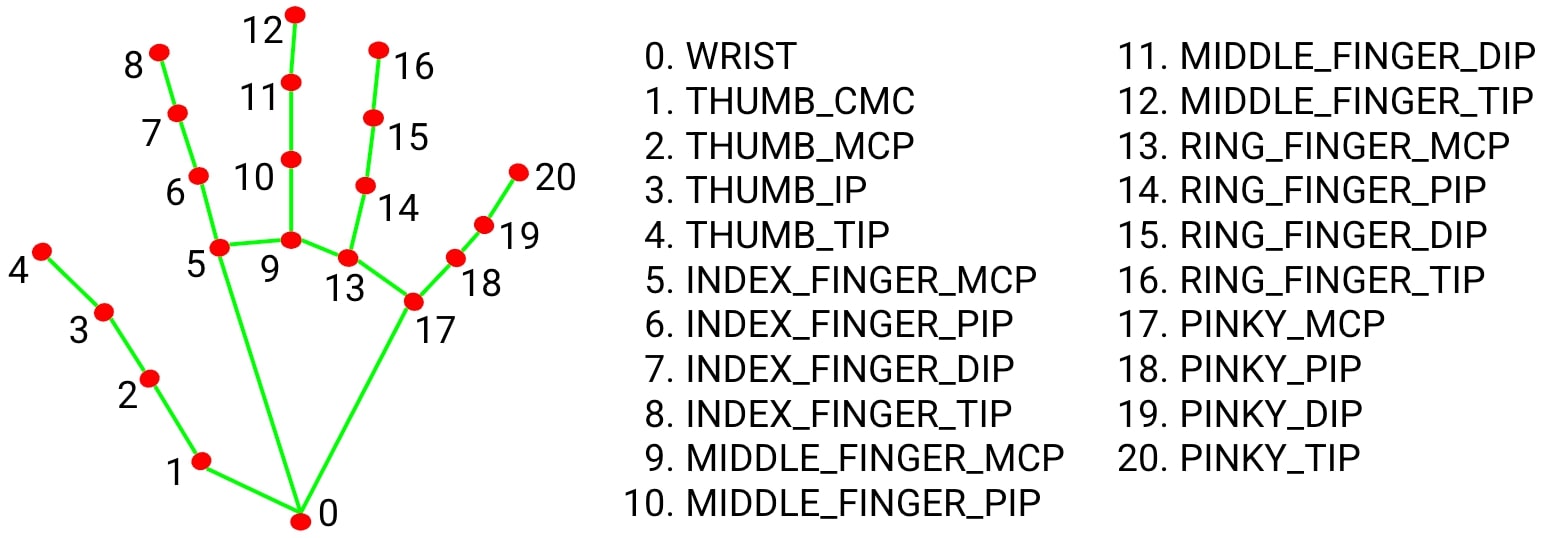

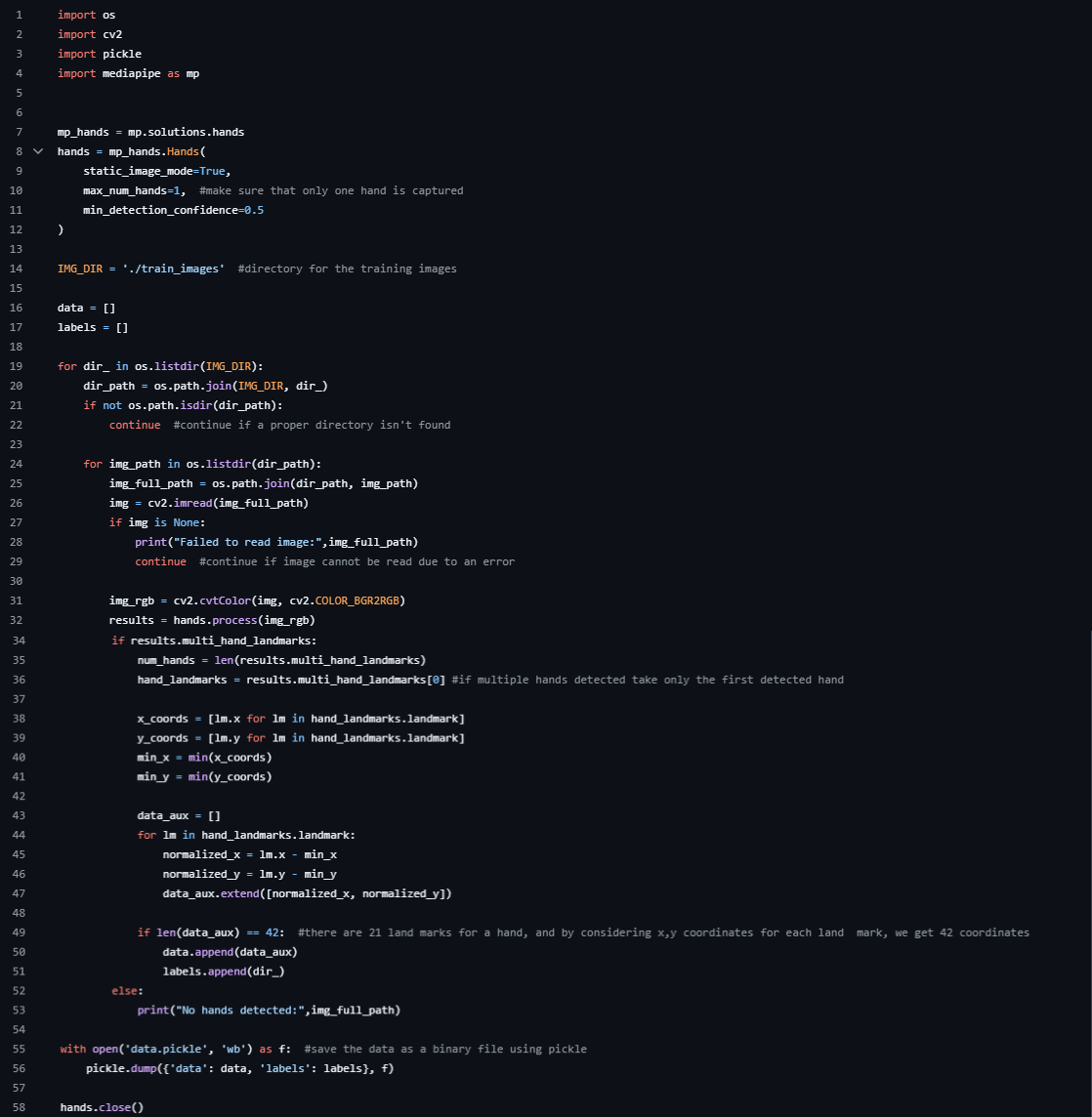

This project uses the MediaPipe Hand Landmark model to recognize hands. The model returns 21 data points (landmarks) per hand.

I could have used a matrix-based approach to detect how the hand is placed or to track hand movement, but I later realized it would be much easier to train a model, because this scenario needs only 5 hand gestures. As a result, I used the MediaPipe Hand Landmark model to mark each hand appearing on screen with 21 data points and converted them to a set of normalized x and y coordinates, with each sample tagged with its label (left, right, jump, roll, nothing).
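The conversion from 21 landmarks to a feature vector could look roughly like the sketch below. The exact normalization used in this project is not shown here, so this assumes one common choice: subtracting the smallest x and y so the features do not depend on where the hand sits in the frame. The function name `landmarks_to_features` is hypothetical. With MediaPipe, the `(x, y)` pairs would typically be read from each detected hand's `landmark` entries.

```python
from typing import List, Sequence, Tuple


def landmarks_to_features(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Flatten 21 (x, y) landmarks into 42 position-independent features.

    Assumption: normalization means shifting by the minimum x and y.
    """
    if len(points) != 21:
        raise ValueError("expected 21 landmarks per hand")
    min_x = min(x for x, _ in points)
    min_y = min(y for _, y in points)
    features: List[float] = []
    for x, y in points:
        features.append(x - min_x)  # x relative to the left-most landmark
        features.append(y - min_y)  # y relative to the top-most landmark
    return features


# Made-up landmark positions, only to show the shape of the output.
example_points = [(0.5 + i * 0.01, 0.2 + i * 0.02) for i in range(21)]
example_features = landmarks_to_features(example_points)
```

Each labeled sample is then one 42-value row paired with one of the five labels.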

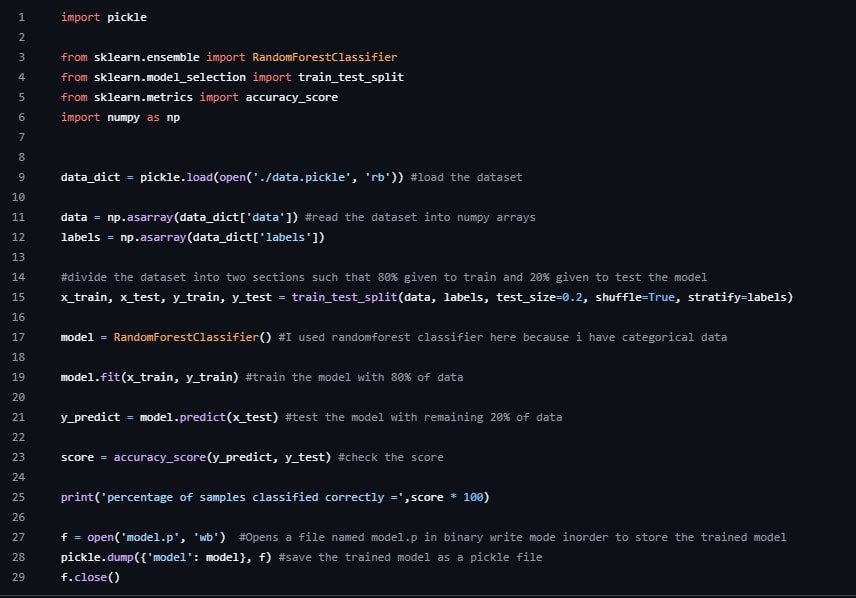

Then I trained a model using RandomForestClassifier from sklearn.
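A minimal training sketch is shown below, assuming each sample is a 42-value feature row with one of the five labels. The data here is synthetic placeholder data generated with NumPy, not the project's recorded gestures, and the hyperparameters (`n_estimators=100`, an 80/20 split) are illustrative assumptions rather than the values used in this project.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

LABELS = ["left", "right", "jump", "roll", "nothing"]

# Placeholder data: 40 fake samples per label, clustered around a random center.
rng = np.random.default_rng(0)
centers = rng.random((len(LABELS), 42))
X = np.vstack([c + 0.01 * rng.standard_normal((40, 42)) for c in centers])
y = np.repeat(LABELS, 40)  # 40 copies of each label, in the same order as X

# Hold out 20% of the samples to check the classifier on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"held-out accuracy: {accuracy:.2f}")
```

In the real project, `X` and `y` would come from the landmark samples collected with the webcam.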

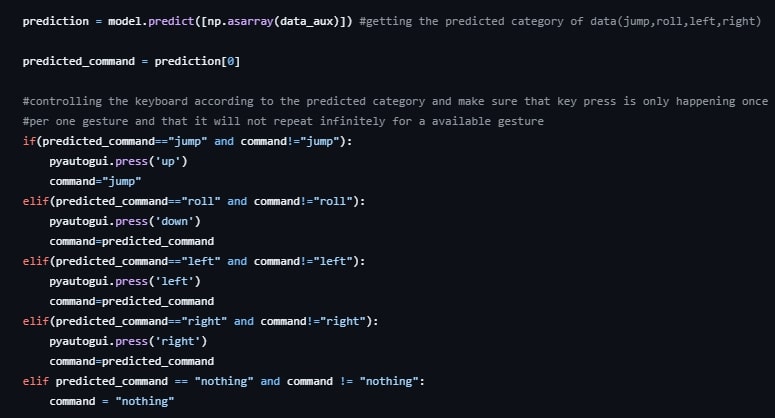

During detection, the hand is recognized again with the same Hand Landmark model, and its landmarks are fed as input to the trained model. The model outputs the label that matches the hand gesture (left, right, jump, roll, nothing). Using the Python pyautogui module, I converted these labels into the keystrokes below to automatically control the in-game character according to the gestures:

- Jump: Up arrow

- Roll: Down arrow

- Move Left: Left arrow

- Move Right: Right arrow
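The mapping above can be sketched as a small lookup plus a dispatch function. The names `KEY_FOR_LABEL` and `dispatch` are hypothetical, and pressing a key only when the label changes is an assumption added here to avoid repeating a keystroke on every frame; the project may handle repeats differently. The `press` callable is injected so the sketch runs without a display; in the live loop it would be `pyautogui.press`.

```python
from typing import Callable, List

# Predicted label -> arrow key name. "nothing" has no entry on purpose.
KEY_FOR_LABEL = {"jump": "up", "roll": "down", "left": "left", "right": "right"}


def dispatch(label: str, previous_label: str, press: Callable[[str], object]) -> str:
    """Press the key for `label` once when the gesture changes.

    Returns `label` so the caller can pass it back as `previous_label`.
    """
    key = KEY_FOR_LABEL.get(label)  # None for "nothing" or unknown labels
    if key is not None and label != previous_label:
        press(key)
    return label


# Simulated sequence of per-frame predictions; keys are recorded in a list
# instead of being sent to the game.
pressed: List[str] = []
previous = "nothing"
for predicted in ["nothing", "jump", "jump", "nothing", "left", "roll"]:
    previous = dispatch(predicted, previous, pressed.append)
# pressed is now ["up", "left", "down"]
```

Holding the same gesture across several frames sends the key once, and returning to "nothing" resets it so the gesture can fire again.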