Since AI companies published their LLM APIs, integrating AI chats into client apps has become rather easy. Given that LLM APIs are HTTP-based and that there are many wrapper libraries for almost any platform, one might implement the chat directly in a mobile app or a website. However, that might not be a good architectural decision. Among the reasons are:

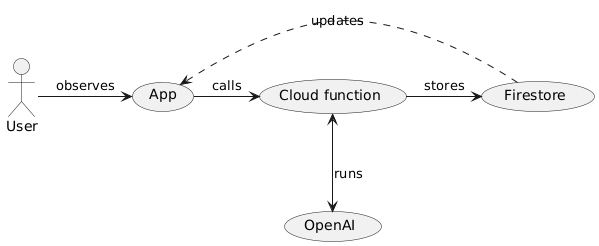

Thus, it might be a good idea to move the AI interaction to the back-end and update your client with just the results of AI runs.

Firebase AI Chat uses Firebase, a popular platform for web and mobile application development, to run your AI in a scalable and reliable way.

Check out this short video for a quick introduction to the travel agency AI-Concierge experiment built with this engine.

The task described in this publication started as an experiment. Being mobile developers, we used the tools we know best: the Firebase platform. But as the project progressed, we decided that its core might come in handy for those who want to launch their own LLM chat on the same technology stack. So the chat engine behind the experiment is now available as open source.

The chat engine strives to implement a client-server Unidirectional Data Flow (UDF) architecture:

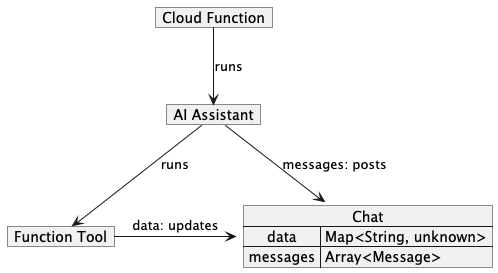
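To make the idea concrete, here is a minimal in-memory sketch of such a loop for a single chat: the client only sends commands, the server applies them in a pure reducer and publishes state snapshots, and the client renders whatever it receives. `ChatServer`, `Command` and `reduce` are hypothetical names rather than the library's API, and the AI run is replaced by a canned reply; in a real deployment the state would live in the back-end and be observed by the client.

```typescript
// Hypothetical sketch of client-server UDF; names are illustrative, not the library's API.

type ChatStatus = "userInput" | "complete";

interface ChatState {
    readonly status: ChatStatus;
    readonly messages: ReadonlyArray<string>;
}

// The only thing a client may send to the server
type Command =
    | { kind: "post"; text: string }
    | { kind: "close" };

type Listener = (state: ChatState) => void;

// Pure reducer: the next state depends only on the current state and the command.
// A canned reply stands in for the AI run.
function reduce(state: ChatState, command: Command): ChatState {
    switch (command.kind) {
        case "post":
            if (state.status !== "userInput") return state; // closed chats ignore input
            return {
                status: "userInput",
                messages: [...state.messages, command.text, `AI: got "${command.text}"`],
            };
        case "close":
            return { ...state, status: "complete" };
    }
}

// Server side: owns the state, applies commands and publishes snapshots
class ChatServer {
    private state: ChatState = { status: "userInput", messages: [] };
    private readonly listeners: Listener[] = [];

    subscribe(listener: Listener): void {
        this.listeners.push(listener);
        listener(this.state); // deliver the current snapshot immediately
    }

    dispatch(command: Command): void {
        this.state = reduce(this.state, command);
        this.listeners.forEach((listener) => listener(this.state));
    }
}

// Client side: renders what the server publishes and never mutates state itself
const server = new ChatServer();
const rendered: ChatState[] = [];
server.subscribe((state) => rendered.push(state));
server.dispatch({ kind: "post", text: "Hello" });
server.dispatch({ kind: "close" });
```

Because the client never changes chat state on its own, every device observing the same chat ends up showing the same snapshot.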

Besides the messages, each chat maintains a data object: the product of the goal your assistant is solving. Function tools bound to your assistant read and update this data, producing the final result of the chat, such as an order or a summary:

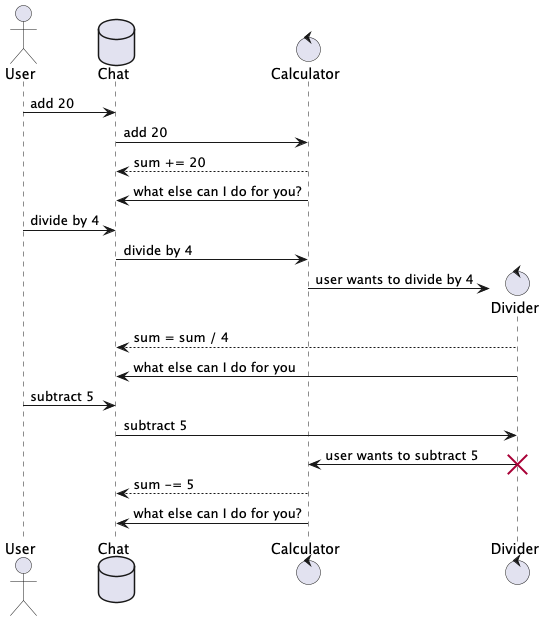

As your main goal grows more complex, it is worth delegating simpler tasks to several assistants (or agents), each trained to handle a certain part of the main goal. Thus a crew of assistants works as a team, decomposing the main task and fulfilling it step by step. The library supports switching assistants on demand, depending on the context. Whenever the main agent decides that a task suits another assistant, it may hand the chat over to that assistant, providing the current context and supplementary data. Consider an example where all division operations are handled by the main calculator's subordinate, the Divider:
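The Divider scenario can be sketched without any AI engine. In this hypothetical illustration the agents' "decisions" are hard-coded, and the names (`runTurn`, `Outcome`, the `Divider result: ` prefix) are ours, not the library's. A stack of active agents records who handed the chat to whom, so control returns to the caller once the subordinate is done.

```typescript
// Hypothetical sketch: the main calculator delegates division to a Divider agent.
// "LLM decisions" are hard-coded so the flow runs without any AI engine.

type AgentName = "calculator" | "divider";

type Outcome =
    | { kind: "answer"; text: string }                      // reply to the user
    | { kind: "handOver"; to: AgentName; message: string }  // switch the chat to another agent
    | { kind: "handBack"; message: string };                // return control to the caller

type Agent = (message: string) => Outcome;

// Parses "a op b", e.g. "8 / 2"
function parse(input: string): [number, string, number] | null {
    const m = /^\s*(-?\d+(?:\.\d+)?)\s*([-+*\/])\s*(-?\d+(?:\.\d+)?)\s*$/.exec(input);
    return m ? [Number(m[1]), m[2], Number(m[3])] : null;
}

const RESULT_PREFIX = "Divider result: ";

const agents: Record<AgentName, Agent> = {
    calculator: (message) => {
        // An internal message from the Divider carries the finished result
        if (message.indexOf(RESULT_PREFIX) === 0) {
            return { kind: "answer", text: message.slice(RESULT_PREFIX.length) };
        }
        const expr = parse(message);
        if (expr === null) return { kind: "answer", text: "Please type an expression like 8 / 2" };
        const [a, op, b] = expr;
        if (op === "+") return { kind: "answer", text: String(a + b) };
        if (op === "-") return { kind: "answer", text: String(a - b) };
        if (op === "*") return { kind: "answer", text: String(a * b) };
        // Division is out of the calculator's scope: hand the chat over with context
        return { kind: "handOver", to: "divider", message };
    },
    divider: (message) => {
        const expr = parse(message);
        if (expr === null || expr[1] !== "/") {
            return { kind: "handBack", message: RESULT_PREFIX + "not a division" };
        }
        const [a, , b] = expr;
        const text = b === 0 ? "division by zero is undefined" : String(a / b);
        return { kind: "handBack", message: RESULT_PREFIX + text };
    },
};

// Runs one user turn, keeping a stack of active agents
function runTurn(input: string): { answer: string; trace: AgentName[] } {
    const stack: AgentName[] = ["calculator"];
    const trace: AgentName[] = [];
    let message = input;
    for (let step = 0; step < 10; step++) {
        const active = stack[stack.length - 1];
        trace.push(active);
        const outcome = agents[active](message);
        if (outcome.kind === "answer") return { answer: outcome.text, trace };
        if (outcome.kind === "handOver") stack.push(outcome.to);
        else stack.pop();
        message = outcome.message;
    }
    throw new Error("Too many hand-overs");
}
```

For `runTurn("8 / 2")` the trace is calculator, divider, calculator: the main agent never does the division itself, it only reports the result its subordinate handed back.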

To take advantage of the different LLM engines you may want to use, the library is built in a modular way and supports different platforms. Combined with the Agentic Flow support, this lets you assign different tasks to different engines, getting the most out of each one for its particular task. At the time of writing, the available engines are:

Working at one of the leading private aviation companies, our team strives to create a fundamentally new travel experience and make booking as efficient as possible.

To manage an order, the client may use our set of web and mobile applications. However, a chat or a phone call with a personal concierge to settle all the details is always a good option.

We decided to check how modern LLMs could help the concierge with daily tasks and gather all the data required for the trip.

We picked the three major tasks that make up a private flight order:

Each step requires certain data from the client, as well as access to business data that limits the choice and fits the order into our business flow. We soon found that building a mega-prompt to manage all the goals together is a tough task. Inspired by multi-agent solutions such as CrewAI and ChatDev, we updated our Firebase chat engine so that several agents can work as a team, each solving its own part of the complex order.

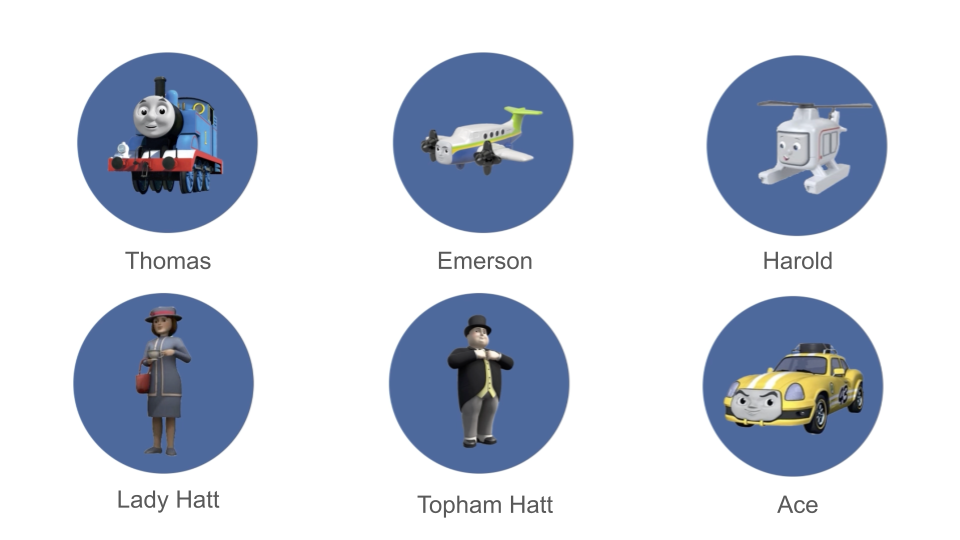

The agents hand the chat over to one another, selecting the most appropriate team member for each task. We needed some inspiration to give our agents friendly names, so we took the famous British TV series Thomas & Friends as the prototype of a team working together in transportation :)

Here's our team of AI agents:

The roles of the team members in a crew:

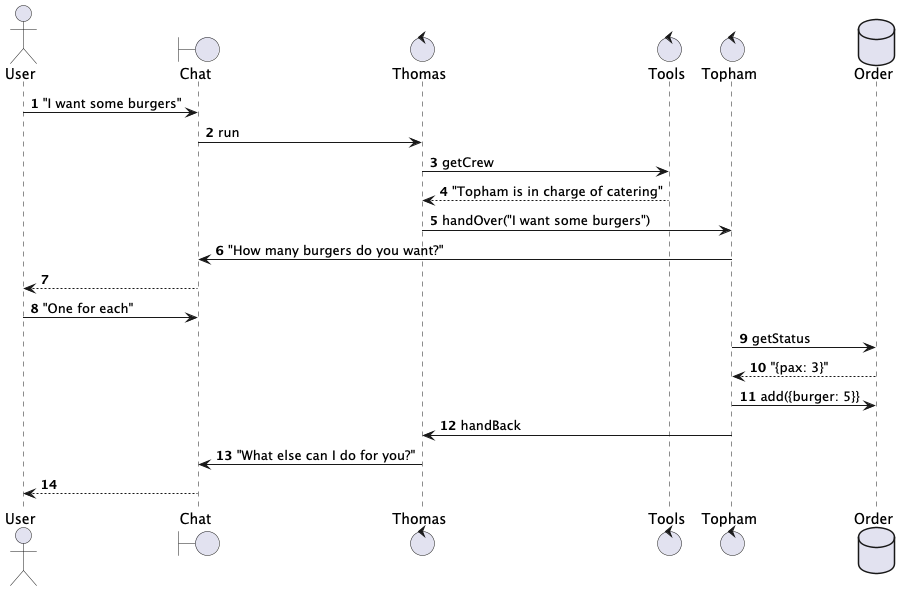

At the beginning, Thomas controls the chat and responds to the client's requests.

When he gets a special request, such as catering, he calls the getCrew function.

Thomas chooses the most appropriate crew member for the task and hands the chat over to him, passing some instruction messages internally.

Above you can see an example of handing the chat over to Topham whenever the client asks about catering. Tuned to handle catering requests, Topham manages the order, fulfils the client's request and passes control back as soon as he's done.

You can check Thomas's prompt and the switching calls here
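A possible shape for the tools behind this hand-over is sketched below. The crew names come from this article, but the role descriptions, the return shapes, the signature of `getCrew` and the companion `switchToCrewMember` tool are our assumptions for illustration. Note that the tool only lists the team; choosing a member is left to the LLM, which then calls the switch tool with its internal instructions.

```typescript
// Hypothetical sketch of Thomas' crew tools. Crew names come from the article;
// descriptions, tool signatures and return shapes are illustrative assumptions.

interface CrewMember {
    readonly name: string;
    readonly description: string;
}

const crew: ReadonlyArray<CrewMember> = [
    { name: "Emerson", description: "Collects departure and destination points" },
    { name: "Lady Hatt", description: "Validates locations and resolves ambiguous ones" },
    { name: "Harold", description: "Fills in flight details and finds the optimal route" },
    { name: "Ace", description: "Plans the airport transfer" },
    { name: "Topham", description: "Handles catering requests" },
];

type ToolResult =
    | { result: string }                           // text returned to the AI
    | { error: string }                            // error reported to the AI
    | { handOverTo: string; messages: string[] };  // switch the chat to a crew member

// getCrew: shows the main agent who is available; the LLM picks a member itself
function getCrew(): ToolResult {
    return { result: JSON.stringify(crew) };
}

// Hands the chat over to the chosen member, passing internal instructions along
function switchToCrewMember(args: Record<string, unknown>): ToolResult {
    const name = args["name"];
    const instructions = args["instructions"];
    if (typeof name !== "string" || !crew.some((m) => m.name === name)) {
        // An error lets the LLM correct itself instead of switching to a non-existent agent
        return { error: `Unknown crew member: ${String(name)}. Call getCrew to see the team.` };
    }
    return {
        handOverTo: name,
        messages: typeof instructions === "string" ? [instructions] : [],
    };
}
```

Validating the name in code rather than trusting the model keeps a hallucinated agent name from ever reaching the hand-over logic.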

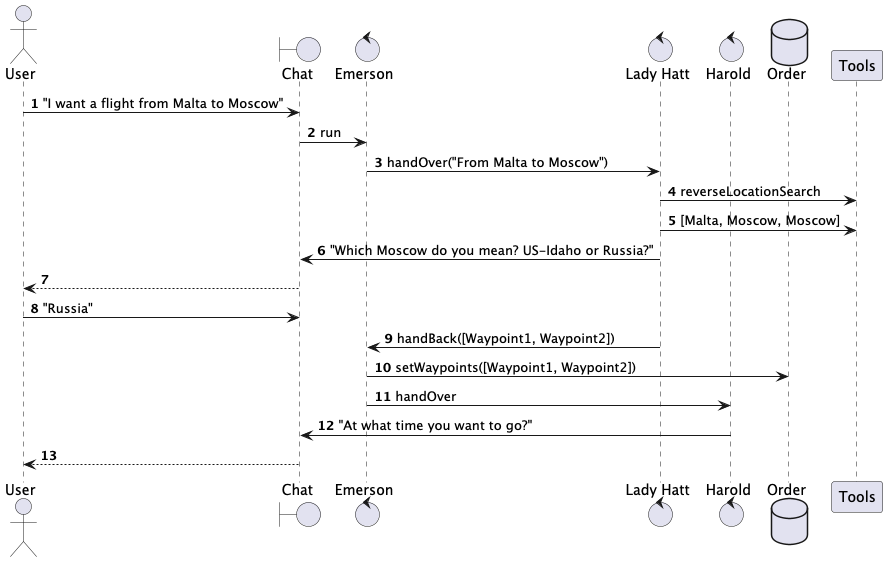

Preliminary trip planning requires the departure and destination airports and the desired flight date. To make things more fun, besides exact airports the client may provide a street address, a geo-point or a named "favourite" from their flight history: "home", "work", etc. Given the departure and destination input, the system needs to find the appropriate airports. Here Emerson, Harold, Ace and Lady Hatt work together.

When Emerson gathers the points that look like locations, he hands the chat over to Lady Hatt to validate them and perform a reverse location search. Lady Hatt asks more questions if the given locations are ambiguous and returns the digested waypoints. Emerson then updates the order with the data required for detailed trip planning and switches the client to Harold to fill in the details and find the optimal route. If required, Ace later picks up the starting and final locations to plan the airport transfer.
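The mix of accepted inputs maps naturally onto a discriminated union. The sketch below uses hypothetical types, toy data and approximate coordinates: it resolves exact airports and geo-points directly, turns a known favourite into its stored coordinates, and leaves free-text addresses for clarification, which is the kind of question Lady Hatt would put to the client. Picking the nearest airport by great-circle distance is our simplification, not the production logic.

```typescript
// Hypothetical model of trip-point inputs; types and data are illustrative assumptions.

type TripPoint =
    | { kind: "airport"; code: string }
    | { kind: "geo"; lat: number; lon: number }
    | { kind: "favourite"; label: string }
    | { kind: "address"; text: string };

interface Airport {
    readonly code: string;
    readonly lat: number;
    readonly lon: number;
}

// Toy data (approximate coordinates) standing in for the business backend
const airports: ReadonlyArray<Airport> = [
    { code: "EGLF", lat: 51.2758, lon: -0.7763 }, // Farnborough
    { code: "LFPB", lat: 48.9694, lon: 2.4414 },  // Paris Le Bourget
];

// The client's named places from flight history
const favourites: Record<string, { lat: number; lon: number }> = {
    home: { lat: 51.5072, lon: -0.1276 }, // central London
};

// Either a resolved airport or a question to ask the client
type Resolution = { airport: Airport } | { ask: string };

// Great-circle distance in km (haversine formula)
function distanceKm(aLat: number, aLon: number, bLat: number, bLon: number): number {
    const rad = (deg: number) => (deg * Math.PI) / 180;
    const sinLat = Math.sin(rad(bLat - aLat) / 2);
    const sinLon = Math.sin(rad(bLon - aLon) / 2);
    const h = sinLat * sinLat + Math.cos(rad(aLat)) * Math.cos(rad(bLat)) * sinLon * sinLon;
    return 2 * 6371 * Math.asin(Math.sqrt(h));
}

function nearestAirport(lat: number, lon: number): Airport {
    return airports.reduce((best, a) =>
        distanceKm(lat, lon, a.lat, a.lon) < distanceKm(lat, lon, best.lat, best.lon) ? a : best);
}

function resolve(point: TripPoint): Resolution {
    switch (point.kind) {
        case "airport": {
            const code = point.code;
            const found = airports.filter((a) => a.code === code)[0];
            return found ? { airport: found } : { ask: `Unknown airport ${code}` };
        }
        case "geo":
            return { airport: nearestAirport(point.lat, point.lon) };
        case "favourite": {
            const place = favourites[point.label];
            return place
                ? { airport: nearestAirport(place.lat, place.lon) }
                : { ask: `Where is "${point.label}"?` };
        }
        case "address":
            // Free text needs geocoding and may be ambiguous, so ask the client to confirm
            return { ask: `Please confirm the address "${point.text}"` };
    }
}
```

Returning a question instead of guessing mirrors the flow above: anything the code cannot resolve unambiguously goes back to the client.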

Within the flow, agents make extensive use of function tools to stay within the bounds of real business data and limit hallucinations. The order itself is also maintained through function calls, so the order data is always valid and real. As the library supports multiple AI engines, tool usage is abstracted from any particular engine and presented as a single functional interface (some parameters omitted for clarity):

```typescript
export interface ToolsDispatcher<DATA extends ChatData> {
    (
        data: DATA,
        name: string,
        args: Record<string, unknown>
    ): ToolDispatcherReturnValue<DATA>
}
```

The parameters are the following:

- data: the chat state data so far (the order, in our case)
- name: the name of the function called
- args: the function arguments

The function returns a ToolDispatcherReturnValue, which may be one or a combination of:

- `{result: "Response from your tool"}`: a tool response passed back to the AI;
- a data state of the same type as the one passed to the dispatcher (the updated chat data);
- `{error: "You have passed the wrong argument"}`: an error reported back to the AI.

Each time the dispatcher runs, the chat data is updated with the reduced value returned by the dispatcher.
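To illustrate this contract, here is a simplified, self-contained sketch. The types are local stand-ins, not the library's definitions, and each tool here returns exactly one of the three shapes (the real return value may combine them). The hypothetical `runTools` folds a sequence of calls over the order data, so each call sees the data produced by the previous one, while results and errors are collected for the AI.

```typescript
// Simplified stand-ins for the library types (assumptions, not the real definitions)
interface OrderData {
    readonly from?: string;
    readonly to?: string;
    readonly departureDate?: string;
}

type ToolReturn = OrderData | { result: string } | { error: string };
type Dispatcher = (data: OrderData, name: string, args: Record<string, unknown>) => ToolReturn;

interface ToolCall {
    readonly name: string;
    readonly args: Record<string, unknown>;
}

function isError(value: ToolReturn): value is { error: string } {
    return "error" in value;
}

function isResult(value: ToolReturn): value is { result: string } {
    return "result" in value;
}

// Example dispatcher: each tool returns new order data, a result or an error
const orderDispatcher: Dispatcher = (data, name, args) => {
    switch (name) {
        case "setRoute": {
            const { from, to } = args;
            if (typeof from !== "string" || typeof to !== "string") {
                return { error: "setRoute expects 'from' and 'to' strings" };
            }
            return { ...data, from, to };
        }
        case "setDate": {
            const date = args["date"];
            if (typeof date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(date)) {
                return { error: "setDate expects 'date' as YYYY-MM-DD" };
            }
            return { ...data, departureDate: date };
        }
        case "getOrder":
            return { result: JSON.stringify(data) };
        default:
            return { error: `Unknown tool: ${name}` };
    }
};

// Folds tool calls over the chat data; only data-returning calls change the state
function runTools(
    dispatcher: Dispatcher,
    initial: OrderData,
    calls: ReadonlyArray<ToolCall>
): { data: OrderData; responses: string[] } {
    let data = initial;
    const responses: string[] = [];
    for (const call of calls) {
        const ret = dispatcher(data, call.name, call.args);
        if (isError(ret)) {
            responses.push(`error: ${ret.error}`);
        } else if (isResult(ret)) {
            responses.push(ret.result);
        } else {
            data = ret;
            responses.push("ok");
        }
    }
    return { data, responses };
}

const run = runTools(orderDispatcher, {}, [
    { name: "setRoute", args: { from: "EGLF", to: "LFPB" } },
    { name: "setDate", args: { date: "next Friday" } },
    { name: "setDate", args: { date: "2024-07-01" } },
    { name: "getOrder", args: {} },
]);
```

Invalid input never reaches the order: the malformed date produces an error for the AI to act on, and the data stays as it was until a valid call arrives.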

The very same technique is used to switch the active agent. And since the tools are asynchronous, we can also run preliminary checks and fetch context data for the assistant.

For example, this function checks whether all the required data is set in the order and fetches the additional charter information required to complete the booking:

```typescript
export async function switchToFlightOptions(
    soFar: OrderChatData,
    chatData: ChatDispatchData<OrderChatMeta>,
    args: Record<string, unknown>,
    toolsHandover: ToolsHandOver<Meta, OrderChatMeta>,
    from: ASSISTANT
): Promise<ToolDispatcherReturnValue<OrderChatData>> {
    logger.d("Switching to charter flight options...");

    // Preparing initial message input for Harold
    const messages: Array<string> = ["Here is the data for the current order:"];

    // Check if basic flight options are set, otherwise, tell the caller that the data is incomplete
    const flightOrder = soFar.flightOrder;
    if (!areBasicFlightOptionsSet(flightOrder)) {
        return {
            error: `Basic flight options are not yet configured. Ask ${getAssistantName(FLIGHT)} to create the flight order before changing flight details.`
        };
    }

    // Print current order state for Harold
    messages.push(`Current flight order state: ${JSON.stringify(flightOrder)}`);

    // Getting charter details from the system backend for given basic options
    logger.d("Getting charter options...");
    const options = await getCharterOptions(
        flightOrder.from,
        flightOrder.to,
        flightOrder.departureDate,
        flightOrder.plane,
    );
    messages.push(`Here are available flight options: ${JSON.stringify(options)}`);

    // Switch to Harold, providing initial messages as a start
    return doHandOver(
        getChatConfig(FLIGHT_OPTIONS),
        chatData,
        getChatMeta(FLIGHT_OPTIONS).aiMessageMeta,
        messages,
        toolsHandover,
        from
    );
}
```

Such combination of config

Here are some links for you:

In conclusion, an agentic flow offers a robust and scalable approach to complex LLM tasks. A crew of specialized agents, each available as a separate npm package and testable in isolation, lets us decompose an intricate task into manageable parts. This modularity makes the workflow more flexible and lets each component be optimized and maintained independently, while the agents collaborate to fulfil the overall goal.

Moreover, a chat engine that runs natively in Firebase Cloud simplifies building chat flows for both mobile and web applications. Relying on Firebase's infrastructure, developers can create, deploy and manage chat features with real-time communication and a smooth user experience. Combined with the agentic flow, this opens up new possibilities for AI-driven applications.

We invite you to visit our GitHub repository and, if you find it useful, join the project. We are open to code contributions and welcome collaboration from developers around the world.