Image Credit: Freepik

This guide outlines best practices for creating compelling AI and data science publications on Ready Tensor. It covers selecting appropriate publication types, assessing technical content quality, structuring information effectively, and enhancing readability through proper formatting and visuals. By following these guidelines, authors can create publications that effectively showcase their work's value to the AI community.

If you are participating in a Ready Tensor publication competition, follow these steps to use this guide efficiently:

Step 1: Identify Your Project Type

→ Go to Section 2.2 - Ready Tensor Project Types

Step 2: Choose Your Presentation Style

→ Go to Sections 2.4 and 2.5

Step 3: Understand Assessment Criteria

→ Go to Appendix B

Step 4: Enhance Your Presentation

→ Go to Section 5

This quick guide helps you focus on the most essential sections for competition preparation. For comprehensive understanding, we recommend reading the entire guide when time permits.

The AI and data science community is expanding rapidly, encompassing students, practitioners, researchers, and businesses. As projects in this field multiply, their success hinges not only on the quality of work but also on effective presentation. This guide aims to help you showcase your work optimally on Ready Tensor. It covers the core tenets of good project presentation, types of publishable projects, selecting appropriate presentation styles, structuring your content, determining information depth, enhancing readability, and ensuring your project stands out. Throughout this guide, you'll learn to present your work in a way that engages and inspires your audience, maximizing its impact in the AI and data science community.

This guide is designed to help AI and data science professionals effectively showcase their projects on the Ready Tensor platform. Whether you're a seasoned researcher, an industry practitioner, or a student entering the field, presenting your work clearly and engagingly is crucial for maximizing its impact and visibility.

The purpose of this guide is to:

We cover a range of topics, including:

By following the guidelines presented here, you'll be able to create project showcases that not only effectively communicate your work's technical merit but also capture the attention of your target audience, whether they're potential collaborators, employers, or fellow researchers.

This guide is not a technical manual for conducting AI research or developing models. Instead, it focuses on the crucial skill of presenting your completed work in the most impactful way possible on the Ready Tensor platform.

An effectively presented project can:

By investing time in thoughtful presentation, you demonstrate not only technical skills but also effective communication—a critical professional asset. Remember, even groundbreaking ideas can go unnoticed if not presented well.

This section covers the core tenets of great projects, Ready Tensor project types, and how to select the right presentation approach.

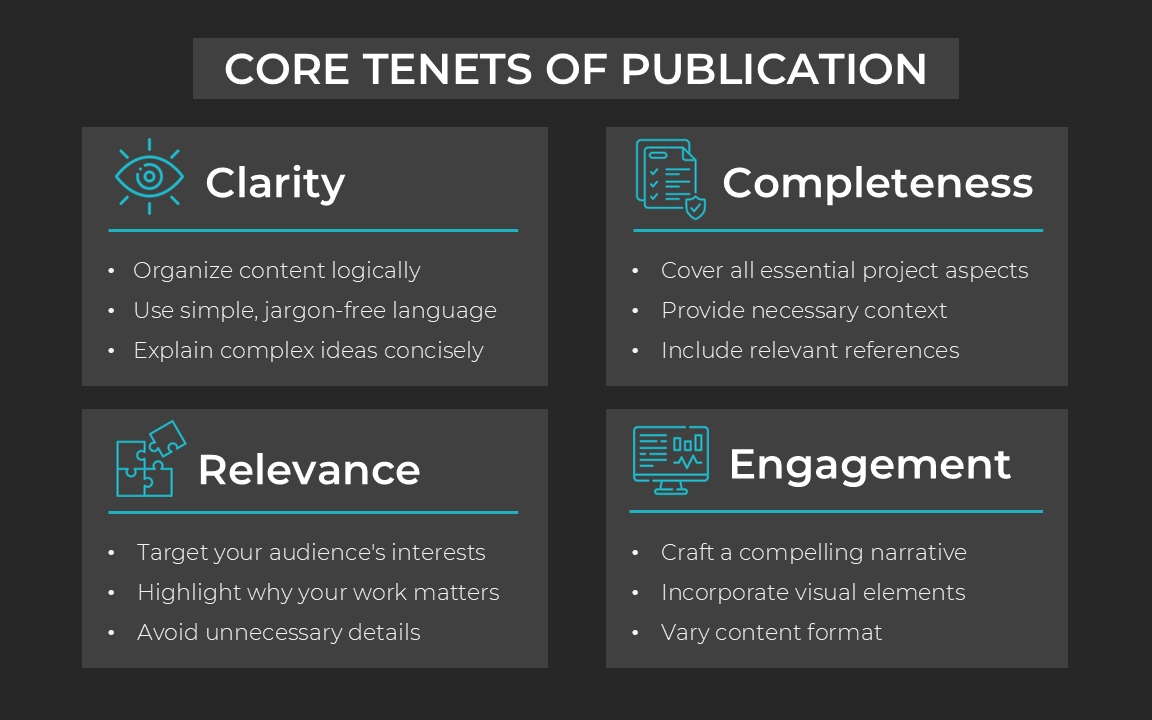

To create a publication that truly resonates with your audience, focus on these core tenets:

Let's expand on each of these tenets:

Clarity: Present your ideas in a straightforward, easily understood manner. Use simple language, organize your content logically, and explain complex concepts concisely. Clear communication ensures your audience can follow your work without getting lost in technical jargon.

Completeness: Provide comprehensive coverage of your project, including all essential aspects. Offer necessary context and include relevant references. A complete presentation gives your audience a full understanding of your work and its significance.

Relevance: Ensure your content is pertinent to your audience and aligns with current industry trends. Target your readers' interests and highlight practical applications of your work. Relevant content keeps your audience engaged and demonstrates the value of your project.

Engagement: Make your presentation captivating through varied and visually appealing content. Use visuals to illustrate key points, vary your content format, and tell a compelling story with your data. An engaging presentation holds your audience's attention and makes your work memorable.

By adhering to these core tenets, you'll create a project presentation that not only communicates your ideas effectively but also captures and maintains your audience's interest. Remember, a well-presented project is more likely to make a lasting impact in the AI and data science community.

In addition to these four key tenets, consider addressing the originality and impact of your work. While Ready Tensor doesn't strictly require originality like academic journals or conferences, highlighting what sets your project apart can increase its value to readers. Similarly, discussing the potential effects of your work on industry, academia, or society helps readers grasp its significance. These aspects, when combined with the core tenets, create a comprehensive and compelling project presentation.

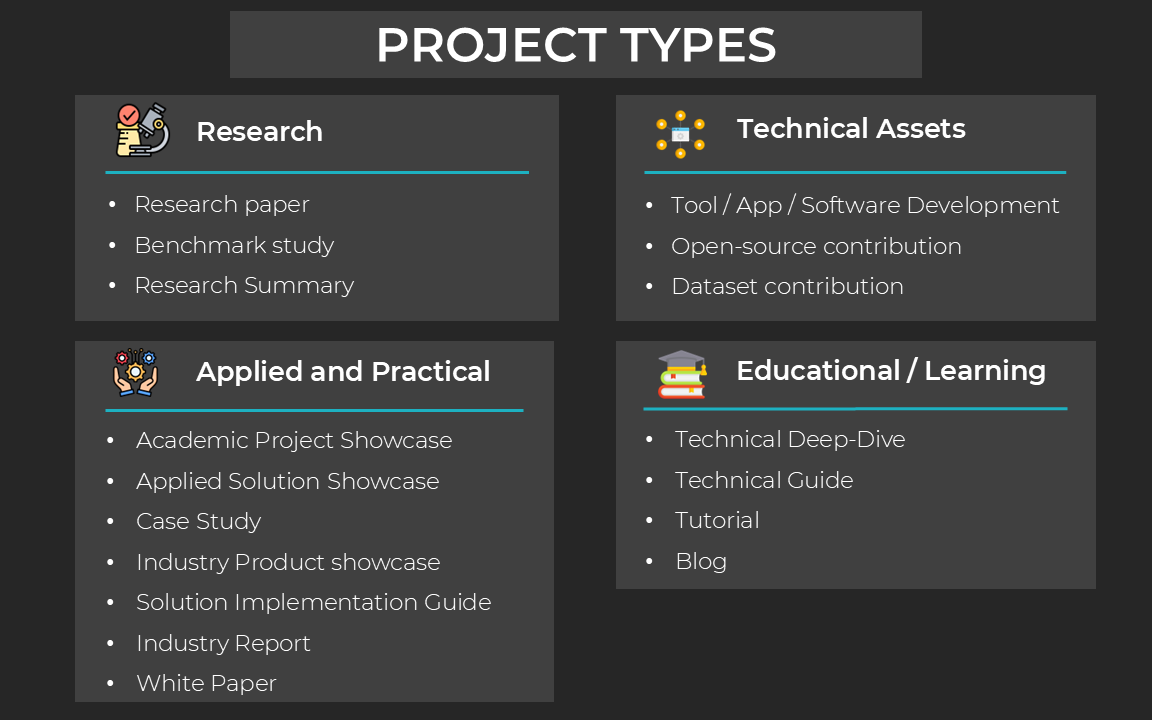

Ready Tensor supports various project types to accommodate different kinds of AI and data science work. Understanding these types and appropriate presentation styles will help you showcase your work effectively. The following chart lists the common project types:

The following table describes each project type in detail, including the publication category, publication type, and a brief description along with examples:

| Publication Category | Publication Type | Description | Examples |
|---|---|---|---|
| Research & Academic Publications | Research Paper | Original research contributions presenting novel findings, methodologies, or analyses in AI/ML. Must include comprehensive literature review and clear novel contribution to the field. Demonstrates academic rigor through systematic methodology, experimental validation, and critical analysis of results. | • "Novel Attention Mechanism for Improved Natural Language Processing" • "A New Framework for Robust Deep Learning in Adversarial Environments" |
| Research & Academic Publications | Research Summary | Accessible explanations of specific research work(s) that maintain scientific accuracy while making the content more approachable. Focuses on explaining key elements and significance of original research rather than presenting new findings. Includes clear identification of original research and simplified but accurate descriptions of methodology. | • "Understanding GPT-4: A Clear Explanation of its Architecture" • "Breaking Down the DALL-E 3 Paper: Key Innovations and Implications" |
| Research & Academic Publications | Benchmark Study | Systematic comparison and evaluation of multiple models, algorithms, or approaches. Focuses on comprehensive evaluation methodology with clear performance metrics and fair comparative analysis. Includes detailed experimental setup and reproducible testing conditions. | • "Performance Comparison of Top 5 LLMs on Medical Domain Tasks" • "Resource Utilization Study: PyTorch vs TensorFlow Implementations" |
| Educational Content | Academic Solution Showcase | Projects completed as part of coursework, self-learning, or competitions that demonstrate application of AI/ML concepts. Focuses on learning outcomes and skill development using standard datasets or common ML tasks. Documents implementation approach and key learnings. | • "Building a CNN for Plant Disease Detection: A Course Project" • "Implementing BERT for Sentiment Analysis: Kaggle Competition Entry" |
| Educational Content | Blog | Experience-based articles sharing insights, tips, best practices, or learnings about AI/ML topics. Emphasizes practical knowledge and real-world perspectives based on personal or team experience. Includes authentic insights not found in formal documentation. | • "Lessons Learned from Deploying ML Models in Production" • "5 Common Pitfalls in Training Large Language Models" |
| Educational Content | Technical Deep Dive | In-depth, pedagogical explanations of AI/ML concepts, methodologies, or best practices with theoretical foundations. Focuses on building deep technical understanding through theory rather than implementation. Includes mathematical concepts and practical implications. | • "Understanding Transformer Architecture: From Theory to Practice" • "Deep Dive into Reinforcement Learning: Mathematical Foundations" |
| Educational Content | Technical Guide | Comprehensive, practical explanations of technical topics, tools, processes, or practices in AI/ML. Focuses on practical understanding and application without deep theoretical foundations. Includes best practices, common pitfalls, and decision-making frameworks. | • "ML Model Version Control Best Practices" • "A Complete Guide to ML Project Documentation Standards" |
| Educational Content | Tutorial | Step-by-step instructional content teaching specific AI/ML concepts, techniques, or tools. Emphasizes hands-on learning with clear examples and code snippets. Includes working examples and troubleshooting tips. | • "Building a RAG System with LangChain: Step-by-Step Guide" • "Implementing YOLO Object Detection from Scratch" |
| Real-World Applications | Applied Solution Showcase | Technical implementations of AI/ML solutions solving specific real-world problems in industry contexts. Focuses on technical architecture, implementation methodology, and engineering decisions. Documents specific problem context and technical evaluations. | • "Custom RAG Implementation for Legal Document Processing" • "Building a Real-time ML Pipeline for Manufacturing QC" |
| Real-World Applications | Case Study | Analysis of AI/ML implementations in specific organizational contexts, focusing on business problem, solution approach, and impact. Documents complete journey from problem identification to solution impact. Emphasizes business context over technical details. | • "AI Transformation at XYZ Bank: From Legacy to Innovation" • "Implementing Predictive Maintenance in Aircraft Manufacturing" |
| Real-World Applications | Technical Product Showcase | Presents specific AI/ML products, platforms, or services developed for user adoption. Focuses on features, capabilities, and practical benefits rather than implementation details. Includes use cases and integration scenarios. | • "IntellAI Platform: Enterprise-grade ML Operations Suite" • "AutoML Pro: Automated Model Training and Deployment Platform" |
| Real-World Applications | Solution Implementation Guide | Step-by-step guides for implementing specific AI/ML solutions in production environments. Focuses on practical deployment steps and operational requirements. Includes infrastructure setup, security considerations, and maintenance guidance. | • "Production Deployment Guide for Enterprise RAG Systems" • "Setting Up MLOps Pipeline with Azure and GitHub Actions" |
| Real-World Applications | Industry Report | Analytical reports examining current state, trends, and impact of AI/ML adoption in specific industries. Provides data-driven insights about adoption patterns, challenges, and success factors. Includes market analysis and future outlook. | • "State of AI in Financial Services 2024" • "ML Adoption Trends in Healthcare: A Comprehensive Analysis" |
| Real-World Applications | White Paper | Strategic documents proposing approaches to industry challenges using AI/ML solutions. Focuses on problem analysis, solution possibilities, and strategic recommendations. Provides thought leadership and actionable recommendations. | • "AI-Driven Digital Transformation in Banking" • "Future of Healthcare: AI Integration Framework" |
| Technical Assets | Dataset Contribution | Creation and publication of datasets for AI/ML applications. Focuses on data quality, comprehensive documentation, and usefulness for specific ML tasks. Includes collection methodology, preprocessing steps, and usage guidelines. | • "MultiLingual Customer Service Dataset: 1M Labeled Conversations" • "Medical Image Dataset for Anomaly Detection" |
| Technical Assets | Open Source Contribution | Contributions to existing open-source AI/ML projects. Focuses on collaborative development and community value. Includes clear description of changes, motivation, and impact on the main project. | • "Optimizing Inference Speed in Hugging Face Transformers" • "Adding TPU Support to Popular Deep Learning Framework" |
| Technical Assets | Tool/App/Software | Introduction and documentation of specific software implementations utilizing AI/ML. Focuses on tool's utility, functionality, and practical usage rather than theoretical foundations. Includes comprehensive usage information and technical specifications. | • "FastEmbed: Efficient Text Embedding Library" • "MLMonitor: Real-time Model Performance Tracking Tool" |

You can choose the most suitable project type by considering these key factors:

1. Primary Focus of Your Project

Identify the main contribution or core content of your work. Examples include:

2. Objective for Publishing

Clarify what you aim to achieve by sharing your project. Common objectives include:

3. Target Audience

Determine who will benefit most from your project. Potential audiences include:

Based on these considerations, select the project type that best aligns with your work.

Remember, the project type serves as a primary guide but doesn't limit the scope of your content. Use tags to highlight additional aspects of your project that may not be captured by the primary project type.

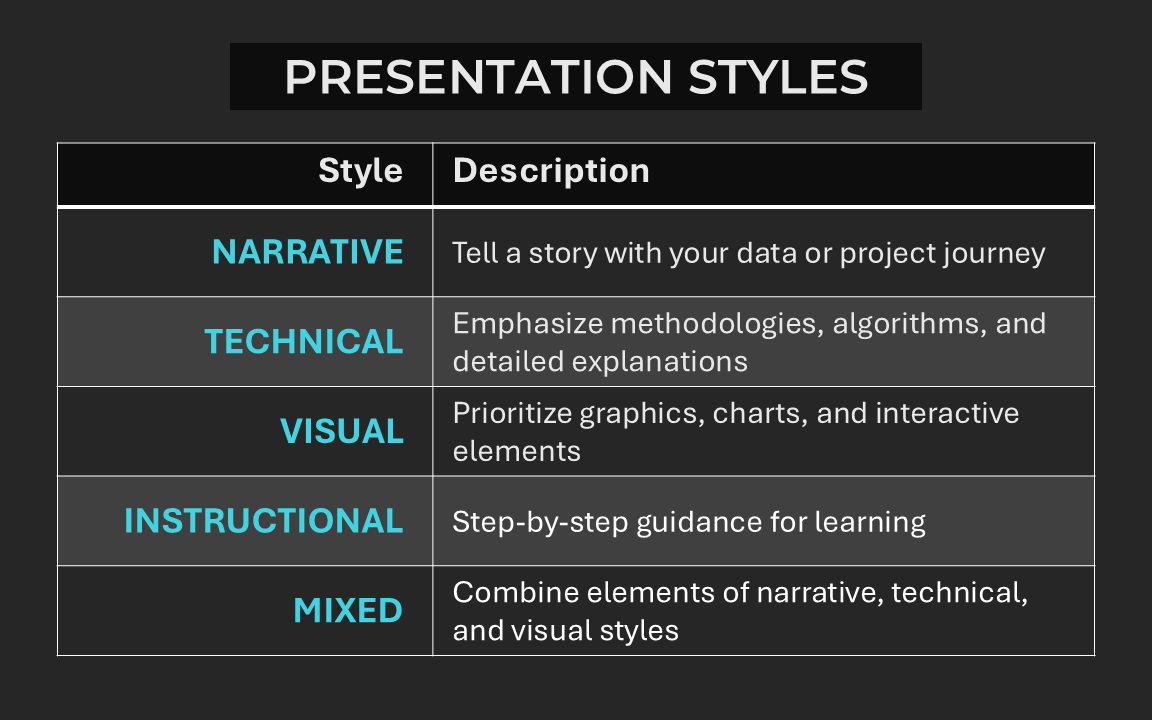

Choosing the right presentation style is crucial for effectively communicating your project's content and engaging your target audience. The following chart shows the styles available for presenting your project work.

Let's review the styles in more detail:

• Narrative: This style weaves your project into a compelling story, making it accessible and engaging. It's particularly effective for showcasing the evolution of your work, from initial challenges to final outcomes.

• Technical: Focused on precision and detail, the technical style is ideal for projects that require in-depth explanations of methodologies, algorithms, or complex concepts. It caters to audiences seeking thorough understanding.

• Visual: By prioritizing graphical representations, the visual style makes complex data and ideas more digestible. It's particularly powerful for illustrating trends, comparisons, and relationships within your project.

• Instructional: This style guides the audience through your project step-by-step. It's designed to facilitate learning and replication, making it ideal for educational content or showcasing reproducible methods.

• Mixed: Combining elements from other styles, the mixed approach offers versatility. It allows you to tailor your presentation to diverse aspects of your project and cater to varied audience preferences.

We will now explore how to match the project type and presentation style to your project effectively.

Different project types often lend themselves to certain presentation styles. While there's no one-size-fits-all approach, the following grid can guide you in selecting the most appropriate style(s) for your project:

Remember, this grid is a guide, not a strict rule. Your unique project may benefit from a creative combination of styles.

The goal is to make technical content more accessible without compromising scientific rigor. This approach helps bridge the gap between technical depth and public engagement, allowing publications to serve both expert and general audiences effectively.

The platform supports both traditional technical presentations and visually enhanced versions to accommodate different learning styles and improve content accessibility. For research summaries in particular, visual elements are highly encouraged as they help communicate complex research findings to a broader audience.

Now that you understand the foundational principles of effective project presentation, it’s time to bring your work to life. This section will guide you through crafting a well-structured, visually appealing, and engaging publication that maximizes the impact of your AI/ML project on Ready Tensor.

Metadata plays a critical role in making your project discoverable and understandable. Here’s how to ensure your project’s metadata is clear and compelling:

Choosing a Compelling Title:

Your title should be concise yet descriptive, capturing the core contribution of your work. Aim for a title that sparks curiosity while clearly reflecting the project’s focus.

Selecting Appropriate Tags:

Tags help users find your project. Choose tags that accurately represent the project’s content, methods, and application areas. Prioritize terms that are both relevant and commonly searched within your domain.

Picking the Right License:

Select an appropriate license from the dropdown to specify how others can use your work. Consider licenses such as MIT or GPL based on your goals, and make sure the license you choose aligns with your project’s intended use.

Authorship:

Clearly list all contributors, recognizing those who played significant roles in the project. Include affiliations where relevant to establish credibility and traceability of contributions.

Abstract or TL;DR:

Provide a concise summary of your project, focusing on its key contributions, methodology, and impact. Keep it brief but informative, as this is often the first thing readers will see to gauge the relevance of your work. Place this at the beginning of your publication to provide a quick overview.

This section is crucial in setting the stage for how your project will be perceived, so invest time to make it both informative and engaging.

Each project type has a standard structure that helps readers navigate your content. Below are typical sections to include based on the type of project you are publishing. Note that the abstract or TL;DR is mandatory and is part of the project metadata.

The technical quality of an AI/ML publication depends heavily on its type. A research paper requires comprehensive methodology and experimental validation, while a tutorial focuses on clear step-by-step instructions and practical implementation. Understanding these differences is crucial for creating high-quality content that meets readers' expectations.

Understanding Assessment Criteria

Refer to the comprehensive bank of assessment criteria specifically for AI/ML publications (detailed in Appendix A). These criteria cover various aspects including:

Matching Criteria to Publication Types

Different publication types require different combinations of these criteria. For example:

A complete mapping of criteria to publication types is provided in Appendix B, serving as a checklist for authors. When writing your publication, refer to the criteria specific to your chosen type to ensure you're meeting all necessary requirements.

Using the Assessment Framework

To create high-quality technical content:

Identify Your Publication Type

Review Relevant Criteria

Assess Your Content

Iterate and Improve

Remember, these criteria serve as guidelines rather than rigid rules. The goal is to ensure your publication effectively serves its intended purpose and audience. For detailed criteria descriptions and publication-specific requirements, refer to Appendices A and B.
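The four steps above can be treated as a simple self-assessment loop. The sketch below is only an illustration of that loop: the `ILLUSTRATIVE_CRITERIA` mapping and the `unmet_criteria` helper are hypothetical names, and the criteria listed for each type are example picks from Appendix A, not the official mapping from Appendix B.

```python
# Hypothetical, partial mapping for illustration only.
# The authoritative criteria per publication type are listed in Appendix B.
ILLUSTRATIVE_CRITERIA = {
    "Research Paper": [
        "Methodology Explanation",
        "Experimental Protocol",
        "Validation Strategy",
    ],
    "Tutorial": [
        "Step-by-Step Guidance Quality",
        "Implementation Details",
    ],
}


def unmet_criteria(publication_type, self_assessment):
    """Return the criteria for this type that are not yet marked as satisfied.

    self_assessment maps a criterion name to True/False; criteria missing
    from the mapping are treated as not yet satisfied.
    """
    required = ILLUSTRATIVE_CRITERIA[publication_type]
    return [name for name in required if not self_assessment.get(name, False)]


# Example: a tutorial draft where only the step-by-step guidance is done.
draft_status = {"Step-by-Step Guidance Quality": True}
remaining = unmet_criteria("Tutorial", draft_status)
print("Still to address:", remaining)
```

Re-running the check after each revision mirrors the "Iterate and Improve" step: the list shrinks as the draft matures.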

Quality vs. Quantity

Meeting the assessment criteria isn't about increasing length or adding unnecessary complexity. Instead, focus on:

With these technical content fundamentals in place, we can move on to enhancing readability and appeal, which we'll cover in the next section.

Creating an engaging publication requires more than just presenting your findings. To capture and maintain your audience's attention, it's essential to structure your content in a visually appealing and easy-to-read format. The following guidelines will help you enhance the readability and overall impact of your publication, making it accessible and compelling to a wide audience.

The title is the first element readers see, so it should be concise and compelling. Aim to communicate the essence of your project in a way that piques curiosity and invites further exploration. Avoid overly technical jargon in the title, but ensure it's descriptive enough to reflect the project's main focus.

A well-chosen banner or hero image helps set the tone for your publication. It should be relevant to your project and visually engaging, drawing attention while providing context. Use high-quality images that align with your content’s theme—whether it's a dataset visualization, a model architecture diagram, or an industry-related image.

Headers and subheaders break up your content into digestible sections, improving readability and making it easier for readers to navigate your publication. Use a consistent hierarchy (e.g., h2 for primary sections, h3 for subsections) to create a clear structure. This also helps readers scan for specific information quickly.
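If you draft in Markdown, a consistent hierarchy can be checked mechanically before publishing. The following is a minimal sketch, assuming ATX-style headings (`##`, `###`, and so on); the function name `find_heading_jumps` is an illustrative choice and not part of any Ready Tensor tooling.

```python
import re

# Matches ATX-style Markdown headings such as "## Results".
HEADING_RE = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")
FENCE = "`" * 3  # code-fence marker; lines inside fences are ignored


def find_heading_jumps(markdown_text):
    """Return (line_number, title, previous_level, level) for every heading
    that is more than one level deeper than the heading before it."""
    problems = []
    previous_level = None
    in_fence = False
    for line_number, line in enumerate(markdown_text.splitlines(), start=1):
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence
            continue
        if in_fence:
            continue
        match = HEADING_RE.match(line)
        if not match:
            continue
        level = len(match.group(1))
        if previous_level is not None and level > previous_level + 1:
            problems.append((line_number, match.group(2), previous_level, level))
        previous_level = level
    return problems


sample = """## Methodology
### Data Preparation
## Results
#### Ablation Details
"""
for line_number, title, previous_level, level in find_heading_jumps(sample):
    print(f"line {line_number}: '{title}' jumps from h{previous_level} to h{level}")
```

In the sample, the last heading skips from h2 straight to h4, which is the kind of gap that makes a publication harder to scan.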

Incorporate visuals such as images, diagrams, and videos to complement your text. Multimedia elements can illustrate complex concepts, making your publication more engaging and accessible. Use visuals to break up long sections of text and help readers retain information.

Large blocks of text can overwhelm readers. Break up paragraphs with images, bullet points, or callouts. Vary sentence length to keep your content dynamic and engaging. Consider adding whitespace between sections to create breathing room and guide the reader’s eye.

Callouts and info boxes help emphasize important points or provide additional context. Use these selectively to highlight key insights or offer helpful tips:

Bullet points and numbered lists are useful for organizing key ideas and steps. However, overusing them can make your publication feel fragmented. Use lists strategically to break down processes or summarize important points, but balance them with regular paragraphs to maintain flow.

Charts, graphs, and tables are essential for presenting data and results clearly. Ensure they are labeled appropriately, with clear legends and titles. Use them to complement your text, not replace it. Highlight important trends or insights within the accompanying text to help readers understand their significance.

While it’s important to share your methodology, avoid overwhelming readers with large blocks of code. Instead, include code snippets that demonstrate key processes or algorithms, and link to your full codebase via a repository.

Below is an example of a useful code snippet to include. It demonstrates a custom loss function that was used in a project:

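    import torch
    import torch.nn.functional as F

    def loss_function(recon_x, x, mu, logvar):
        # Reconstruction term: binary cross-entropy summed over all elements
        BCE = F.binary_cross_entropy(recon_x, x, reduction='sum')
        # KL divergence between N(mu, exp(logvar)) and a standard normal prior
        KLD = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
        return BCE + KLD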

Don’t bury your most important insights in lengthy sections. Use bold text, bullet points, or callouts to highlight key findings. Ensure that readers can quickly identify the main contributions or conclusions of your work.

Consistent use of colors in charts and graphs helps readers follow trends and comparisons. Pick a color scheme that is visually appealing, easy to read, and, if possible, consistent with your publication’s theme. Avoid overly bright or clashing colors.

Make your publication accessible to all readers by adopting basic accessibility principles. Use alt text for images, choose legible fonts, and ensure there is sufficient color contrast in your charts. Accessibility improves inclusivity and helps reach a broader audience.
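"Sufficient color contrast" can be checked numerically. The sketch below follows the relative-luminance and contrast-ratio definitions from WCAG 2.x; the function names are illustrative, and the thresholds in the comments (4.5:1 for normal text, 3:1 for large text and graphical objects at level AA) come from WCAG rather than from Ready Tensor.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear-light value (WCAG 2.x)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """WCAG contrast ratio, from 1 (no contrast) up to 21 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


# WCAG AA asks for at least 4.5:1 for normal text and 3:1 for large text
# and graphical objects such as chart lines.
for color in ("#000000", "#767676", "#777777"):
    ratio = contrast_ratio(color, "#FFFFFF")
    print(f"{color} on white: {ratio:.2f}:1, passes AA text: {ratio >= 4.5}")
```

Running a chart palette through a check like this before exporting figures is a cheap way to catch label or line colors that will be hard to read.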

When including images in your Ready Tensor publication, it’s essential to maintain proper aspect ratios and image sizes to ensure your visuals are clear, engaging, and enhance the overall readability of your project.

Here are some best practices for handling image dimensions:

Aspect Ratio

The aspect ratio of an image is the proportional relationship between its width and height. Common aspect ratios include:

Maintaining a consistent aspect ratio across images in your publication can create a professional and uniform look. Distorted images (those stretched or compressed) can detract from the quality of your presentation, so it’s important to ensure that any resizing preserves the original aspect ratio.
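To resize without distortion, derive one dimension from the other rather than setting both by hand. The following is a small sketch using only the Python standard library; the helper names `aspect_ratio` and `fit_to_width` are illustrative, and the pixel sizes are arbitrary examples.

```python
from fractions import Fraction


def aspect_ratio(width, height):
    """Reduce pixel dimensions to their simplest width:height ratio."""
    ratio = Fraction(width, height)
    return ratio.numerator, ratio.denominator


def fit_to_width(width, height, target_width):
    """Return (target_width, new_height) that preserves the original ratio.

    The height is rounded to the nearest whole pixel.
    """
    target_height = round(target_width * height / width)
    return target_width, target_height


original = (1920, 1080)
print("ratio:", aspect_ratio(*original))
print("resized:", fit_to_width(*original, target_width=800))
```

Most image libraries offer an equivalent "scale to width" option; the point is that only one dimension is chosen freely and the other follows from the ratio.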

By following these guidelines, you ensure that your images not only look good but also contribute effectively to the storytelling in your project, making it both visually appealing and easy to comprehend for your audience.

In this article, we explored the key practices for making your AI and data science projects stand out on Ready Tensor. From structuring your project with clarity to focusing on concepts and results over code, the way you present your work is as important as the technical accomplishments themselves. By utilizing headers, bullet points, and visual elements like graphs and tables, you ensure that your audience can easily follow along, understand your approach, and appreciate your outcomes.

Your ability to clearly communicate your project's purpose, methodology, and findings not only enhances its value but also sets you apart in a crowded space. The goal is not just to showcase your skills but to engage your readers, foster collaboration, and open doors to future opportunities.

As you wrap up each project, take a moment to reflect on its impact and consider any potential improvements or next steps. With these best practices in mind, your work will not only be technically sound but also compelling and impactful to a wider audience.

Choose a License: A website that explains different open-source licenses and helps users decide which one to pick.

Unsplash: A site for royalty-free images.

Freepik: A site for royalty-free images.

Web Content Accessibility Guidelines (WCAG) Overview: Guidelines for making your content accessible on the web.

The following is the comprehensive list of criteria to assess the quality of technical content for AI/ML publications of different types.

| Criterion Name | Description |

|---|---|

| Clear Purpose and Objectives | Evaluates whether the publication explicitly states its core purpose within the first paragraph or two. |

| Specific Objectives | Assesses whether the publication lists specific and concrete objectives that will be addressed. |

| Intended Audience/Use Case | Evaluates whether the publication clearly identifies who it's for and how it benefits them. |

| Target Audience Definition | Evaluates how well the publication identifies and describes the target audience for the tool, software package, dataset, or product, including user profiles, domains, and use cases. |

| Specific Research Questions/Objectives | Assesses whether the publication breaks down its purpose into specific, measurable research questions or objectives that guide the investigation. |

| Testability/Verifiability | Assesses whether the research questions and hypotheses can be tested or verified using the proposed approach. Research hypothesis must be falsifiable. |

| Problem Definition | Evaluates how well the publication defines and articulates the real-world problem that motivated the AI/ML solution. This includes the problem's scope, impact, and relevance to stakeholders. |

| Literature Review Coverage & Currency | Assesses the comprehensiveness and timeliness of literature review of similar works. |

| Literature Review Critical Analysis | Evaluates how well the publication analyzes and synthesizes existing work in literature. |

| Citation Relevance | Evaluates whether the cited works are relevant and appropriately support the research context. |

| Current State Gap Identification | Assesses whether the publication clearly identifies gaps in existing work. |

| Context Establishment | Evaluates how well the publication establishes context for the topic covered. |

| Methodology Explanation | Evaluates whether the technical methodology is explained clearly and comprehensively, allowing readers to understand the technical approach. |

| Step-by-Step Guidance Quality | Evaluates how effectively the publication breaks down complex procedures into clear, logical, and sequential steps that guide readers through the process. The steps should build upon each other in a coherent progression, with each step providing sufficient detail for completion before moving to the next. |

| Assumptions Stated | Evaluates whether technical assumptions are clearly stated and explained. |

| Solution Approach and Design Decisions | Evaluates whether the overall solution approach and specific design decisions are appropriate and well-justified. This includes explanation of methodology choice, architectural decisions, and implementation choices. Common/standard approaches may need less justification than novel or unconventional choices. |

| Experimental Protocol | Assesses whether the publication outlines a clear, high-level approach for conducting the study. |

| Study Scope & Boundaries | Evaluates whether the publication clearly defines the boundaries, assumptions, and limitations of the study. |

| Evaluation Framework | Assesses whether the publication defines a clear framework for evaluating results. |

| Validation Strategy | Evaluates whether the publication outlines a clear approach to validating results. |

| Dataset Sources & Collection | Evaluates whether dataset(s) used in the study are properly documented. For existing datasets, proper citation and sourcing is required for each. For new datasets, the collection methodology must be described. For benchmark studies or comparative analyses, all datasets must be properly documented. |

| Dataset Description | Assesses whether dataset(s) are comprehensively described, including their characteristics, structure, content, and rationale for selection. For multiple datasets, comparability and relationships should be clear. |

| Data Requirements Specification | For implementations requiring data: evaluates whether the publication clearly specifies the data requirements needed. |

| Dataset Selection or Creation | Evaluates whether the rationale for dataset selection is explained, or for new datasets, whether the creation methodology is properly documented. |

| Datset procesing Methodology | Evaluates whether data processing steps are clearly documented and justified. This includes any preprocessing, missing data handling, anomalies handling, and other data clean-up processing steps. |

| Basic Dataset Stats | Evaluates whether the publication provides clear documentation of fundamental dataset properties |

| Implementation Details | Assesses whether sufficient implementation details are provided with enough clarity. Focuses on HOW the methodology was implemented. |

| Parameters & Configuration | Evaluates whether parameter choices and configuration settings are clearly specified and justified where non-standard. Includes model hyperparameters, system configurations, and any tuning methodology used. |

| Experimental Environment | Evaluates whether the computational environment and resources used for the work are clearly specified when relevant. |

| Tools, Frameworks, & Services | Documents the key tools, frameworks, 3rd party services used in the implementation when relevant. |

| Implementation Considerations | Evaluates coverage of practical aspects of implementing or applying the model, concept, app, or tool described in the publication. |

| Deployment Considerations | Evaluates whether the publication adequately discusses deployment requirements, considerations, and challenges for implementing the solution in a production environment. This includes either actual deployment details if deployed, or thorough analysis of deployment requirements if proposed. |

| Monitoring and Maintenance Considerations | Evaluates whether the publication discusses how to monitor the solution's performance and maintain its effectiveness over time. This includes monitoring strategies, maintenance requirements, and operational considerations for keeping the solution running optimally. |

| Performance Metrics Analysis | Evaluates whether appropriate performance metrics are used and properly analyzed to demonstrate the success or effectiveness of the work. |

| Comparative Analysis | Assesses whether results are properly compared against relevant baselines or state-of-the-art alternatives. The comparison should cover at least four or five alternatives. |

| Statistical Analysis | Evaluates whether appropriate statistical methods are used to validate results. |

| Key Results | Evaluates whether the main results and outcomes of the research are clearly presented in an understandable way. |

| Results Interpretation | Assesses whether results are properly interpreted and their implications explained. |

| Solution Impact Assessment | Evaluates how well the publication quantifies and demonstrates the real-world impact and value created by implementing the AI/ML solution. This includes measuring improvements in organizational metrics (cost savings, efficiency gains, productivity), user-centered metrics (satisfaction, adoption, time saved), and where applicable, broader impacts (environmental, societal benefits). The focus is on concrete outcomes and value creation, not technical performance measures. |

| Constraints, Boundaries, and Limitations | Evaluates whether the publication clearly defines when and where the work is applicable (boundaries), what constrains its effectiveness (constraints), and what its shortcomings are (limitations). |

| Summary of Key Findings | Evaluates whether the main findings and contributions of the work are clearly summarized and their significance explained. |

| Significance and Implications of Work | Assesses whether the broader significance and implications of the work are properly discussed. |

| Features and Benefits Analysis | Evaluates the clarity and completeness of feature descriptions and their corresponding benefits to users. |

| Competitive Differentiation | Evaluates how effectively the publication demonstrates the solution's unique value proposition and advantages compared to alternatives. |

| Future Directions | Evaluates whether meaningful future work and research directions are identified. |

| Originality of Work | Evaluates whether the work presents an original contribution, meaning work that hasn't been done before. This includes novel analyses, comprehensive comparisons, new methodologies, or new implementations. |

| Innovation in Methods/Approaches | Evaluates whether the authors created new methods, algorithms, or applications. This specifically looks for technical innovation, not just original analysis. |

| Advancement of Knowledge or Practice | Evaluates how the work advances knowledge or practice, whether through original analysis or innovative methods or implementation. |

| Code & Dependencies | Evaluates whether code is available and dependencies are properly documented for reproduction. |

| Data Source and Collection | Evaluates whether the publication clearly describes where the data comes from and the strategy for data collection or generation. This criterion only applies if the publication involved sourcing and creation of the data by authors. |

| Data Inclusion and Filtering Criteria | Assesses whether the publication defines clear criteria for what data is included or excluded from the dataset. |

| Dataset Creation Quality Control Methodology | Evaluates the systematic approach to ensuring data quality during collection, generation, and processing. |

| Dataset Bias and Representation Consideration | Assesses whether potential biases in data collection/generation are identified and addressed. For synthetic or naturally bias-free datasets, clear documentation of why bias is not a concern is sufficient. |

| Statistical Characteristics | Assesses whether the publication provides comprehensive statistical information about the dataset. |

| Dataset Quality Metrics and Indicators | Evaluates whether the publication provides clear metrics and indicators of data quality. |

| State-of-the-Art Comparisons | Evaluates whether the study includes relevant state-of-the-art methods from recent literature for comparison. The study should compare against at least four or five other leading methods. |

| Benchmarking Method Selection Justification | Evaluates whether the choice of methods, models, or tools for comparison is well-justified and reasonable for the study's objectives. |

| Fair Comparison Setup | Assesses whether all methods are compared under fair and consistent conditions. |

| Benchmarking Evaluation Rigor | Evaluates whether the comparison uses appropriate metrics and statistical analysis. |

| Purpose-Aligned Topic Coverage | Evaluates whether the publication covers all topics and concepts necessary to fulfill its stated purpose, goals, or learning objectives. Coverage should be complete relative to what was promised, rather than exhaustive of the general topic area. |

| Clear Prerequisites and Requirements | Evaluates whether the publication clearly states what readers need to have (tools, environment, software) or need to know (technical knowledge, concepts) before they can effectively use or understand the content. Most relevant for educational content like tutorials, guides, and technical implementations, but can also apply to technical deep dives and implementation reports. |

| Appropriate Technical Depth | Assesses whether the technical content matches the expected depth for the intended audience and publication type. For technical audiences, evaluates if it provides sufficient depth. For general audiences, evaluates if it maintains accessibility while being technically sound. |

| Code Usage Appropriateness | Assesses whether code examples, when present, are used judiciously and add value to the explanation. If the publication type or topic doesn't require code examples, then absence of code is appropriate and should score positively. |

| Code Clarity and Presentation | When code examples are present, evaluates whether they are well-written, properly formatted and integrated with the surrounding content. If the publication contains no code examples, this criterion is considered satisfied by default. |

| Code Explanation Quality | When code snippets are present, evaluates how well they are explained and contextualized within the content. If the publication contains no code snippets, this criterion is considered satisfied by default. |

| Real-World Applications | Assesses whether the publication clearly explains the practical significance, real-world relevance, and potential applications of the topic. This shows readers why the content matters and how it can be applied in practice. |

| Limitations and Trade-offs | Assesses whether the content discusses practical limitations, trade-offs, and potential pitfalls in real-world applications. |

| Supporting Examples | Evaluates whether educational content (tutorials, guides, blogs, technical deep dives) includes concrete and contemporary examples to illustrate concepts and enhance understanding. Examples should help readers better grasp the material through practical demonstration. |

| Industry Insights | Evaluates inclusion of industry trends, statistics, or patterns observed in practice. |

| Success/Failure Stories | Assesses whether specific success or failure stories are shared to illustrate outcomes and lessons learned. |

| Content Accessibility | Evaluates how well technical concepts are explained for a broader audience while maintaining scientific accuracy. |

| Technical Progression | Assesses how well the content builds technical understanding progressively, introducing concepts in a logical sequence that supports comprehension. |

| Scientific Clarity | Evaluates whether scientific accuracy is maintained while presenting content in an accessible way. |

| Source Credibility | Evaluates whether the publication properly references and cites its sources, clearly identifies the origin of data/code/tools used, and provides sufficient version/environment information for reproducibility. This helps readers validate claims, trace information to original sources, and implement solutions reliably. |

| Reader Next Steps | Evaluates whether the publication provides clear guidance on what readers can do after consuming the content. This includes suggested learning paths, topics to explore, further reading materials, skills to practice, or actions to take. The focus is on helping readers understand their potential next steps. |

| Uncommon Insights | Evaluates whether the publication provides valuable insights that are either unique (from personal experience/expertise) or uncommon (not easily found in standard sources). Looks for expert analysis, real implementation experiences, or carefully curated information that is valuable but not widely available. |

| Technical Asset Access Links | Evaluates whether the publication provides links to access the technical asset (tool, dataset, model, etc.), such as repositories, registries, or download locations. |

| Installation and Usage Instructions | Evaluates whether the publication provides clear instructions for installing and using the tool, either directly in the publication or through explicit references to external documentation. The key is that a reader should be able to quickly understand how to get started with the tool. |

| Performance Characteristics and Requirements | Evaluates whether the publication documents the tool's performance characteristics. |

| Maintenance and Support Status | Evaluates whether the publication clearly communicates the maintenance and support status of the technical asset (tool, dataset, model, etc.). |

| Access and Availability Status | Evaluates whether the publication clearly states how the technical asset can be accessed and used by others. |

| License and Usage Rights of the Technical Asset | Evaluates whether the publication clearly communicates the licensing terms and usage rights of the technical asset itself (not the publication). This includes software licenses for tools, data licenses for datasets, model licenses for AI models, etc. |

| Contact Information of Asset Creators | Evaluates whether the publication explains how to contact the creators or maintainers of the technical asset or how to get support, either directly or through clear references to external channels. |

| Publication Type | Criterion Name |

|---|---|

| Research Paper | Clear Purpose and Objectives |

| Research Paper | Intended Audience/Use Case |

| Research Paper | Specific Research Questions/Objectives |

| Research Paper | Testability/Verifiability |

| Research Paper | Literature Review Coverage & Currency |

| Research Paper | Literature Review Critical Analysis |

| Research Paper | Citation Relevance |

| Research Paper | Current State Gap Identification |

| Research Paper | Context Establishment |

| Research Paper | Methodology Explanation |

| Research Paper | Assumptions Stated |

| Research Paper | Solution Approach and Design Decisions |

| Research Paper | Experimental Protocol |

| Research Paper | Study Scope & Boundaries |

| Research Paper | Evaluation Framework |

| Research Paper | Validation Strategy |

| Research Paper | Dataset Sources & Collection |

| Research Paper | Dataset Description |

| Research Paper | Dataset Selection or Creation |

| Research Paper | Dataset Processing Methodology |

| Research Paper | Basic Dataset Stats |

| Research Paper | Implementation Details |

| Research Paper | Parameters & Configuration |

| Research Paper | Experimental Environment |

| Research Paper | Tools, Frameworks, & Services |

| Research Paper | Implementation Considerations |

| Research Paper | Performance Metrics Analysis |

| Research Paper | Comparative Analysis |

| Research Paper | Statistical Analysis |

| Research Paper | Key Results |

| Research Paper | Results Interpretation |

| Research Paper | Constraints, Boundaries, and Limitations |

| Research Paper | Key Findings |

| Research Paper | Significance and Implications of Work |

| Research Paper | Future Directions |

| Research Paper | Originality of Work |

| Research Paper | Innovation in Methods/Approaches |

| Research Paper | Advancement of Knowledge or Practice |

| Research Paper | Code & Dependencies |

| Research Paper | Code Usage Appropriateness |

| Research Paper | Code Clarity and Presentation |

| Publication Type | Criterion Name |

|---|---|

| Benchmark Study | Clear Purpose and Objectives |

| Benchmark Study | Intended Audience/Use Case |

| Benchmark Study | Specific Research Questions/Objectives |

| Benchmark Study | Testability/Verifiability |

| Benchmark Study | Literature Review Coverage & Currency |

| Benchmark Study | Literature Review Critical Analysis |

| Benchmark Study | Citation Relevance |

| Benchmark Study | Current State Gap Identification |

| Benchmark Study | Context Establishment |

| Benchmark Study | Methodology Explanation |

| Benchmark Study | Assumptions Stated |

| Benchmark Study | Solution Approach and Design Decisions |

| Benchmark Study | Experimental Protocol |

| Benchmark Study | Study Scope & Boundaries |

| Benchmark Study | Evaluation Framework |

| Benchmark Study | Validation Strategy |

| Benchmark Study | Dataset Sources & Collection |

| Benchmark Study | Dataset Description |

| Benchmark Study | Dataset Selection or Creation |

| Benchmark Study | Dataset Processing Methodology |

| Benchmark Study | Basic Dataset Stats |

| Benchmark Study | Implementation Details |

| Benchmark Study | Parameters & Configuration |

| Benchmark Study | Experimental Environment |

| Benchmark Study | Tools, Frameworks, & Services |

| Benchmark Study | Implementation Considerations |

| Benchmark Study | Performance Metrics Analysis |

| Benchmark Study | Comparative Analysis |

| Benchmark Study | Statistical Analysis |

| Benchmark Study | Key Results |

| Benchmark Study | Results Interpretation |

| Benchmark Study | Constraints, Boundaries, and Limitations |

| Benchmark Study | Key Findings |

| Benchmark Study | Significance and Implications of Work |

| Benchmark Study | Future Directions |

| Benchmark Study | Originality of Work |

| Benchmark Study | Innovation in Methods/Approaches |

| Benchmark Study | Advancement of Knowledge or Practice |

| Benchmark Study | Code & Dependencies |

| Benchmark Study | Benchmarking Method Selection Justification |

| Benchmark Study | Fair Comparison Setup |

| Benchmark Study | Benchmarking Evaluation Rigor |

| Publication Type | Criterion Name |

|---|---|

| Research Summary | Clear Purpose and Objectives |

| Research Summary | Specific Objectives |

| Research Summary | Intended Audience/Use Case |

| Research Summary | Specific Research Questions/Objectives |

| Research Summary | Current State Gap Identification |

| Research Summary | Context Establishment |

| Research Summary | Methodology Explanation |

| Research Summary | Solution Approach and Design Decisions |

| Research Summary | Experimental Protocol |

| Research Summary | Evaluation Framework |

| Research Summary | Dataset Sources & Collection |

| Research Summary | Dataset Description |

| Research Summary | Performance Metrics Analysis |

| Research Summary | Comparative Analysis |

| Research Summary | Key Results |

| Research Summary | Results Interpretation |

| Research Summary | Constraints, Boundaries, and Limitations |

| Research Summary | Key Findings |

| Research Summary | Significance and Implications of Work |

| Research Summary | Reader Next Steps |

| Research Summary | Originality of Work |

| Research Summary | Innovation in Methods/Approaches |

| Research Summary | Advancement of Knowledge or Practice |

| Research Summary | Industry Insights |

| Research Summary | Content Accessibility |

| Research Summary | Technical Progression |

| Research Summary | Scientific Clarity |

| Research Summary | Section Structure |

| Publication Type | Criterion Name |

|---|---|

| Tool / App / Software | Clear Purpose and Objectives |

| Tool / App / Software | Specific Objectives |

| Tool / App / Software | Intended Audience/Use Case |

| Tool / App / Software | Clear Prerequisites and Requirements |

| Tool / App / Software | Current State Gap Identification |

| Tool / App / Software | Context Establishment |

| Tool / App / Software | Features and Benefits Analysis |

| Tool / App / Software | Tools, Frameworks, & Services |

| Tool / App / Software | Implementation Considerations |

| Tool / App / Software | Constraints, Boundaries, and Limitations |

| Tool / App / Software | Significance and Implications of Work |

| Tool / App / Software | Originality of Work |

| Tool / App / Software | Innovation in Methods/Approaches |

| Tool / App / Software | Advancement of Knowledge or Practice |

| Tool / App / Software | Competitive Differentiation |

| Tool / App / Software | Real-World Applications |

| Tool / App / Software | Source Credibility |

| Tool / App / Software | Technical Asset Access Links |

| Tool / App / Software | Installation and Usage Instructions |

| Tool / App / Software | Performance Characteristics and Requirements |

| Tool / App / Software | Maintenance and Support Status |

| Tool / App / Software | Access and Availability Status |

| Tool / App / Software | License and Usage Rights of the Technical Asset |

| Tool / App / Software | Contact Information of Asset Creators |

| Publication Type | Criterion Name |

|---|---|

| Dataset Contribution | Clear Purpose and Objectives |

| Dataset Contribution | Specific Objectives |

| Dataset Contribution | Intended Audience/Use Case |

| Dataset Contribution | Current State Gap Identification |

| Dataset Contribution | Context Establishment |

| Dataset Contribution | Dataset Processing Methodology |

| Dataset Contribution | Basic Dataset Stats |

| Dataset Contribution | Implementation Details |

| Dataset Contribution | Tools, Frameworks, & Services |

| Dataset Contribution | Constraints, Boundaries, and Limitations |

| Dataset Contribution | Key Findings |

| Dataset Contribution | Significance and Implications of Work |

| Dataset Contribution | Future Directions |

| Dataset Contribution | Originality of Work |

| Dataset Contribution | Innovation in Methods/Approaches |

| Dataset Contribution | Advancement of Knowledge or Practice |

| Dataset Contribution | Data Source and Collection |

| Dataset Contribution | Data Inclusion and Filtering Criteria |

| Dataset Contribution | Dataset Creation Quality Control Methodology |

| Dataset Contribution | Dataset Bias and Representation Consideration |

| Dataset Contribution | Statistical Characteristics |

| Dataset Contribution | Dataset Quality Metrics and Indicators |

| Dataset Contribution | Source Credibility |

| Dataset Contribution | Technical Asset Access Links |

| Dataset Contribution | Maintenance and Support Status |

| Dataset Contribution | Access and Availability Status |

| Dataset Contribution | License and Usage Rights of the Technical Asset |

| Dataset Contribution | Contact Information of Asset Creators |

| Dataset Contribution | Section Structure |

| Publication Type | Criterion Name |

|---|---|

| Academic Project Showcase | Clear Purpose and Objectives |

| Academic Project Showcase | Specific Objectives |

| Academic Project Showcase | Context Establishment |

| Academic Project Showcase | Methodology Explanation |

| Academic Project Showcase | Solution Approach and Design Decisions |

| Academic Project Showcase | Evaluation Framework |

| Academic Project Showcase | Dataset Sources & Collection |

| Academic Project Showcase | Dataset Description |

| Academic Project Showcase | Dataset Processing Methodology |

| Academic Project Showcase | Implementation Details |

| Academic Project Showcase | Tools, Frameworks, & Services |

| Academic Project Showcase | Performance Metrics Analysis |

| Academic Project Showcase | Comparative Analysis |

| Academic Project Showcase | Key Results |

| Academic Project Showcase | Results Interpretation |

| Academic Project Showcase | Constraints, Boundaries, and Limitations |

| Academic Project Showcase | Key Findings |

| Academic Project Showcase | Future Directions |

| Academic Project Showcase | Purpose-Aligned Topic Coverage |

| Academic Project Showcase | Appropriate Technical Depth |

| Academic Project Showcase | Code Usage Appropriateness |

| Academic Project Showcase | Code Clarity and Presentation |

| Academic Project Showcase | Code Explanation Quality |

| Publication Type | Criterion Name |

|---|---|

| Applied Project Showcase | Clear Purpose and Objectives |

| Applied Project Showcase | Specific Objectives |

| Applied Project Showcase | Current State Gap Identification |

| Applied Project Showcase | Context Establishment |

| Applied Project Showcase | Methodology Explanation |

| Applied Project Showcase | Solution Approach and Design Decisions |

| Applied Project Showcase | Evaluation Framework |

| Applied Project Showcase | Dataset Sources & Collection |

| Applied Project Showcase | Dataset Description |

| Applied Project Showcase | Dataset Processing Methodology |

| Applied Project Showcase | Implementation Details |

| Applied Project Showcase | Deployment Considerations |

| Applied Project Showcase | Tools, Frameworks, & Services |

| Applied Project Showcase | Implementation Considerations |

| Applied Project Showcase | Monitoring and Maintenance Considerations |

| Applied Project Showcase | Performance Metrics Analysis |

| Applied Project Showcase | Comparative Analysis |

| Applied Project Showcase | Key Results |

| Applied Project Showcase | Results Interpretation |

| Applied Project Showcase | Constraints, Boundaries, and Limitations |

| Applied Project Showcase | Key Findings |

| Applied Project Showcase | Significance and Implications of Work |

| Applied Project Showcase | Future Directions |

| Applied Project Showcase | Advancement of Knowledge or Practice |

| Applied Project Showcase | Purpose-Aligned Topic Coverage |

| Applied Project Showcase | Appropriate Technical Depth |

| Applied Project Showcase | Code Usage Appropriateness |

| Applied Project Showcase | Code Clarity and Presentation |

| Applied Project Showcase | Code Explanation Quality |

| Applied Project Showcase | Industry Insights |

| Applied Project Showcase | Technical Progression |

| Applied Project Showcase | Scientific Clarity |

| Applied Project Showcase | Source Credibility |

| Applied Project Showcase | Uncommon Insights |

| Publication Type | Criterion Name |

|---|---|

| Case Study | Clear Purpose and Objectives |

| Case Study | Specific Objectives |

| Case Study | Problem Definition |

| Case Study | Current State Gap Identification |

| Case Study | Context Establishment |

| Case Study | Methodology Explanation |

| Case Study | Dataset Sources & Collection |

| Case Study | Implementation Details |

| Case Study | Performance Metrics Analysis |

| Case Study | Key Results |

| Case Study | Results Interpretation |

| Case Study | Key Findings |

| Case Study | Solution Impact Assessment |

| Case Study | Significance and Implications of Work |

| Case Study | Uncommon Insights |

| Publication Type | Criterion Name |

|---|---|

| Industry Product Showcase | Clear Purpose and Objectives |

| Industry Product Showcase | Target Audience Definition |

| Industry Product Showcase | Clear Prerequisites and Requirements |

| Industry Product Showcase | Problem Definition |

| Industry Product Showcase | Current State Gap Identification |

| Industry Product Showcase | Context Establishment |

| Industry Product Showcase | Deployment Considerations |

| Industry Product Showcase | Tools, Frameworks, & Services |

| Industry Product Showcase | Implementation Considerations |

| Industry Product Showcase | Constraints, Boundaries, and Limitations |

| Industry Product Showcase | Significance and Implications of Work |

| Industry Product Showcase | Features and Benefits Analysis |

| Industry Product Showcase | Competitive Differentiation |

| Industry Product Showcase | Originality of Work |

| Industry Product Showcase | Innovation in Methods/Approaches |

| Industry Product Showcase | Advancement of Knowledge or Practice |

| Industry Product Showcase | Real-World Applications |

| Industry Product Showcase | Technical Asset Access Links |

| Industry Product Showcase | Installation and Usage Instructions |

| Industry Product Showcase | Performance Characteristics and Requirements |

| Industry Product Showcase | Maintenance and Support Status |

| Industry Product Showcase | Access and Availability Status |

| Industry Product Showcase | License and Usage Rights of the Technical Asset |

| Industry Product Showcase | Contact Information of Asset Creators |

| Publication Type | Criterion Name |

|---|---|

| Solution Implementation Guide | Clear Purpose and Objectives |

| Solution Implementation Guide | Specific Objectives |

| Solution Implementation Guide | Intended Audience/Use Case |

| Solution Implementation Guide | Problem Definition |

| Solution Implementation Guide | Current State Gap Identification |

| Solution Implementation Guide | Context Establishment |

| Solution Implementation Guide | Clear Prerequisites and Requirements |

| Solution Implementation Guide | Step-by-Step Guidance Quality |

| Solution Implementation Guide | Data Requirements Specification |

| Solution Implementation Guide | Deployment Considerations |

| Solution Implementation Guide | Tools, Frameworks, & Services |

| Solution Implementation Guide | Implementation Considerations |

| Solution Implementation Guide | Significance and Implications of Work |

| Solution Implementation Guide | Features and Benefits Analysis |

| Solution Implementation Guide | Reader Next Steps |

| Solution Implementation Guide | Purpose-Aligned Topic Coverage |

| Solution Implementation Guide | Appropriate Technical Depth |

| Solution Implementation Guide | Code Usage Appropriateness |

| Solution Implementation Guide | Code Clarity and Presentation |

| Solution Implementation Guide | Code Explanation Quality |

| Solution Implementation Guide | Real-World Applications |

| Solution Implementation Guide | Content Accessibility |

| Solution Implementation Guide | Technical Progression |

| Solution Implementation Guide | Scientific Clarity |

| Solution Implementation Guide | Source Credibility |

| Solution Implementation Guide | Uncommon Insights |

| Publication Type | Criterion Name |

|---|---|

| Technical Deep-Dive | Clear Purpose and Objectives |

| Technical Deep-Dive | Specific Objectives |

| Technical Deep-Dive | Intended Audience/Use Case |

| Technical Deep-Dive | Clear Prerequisites and Requirements |

| Technical Deep-Dive | Current State Gap Identification |

| Technical Deep-Dive | Context Establishment |

| Technical Deep-Dive | Methodology Explanation |

| Technical Deep-Dive | Assumptions Stated |

| Technical Deep-Dive | Solution Approach and Design Decisions |

| Technical Deep-Dive | Implementation Considerations |

| Technical Deep-Dive | Key Results |

| Technical Deep-Dive | Results Interpretation |

| Technical Deep-Dive | Constraints, Boundaries, and Limitations |

| Technical Deep-Dive | Key Findings |

| Technical Deep-Dive | Significance and Implications of Work |

| Technical Deep-Dive | Reader Next Steps |

| Technical Deep-Dive | Purpose-Aligned Topic Coverage |

| Technical Deep-Dive | Appropriate Technical Depth |

| Technical Deep-Dive | Code Usage Appropriateness |

| Technical Deep-Dive | Code Clarity and Presentation |

| Technical Deep-Dive | Code Explanation Quality |

| Technical Deep-Dive | Real-World Applications |

| Technical Deep-Dive | Supporting Examples |

| Technical Deep-Dive | Content Accessibility |

| Technical Deep-Dive | Technical Progression |

| Technical Deep-Dive | Scientific Clarity |

| Publication Type | Criterion Name |

|---|---|

| Technical Guide | Clear Purpose and Objectives |

| Technical Guide | Specific Objectives |

| Technical Guide | Intended Audience/Use Case |

| Technical Guide | Clear Prerequisites and Requirements |

| Technical Guide | Context Establishment |

| Technical Guide | Methodology Explanation |

| Technical Guide | Implementation Considerations |

| Technical Guide | Constraints, Boundaries, and Limitations |

| Technical Guide | Key Findings |

| Technical Guide | Significance and Implications of Work |

| Technical Guide | Reader Next Steps |

| Technical Guide | Purpose-Aligned Topic Coverage |

| Technical Guide | Appropriate Technical Depth |

| Technical Guide | Code Usage Appropriateness |

| Technical Guide | Code Clarity and Presentation |

| Technical Guide | Code Explanation Quality |

| Technical Guide | Real-World Applications |

| Technical Guide | Supporting Examples |

| Technical Guide | Content Accessibility |

| Technical Guide | Technical Progression |

| Technical Guide | Scientific Clarity |

| Publication Type | Criterion Name |

|---|---|

| Tutorial | Clear Purpose and Objectives |

| Tutorial | Specific Objectives |

| Tutorial | Intended Audience/Use Case |

| Tutorial | Context Establishment |

| Tutorial | Clear Prerequisites and Requirements |

| Tutorial | Step-by-Step Guidance Quality |

| Tutorial | Data Requirements Specification |

| Tutorial | Constraints, Boundaries, and Limitations |

| Tutorial | Reader Next Steps |

| Tutorial | Purpose-Aligned Topic Coverage |

| Tutorial | Appropriate Technical Depth |

| Tutorial | Code Usage Appropriateness |

| Tutorial | Code Clarity and Presentation |

| Tutorial | Code Explanation Quality |

| Tutorial | Real-World Applications |

| Tutorial | Supporting Examples |

| Tutorial | Content Accessibility |

| Tutorial | Technical Progression |

| Tutorial | Scientific Clarity |

| Tutorial | Source Credibility |

| Tutorial | Uncommon Insights |

| Publication Type | Criterion Name |

|---|---|

| Blog | Clear Purpose and Objectives |

| Blog | Context Establishment |

| Blog | Purpose-Aligned Topic Coverage |

| Blog | Appropriate Technical Depth |

| Blog | Real-World Applications |

| Blog | Supporting Examples |

| Blog | Industry Insights |

| Blog | Success/Failure Stories |

| Blog | Content Accessibility |

| Blog | Source Credibility |

| Blog | Reader Next Steps |

| Blog | Uncommon Insights |
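
One way to put these per-type lists to work is as a self-review checklist before submitting. The sketch below is a minimal, hypothetical helper and is not part of the Ready Tensor platform. `BLOG_CRITERIA` copies the criterion names from the Blog table above, while `missing_criteria` and `draft_addressed` are illustrative names introduced here.

```python
# Hypothetical self-review helper; not a Ready Tensor API.
# Criterion names are copied from the Blog table above.
BLOG_CRITERIA = [
    "Clear Purpose and Objectives",
    "Context Establishment",
    "Purpose-Aligned Topic Coverage",
    "Appropriate Technical Depth",
    "Real-World Applications",
    "Supporting Examples",
    "Industry Insights",
    "Success/Failure Stories",
    "Content Accessibility",
    "Source Credibility",
    "Reader Next Steps",
    "Uncommon Insights",
]


def missing_criteria(required, addressed):
    """Return the required criteria not yet addressed, in table order."""
    addressed_set = set(addressed)
    return [name for name in required if name not in addressed_set]


# Example: a draft blog post that so far covers only three criteria
# (an invented draft, used purely for illustration).
draft_addressed = [
    "Clear Purpose and Objectives",
    "Context Establishment",
    "Supporting Examples",
]

todo = missing_criteria(BLOG_CRITERIA, draft_addressed)
print(f"{len(todo)} of {len(BLOG_CRITERIA)} criteria still to address:")
for name in todo:
    print(f"- {name}")
```

The same function works for any other publication type if you swap in the criterion names from its table. Keeping the list in table order makes the output easy to compare against the appendix.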