Whether you are a beginner eager to build your portfolio or a seasoned pro looking to collaborate on meaningful projects, open-source AI/ML offers endless opportunities to learn, grow, and make an impact.

In this article, we will introduce you to curated open-source projects; from emerging frameworks like Swarmauri to industry staples like PyTorch and Hugging Face. You will learn how to contribute effectively, avoid common pitfalls, and turn your code into a career-building asset. But first, let’s tackle the basics.

Open-source projects are projects whose source code is publicly available for anyone to view, use, modify, and contribute to. These projects are usually maintained by a community of developers who collaborate to improve the software/framework/library, fix bugs, add new features, and ensure its overall stability.

In Al/ML, open-source projects play a huge role in innovation. Many of the tools and frameworks we use daily, like TensorFlow, PyTorch, LangChain, Scikit-Learn, etc., are open source, meaning anyone can contribute to their development.

Contributing to open-source projects is one of the most rewarding ways to grow as a developer, data scientist, or AI/ML professional. Whether you're just starting or have years of experience, getting involved in open source offers a unique set of benefits that can help you build your skills, expand your network, and elevate your career. Here’s why you should consider contributing:

Open-source projects provide a platform to work on real-world problems and cutting-edge technologies. Unlike personal projects or coursework, contributing to open source exposes you to production-level code, collaborative workflows, and industry-standard tools. This hands-on experience is invaluable and can set you apart in the job market.

Whether it’s debugging, writing documentation, or optimizing algorithms, open-source contributions allow you to hone your technical skills in a practical setting. You will also get to work with tools and frameworks that are widely used in the industry, such as TensorFlow, PyTorch, or Hugging Face, giving you a competitive edge. From my personal experience, you will learn Git and GitHub very well when you start contributing to open source. I learnt Git and GitHub the best when contributing to open-source projects

Your contributions to open-source projects are public and visible to everyone. This means you can showcase your work on platforms like GitHub, LinkedIn, or your portfolio. Potential employers often look at open-source contributions as proof of your skills, initiative, and ability to collaborate with others.

Open-source projects are often maintained by some of the brightest minds in the field. By contributing, you get the opportunity to learn from experienced developers, receive feedback on your code, and understand best practices in software development and machine learning.

Many of the tools and frameworks we use daily, like Scikit-learn, Jupyter Notebooks, or LangChain, are all open source. Contributing to these projects is a way to give back to the community that has built the tools you rely on. It’s a chance to support innovation and make these resources better for everyone.

Open-source communities are global and diverse. By contributing, you will connect with like-minded individuals, collaborate with professionals from different backgrounds, and build relationships that can lead to mentorship, job opportunities, or even lifelong friendships.

Seeing your code merged into a popular project or receiving positive feedback from maintainers can be incredibly motivating. It’s a tangible way to measure your progress and gain confidence in your abilities as a developer or data scientist.

Open-source projects are often at the forefront of innovation. By contributing, you will stay updated on the latest trends, tools, and techniques in AI/ML. This knowledge can help you stay relevant in a fast-evolving field.

You don’t need to be an expert to contribute to open source. Many projects have beginner-friendly issues labelled as good-first-issue or help-wanted. These are great starting points for newcomers to get their feet wet and gradually build their confidence.

Your contributions, no matter how small, can have a significant impact. Whether it’s fixing a bug, improving documentation, or adding a new feature, your work can help thousands of users and developers around the world.

If you already have an open-source project in mind and just want to learn how to contribute effectively, skip to the end of the article. Otherwise, stick around as we’ve got plenty of great options to explore!

In this article, we will be categorizing these open-source projects based on their focus areas, making it easier. for you to find the ones that match your interests and skill level. We will cover a mix of well-established, stable projects and emerging projects that are worth keeping an eye on. This way, whether you're looking for something reliable to contribute to or want to get involved in the next big thing, you will have plenty of options to choose from.

Let's jump right into it

PyTorch

PyTorch is a flexible deep learning framework developed by Meta AI in 2016, which is renowned for its dynamic computation graph and Python-first design. It dominates research workflows for tasks like computer vision, NLP, and reinforcement learning.

🔗 GitHub | Docs

TensorFlow

TensorFlow is Google’s flagship machine learning framework which was released in 2015 and optimized for production-grade deployments. TensorFlow powers industrial-scale AI applications.

🔗 GitHub | Docs

Scikit-Learn

This is the go-to Python library for classical/traditional machine learning (e.g., regression, clustering, SVMs). Released in 2007, it offers simple APIs for data preprocessing, model training, and evaluation.

🔗 GitHub | Docs

JAX

Jax is a high-performance numerical computing library from Google (2018), combining NumPy-like syntax with automatic differentiation and GPU/TPU acceleration. Key for cutting-edge research in physics, optimization, and ML.

🔗 GitHub | Docs

XGBoost

XGBoost is a scalable gradient-boosting library (2014) for structured/tabular data. It dominates Kaggle competitions and enterprise ML pipelines with its speed, accuracy, and support for distributed training.

🔗 GitHub | Docs

MLflow

A platform for managing the ML lifecycle (2018), including experiment tracking, model packaging, and deployment. Critical for MLOps and collaborative workflows.

🔗 GitHub | Docs

LangChain

This is one of the first and most popular open-source frameworks designed to simplify the development of LLM-powered applications. Developed in 2022, it provides tools for chaining LLM calls, integrating with external data sources, and building AI-driven applications like chatbots and autonomous agents.

🔗 GitHub | Docs

LangGraph

Built on top of LangChain, this framework is designed for creating stateful, multi-agent, and graph-based workflows with LLMs. Developed in 2023, it excels in constructing complex AI applications that require dynamic task coordination.

🔗 GitHub | Docs

CrewAI

An open-source framework for building and managing multi-agent AI workflows, CrewAI enables developers to design teams of AI agents that collaborate efficiently. Developed in 2023, it is ideal for applications that benefit from role-based, coordinated task execution.

🔗 GitHub | Docs

LlamaIndex

This data framework helps LLMs connect with external data sources by providing tools for indexing, retrieving, and querying information. Developed in 2022, it streamlines the creation of knowledge-driven AI applications.

🔗 GitHub | Docs

Swarmauri

Swarmauri is still in its early stages as an open-source tool for building, testing, and deploying AI-powered applications and agents. With a low adoption rate so far, it’s a great time to contribute and help shape its future. Swarmauri was first released in 2024.

🔗 GitHub | Docs

Pydantic AI

Developed by the creators of Pydantic, Pydantic AI is a Python-based framework designed to simplify the development of production-grade applications powered by Generative AI. Released in 2024, it provides robust tools for building, validating, and deploying AI agents, ensuring reliability and scalability in real-world applications. While still in its early stages, Pydantic AI is rapidly gaining traction for its focus on developer productivity and seamless integration with existing AI workflows.

🔗 GitHub | Docs

AgentGPT

An emerging framework that empowers developers to create fully autonomous agents capable of multi-step reasoning and task execution. Released in 2023, AgentGPT is gaining attention for its simplicity and robust design in building interactive AI systems.

🔗 GitHub | Docs

SmolAgents

A lightweight, modular agent framework from HuggingFace, SmolAgents is designed for rapid prototyping and deployment of specialized AI agents. Developed in 2024, it offers an intuitive API and seamless integration with HuggingFace’s ecosystem.

🔗 GitHub | Docs

OpenAGI

An ambitious open-source platform aiming to push toward Artificial General Intelligence (AGI) by integrating LLMs with domain-specific expert models. Developed in 2023, OpenAGI leverages reinforcement learning from task feedback to tackle complex, multi-step real-world tasks.

🔗 GitHub

Ollama

This is an open-source tool that allows you to download and run large language models locally on your computer. Developed to optimize both performance and data privacy, Ollama provides a simple, user-friendly interface for managing multiple LLMs on your hardware. By eliminating the need for cloud-based processing, it offers faster response times and greater control over model configurations, making it an excellent choice for developers and researchers looking to experiment with and deploy AI models locally.

🔗 GitHub

HuggingFace Transformers

This is an open-source Python library that simplifies working with transformer-based models across a wide range of tasks, from natural language processing to audio and video processing. It provides seamless access to a vast collection of pre-trained models via the Hugging Face Model Hub, making it the go-to solution if you want to quickly implement state-of-the-art AI without building models from scratch.

🔗 GitHub | Docs

spaCy

spaCy is a fast, production-ready NLP library for Python, released in 2015. It excels in tasks like tokenization, named entity recognition (NER), and dependency parsing, with pre-trained models for multiple languages.

🔗 GitHub | Docs

NLTK (Natural Language Toolkit)

NLTK, released in 2001, is a comprehensive NLP library designed for education and research. It offers tools for tokenization, stemming, lemmatization, and parsing, along with a vast collection of linguistic resources.

🔗 GitHub | Docs

OpenCV (Open Source Computer Vision Library)

OpenCV is one of the most widely used libraries for computer vision tasks. It provides tools for image processing, object detection, facial recognition, and more. Developed in 2000, it has become a cornerstone for both academic research and industrial applications.

🔗 GitHub | Docs

YOLO (You Only Look Once)

YOLO is a state-of-the-art real-time object detection system. Known for its speed and accuracy, YOLO has gone through several iterations, with YOLO11 being the latest version as of today. It is widely used in applications like surveillance, autonomous vehicles, and robotics.

🔗 GitHub | Docs

Stable Diffusion

Stable Diffusion is a generative AI model for creating high-quality images from text prompts. Released in 2022, it has revolutionized the field of AI art and image generation. The model is open-source, allowing developers to fine-tune and deploy it for various creative and commercial applications.

🔗 GitHub | Docs

Detectron2

Developed by Facebook AI Research (FAIR), Detectron2 is a powerful framework for object detection, segmentation, and other vision tasks. It is built on PyTorch and offers pre-trained models for quick deployment. Released in 2019, it is widely used in research and industry.

🔗 GitHub | Docs

MediaPipe

Developed by Google, MediaPipe is a framework for building multimodal (e.g., video, audio, and sensor data) applications. It includes pre-built solutions for face detection, hand tracking, pose estimation, and more. Released in 2019, it is widely used for real-time vision applications.

🔗 GitHub | Docs

MMDetection

MMDetection is an open-source object detection toolbox based on PyTorch. It supports a wide range of models, including Faster R-CNN, Mask R-CNN, and YOLO. Developed in 2018, it is part of the OpenMMLab project and is widely used in academic and industrial research.

🔗 GitHub | Docs

Segment Anything Model (SAM)

Developed by Meta AI, SAM is a groundbreaking model for image segmentation. Released in 2023, it can segment any object in an image with minimal input, making it highly versatile for applications in medical imaging, autonomous driving, and more.

🔗 GitHub | Docs

Fast.ai

Fast.ai is a deep learning library that simplifies training and deploying computer vision models. It includes pre-trained models and high-level APIs for tasks like image classification and object detection. Released in 2016, it is widely used for educational purposes and rapid prototyping.

🔗 GitHub | Docs

OpenPose

OpenPose is a real-time multi-person keypoint detection library. It can detect human poses, hands, and facial keypoints in images and videos. Released in 2017, it is widely used in applications like fitness tracking and animation.

🔗 GitHub | Docs

Kubeflow

Kubeflow is the go-to open-source platform for deploying machine learning workflows on Kubernetes. Launched in 2017, it simplifies scaling ML pipelines; from data preprocessing to model serving in cloud-native environments.

🔗 GitHub | Docs

BentoML

BentoML streamlines deploying ML models into production with a unified framework for packaging, serving, and monitoring. Released in 2019, it supports all major frameworks (PyTorch, TensorFlow, etc.) and integrates seamlessly with Kubernetes, AWS Lambda, or your custom infrastructure.

🔗 GitHub | Docs

Seldon Core

Seldon Core is a production-grade platform for deploying ML models at scale. Launched in 2017, it converts models into REST/gRPC microservices, handles A/B testing, and monitors performance. It is perfect for enterprises needing reliability and governance in their AI systems.

🔗 GitHub | Docs

Feast

Feast (Feature Store) is an open-source tool for managing and serving ML features in production. Released in 2019, it bridges the gap between data engineering and ML teams, ensuring consistent feature pipelines for training and real-time inference.

🔗 GitHub | Docs

Cortex

Cortex automates deploying ML models as scalable APIs on AWS, GCP, or Azure. Launched in 2020, it handles everything from autoscaling to monitoring, letting you focus on building models instead of infrastructure.

🔗 GitHub | Docs

Pandas

Pandas is the go-to Python library for data manipulation and analysis. Released in 2008, it simplifies tasks like cleaning, transforming, and analyzing structured data with its intuitive DataFrame API.

🔗 GitHub | Docs

NumPy

The backbone of numerical computing in Python, NumPy (2006) powers everything from data science to deep learning. Its ndarray object handles multi-dimensional arrays and matrices, making it essential for efficient ML workloads.

🔗 GitHub | Docs

Matplotlib

The granddaddy of Python visualization (2003), Matplotlib turns raw data into publication-quality plots. Pair it with Pandas for quick exploratory data analysis (EDA).

🔗 GitHub | Docs

Seaborn

Seaborn (2012) supercharges Matplotlib with sleek statistical visualizations. Perfect for heatmaps, distribution plots, and correlation matrices.

🔗 GitHub | Docs

Plotly

Plotly (2013) creates interactive, web-ready visualizations. Build dashboards, 3D plots, or geographic maps, all with Python or JavaScript.

🔗 GitHub | Docs

Contributing to open-source projects might feel intimidating at first, especially when you’re staring at a massive codebase. But don’t worry. Once you break it down into small steps, it becomes surprisingly straightforward. Let’s walk through the process together.

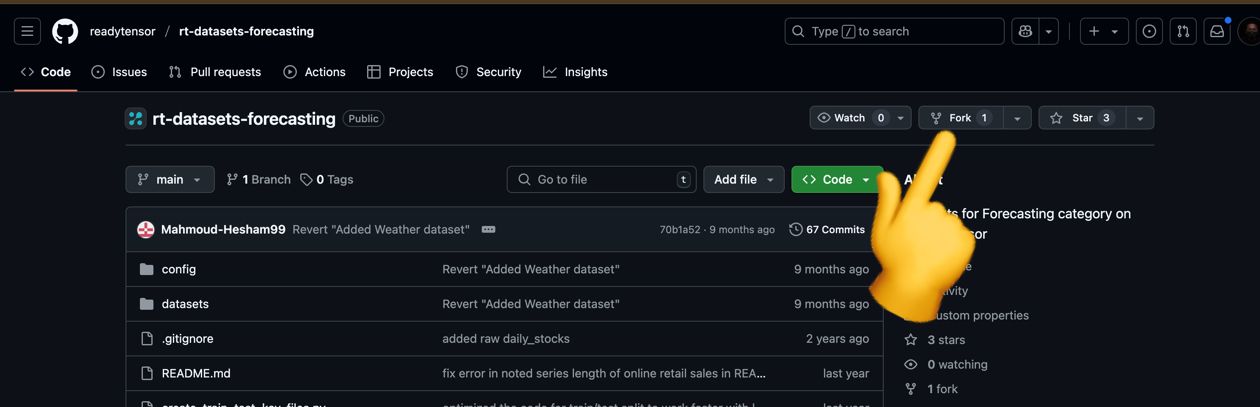

Start by navigating to the project’s GitHub repository. For example, if you want to contribute to Hugging Face Transformers, search for “Hugging Face Transformers GitHub” or use the direct link from their documentation.

🔍 Pro tip: Most projects link their GitHub repo in their official documentation or website footer. Look for a tiny 🐙 or "View on GitHub" button!

CONTRIBUTING.md FileEvery well-maintained project has a CONTRIBUTING.md file (sometimes named CONTRIBUTORS.md or GUIDELINES.md). This document is your cheat sheet because it explains exactly how to contribute, including:

For example, here’s what you will see in Swarmauri’s CONTRIBUTING.md:

How to Contribute 1. Fork the Repository: - Navigate to the repository and fork it to your GitHub account. 2. Star and Watch: - Star the repo and watch for updates to stay informed. 3. Clone Your Fork: - Clone your fork to your local machine: git clone https://github.com/your-username/swarmauri-sdk.git 4. Create a New Branch: - Create a feature branch to work on: git checkout -b feature/your-feature-name 5. Make Changes: - Implement your changes. Write meaningful and clear commit messages. - Stage and commit your changes: git add . git commit -m "Add a meaningful commit message" 6. Push to Your Fork: - Push your branch to your fork: git push origin feature/your-feature-name 7. Write Tests: - Ensure each new feature has an associated test file. - Tests should cover: a. Component Type: Verify the component is of the expected type. b. Resource Handling: Validate inputs/outputs and dependencies. c. Serialization: Ensure data is properly serialized and deserialized. d. Access Method: Test component accessibility within the system. e. Functionality: Confirm the feature meets the project requirements. 8. Create a Pull Request: - Once your changes are ready, create a pull request (PR) to merge your branch into the main repository. - Provide a detailed description, link to related issues, and request a review.

Don’t skip this step! Maintainers love contributors who follow their guidelines. Ignoring the rules could lead to your Pull Request (PR) being rejected, even if your code is perfect.

What If There’s No CONTRIBUTING.md?

Don’t panic. Many projects are still evolving, and documentation might lag. Here’s how to navigate this:

Check the Issues Tab:

Look for labels like good-first-issue or help-wanted as these are golden tickets for newcomers. For instance, AgentGPT doesn’t have a CONTRIBUTING.md yet, but its GitHub Issues are filled with tagged tasks, perfect for newcomers.

Join the Conversation:

Head to the project’s Discussions, Slack, or Discord (linked in the repo’s “About” section). A quick “How can I help?” post often gets maintainers excited to guide you.

Learn from Others:

Browse recent pull requests to see how contributors structured their code, wrote commit messages, or addressed feedback. Mimic their workflow to avoid rookie mistakes.

If all of the above fails, open an issue asking, “How can I contribute?” Most maintainers will gladly point you to the right direction. 👍

Time to roll up your sleeves. Let’s turn theory into action with a step-by-step walkthrough.

1. Fork the Repository

Forking creates your copy of the project on GitHub, allowing you to experiment without affecting the original codebase.

How to do it:

2. Clone Your Fork Locally

Clone the forked repo to your machine to start coding:

Open your terminal/command line and write the command below

git clone https://github.com/your-username/project-name.git cd project-name

Heads up: Use the SSH URL if you’ve set up SSH keys for GitHub (fewer password prompts).

3. Set Up the Development Environment

Most projects require dependencies and configurations. Check the CONTRIBUTING.md or README.md for setup instructions.

Typical workflow:

# Create a virtual environment (avoid dependency conflicts) python -m venv venv source venv/bin/activate # Install dependencies pip install -r requirements.txt # Run tests to confirm everything works pytest tests/

💡 Pro Tip: If the project uses Docker, run docker-compose up for a hassle-free setup.

4. Create a Feature Branch

Never work directly on the main branch. Create a new branch for your changes:

git checkout -b fix/typo-in-docs

🚨 Fun fact: Branch names like add-spaceship-emojis are more memorable (and fun) than patch-1.

5. Make Changes & Commit

Now, code away 🚀. Once done, commit your changes with a clear, concise message:

git add . git commit -m "Fix typo in quickstart guide"

💥 Golden rule: One logical change per commit. No “fixed stuff” messages.

6. Write Tests (If Required)

Many projects require tests for new features. For example, Swarmauri mandates test coverage for every component.

# Example test for a new feature def test_new_feature(): result = my_function(input="test") assert result == "expected_output"

📉 Pain avoided: Debugging failing tests now beats cryptic errors in code review later.

7. Push to Your Fork

Upload your branch to GitHub:

git push origin fix/typo-in-docs

8. Open a Pull Request (PR)

🎯 Pro move: Tag a maintainer (e.g., “@janedoe PTAL”) if the project’s guidelines allow it.

9. Respond to Feedback

Maintainers might request changes. Update your code, push to the same branch, and the PR auto-updates!

git add . git commit -m "Address review feedback" git push origin fix/typo-in-docs

After the PR is Merged

Your first PR might feel like climbing Everest, but soon you will be sprinting up these hills.

Solve Meaningful Problems:

Focus on high-impact issues (bugs, feature requests) that users care about. Quality > quantity.

Communicate Clearly:

Write detailed PR descriptions, link related issues, and respond promptly to feedback. If possible, use screenshots/GIFs to explain UI changes.

Document Everything:

Fix typos, improve tutorials, or add code comments. Great docs are rare; your work will get noticed.

Share Your Work:

Post about contributions on LinkedIn/Twitter, tag the project, and link to your PR. Example:

“Just added [feature] to @PyTorch! Learned [X], check out the PR 👇”

Help Others:

Answer questions in Discussions/forums. Mentoring newcomers builds trust and visibility.

Stay Consistent:

Regular contributions > one-off PRs. Even small fixes keep you on maintainers’ radar.

Contributing to open-source AI/ML isn’t just about code. It’s about learning, collaborating, and shaping the future of technology. Whether you’re fixing a typo in PyTorch’s docs or building a new feature for LangChain, every contribution matters.

Your journey starts now:

good-first-issue, and submit that PR.The open-source world thrives on curiosity and courage.

P.S. Tag us when you land your first contribution, we would love to celebrate with you🤝